Projects:2018s1-122 NI Autonomous Robotics Competition

Revision as of 14:30, 18 October 2018


Supervisors

Dr Hong Gunn Chew

Dr Braden Phillips

Honours Students

Alexey Havrilenko

Bradley Thompson

Joseph Lawrie

Michael Prendergast

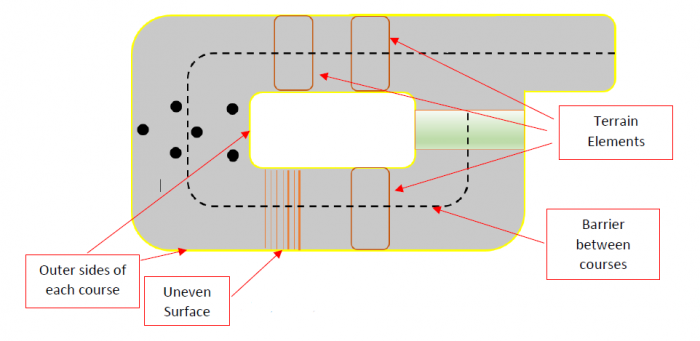

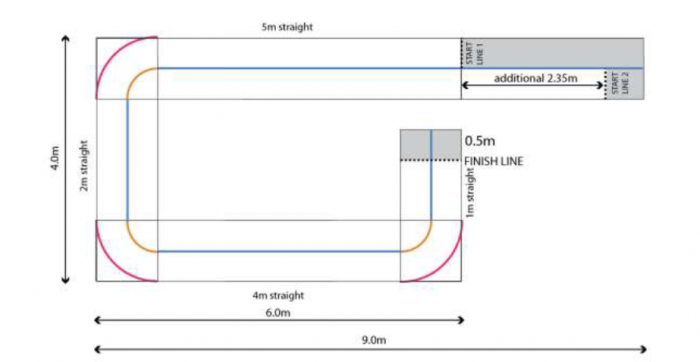

Project Introduction

Each year, National Instruments (NI) sponsors a competition in which students showcase their robotics capabilities by building autonomous robots with one of NI's reconfigurable processor and FPGA products. The theme of the 2018 competition was 'Fast Track to the Future'. Robots had to perform tasks on a track that included hazardous terrain and unforeseen obstacles, which they had to avoid autonomously. This project investigated the use of the NI MyRIO-1900 platform to achieve autonomous localisation, path planning, environmental awareness and autonomous decision making. The live final took place in September 2018, with university teams from Australia, New Zealand and Asia competing for the grand prize.

Background

Autonomous Transportation

Removing the human driver from behind the wheel essentially eliminates crashes caused by human error. Because autonomous systems are designed and rigorously tested by experienced automation engineers, passengers in autonomous vehicles will be able to use their commute time for work or leisure. Communication between autonomous vehicles can also make traffic flow more efficient and reduce overall commute times. Long-haul freight efficiency can increase as well, since autonomous vehicles experience neither fatigue nor hunger and can therefore travel further in less time.

Aims and Objectives

Competition Milestones and Requirements

To qualify for the live competition, milestones set at regular intervals by NI were met. These milestones included completion of training courses, a project proposal, obstacle avoidance, navigation and localisation.

Extended Functionality

The team also decided to implement additional functionality to showcase at the project exhibition: lane following using the RGB camera, and manual control of the robot with a joystick. In both modes, autonomous obstacle avoidance remains active.

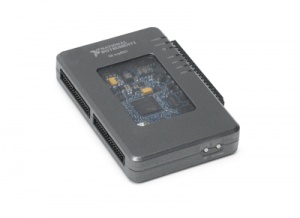

Processing Platform

NI MyRIO-1900 Real-Time Embedded Evaluation Board

- Xilinx Zynq 7010 FPGA: parallel sensor data acquisition and webcam image processing

- ARM microprocessor: computation, code execution and decision making

NI LabVIEW 2017 Programming Environment

- System-design platform and graphical development environment provided by NI

- Expansive library of MyRIO toolkits, including FPGA, sensor I/O and data processing tools

- Real-time simulation of virtual instruments to predict real-world robot behaviour

- Graphical visualisation of code execution

The MyRIO processor and FPGA were programmed using LabVIEW 2017, a graphical programming environment. Additional LabVIEW modules, such as the 'Vision Development Module' and the 'Control Design and Simulation Module', were used to process the received sensor information.

Robot Construction

Robot Frame

- Laser-cut acrylic chassis designed in Autodesk AutoCAD

- Curved aluminium sensor mounting bar

Environment Sensors

The robot needed a variety of sensors so that it could "see" the track, obstacles, boundaries and the surrounding environment. These sensors also allowed it to calculate its own position and the locations of surrounding elements.

The sensors used were:

- Image sensor: Logitech C922 webcam

- Range sensors: LIDAR (VL53L0X time-of-flight sensors)

- Independent DC motor encoders

- Independent DC motor encoders

Image Sensor

A Logitech C922 webcam was used for image and video acquisition. The webcam can record in full HD and has a 78-degree field of view. It was aimed at the floor in front of the robot chassis, with the top of the image ending near the horizon.

LIDAR Time of Flight Sensor Array

Eight independent LIDAR time-of-flight sensors were housed in a curved aluminium sensor mounting bar. Each sensor measured the time taken for emitted laser light to reach a surface and return. From this the robot determined the range of free space in front of it and to its sides.
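The range follows from the round-trip time. The light travels to the surface and back, so the one-way distance is half the total path: d = c·t/2. The sketch below illustrates only that principle. As far as we know, the VL53L0X performs this conversion on-chip and reports a distance directly. The function name `tof_range_m` is hypothetical.

```python
# Speed of light (vacuum value; the difference in air is negligible here), m/s.
SPEED_OF_LIGHT = 299_792_458.0

def tof_range_m(round_trip_s: float) -> float:
    """Convert a round-trip time of flight (seconds) to a one-way range (metres).

    The laser light travels to the surface and back, so the distance to the
    surface is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("time of flight cannot be negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0

# A round trip of about 6.67 ns corresponds to roughly 1 m of free space.
print(round(tof_range_m(6.67e-9), 3))  # prints 1.0
```

The very short times involved (nanoseconds per metre) explain why this timing is done in dedicated sensor hardware rather than in software.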

Independent DC Motor Encoders

Four independent DC motors were used for movement and steering. Each motor was fitted with an encoder that reported its rotation, and these encoders were used to achieve accurate localisation of the robot.
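This section does not describe the drive geometry, so the following dead-reckoning sketch rests on an assumption. It treats the robot as a skid-steer vehicle and averages the two motors on each side into one wheel of a differential drive. The encoder resolution (1440 ticks per revolution), wheel radius and track width are hypothetical placeholders, not the robot's measured values.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    x: float = 0.0      # metres, relative to the start position
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, wrapped to [-pi, pi)

def update_pose(pose, d_ticks_left, d_ticks_right,
                ticks_per_rev=1440, wheel_radius=0.05, track_width=0.30):
    """Dead-reckoning update for an ASSUMED skid-steer (differential) drive.

    d_ticks_left / d_ticks_right are the encoder tick changes since the last
    update, averaged over the motors on each side. All geometry parameters
    are hypothetical defaults.
    """
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = d_ticks_left * metres_per_tick
    d_right = d_ticks_right * metres_per_tick
    d_centre = (d_left + d_right) / 2.0          # distance travelled by the centre
    d_theta = (d_right - d_left) / track_width   # change in heading
    # Integrate along the mid-point heading, which is more accurate on arcs.
    mid_theta = pose.theta + d_theta / 2.0
    return Pose(
        x=pose.x + d_centre * math.cos(mid_theta),
        y=pose.y + d_centre * math.sin(mid_theta),
        theta=(pose.theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi,
    )

# One full wheel revolution on both sides moves the robot straight ahead.
print(update_pose(Pose(), 1440, 1440))
```

Encoder odometry accumulates error from wheel slip, which is one reason filters such as an EKF are often layered on top of it.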

RGB Image Processing

The team decided to use the colour images for the following purposes:

- Identify boundaries on the floor that are marked with coloured tape

- Identify wall boundaries

The competition track boundaries were marked on the floor with 75 mm wide yellow tape. The RGB images were processed to extract useful information such as these boundaries. Before any image processing was attempted on the myRIO, the pipeline was developed using Matlab and its Image Processing Toolbox.
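To illustrate isolating the tape by colour, the sketch below thresholds pixels in HSV space using Python's standard `colorsys` module. In Matlab the equivalent conversion is `rgb2hsv`. The hue, saturation and value limits are hypothetical starting values for yellow tape and would need tuning on captured images under the competition lighting.

```python
import colorsys

# Hypothetical thresholds for yellow tape. colorsys returns hue in [0, 1),
# so yellow (60 degrees) sits at about 0.167.
HUE_RANGE = (0.11, 0.20)
MIN_SATURATION = 0.4
MIN_VALUE = 0.3

def is_tape_pixel(r, g, b):
    """True if an 8-bit RGB pixel lies inside the yellow HSV window."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return HUE_RANGE[0] <= h <= HUE_RANGE[1] and s >= MIN_SATURATION and v >= MIN_VALUE

def colour_mask(image):
    """Binary mask (rows of 0/1) for an image given as rows of (r, g, b) tuples."""
    return [[int(is_tape_pixel(*px)) for px in row] for row in image]

# Tape-coloured, grey, blue and pure yellow pixels.
print(colour_mask([[(230, 200, 40), (128, 128, 128)], [(20, 40, 200), (255, 255, 0)]]))
# prints [[1, 0], [0, 1]]
```

Thresholding hue rather than raw RGB values makes the mask less sensitive to changes in brightness, because brightness is carried mostly by the value channel.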

Hough Transform

Lane following?
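The Hough transform is a standard way to compute the equations of lines through edge pixels, which is the final step of the Matlab pipeline below. The following is a minimal Python sketch of the voting procedure. The angular resolution, rho bin size and the helper name `hough_lines` are illustrative assumptions. A practical version, such as Matlab's `hough`, `houghpeaks` and `houghlines`, would also suppress neighbouring peaks.

```python
import math
from collections import Counter

def hough_lines(edge_points, theta_steps=180, rho_res=1.0, top_n=1):
    """Return the top_n (rho, theta, votes) lines through the given edge pixels.

    Lines use the normal form rho = x*cos(theta) + y*sin(theta), theta in [0, pi).
    """
    thetas = [math.pi * i / theta_steps for i in range(theta_steps)]
    votes = Counter()
    for x, y in edge_points:
        # Every edge pixel votes for all candidate lines that pass through it.
        for t_idx, theta in enumerate(thetas):
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_res), t_idx)] += 1  # quantise rho into bins
    return [(r_bin * rho_res, thetas[t_idx], n)
            for (r_bin, t_idx), n in votes.most_common(top_n)]

# Edge pixels along the vertical line x = 10 (image coordinates).
print(hough_lines([(10, y) for y in range(20)]))
```

For pixels on the vertical line x = 10, the strongest bin lies at rho of about 10 with theta near 0, or equivalently rho of about -10 with theta near pi. Neighbouring theta bins receive as many or nearly as many votes, which is why practical implementations apply peak suppression.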

EKF

Line following?

Determining Image Processing Pipeline in Matlab

An overview of the pipeline is as follows:

- Import/read captured RGB image

- Convert RGB (Red-Green-Blue) to HSV (Hue-Saturation-Value)

- The HSV representation makes it easy to isolate particular colours (hue range), select colour intensity (saturation range) and select brightness (value range)

- Produce a mask of the pixels matching the desired colour

- Erode the mask to remove small noise regions entirely

- Dilate the mask to restore the remaining regions to their original size

- Isolate the edges of the mask

- Calculate the equations of the lines that run through the edges
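Together, the erode-then-dilate steps above form a morphological opening. Small specks vanish under erosion and cannot grow back, while larger tape regions are restored to their original size. Below is a minimal pure-Python sketch on a 0/1 mask with an assumed 3x3 square structuring element, plus a simple edge-isolation step. In Matlab, `imerode` and `imdilate`, or `imopen`, serve the same purpose. All helper names here are hypothetical.

```python
def _neighbourhood(mask, r, c):
    """Yield the 3x3 neighbourhood of (r, c); pixels outside the image count as 0."""
    rows, cols = len(mask), len(mask[0])
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            yield mask[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0

def _apply(mask, rule):
    """Apply a per-pixel rule(mask, r, c) -> 0/1 across the whole mask."""
    return [[rule(mask, r, c) for c in range(len(mask[0]))] for r in range(len(mask))]

def erode(mask):
    # A pixel survives only if its entire 3x3 neighbourhood is set.
    return _apply(mask, lambda m, r, c: int(all(_neighbourhood(m, r, c))))

def dilate(mask):
    # A pixel becomes set if any pixel in its 3x3 neighbourhood is set.
    return _apply(mask, lambda m, r, c: int(any(_neighbourhood(m, r, c))))

def opening(mask):
    """Erode then dilate: removes specks smaller than the structuring element."""
    return dilate(erode(mask))

def edges(mask):
    # Keep set pixels that touch at least one unset pixel (the region boundary).
    return _apply(mask, lambda m, r, c: int(m[r][c] == 1 and not all(_neighbourhood(m, r, c))))
```

Any region narrower than the structuring element disappears during erosion. The element size therefore sets the smallest feature that survives, so it must stay smaller than the tape's width in pixels.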

The resulting line equations were converted into boundary locations relative to the robot. Path planning could then treat these locations as obstacles, so the robot avoided the tape boundaries just as it avoided physical obstacles.

Localisation

Motor encoders vs. EKF?

Object Detection and Avoidance

Vector Field Histogram

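The Vector Field Histogram method used the LIDAR range measurements to detect obstacles and choose a clear direction of travel.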
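A heavily simplified sketch of the Vector Field Histogram idea using the eight range readings is shown below. It builds an obstacle density per sensor sector, spreads each obstacle into neighbouring sectors and picks the free sector closest to a target bearing. The sensor bearings, maximum range and threshold are hypothetical. A full VFH also maintains a certainty grid and searches for sufficiently wide valleys.

```python
def vfh_steer(ranges_m, bearings_deg, target_deg, max_range_m=2.0, threshold=0.5):
    """Choose a steering bearing (degrees) with a simplified Vector Field Histogram.

    ranges_m[i] is the distance reported by the sensor pointing at bearings_deg[i].
    Returns the free bearing closest to target_deg, or None if all are blocked.
    """
    # Obstacle density per sector: 0 beyond max_range_m, approaching 1 as the
    # obstacle gets closer to the robot.
    density = [max(0.0, 1.0 - r / max_range_m) for r in ranges_m]
    # Spread each obstacle into neighbouring sectors so the chosen gap leaves
    # room for the robot's width (a crude stand-in for histogram smoothing).
    n = len(density)
    smoothed = [max(density[max(0, i - 1):i + 2]) for i in range(n)]
    # Sectors below the threshold are candidate openings.
    free = [b for b, d in zip(bearings_deg, smoothed) if d < threshold]
    if not free:
        return None
    return min(free, key=lambda b: abs(b - target_deg))

# Hypothetical bearings for the eight sensors on the curved mounting bar.
BEARINGS = [-90, -60, -30, -10, 10, 30, 60, 90]

# An obstacle 0.3 m directly ahead: steer to the nearest clear sector.
print(vfh_steer([2.0, 2.0, 2.0, 0.3, 0.3, 2.0, 2.0, 2.0], BEARINGS, target_deg=5))
```

Returning None when every sector is blocked lets the caller fall back to stopping or reversing rather than driving into an obstacle.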

Smooth Motion

Dynamic Window

The Dynamic Window approach scored candidate combinations of linear speed and yaw rate and selected the combination giving the best trajectory towards a target point.
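A minimal sketch of the Dynamic Window idea follows. It samples the speeds and yaw rates reachable within one control period, rolls each candidate forward and keeps the best-scoring pair. The velocity and acceleration limits, time step, horizon and scoring weights are hypothetical. The obstacle-clearance term of the usual formulation is omitted, on the assumption that obstacle avoidance is handled separately.

```python
import math

def _linspace(lo, hi, n):
    """n evenly spaced values from lo to hi inclusive (n >= 2)."""
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]

def _simulate(v, w, dt, horizon):
    """Roll out a constant (v, w) command from the origin, heading along +x."""
    x = y = theta = 0.0
    for _ in range(int(round(horizon / dt))):
        theta += w * dt
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
    return x, y

def dwa_choose(v, w, goal, dt=0.1, horizon=1.0, max_v=0.5, max_w=1.5,
               max_acc=0.5, max_w_acc=2.0, samples=11, k_goal=1.0, k_speed=0.1):
    """Return the (linear speed, yaw rate) command that scores best.

    v, w: current linear speed (m/s) and yaw rate (rad/s).
    goal: (x, y) target point in the robot frame (metres, +x forward, +y left).
    """
    # The dynamic window: commands reachable from (v, w) within one period dt.
    v_lo, v_hi = max(0.0, v - max_acc * dt), min(max_v, v + max_acc * dt)
    w_lo, w_hi = max(-max_w, w - max_w_acc * dt), min(max_w, w + max_w_acc * dt)
    best, best_score = (v_lo, w_lo), -math.inf
    for v_c in _linspace(v_lo, v_hi, samples):
        for w_c in _linspace(w_lo, w_hi, samples):
            x, y = _simulate(v_c, w_c, dt, horizon)
            # Reward ending close to the goal, with a small bonus for speed.
            score = -k_goal * math.hypot(goal[0] - x, goal[1] - y) + k_speed * v_c
            if score > best_score:
                best, best_score = (v_c, w_c), score
    return best

# Cruising at 0.3 m/s with a target straight ahead: accelerate, don't turn.
print(dwa_choose(0.3, 0.0, goal=(2.0, 0.0)))
```

Restricting the search to reachable commands is what keeps the chosen motion smooth: the robot can never be asked for a jump in speed or yaw rate beyond its acceleration limits.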

PID

PID control was used to regulate the motors so that they followed their speed commands smoothly instead of behaving erratically.
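A minimal discrete PID controller of the kind described is sketched below. It clamps the output to the actuator range and stops integrating while saturated, a simple anti-windup measure. The gains, output limits and first-order motor model in the usage example are hypothetical, not the team's tuned values.

```python
class PID:
    """Discrete PID controller with output clamping and simple anti-windup."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the actuator command for one control period of length dt."""
        error = setpoint - measurement
        self.integral += error * dt
        # Skip the derivative on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        if output > self.out_max or output < self.out_min:
            # Saturated: undo this step's integration so the integral cannot wind up.
            self.integral -= error * dt
            output = min(self.out_max, max(self.out_min, output))
        return output

# Usage with a hypothetical first-order motor model (gain 2.0, time constant 0.2 s).
pid = PID(kp=0.5, ki=2.0, kd=0.0)
speed, dt = 0.0, 0.01
for _ in range(1000):
    command = pid.update(setpoint=1.0, measurement=speed, dt=dt)
    speed += (2.0 * command - speed) * dt / 0.2
print(round(speed, 3))
```

The integral term removes the steady-state speed error that proportional control alone would leave. The clamp keeps commands within the range the motor driver accepts.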

Achievements

Partial success at NIARC 2018

- The team completed all required competition milestones and group deliverables

- After demonstrating the robot's capabilities, the team was awarded a faculty grant to fund travel expenses for the live competition in Sydney

- Although the robot was quite capable, it was knocked out of contention in the elimination rounds