Difference between revisions of "Projects:2019s2-22702 Barkbusters 2.0"

m |

|||

| (20 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | The aim of the project is to develop a small | + | The aim of the project is to develop a small deployable device which can automatically record, identify a dog barking from an individual dog and generate a log with the date and time tag. This device is developed to give evidence to the dog and cat management office. |

| − | |||

| − | |||

| − | |||

| − | Bark busters 2.0 is designed not to control the barking of the dog but to log the barking activity of a specific animal. This can be used as | + | |

| − | When dealing with dogs, there are legal issues that need to be considered. Legally, the person owning the dog is solely responsible for the control of the dog is guilty of an offence if a dog” creates a noise, by barking or otherwise, which persistently occurs or continues to such a degree or extent that | + | == Introduction == |

| + | |||

| + | === Project description === | ||

| + | Bark busters 2.0 is designed not to control the barking of the dog but to log the barking activity of a specific animal. This can be used as proof by the person who is facing a nuisance due to a specific animal and make a complaint to the concerned officer. This is a simple solution to collect proof against a disturbing animal. | ||

| + | When dealing with dogs, there are legal issues that need to be considered. Legally, the person owning the dog is solely responsible for the control of the dog is guilty of an offence if a dog” creates a noise, by barking or otherwise, which persistently occurs or continues to such a degree or extent that unreasonably interferes with the peace or convenience of a person.” Bark busters 2.0 helps the person facing inconvenience with a specific animal to collect proof against it and complaint to the concerned officer. | ||

We will have to design the device in such a way that it should be able to differentiate the noises and able to record only the log activity of a specific animal. It should also be able to differentiate the bark of one animal to another. | We will have to design the device in such a way that it should be able to differentiate the noises and able to record only the log activity of a specific animal. It should also be able to differentiate the bark of one animal to another. | ||

| + | === Project team === | ||

| + | |||

| + | Project students | ||

| + | * Mingde Jiang | ||

| + | * Nityasri Gallennagari | ||

| + | Supervisors | ||

| + | * Prof. Langford White | ||

| + | * Dr. Brian Ng | ||

| + | |||

| + | === Objectives === | ||

| + | This project aims to develop a small field deployable device which automatically detects the an individual dog bark from other ambient sounds in the environment and stores it in the storage space of the device.. | ||

| + | |||

| + | == Background == | ||

| + | |||

| + | According to the data from the Brisbane City Council (BCC), barking dogs, crowing roosters and screeching cats top the list when it comes to complaints to the council for noise, with 7,245 complaints in 2016/17 [1]. This news indicated that the noise of animals from the field or even from their neighbours are annoying residents. According to the South Australian Dog and Cat Management Act 1995, a person who owns or is responsible for the control of a dog is guilty of an offence if the dog (either alone or together with other dogs, whether or not in the same ownership) creates a noise, by barking or otherwise, which persistently occurs or continues to such a degree or extent that it unreasonably interferes with the peace, comfort or convenience of a person [2]. People who are suffering from the noise emitted by the dogs are allowed to make a complaint to the city council. To do that, the city council will provide a dog barking pack which include a 7 day diary to complaints. Complaints have to complete the diary with the dog barking activity record to prove that they are really troubled by dog barks. However, self-completed records by the complaints are inaccurate and may not be fair. That’s why a device is needed with capabilities of recognizing and recording in this case. | ||

| + | |||

| + | The standard program of the Campbelltown City Council [3] dealing with complaints about the dog barks is to provide a Barking Dog pack which includes a diary for complainants to record the barking activity. The following action is once there is sufficient evidence in the diary, the council will contact the dog owner to rectifier the situation and perform a 14 days monitor. If the circumstances have not improved, the council will review information collected from nearby residents to determine whether a further action will be performed. If it can be established that the noise is persistent and is an unreasonable interference, the council will take action, however, civil action by those effected is also possible. | ||

| + | |||

| + | However, the only solution currently available to the disturbed residents is to buy a recorder with enough storage space and power [4]. They are suggested to turn the recorder on and leave it in the yard under a shelter cover. “Just leave it under cover outside recording for whatever periods you want and download the audio files from it. Don't do more than a few hours at a time or it's just too big a file to easily deal with.”, said by Zambuck, a replier to this complaint [5]. | ||

| + | |||

| + | This method has two main problems. The first one is that the recorder is way too expensive to be used only for complaint proof collecting. People maybe not willing to pay that much for others fault. The second defect is a recorder cannot identify whether the barking belongs to an individual dog. This gives the dog owner opportunity to argue that the barking recorded is not from his or her dog. | ||

| + | |||

| + | The device we developed simplifies the complex process by providing a device which can automatically record, identify a dog barking from an individual dog and generate a log with time tag instead of investigating by the council. People who are annoyed by the dog bark can offer an evidence of noise by simply place the device near the barking source. Also, the evidence recorded by the device is more accurate and convincing than the complainer's manual record. This is the significance of this project. | ||

| + | |||

| + | == Method == | ||

| + | |||

| + | This section introduced the background theory, hardware implementation and software implementation of the project. | ||

| + | |||

| + | === System Design === | ||

| + | |||

| + | The system design for the device is shown as Figure 1. | ||

| + | |||

| + | [[File:SystemDesignDiagram.png|700px|Image:700pixels|center]] | ||

| + | |||

| + | <center>Figure 1. System Design Diagram</center> | ||

| + | |||

| + | === Background theory === | ||

| + | |||

| + | ==== '' Mel Frequency Cepstral Coefficients (MFCCs) '' ==== | ||

| + | |||

| + | MFCC is one of the methods for extracting speech feature parameters. Because of its unique cepstrum-based extraction method, it is more in line with human auditory principles, and thus is the most common and effective speech feature extraction algorithm. The MFCC is a cepstral coefficient extracted from the Mel scale frequency domain. Since humans have a very accurate sense of sound, they can distinguish between different sound sources by their timbre. | ||

| + | |||

| + | The Mel scale exactly describes the nonlinear characteristics of the human ear's perception of frequency to simulate the human's accurate discrimination of timbre [6]. | ||

| + | |||

| + | ==== '' Gaussian Mixture Model (GMM) '' ==== | ||

| + | |||

| + | A Gaussian Mixture Model (GMM) is a parametric probability density function represented as a weighted sum of Gaussian component densities. GMMs are commonly used as a parametric model of the probability distribution of continuous measurements or features in a biometric system, such as vocal tract related spectral features in a speaker recognition system. GMM parameters are estimated from training data using the iterative Expectation-Maximization (EM) algorithm or Maximum A Posteriori (MAP) estimation from a well-trained prior model. [7] | ||

| + | |||

| + | ==== '' Diagnostic Feature Designer App '' ==== | ||

| + | |||

| + | The time domain and spectral domain features of the audio data samples are extracted using Diagnostic feature designer app in MATLAB. The features extracted include shape factor, clearance factor, kurtosis etc. The generated features can be exported to MATLAB workspace to be used as inputs for Classification algorithms. | ||

| + | |||

| + | ==== '' Naive Bayes Classifier '' ==== | ||

| + | |||

| + | Naive Bayes Classifier is a type of supervised machine learning algorithm which assumes that the features are statistically independent. The probability of each class is estimated using Bayes rule and the class with highest probability is the outcome of the algorithm. This classifier can be used to train large datasets within short computational time. This is the simplest algorithm used in speech recognition systems[8]. | ||

| + | |||

| + | ==== '' K-Means Clustering '' ==== | ||

| + | |||

| + | K-means clustering is an unsupervised machine learning algorithm which looks for a fixed number of clusters(k) in the data like automatic speech recognition. K-means algorithm identifies k number of centroids and allocates each data point to the nearest cluster by an iteration process until the centroids meet a convergence criterion[9]. | ||

| + | |||

| + | |||

| + | === Software Development (Model Training) === | ||

| + | |||

| + | The software development of the project is implemented in MATLAB platform. The database of the signals are collected from an online source. The collected signals consists of dog barks and other ambient sounds like car horns, siren, children playing etc. and are processed. Features of these audio samples are extracted using Diagnostic Feature Designer App in MATLAB. The dimensions of the extracted features are reduced by principal component analysis(PCA). The Naive Bayes classifier is trained using Statistics and Machine Learning Toolbox with the original extracted data. The Naive Bayes Classifier worked with an accuracy of 78%. Also, a model is trained using k-means algorithm in MATLAB with the original data set of features and Principal components obtained from PCA. The K-means algorithm classifies the data and clusters the data into two classes(Dog bark and non-dog bark). The GMM clustering is performed on the data in MATLAB but it did not work well on this dataset. | ||

| + | |||

| + | |||

| + | === Hardware selection === | ||

| + | |||

| + | ==== '' Raspberry Pi 4b '' ==== | ||

| + | |||

| + | Raspberry Pi 4 Model B is the latest product in the popular Raspberry Pi range of computers. This version beats the Raspberry Pi in the following aspects [10]: | ||

| + | |||

| + | *Broadcom BCM2711, Quad-core Cortex-<u>A72</u> (ARM v8) 64-bit SoC @ <u>1.5GHz</u>. | ||

| + | |||

| + | *1GB, <u>2GB, or 4GB</u> LPDDR4-3200 SDRAM (depending on model). | ||

| + | |||

| + | *2.4GHz and 5.0GHz IEEE 802.11ac wireless, Bluetooth <u>5.0</u>, BLE. | ||

| + | |||

| + | *<u>2 × USB 3.0 ports</u>; 2 × USB 2.0 ports. | ||

| + | |||

| + | *5V DC via <u>USB-C</u> connector (minimum 3A*). | ||

| + | |||

| + | ==== '' USB microphone '' ==== | ||

| + | |||

| + | Since the Raspberry Pi 4b has only audio input from USB, it is panned to use a USB microphone. | ||

| + | |||

| + | ==== '' Portable battery '' ==== | ||

| + | |||

| + | The portable battery is needed for powering the board, it is planned to use a 10000mAh Mi Power Bank Pro. | ||

| + | |||

| + | ==== '' Prototype photo '' ==== | ||

| + | |||

| + | [[File:PrototypePhoto.png|700px|Image:700pixels|center]] | ||

| + | |||

| + | <center>Figure 2. Prototype photo</center> | ||

| + | |||

| + | == Results == | ||

| + | |||

| + | This section shows the testing results of the product prototype. Functions such as automatically recording, identifying and saving are tested. | ||

| + | |||

| + | === Automatically recording and records saving === | ||

| + | |||

| + | With the USB microphone plugged in, the python program can directly run for recording. For the test, we set the volume threshold to 4000. Two dog bark recoding samples and two human speaking recording samples are chosen to be used. One of the dog bark recording samples is from a small dog, the other one is from a large one. One of the human speaking recording samples is from myself (male), the other one is from my colleague Nityasri (female). The volumes of these recording samples are adjusted to the same level by human hearing. The test result is shown in Figure 3. | ||

| + | |||

| + | [[File:DistanceTest.png|700px|Image:700pixels|center]] | ||

| + | |||

| + | <center>Figure 3. The test results for recording at different distances</center> | ||

| + | |||

| + | === Sound classification === | ||

| + | |||

| + | As a proof providing device, the accuracy of classifying should be acceptable. Hence the classification test is performed. 100 samples are selected for testing. 50 of them are human speaking samples which are recorded by different people in different genders and ages. The other 50 are dog bark samples which are collected from the internet in different breeds and sizes. The test result are shown as a confusion matrix. | ||

| + | |||

| + | [[File:ConfusionMatrix.png|500px|Image:500pixels|center]] | ||

| + | |||

| + | <center>Figure 4. Confusion matrix of classification tests</center> | ||

| + | |||

| + | === Recordings saving and uploading === | ||

| + | |||

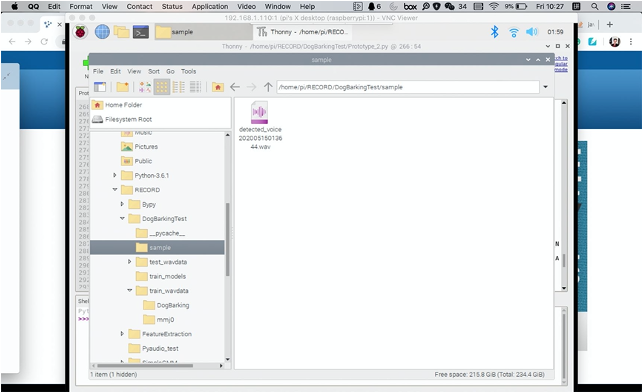

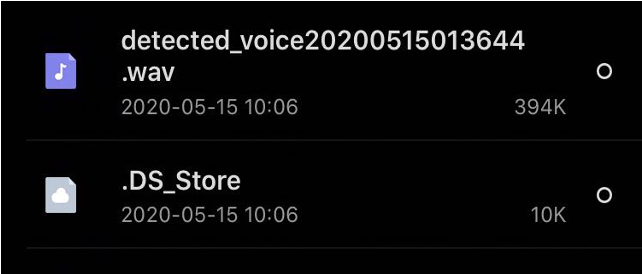

| + | If a sound sample is identified as a dog bark, it will be stored in a specific folder in the Raspbian system with time labels which is shown in Figure 5. | ||

| + | |||

| + | [[File:LocalStorage.png|700px|Image:700pixels|center]] | ||

| + | |||

| + | <center>Figure 5. Recordings saved in local storage</center> | ||

| + | |||

| + | It is set that to upload all files to the cloud disk at 10.05 am. The figure shows the file list in the cloud disk. | ||

| + | |||

| + | [[File:CloudDiskStorage.png|700px|Image:700pixels|center]] | ||

| + | |||

| + | <center>Figure 6. Recording saved in cloud disk</center> | ||

| + | === Naive Bayes Classifier=== | ||

| + | A data sample of 2000 sample is taken as the input of the Naïve Bayes classifier with one class consisting of 1000 dog bark sounds and another class with the rest 1000 samples which consists of other environmental sounds. The confusion matrix of Naïve Bayes classifier is shown in the figure 7 below. | ||

| + | [[File:Confusionmatrix.png|700px|Image:700pixels|center]] | ||

| + | <center> Figure 7. Confusion matrix of a Naive Bayes Classifier</center> | ||

| + | === K-means Clustering === | ||

| + | |||

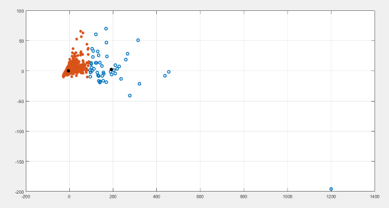

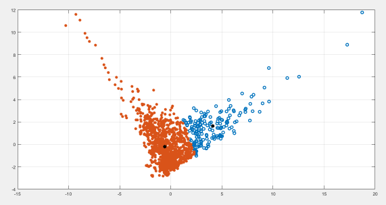

| + | Clustering is performed using k-means algorithm with both the datasets, one with the original extracted feature dataset and the other with the principal components from PCA. | ||

| + | |||

| + | [[File:K-Means1.png|500px|Image:500pixels|center]] | ||

| + | <center> Figure 8. Clustering using principal components with K-means Algorithm </center> | ||

| + | |||

| + | [[File:K-Means2.png|500px|Image:500pixels|center]] | ||

| + | <center> Figure 9. Clustering using original features with K-means algorithm </Center> | ||

| + | |||

| + | == Conclusion == | ||

| + | |||

| + | === Results conclusion === | ||

| + | |||

| + | The device can be triggered by a sound volume threshold. There may be some syllables missing at the beginning and the end of the recording. The file can be successfully stored in a specific folder in the Raspbian system for further processing. | ||

| + | |||

| + | The classifier can identify the dog barks from human speaks with the accuracy close to 100%. | ||

| + | The time-domain and spectral-domain features are successfully extracted from Diagnostic Feature Designer App. The MATLAB generated Naive Bayes Classifier works with an accuracy of 78%. The k-means Clustering algorithm clusters the data fairly good. The GMM Clustering did not work on the collected data set. | ||

| + | |||

| + | The device can save recordings with time labels which are identified as dog barks. It can upload recordings to a cloud disk at anytime. Users can get access to the dog bark recordings online and free to download. | ||

| + | |||

| + | === Future work === | ||

| + | |||

| + | ==== ''Sound signal amplifier'' ==== | ||

| + | |||

| + | From the results gained in the testing section, the device has no recording capability for sound far away. In the future development it may consider to add a sound amplifier. One of the methods of amplifying the sound volume is linear amplification. The linear sound signal amplifier is basically the volume amplifier. The function can be described as following steps. | ||

| + | |||

| + | The first step is to set the threshold to a very low value to assure a piece of voice with low volume (long distance away) can be recorded. The second step is to load the sound sample into the digital signal. The third step is to multiply the original signal by a amplification factor. It should be noticed that the amplication factor should be calculated based on the amplitude of the original signal to avoid the distortion of the sound. It is considered to calculate the factor using multiple of the amplitude. | ||

| + | |||

| + | ==== ''Identification of an individual dog'' ==== | ||

| + | |||

| + | Since the model training is performed on board, the training sound database cannot be too large. The calculating speed is limited for the Raspberry Pi 4b, it will take more than 5 times of the calculating time of a PC. Thus, a better model can be trained on a PC by enlarging the database. | ||

| + | |||

| + | == References == | ||

| + | [1] “Barking dogs, crowing roosters and screeching cats top noise complaints list in Brisbane” (https://www.abc.net.au/news/2017-10-19/most-common-noise-complaints-brisbane-and-what-to-do-about-it/9059182) | ||

| + | |||

| + | [2] Dog and Cat Management Act 1995, 45A (5). | ||

| − | + | [3] “Barking Dog Information Pack” (https://www.campbelltown.sa.gov.au/__data/assets/pdf_file/0027/236961/Barking-Dog-Information-Pack.pdf) | |

| − | + | [4] "2GB Digital Voice Recorder" (https://www.jaycar.com.au/2gb-digital-voice-recorder/p/XC0387) | |

| − | + | [5] Online post: “Any Good Android Recorders to Prove a Barking Dog?” (https://www.ozbargain.com.au/node/373772) | |

| − | |||

| − | + | [6] “Speech Processing for Machine Learning: Filter banks, Mel-Frequency Cepstral Coefficients (MFCCs) and What's In-Between” (https://haythamfayek.com/2016/04/21/speech-processing-for-machine-learning.html) | |

| − | + | [7] L. Baum er al., A maximization technique occurring in the statistical analysis of probabilistic functions of Markov chains, Ann. Math Srar., vol. 41, pp. 164-171, 1970. | |

| − | |||

| − | + | [8] S. B. Kotsiantis, “Supervised machine learning: a review of classification techniques,” informatica, vol. 31, no. 3, p. 249(20), october 2007 | |

| + | [9]D. M. J. Garbade, “Understanding K-means Clustering in Machine Learning,” 13 Sep 2018. [Online]. Available: https://towardsdatascience.com/understanding-k-means-clustering-in-machine-learning-6a6e67336aa1. [Accessed 28 May 2020]. | ||

| − | + | [10] “Raspberry Pi 4 vs Raspberry Pi 3B+”(https://magpi.raspberrypi.org/articles/raspberry-pi-4-vs-raspberry-pi-3b-plus) | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

Latest revision as of 10:30, 9 June 2020

The aim of the project is to develop a small deployable device that can automatically record barking, identify the bark of an individual dog and generate a log with a date and time tag. The device is intended to provide evidence to the dog and cat management office.


Introduction

Project description

Bark busters 2.0 is designed not to control the barking of a dog but to log the barking activity of a specific animal. A person who is disturbed by that animal can use the log as proof when making a complaint to the concerned officer, which makes it a simple way to collect evidence. When dealing with dogs, there are legal issues that need to be considered. Legally, a person who owns or is responsible for the control of a dog is guilty of an offence if the dog “creates a noise, by barking or otherwise, which persistently occurs or continues to such a degree or extent that it unreasonably interferes with the peace, comfort or convenience of a person.” The device therefore has to be designed so that it can differentiate between noises and log only the activity of a specific animal. It should also be able to differentiate the bark of one animal from that of another.

Project team

Project students

- Mingde Jiang

- Nityasri Gallennagari

Supervisors

- Prof. Langford White

- Dr. Brian Ng

Objectives

This project aims to develop a small field-deployable device that automatically distinguishes the bark of an individual dog from other ambient sounds in the environment and stores it in the device's storage.

Background

According to data from the Brisbane City Council (BCC), barking dogs, crowing roosters and screeching cats top the list of noise complaints to the council, with 7,245 complaints in 2016/17 [1]. This indicates that noise from animals, whether in the field or at neighbouring properties, is annoying residents. According to the South Australian Dog and Cat Management Act 1995, a person who owns or is responsible for the control of a dog is guilty of an offence if the dog (either alone or together with other dogs, whether or not in the same ownership) creates a noise, by barking or otherwise, which persistently occurs or continues to such a degree or extent that it unreasonably interferes with the peace, comfort or convenience of a person [2]. People who are suffering from noise made by dogs are allowed to make a complaint to the city council. To do so, the city council provides a dog barking pack which includes a 7-day diary for complainants. Complainants have to complete the diary with a record of the barking activity to prove that they are really troubled by the barking. However, records completed by the complainants themselves can be inaccurate and may not be fair. That is why a device capable of recognising and recording barks is needed in this case.

The standard procedure of the Campbelltown City Council [3] for dealing with complaints about dog barking is to provide a Barking Dog pack, which includes a diary for complainants to record the barking activity. Once there is sufficient evidence in the diary, the council contacts the dog owner to rectify the situation and monitors it for 14 days. If the circumstances have not improved, the council reviews information collected from nearby residents to determine whether further action will be taken. If it can be established that the noise is persistent and is an unreasonable interference, the council will take action; however, civil action by those affected is also possible.

However, the only solution currently available to disturbed residents is to buy a recorder with enough storage space and power [4]. The suggestion is to turn the recorder on and leave it in the yard under a sheltering cover. “Just leave it under cover outside recording for whatever periods you want and download the audio files from it. Don't do more than a few hours at a time or it's just too big a file to easily deal with.”, wrote Zambuck in reply to this complaint [5].

This method has two main problems. The first is that the recorder is too expensive to be used only for collecting proof for a complaint. People may not be willing to pay that much for someone else's fault. The second is that a recorder cannot identify whether the barking belongs to an individual dog. This gives the dog owner the opportunity to argue that the recorded barking is not from his or her dog.

The device we developed simplifies this process: it automatically records, identifies barking from an individual dog and generates a log with time tags, instead of requiring an investigation by the council. People who are annoyed by a dog's barking can provide evidence of the noise by simply placing the device near the barking source. The evidence recorded by the device is also more accurate and convincing than the complainant's manual record. This is the significance of this project.

Method

This section introduces the background theory, hardware implementation and software implementation of the project.

System Design

The system design for the device is shown in Figure 1.

Background theory

Mel Frequency Cepstral Coefficients (MFCCs)

MFCC is one of the methods for extracting speech feature parameters. Because its cepstrum-based extraction is designed to be in line with human auditory principles, it is one of the most common and effective speech feature extraction algorithms. MFCCs are cepstral coefficients extracted in the Mel-scale frequency domain. Humans have an accurate sense of sound and can distinguish between different sound sources by their timbre.

The Mel scale describes the nonlinear characteristics of the human ear's perception of frequency and is used to simulate the human's accurate discrimination of timbre [6].
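
As an illustration of this nonlinear mapping, the sketch below converts between Hz and mels using the commonly quoted formula mel = 2595 · log10(1 + f / 700). The report does not state which variant of the Mel scale was used, so the formula and the function names are assumptions made for illustration only.

```python
import math


def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels (assumed 2595*log10(1 + f/700) variant)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_spaced_frequencies(f_low, f_high, n_points):
    """Return n_points frequencies (Hz) that are evenly spaced on the Mel scale."""
    m_low, m_high = hz_to_mel(f_low), hz_to_mel(f_high)
    step = (m_high - m_low) / (n_points - 1)
    return [mel_to_hz(m_low + i * step) for i in range(n_points)]
```

Points that are evenly spaced in mels are packed densely at low frequencies and spread out at high frequencies, which is how a Mel filter bank mimics the ear's finer resolution at low frequencies.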

Gaussian Mixture Model (GMM)

A Gaussian Mixture Model (GMM) is a parametric probability density function represented as a weighted sum of Gaussian component densities. GMMs are commonly used as a parametric model of the probability distribution of continuous measurements or features in a biometric system, such as vocal tract related spectral features in a speaker recognition system. GMM parameters are estimated from training data using the iterative Expectation-Maximization (EM) algorithm or Maximum A Posteriori (MAP) estimation from a well-trained prior model. [7]
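
The "weighted sum of Gaussian component densities" can be sketched for the one-dimensional case as follows. This is a minimal illustration, not the MATLAB routine used in the project; the function names and the restriction to scalar features are assumptions.

```python
import math


def gaussian_pdf(x, mean, variance):
    """Density of a 1-D Gaussian with the given mean and variance at x."""
    return math.exp(-((x - mean) ** 2) / (2.0 * variance)) / math.sqrt(
        2.0 * math.pi * variance
    )


def gmm_pdf(x, weights, means, variances):
    """Density of a 1-D Gaussian mixture: sum_k w_k * N(x; mean_k, variance_k)."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("mixture weights must sum to 1")
    return sum(
        w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)
    )
```

In practice the weights, means and variances would be estimated from training data (for example with EM, as described above) rather than chosen by hand.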

Diagnostic Feature Designer App

The time-domain and spectral-domain features of the audio data samples are extracted using the Diagnostic Feature Designer app in MATLAB. The extracted features include shape factor, clearance factor, kurtosis, etc. The generated features can be exported to the MATLAB workspace to be used as inputs for classification algorithms.
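
For readers without MATLAB, the three named time-domain features can be sketched in plain Python using their usual textbook definitions. Whether the app applies exactly the same numerical conventions (for example, the non-excess form of kurtosis) is an assumption here, and the function names are illustrative.

```python
import math


def shape_factor(x):
    """RMS value divided by the mean absolute value."""
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    mean_abs = sum(abs(v) for v in x) / len(x)
    return rms / mean_abs


def clearance_factor(x):
    """Peak absolute value divided by the squared mean of sqrt(|x|)."""
    peak = max(abs(v) for v in x)
    mean_sqrt = sum(math.sqrt(abs(v)) for v in x) / len(x)
    return peak / mean_sqrt ** 2


def kurtosis(x):
    """Fourth central moment divided by the squared variance (non-excess form)."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m4 / m2 ** 2
```

All three are dimensionless, so they describe the shape of a waveform (how impulsive or "peaky" it is) independently of its overall volume.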

Naive Bayes Classifier

The Naive Bayes classifier is a supervised machine learning algorithm which assumes that the features are statistically independent given the class. The probability of each class is estimated using Bayes' rule, and the class with the highest probability is the outcome of the algorithm. This classifier can be trained on large datasets within a short computation time. It is one of the simplest algorithms used in speech recognition systems [8].
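
The decision rule can be sketched as a small Gaussian Naive Bayes classifier. This is an illustrative stand-in, not the MATLAB model trained in the project: the per-feature Gaussian likelihood, the variance floor and the function names are all assumptions.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(features, labels):
    """Estimate a prior and per-feature mean and variance for each class."""
    grouped = defaultdict(list)
    for row, label in zip(features, labels):
        grouped[label].append(row)
    model = {}
    for label, rows in grouped.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # A small floor keeps the variance strictly positive.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(columns, means)
        ]
        model[label] = (n / len(features), means, variances)
    return model


def predict(model, row):
    """Return the class with the highest log-posterior for one feature vector."""
    def log_posterior(label):
        prior, means, variances = model[label]
        score = math.log(prior)
        # Independence assumption: per-feature log-likelihoods simply add up.
        for v, m, var in zip(row, means, variances):
            score += -0.5 * math.log(2.0 * math.pi * var) - (v - m) ** 2 / (2.0 * var)
        return score
    return max(model, key=log_posterior)
```

Training only needs one pass over the data to collect means and variances, which is why the method scales well to large datasets.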

K-Means Clustering

K-means clustering is an unsupervised machine learning algorithm which looks for a fixed number of clusters (k) in the data; it is used in applications such as automatic speech recognition. The algorithm identifies k centroids and allocates each data point to the nearest cluster, iterating until the centroids meet a convergence criterion [9].
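
The iteration described above can be sketched in a few lines. For reproducibility this sketch initialises the centroids with the first k points and stops when the assignments no longer change; both choices are assumptions made for illustration, and MATLAB's own k-means routine uses different defaults.

```python
def kmeans(points, k, max_iter=100):
    """Cluster points (lists of floats) into k groups; returns (centroids, assignments)."""
    centroids = [list(p) for p in points[:k]]  # deterministic init: first k points
    assignments = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_assignments = [
            min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged: no point changed cluster
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assignments
```

With k = 2 this corresponds to the two-class setting used in the project (dog bark and non-dog bark), although the cluster indices themselves carry no label.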

Software Development (Model Training)

The software development of the project is implemented on the MATLAB platform. The database of signals is collected from an online source. The collected signals consist of dog barks and other ambient sounds such as car horns, sirens and children playing, and are processed before use. Features of these audio samples are extracted using the Diagnostic Feature Designer app in MATLAB. The dimensionality of the extracted features is reduced by principal component analysis (PCA). The Naive Bayes classifier is trained using the Statistics and Machine Learning Toolbox with the original extracted data, and achieved an accuracy of 78%. A model is also trained using the k-means algorithm in MATLAB, both with the original feature data set and with the principal components obtained from PCA. The k-means algorithm clusters the data into two classes (dog bark and non-dog bark). GMM clustering was also performed on the data in MATLAB, but it did not work well on this dataset.

Hardware selection

Raspberry Pi 4b

Raspberry Pi 4 Model B is the latest product in the popular Raspberry Pi range of computers. This version improves on the Raspberry Pi 3B+ in the following aspects [10]:

- Broadcom BCM2711, Quad-core Cortex-A72 (ARM v8) 64-bit SoC @ 1.5GHz.

- 1GB, 2GB, or 4GB LPDDR4-3200 SDRAM (depending on model).

- 2.4GHz and 5.0GHz IEEE 802.11ac wireless, Bluetooth 5.0, BLE.

- 2 × USB 3.0 ports; 2 × USB 2.0 ports.

- 5V DC via USB-C connector (minimum 3A).

USB microphone

Since the Raspberry Pi 4b accepts audio input only via USB, it is planned to use a USB microphone.

Portable battery

A portable battery is needed to power the board. It is planned to use a 10000mAh Mi Power Bank Pro.

Prototype photo

Results

This section shows the testing results of the product prototype. Functions such as automatic recording, identification and saving are tested.

Automatically recording and records saving

With the USB microphone plugged in, the Python program can be run directly to record. For the test, we set the volume threshold to 4000. Two dog bark recordings and two human speech recordings are used as samples. One of the dog bark recordings is from a small dog and the other is from a large dog. One of the human speech recordings is from myself (male) and the other is from my colleague Nityasri (female). The volumes of these recordings are adjusted to the same level by ear. The test result is shown in Figure 3.
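
The report does not say in which units the threshold of 4000 is expressed. The sketch below assumes it is compared against the peak absolute amplitude of signed 16-bit samples (range -32768 to 32767); the function names and the chunked input are hypothetical and do not reproduce the project's actual Python program.

```python
def exceeds_threshold(samples, threshold=4000):
    """True if any sample's absolute amplitude reaches the threshold."""
    return any(abs(s) >= threshold for s in samples)


def triggered_chunks(chunks, threshold=4000):
    """Return the indices of the audio chunks that would start a recording."""
    return [
        i for i, chunk in enumerate(chunks) if exceeds_threshold(chunk, threshold)
    ]
```

Because a recording only starts once a chunk crosses the threshold, the quiet onset of a sound can be lost, which is consistent with syllables going missing at the start of a recording.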

Sound classification

As a device that provides proof, its classification accuracy should be acceptable, so a classification test is performed. 100 samples are selected for testing. 50 of them are human speech samples recorded by people of different genders and ages. The other 50 are dog bark samples collected from the internet, covering different breeds and sizes. The test results are shown as a confusion matrix.
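
A confusion matrix and the accuracy derived from it can be computed as sketched below. The helper names are illustrative, and any labels passed in would be placeholders rather than the project's test data.

```python
from collections import Counter


def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are true classes, columns are predicted classes, in the order of `classes`."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in classes] for t in classes]


def accuracy(matrix):
    """Fraction of samples on the diagonal, i.e. classified correctly."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total
```

For a balanced test set such as 50 dog barks and 50 human speech samples, each row of the matrix sums to 50, so the off-diagonal entries directly show how many samples of each class were misclassified.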

Recordings saving and uploading

If a sound sample is identified as a dog bark, it is stored with a time label in a specific folder in the Raspbian system, as shown in Figure 5.
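
One way to attach a time label is to encode the detection time in the file name. The folder name, the `bark_` prefix and the timestamp pattern below are hypothetical; the report does not state the naming scheme actually used.

```python
from datetime import datetime
from pathlib import Path


def bark_recording_path(folder, when):
    """Build a path such as <folder>/bark_YYYYMMDD_HHMMSS.wav for a detection time."""
    return Path(folder) / f"bark_{when:%Y%m%d_%H%M%S}.wav"
```

Putting the year first makes the files sort chronologically by name, so the folder listing doubles as a simple barking log.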

The device is set to upload all files to the cloud disk at 10:05 am. Figure 6 shows the file list in the cloud disk.
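
A fixed daily upload time can be handled by computing when the next upload is due. This is a sketch of the scheduling logic only; the function name is an assumption, and the upload to the cloud disk itself depends on the storage service and is not shown.

```python
from datetime import datetime, time, timedelta


def next_upload_time(now, upload_at=time(10, 5)):
    """Return the next datetime strictly after `now` at which the upload should run."""
    candidate = datetime.combine(now.date(), upload_at)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has passed, use tomorrow's
    return candidate
```

A program could sleep until the returned time, upload the saved recordings and then call the function again for the following day.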

Naive Bayes Classifier

A data set of 2000 samples is taken as the input of the Naive Bayes classifier: one class consists of 1000 dog bark sounds and the other class consists of the remaining 1000 samples of other environmental sounds. The confusion matrix of the Naive Bayes classifier is shown in Figure 7.

K-means Clustering

Clustering is performed using the k-means algorithm on both datasets: the original extracted feature dataset and the principal components from PCA.

Conclusion

Results conclusion

The device can be triggered by a sound volume threshold. There may be some syllables missing at the beginning and the end of the recording. The file can be successfully stored in a specific folder in the Raspbian system for further processing.

The classifier can distinguish dog barks from human speech with an accuracy close to 100%. The time-domain and spectral-domain features are successfully extracted using the Diagnostic Feature Designer app. The MATLAB-generated Naive Bayes classifier works with an accuracy of 78%. The k-means clustering algorithm clusters the data fairly well. GMM clustering did not work on the collected data set.

The device can save recordings that are identified as dog barks, together with time labels. It can upload recordings to a cloud disk at any time. Users can access the dog bark recordings online and download them for free.

Future work

Sound signal amplifier

The results in the testing section show that the device cannot record sounds from far away. Future development may consider adding a sound amplifier. One method of amplifying the sound volume is linear amplification; a linear sound signal amplifier is essentially a volume amplifier. Its function can be described in the following steps.

The first step is to set the threshold to a very low value to ensure that a low-volume sound (from a long distance away) can be recorded. The second step is to load the sound sample as a digital signal. The third step is to multiply the original signal by an amplification factor. Note that the amplification factor should be calculated based on the amplitude of the original signal to avoid distorting the sound. One option under consideration is to calculate the factor as a multiple of the amplitude.
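
The third step can be sketched as peak-based linear gain. This is one possible reading of "calculated based on the amplitude of the original signal": the factor scales the loudest sample up to the 16-bit limit so that nothing clips. The 16-bit sample format, the gain cap `max_gain` and the function names are assumptions, not part of the original design.

```python
INT16_MAX = 32767


def amplification_factor(samples, target_peak=INT16_MAX, max_gain=20.0):
    """Gain that brings the loudest sample to target_peak, capped at max_gain."""
    peak = max((abs(s) for s in samples), default=0)
    if peak == 0:
        return 1.0  # silence or empty input: nothing to amplify
    return min(target_peak / peak, max_gain)


def amplify(samples, target_peak=INT16_MAX, max_gain=20.0):
    """Multiply every sample by one common factor and clamp to the int16 range."""
    gain = amplification_factor(samples, target_peak, max_gain)
    return [
        max(-INT16_MAX - 1, min(INT16_MAX, int(round(s * gain)))) for s in samples
    ]
```

Using a single factor for the whole recording keeps the amplification linear, so the waveform shape is preserved. The cap on the gain limits how much background noise is amplified in very quiet recordings.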

Identification of an individual dog

Since the model training is performed on board, the training sound database cannot be too large. The computing speed of the Raspberry Pi 4b is limited: training takes more than 5 times as long as on a PC. Thus, a better model can be trained on a PC with an enlarged database.

References

[1] “Barking dogs, crowing roosters and screeching cats top noise complaints list in Brisbane” (https://www.abc.net.au/news/2017-10-19/most-common-noise-complaints-brisbane-and-what-to-do-about-it/9059182)

[2] Dog and Cat Management Act 1995, 45A (5).

[3] “Barking Dog Information Pack” (https://www.campbelltown.sa.gov.au/__data/assets/pdf_file/0027/236961/Barking-Dog-Information-Pack.pdf)

[4] "2GB Digital Voice Recorder" (https://www.jaycar.com.au/2gb-digital-voice-recorder/p/XC0387)

[5] Online post: “Any Good Android Recorders to Prove a Barking Dog?” (https://www.ozbargain.com.au/node/373772)

[6] “Speech Processing for Machine Learning: Filter banks, Mel-Frequency Cepstral Coefficients (MFCCs) and What's In-Between” (https://haythamfayek.com/2016/04/21/speech-processing-for-machine-learning.html)

[7] L. Baum et al., “A maximization technique occurring in the statistical analysis of probabilistic functions of Markov chains,” Ann. Math. Stat., vol. 41, pp. 164-171, 1970.

[8] S. B. Kotsiantis, “Supervised machine learning: a review of classification techniques,” Informatica, vol. 31, no. 3, p. 249(20), October 2007.

[9] D. M. J. Garbade, “Understanding K-means Clustering in Machine Learning,” 13 Sep 2018. [Online]. Available: https://towardsdatascience.com/understanding-k-means-clustering-in-machine-learning-6a6e67336aa1. [Accessed 28 May 2020].

[10] “Raspberry Pi 4 vs Raspberry Pi 3B+” (https://magpi.raspberrypi.org/articles/raspberry-pi-4-vs-raspberry-pi-3b-plus)