Projects:2015s1-31 Cracking the Voynich manuscript code


This wiki page gives a brief overview and summary of the project, covering the project information, approach, deliverables, concluding statements on the results, and future pathways. For further details on the results and analyses, please see [https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cracking_the_Voynich_Code_2015_-_Final_Report our final report].

=Introduction=

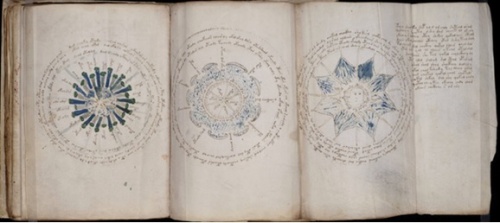

This project brings together an unknown script that has remained a mystery for several decades, powerful computational tools, and access to a large array of data on the internet. It looks at the famous linguistic and cryptologic mystery, the Voynich Manuscript, and attempts to find possible relationships to other languages from a basic statistical perspective. Using a digital transcription of the Voynich, statistics are data-mined, analysed using various techniques, and compared with previous research available on the internet and with a corpus of texts from various languages.

[[File:Astronomical Section.jpg|500px|thumb|center|An Example (Astronomical) Section of the Voynich Manuscript]]

=Team=

==Supervisors==

==Honours Students==

=Project Information=

==Background==

The Voynich Manuscript is a document written in an unknown script. It has been carbon dated to the early 15th century <ref name=Stolte/> and is believed to have been created in Europe <ref name=Reddy/>. Named after Wilfrid Voynich, who purchased it in 1912, the manuscript has become a well-known mystery within linguistics and cryptology. It is divided into several sections based on the nature of its drawings <ref name=Landini/>. These sections are:

*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Herbal_Section.jpg Herbal]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Astronomical_Section.jpg Astronomical]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Biological_Section.jpg Biological]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Cosmological_Section.jpg Cosmological]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Pharmaceutical_Section.jpg Pharmaceutical]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/File:Recipes_Section.jpg Recipes]

The folio numbers and examples of each section are outlined in appendix section A.2. In general, explanations of the Voynich Manuscript fall into three hypotheses <ref name=Schinner/>:
*Cipher Text: The text is encrypted.
*Plain Text: The text is in a plain, natural language that is currently unidentified.
*Hoax: The text contains no meaningful information.

Note that the manuscript may fall under more than one of these hypotheses <ref name=Schinner/>. For example, it may have been written using steganography, concealing its true meaning within seemingly meaningless text.

==Technical Background==

The vast majority of the project relies on a technique known as data mining: the process of analysing a large data set in order to uncover patterns and correlations within it, thereby creating useful knowledge <ref name=Data/>. For this project, data is acquired from the Interlinear Archive (a digital archive of transcriptions of the Voynich Manuscript) and from other sources of digital texts in known languages. Data mined from the Interlinear Archive is then tested and analysed for specific linguistic properties using various statistical methods.

The Interlinear Archive is the main source of data for the Voynich Manuscript. It was compiled as a machine-readable version of the manuscript based on the work of various transcribers, and each transcription has been converted into the [http://www.voynich.nu/extra/eva.html European Voynich Alphabet (EVA)].

==Aim==

The aim of the research project is to determine possible features and relationships of the Voynich Manuscript by analysing basic linguistic features, and to gain knowledge of these features. This knowledge can aid future investigation of unknown languages and linguistics.

The project does not aim to fully decode or understand the Voynich Manuscript itself. That outcome would be beyond excellent, but it is unreasonable to expect from a single-year project by a small team of student engineers with very little initial knowledge of linguistics.

==Motivation==

The project attempts to find relationships and patterns within unknown text using basic linguistic properties and analyses. The Voynich Manuscript is a prime candidate for such analyses, as there is no accepted translation of any part of the document. The relationships found can help narrow future research and support conclusions about specific features of the unknown language within the Voynich Manuscript.

Knowledge produced from the relationships and patterns of languages and linguistics can be used to further today's linguistic computation and encryption/decryption technologies <ref name=Amancio/>.

While some may question why an unknown text is of any importance to engineering, a more general view shows that the project deals with data acquisition and analysis. These are integral to a wide array of businesses, including engineering, in applications ranging from basic services, such as survey analysis, to more complex automated systems.

==Significance==

Many computational linguistic and encryption/decryption technologies are in use today. As mentioned under Motivation, knowledge produced from this research can help advance these technologies in a range of applications <ref name=Amancio/>. These include, but are not limited to, information retrieval systems, search engines, machine translators, automatic summarizers, and social networks <ref name=Amancio/>.

Widely used technologies that could benefit from the research include:
*Turn-It-In (authorship/plagiarism detection)
*Google (search engines)
*Google Translate (machine translation)

==Approach==

The project was broken down into several phases. Each phase considered a specific feature of the Voynich Manuscript and/or linguistics and built on what was learned in the previous phase. Where possible, the results were compared with, and used to complement, the results of previous research.

Every phase was implemented in code and therefore included testing, as all code had to be verified before its results could be considered accurate.

Code was written in C++ and MATLAB, as the project members had experience with these languages. MATLAB, in particular, was chosen because it provides a simple, easy-to-use mathematical toolbox that is readily available on the University systems. Other programming languages could be used where they were found to be more suitable.

BASH scripts were also used for fast sorting of the Interlinear Archive files.

===Phase 1 - Characterization of the Text===

Characterization of the text involved determining the first-order statistics of the Voynich Manuscript. This first required pre-processing the Interlinear Archive into a simpler machine-readable format.
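The pre-processing step is not described in detail here. The following is therefore only a minimal Python sketch of turning Interlinear Archive lines into word tokens; it is not the project's MATLAB, C++ or BASH code. It makes several assumptions about the archive format. Each transcription line is assumed to begin with a locus tag such as <code>&lt;f1r.P1.1;H&gt;</code>, with the code after the semicolon identifying the transcriber. <code>.</code> and <code>,</code> are assumed to separate words, <code>{...}</code> to enclose inline comments, and lines starting with <code>#</code> to be comments. <code>!</code>, <code>%</code>, <code>-</code> and <code>=</code> are assumed to be layout markers rather than text. The function names <code>parse_line</code> and <code>preprocess</code> are hypothetical.

```python
import re

# Assumed locus tag, e.g. "<f1r.P1.1;H>": page, unit/line, then transcriber code.
LOCUS = re.compile(r"^<(?P<page>[^.>]+)\.(?P<line>[^;>]+);(?P<transcriber>[^>]+)>\s*(?P<text>.*)$")
COMMENT = re.compile(r"\{[^}]*\}")  # assumed inline comment syntax: {...}


def parse_line(raw, transcriber="H", drop_chars="!%=-"):
    """Return (page, words) for one archive line, or None if the line is skipped."""
    raw = raw.strip()
    if not raw or raw.startswith("#"):
        return None  # blank line or comment line
    m = LOCUS.match(raw)
    if m is None or m.group("transcriber") != transcriber:
        return None  # no locus tag, or another transcriber's version of the line
    text = COMMENT.sub("", m.group("text"))
    text = text.translate(str.maketrans("", "", drop_chars))  # remove assumed layout markers
    words = [w for w in re.split(r"[.,]+", text) if w]  # '.' and ',' assumed to separate words
    return m.group("page"), words


def preprocess(lines, transcriber="H"):
    """Collect one transcriber's word tokens, grouped by page, in input order."""
    pages = {}
    for raw in lines:
        parsed = parse_line(raw, transcriber)
        if parsed is not None:
            page, words = parsed
            pages.setdefault(page, []).extend(words)
    return pages


# Sample lines written in the assumed format.
sample = [
    "# comment line",
    "<f1r.P1.1;H>      fachys.ykal.ar.ataiin.shol.shory{a comment}-",
    "<f1r.P1.1;C>      fachys.ykal.ar.ataiin.shol.shory-",
    "<f1r.P1.2;H>      sory.ckhar.or,y.kair=",
]
print(preprocess(sample))
```

The archive interleaves several transcriptions of each line. Keeping a single transcriber (here H, chosen arbitrarily) presumably avoids counting the same line more than once.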

The pre-processed files were then characterized using MATLAB code by determining:
*Unique word tokens
*Unique character tokens
*Frequency of word tokens
*Frequency of character tokens
*Word token length frequency
*Character tokens that only appear at the start, end, or middle of word tokens

A 'unique' token is one that differs from all of the other tokens. For character tokens, a difference means the token itself is visually (machine-readably) different from another. For word tokens, a difference is attributed to the structure of the word.
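The first-order statistics listed above can be sketched in a few lines of Python. This is an illustration only, not the project's MATLAB code. It assumes that a word token is a plain string, that a character token is a single character, and that uniqueness means plain string equality. A one-character word is counted as both the start and the end of a word. The function name <code>characterize</code> is hypothetical.

```python
from collections import Counter


def characterize(words):
    """First-order statistics of a list of word tokens (see the list above)."""
    word_freq = Counter(words)                        # unique word tokens and their frequencies
    char_freq = Counter(c for w in words for c in w)  # unique character tokens and their frequencies
    length_freq = Counter(len(w) for w in words)      # word token length frequency

    # Record every position (start, middle, end) in which each character token occurs.
    positions = {}
    for w in words:
        last = len(w) - 1
        for i, c in enumerate(w):
            seen = positions.setdefault(c, set())
            if i == 0:
                seen.add("start")
            if i == last:
                seen.add("end")        # a one-character word counts as start and end
            if 0 < i < last:
                seen.add("middle")

    # Character tokens seen in exactly one position.
    only = {p: sorted(c for c, s in positions.items() if s == {p})
            for p in ("start", "middle", "end")}
    return word_freq, char_freq, length_freq, only


word_freq, char_freq, length_freq, only = characterize("the cat sat on the mat".split())
print(len(word_freq), dict(length_freq), only["start"])  # → 5 {3: 5, 2: 1} ['c', 'm', 'o', 's']
```

The same function can be run unchanged on a Voynich page and on a known-language text, which is what makes the statistics comparable.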

The resulting statistics were then compared with other known languages by running the same code on various translations of the Universal Declaration of Human Rights. Unfortunately, the Universal Declaration of Human Rights is a comparatively small document, which limits the results. However, it gives a basis for follow-up research into languages that may be related to the Voynich Manuscript based on first-order statistics.

===Phase 2 - English Investigation===

The English investigation looks into the elementary structure of English text. Specifically, it examines how the English alphabet is represented and how alphabetical tokens can be extracted from an English text using statistics. This is done to gain a better understanding of how character tokens are used within text, and of how statistics relating to these tokens can be used to characterize each one.

Initially, a corpus of English texts was passed through the Phase 1 characterization code to determine the first-order statistics of each text. These statistics were compared to gain a basic understanding of how each type of token can be represented statistically and how the statistics differ between texts. The token types include alphabetical, numerical, and punctuation tokens.

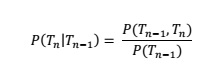

The characterization code was then extended to include character token bigrams to further define the differences between character tokens. Bigrams give the conditional probability, P, of a token, T<sub>n</sub>, given the preceding token, T<sub>n-1</sub>. This is given by the following formula:

[[File:Bigram Prob.jpg]]
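The formula above is only shown as an image. Assuming it is the usual maximum-likelihood estimate, P(T<sub>n</sub> | T<sub>n-1</sub>) = C(T<sub>n-1</sub>T<sub>n</sub>) / C(T<sub>n-1</sub>), it can be sketched in Python as below. Here C counts occurrences, and the denominator counts the bigrams that begin with T<sub>n-1</sub>. The page does not say whether bigrams across word boundaries were counted, so this sketch (not the project's MATLAB code) only counts pairs inside each word token. <code>bigram_probabilities</code> is a hypothetical name.

```python
from collections import Counter, defaultdict


def bigram_probabilities(words):
    """Estimate P(Tn | Tn-1) from the character bigrams inside each word token."""
    pair_counts = Counter()
    for w in words:
        pair_counts.update(zip(w, w[1:]))  # adjacent pairs (Tn-1, Tn)

    # Number of bigrams that begin with each preceding token Tn-1.
    prev_counts = Counter()
    for (prev, _), n in pair_counts.items():
        prev_counts[prev] += n

    probs = defaultdict(dict)
    for (prev, cur), n in pair_counts.items():
        probs[prev][cur] = n / prev_counts[prev]
    return dict(probs)


p = bigram_probabilities(["the", "then", "than", "this"])
print(p["h"])  # → {'e': 0.5, 'a': 0.25, 'i': 0.25}
```

For each preceding token the probabilities sum to 1. This makes tokens with few possible successors, such as "t" (always followed by "h" in this sample), easy to spot.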

In general, the alphabet extractor used simple rules to determine whether a character token belongs to a specific category. These include:

* Does the character token appear only (or predominantly) at the end of a word token?

When a large sample text or corpus is used, tokens that appear only at the end of a word token are generally punctuation characters. However, depending on the type of text, some punctuation characters may appear before another punctuation character (for example, a full stop followed by a closing quotation mark), hence the majority was taken into account.

* Does the character token appear only at the start of a word token, and does it have a high relative frequency compared to other tokens that appear only at the start of a word token?

In English, character tokens that appear only at the start of a word token are generally upper-case letters. Some punctuation characters may also appear only at the start of a word token, hence the relative frequencies were also taken into account.

* Does the character token have a high relative frequency?

Tokens with a high relative frequency are generally alphabet characters, the most frequent being the vowels and commonly used consonants.

* Does the character token have a high bigram 'validity'?

Over a large English corpus, alphabetical characters generally appear alongside many more distinct tokens than non-alphabetical characters do. A bigram is considered valid if it occurs with a frequency greater than zero, so a token's validity reflects how many valid bigrams it forms with other tokens. Low validity suggests the character token is probably not an alphabet character.
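The four rules above can be combined into a rough classifier. The Python sketch below is illustrative only and is not the project's extractor. The thresholds (<code>end_share</code>, <code>freq_share</code>, <code>min_validity</code>), the order in which the rules are applied, and the label names are all assumptions, not values taken from the project. A token's validity is taken to be the number of distinct tokens it forms a bigram with, on either side, inside a word.

```python
from collections import Counter


def classify_tokens(words, end_share=0.9, freq_share=0.02, min_validity=5):
    """Label each character token using the four rules above (illustrative thresholds)."""
    char_freq = Counter(c for w in words for c in w)
    total = sum(char_freq.values())
    at_end = Counter(w[-1] for w in words if w)      # how often each token ends a word
    after_start = {c for w in words for c in w[1:]}  # tokens seen somewhere other than the start

    # Validity: distinct tokens that each token forms a (non-zero) bigram with, on either side.
    partners = {c: set() for c in char_freq}
    for w in words:
        for a, b in zip(w, w[1:]):
            partners[a].add(b)
            partners[b].add(a)

    labels = {}
    for c, n in char_freq.items():
        share = n / total
        if at_end[c] / n >= end_share:
            labels[c] = "punctuation"          # rule 1: (almost) always ends a word
        elif c not in after_start and share >= freq_share:
            labels[c] = "upper-case letter"    # rule 2: start-only and reasonably frequent
        elif share >= freq_share or len(partners[c]) >= min_validity:
            labels[c] = "letter"               # rules 3 and 4: frequent or high validity
        else:
            labels[c] = "other"                # rare and low validity
    return labels


words = "The cat ate the rat. That bat sat, Then cats ran. scab crab".split()
labels = classify_tokens(words)
print(sorted(c for c, label in labels.items() if label == "letter"))
# → ['a', 'b', 'c', 'e', 'h', 'n', 'r', 's', 't']
```

On this small, made-up sample the rules work as intended. However, any letter that happens to end every word it appears in would be labelled punctuation, which illustrates why larger samples are needed.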

It was expected that the probabilities of the different tokens, along with the first-order statistics obtained through the Phase 1 code, would show definitive differences between alphabetical, numerical, and punctuation tokens. However, it was found that these statistics could differ significantly depending on the writing style and domain of a text, so the results depend on the input text.

It was shown that it is possible to extract and categorize the character tokens of a text to a certain extent. However, when raw statistics are used, the results are generally biased towards the type of input text and language. Larger sample sizes and additional statistics, along with knowledge of the specific language, may be needed to increase the accuracy and precision of alphabet extraction.

Code will be written that takes these statistical findings into account, to attempt to extract the English alphabet from any given English text with no prior knowledge of English itself. This will then be used to examine the Voynich Manuscript for any character token relationships.

===Phase 3 - Morphology Investigation===

Linguistic morphology, broadly speaking, is the study of the internal structure of words, particularly the meaningful segments that make up a word <ref name=Aronoff/>. Specifically, Phase 3 looks into the possibility of affixes within the Voynich Manuscript from a strictly concatenative perspective.

Previous research has found the possibility of morphological structure within the Voynich Manuscript <ref name=Reddy/>. In this small experiment, the most common affixes in English are found and compared with those found within the Voynich Manuscript. Because the word structure is unknown and the Voynich is relatively small, this experiment defines an affix as a sequence of characters that appears at a word edge, i.e. at the start (prefix) or end (suffix) of a word. Using this basic definition, a simple ranking of affixes of various lengths could reveal potential relationships between the Voynich and other known languages.

For a given sequence length, the code extracts and counts each possible character sequence within a word. These sequences are then ranked by frequency and compared across all languages tested. Comparing the relative frequencies and their difference ratios shows whether any of the tested languages may share a relationship with the Voynich Manuscript.
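A minimal Python sketch of the affix ranking, using the word-edge definition above, is given below. It is not the project's MATLAB code, and <code>rank_affixes</code> is a hypothetical name. The sketch skips words no longer than the affix length so that a whole word is never counted as its own affix; that is an assumption. The "difference ratios" used for the cross-language comparison are not defined on this page, so that step is not shown.

```python
from collections import Counter


def rank_affixes(words, length):
    """Rank word-edge character sequences (prefixes and suffixes) of one length.

    Returns two lists of (affix, relative frequency), most frequent first.
    Words no longer than `length` are skipped so a whole word is never
    counted as its own affix (an assumption of this sketch).
    """
    prefixes, suffixes = Counter(), Counter()
    for w in words:
        if len(w) > length:
            prefixes[w[:length]] += 1
            suffixes[w[-length:]] += 1

    def relative(counts):
        total = sum(counts.values())
        return [(affix, n / total) for affix, n in counts.most_common()]

    return relative(prefixes), relative(suffixes)


pre, suf = rank_affixes(["walking", "talking", "walked", "talked", "jumped", "undone", "unkind"], 2)
print(suf[0][0], pre[0][0])  # → ed wa
```

Running <code>rank_affixes</code> with the same length on the Voynich words and on each corpus text gives ranked lists of relative frequencies that can be compared directly.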

The results here assume concatenative affixes within the Voynich Manuscript and the other languages. As many languages show, morphology is full of language-dependent ambiguities, so these results can only provide baseline experimental data and will require further research.

The code is written in MATLAB so that it can be run on the Interlinear Archive. It is also run on English and other language texts to provide a quantitative comparison between the languages.

===Phase 4 - Illustration Investigation===

The illustration investigation looks into the illustrations contained in the Voynich Manuscript, examining the possible relationships between the text, its words and the illustrations. The sections of the Voynich Manuscript are defined by the drawings and illustrations on their pages. Almost all sections contain text with illustrations, the exception being the Recipes section.

In Phase 4, the code is intended to perform the following functions:
*Find the unique word tokens in each page and section
*Determine the location of a given word token
*Determine the frequency of a given word token

The resulting statistics can then be used in the investigation. However, it should be noted that the manuscript may have been written by multiple authors and in multiple languages <ref name=Currier/>. Sections of the manuscript will therefore need to be investigated separately, particularly those written in different languages, as well as the manuscript as a whole.
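The three Phase 4 functions could be built on a word-location index, as in the Python sketch below. This is not the project's MATLAB code. The page identifiers, the page-to-section mapping and the function name <code>build_index</code> are hypothetical; in practice the mapping would presumably come from the folio numbers of each section. Per-section counts are kept separate so that sections can be investigated on their own.

```python
from collections import Counter, defaultdict


def build_index(pages, sections):
    """Index word tokens by location and count them per section.

    pages:    {page_id: [word, ...]}, e.g. the output of a pre-processing step
    sections: {page_id: section_name}, a page-to-section mapping
    """
    locations = defaultdict(list)          # word -> [(page_id, position on page), ...]
    section_counts = defaultdict(Counter)  # section -> word frequencies in that section
    for page, words in pages.items():
        section = sections.get(page, "unknown")
        for i, w in enumerate(words):
            locations[w].append((page, i))
            section_counts[section][w] += 1
    return locations, section_counts


# Hypothetical pages and section labels, for illustration only.
pages = {"p1": ["fachys", "ykal", "ar", "ykal"], "p2": ["okeey", "ar"]}
sections = {"p1": "Herbal", "p2": "Astronomical"}
locations, section_counts = build_index(pages, sections)

print(len(locations["ar"]), locations["ar"])  # → 2 [('p1', 2), ('p2', 1)]
print(sorted(section_counts["Herbal"]))       # → ['ar', 'fachys', 'ykal']
```

With this index, the unique word tokens of a page are <code>set(pages[page_id])</code> and those of a section are the keys of <code>section_counts[section]</code>. The frequency of a word is <code>len(locations[word])</code>.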

===Phase 5 - Collocations===

Collocations have no universally accepted formal definition <ref name=Melcuk/>. Broadly, they are words within a language that co-occur more often than would be expected by chance <ref name=Smadja/>. Natural languages are full of collocations <ref name=Smadja/>, and the collocations found can vary significantly depending on the metric used to define a collocation, such as length or pattern <ref name=ThanopoulosFakotakisKokkinakis/>.

In this experiment, a collocation is defined as two words occurring directly next to each other. Collocations have varying significance in different languages. Extracting and comparing all such collocations within the Voynich Manuscript and the corpus could therefore reveal a relationship based on word association, or provide evidence for the hoax hypothesis.

Collocations are first ranked by frequency, from most to least frequent, and then by a Pointwise Mutual Information (PMI) metric. PMI is a widely accepted method of quantifying the strength of word association <ref name=ThanopoulosFakotakisKokkinakis/> <ref name=WermterHahn/> and is mathematically defined as:

[[File:PMI.png]]

where P(x,y) is the probability of the two words appearing together in the text, and P(x) and P(y) are the probabilities of each word appearing within the text.
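A Python sketch of both rankings for the two-adjacent-words definition is given below; it is not the project's MATLAB code. It assumes simple relative-frequency estimates: P(x,y) is taken over all adjacent word pairs, and P(x) and P(y) over all words. It also uses a base-2 logarithm, i.e. PMI(x,y) = log<sub>2</sub>(P(x,y) / (P(x)P(y))). The formula image above may use a different base, which would only rescale the scores. The function name <code>collocations</code> is hypothetical.

```python
import math
from collections import Counter


def collocations(words):
    """Rank adjacent word pairs by frequency and by PMI.

    Assumes P(x,y) = count(x,y) / number of adjacent pairs and
    P(x) = count(x) / number of words; uses a base-2 logarithm.
    """
    if len(words) < 2:
        return [], []
    word_counts = Counter(words)
    pair_counts = Counter(zip(words, words[1:]))  # two words directly next to each other
    n_words, n_pairs = len(words), len(words) - 1

    by_freq = pair_counts.most_common()
    pmi = {}
    for (x, y), n in pair_counts.items():
        p_xy = n / n_pairs
        p_x = word_counts[x] / n_words
        p_y = word_counts[y] / n_words
        pmi[(x, y)] = math.log2(p_xy / (p_x * p_y))
    by_pmi = sorted(pmi.items(), key=lambda item: item[1], reverse=True)
    return by_freq, by_pmi


by_freq, by_pmi = collocations("new york is big and new york is busy".split())
print(by_freq[0])    # → (('new', 'york'), 2)
print(by_pmi[0][0])  # → ('big', 'and')
```

In this toy example, "big and" is seen only once but still outranks the repeated "new york" under PMI. PMI is known to favour rare pairs, which is one reason why frequency and PMI rankings can give different results.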

==Results==

For details and discussion of the results, please see [https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cracking_the_Voynich_Code_2015_-_Final_Report our final report]. Concluding statements on these results can be found below.

==Deliverables==

The deliverables of the project are summarized in the table below, along with their respective deadlines.

{| border="1" class="wikitable"
|+
! Deliverable
! Deadline
|-
! <div style="text-align: left;">Proposal Seminar</div>
| 31st of March, 2015
|-
! <div style="text-align: left;">Research Proposal Draft</div>
| 17th of April, 2015
|-
! <div style="text-align: left;">Research Proposal and Progress Report</div>
| 5th of June, 2015
|-
! <div style="text-align: left;">Final Seminar</div>
| 13th of October, 2015
|-
! <div style="text-align: left;">Thesis</div>
| 21st of October, 2015
|-
! <div style="text-align: left;">Wiki Report</div>
| Week 11, Semester 2
|-
! <div style="text-align: left;">Expo Poster</div>
| 23rd of October, 2015
|-
! <div style="text-align: left;">Expo Presentation</div>
| 26th and 27th of October, 2015
|-
! <div style="text-align: left;">YouTube Video</div>
| Week 13, Semester 2
|-
! <div style="text-align: left;">USB Flash Drive of all Code and Work</div>
| Week 13, Semester 2
|-
|}

==Concluding Statements==

Using simple statistical measures of written texts, it is possible to indicate potential linguistic relationships between the Voynich and the linguistic properties of other languages.

*The Voynich appears to follow Zipf's law, suggesting it is a natural language. However, the binomial distribution of its word lengths suggests it may also be a type of code, cipher, or abjad.

*Basic character and bigram frequencies can be used to identify possible alphabet and non-alphabet characters, but they can be influenced by the sample size and writing style of a given text.

*Using a simple affix definition, it was determined that the Voynich may have weak relationships with French, Greek, and Latin. However, this relies on a simple, restrictive definition of affixes, whereas real morphological structure is not necessarily simple or as restrictive.

*Similarly, a simple definition of collocations shows possible relationships between the Voynich and Hebrew. As with the affixes, this relies on a simple definition. It also showed that, even with the same definition, the metric used to rank the collocations could greatly change the relationships between languages, although the Voynich consistently had a very low word association measure. This again suggests that the Voynich may be a type of code, cipher, or even a hoax.
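The Zipf's law observation in the first point can be checked roughly by fitting a straight line to log frequency against log rank. Zipf's law predicts frequency roughly proportional to 1/rank, which gives a slope near -1. The Python sketch below is a quick least-squares check under that assumption. It is not necessarily how the project tested Zipf's law, and <code>zipf_slope</code> is a hypothetical name.

```python
import math
from collections import Counter


def zipf_slope(words):
    """Least-squares slope of log(frequency) against log(rank) for word tokens.

    Zipf's law predicts frequency roughly proportional to 1/rank, i.e. a
    slope close to -1. This is a quick check, not a statistical test.
    """
    freqs = sorted(Counter(words).values(), reverse=True)
    if len(freqs) < 2:
        raise ValueError("need at least two distinct word tokens")
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Synthetic text whose word counts are exactly 60/rank: 60, 30, 20, 15, 12, 10.
words = [f"w{r}" for r in range(1, 7) for _ in range(60 // r)]
print(round(zipf_slope(words), 6))  # → -1.0
```

Real texts only approximate this line, and the fit is usually dominated by the many rare words, so the slope is best read as a rough indicator.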

Without much more in-depth research and testing, the relationships found using simple statistical measures are not conclusive. The results for each linguistic property tested showed features that could relate to multiple languages or hypotheses, but they can still be used to narrow down possible options for future research.

==Future Pathways==

With a project such as this, further research will always be required unless the Voynich Manuscript is finally 'cracked'. This may include:

*Investigating codes and ciphers from a similar time period to determine whether there are any possible relationships.
*Improving the alphabet extraction algorithm by incorporating other languages and statistics where possible.
*Investigating stylometry: writing style can affect statistics, so is there a particular writing style or author that the Voynich may relate to?
*Expanding the morphology investigation to use a more realistic and less restrictive definition, possibly including word stem extraction.
*Attempting a different style of collocation or using a different metric for comparison.

It may be advisable to focus on fewer areas so that research and testing can go further in depth, although this will depend on how well ideas translate into results.

=Resources=

*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cracking_the_Voynich_code_2015 Cracking the Voynich Code 2015]
*[https://www.eleceng.adelaide.edu.au/personal/dabbott/wiki/index.php/Cracking_the_Voynich_Code_2015_-_Final_Report Cracking the Voynich Code 2015 - Final Report]

=References=

{{Reflist|colwidth=30em|refs=

<ref name=Stolte> D. Stolte, “Experts determine age of book 'nobody can read',” 10 February 2011. [Online]. Available: http://phys.org/news/2011-02-experts-age.html. [Accessed 12 March 2015].</ref>

<ref name=Reddy> S. Reddy and K. Knight, “What We Know About The Voynich Manuscript,” in LaTeCH '11: Proceedings of the 5th ACL-HLT Workshop on Language Technology for Cultural Heritage, Social Sciences, and Humanities, pp. 78-86, 2011.</ref>

<ref name=Landini> G. Landini, “Evidence Of Linguistic Structure In The Voynich Manuscript Using Spectral Analysis,” Cryptologia, pp. 275-295, 2001.</ref>

<ref name=Schinner> A. Schinner, “The Voynich Manuscript: Evidence of the Hoax Hypothesis,” Cryptologia, pp. 95-107, 2007.</ref>

<ref name=Amancio> D. R. Amancio, E. G. Altmann, D. Rybski, O. N. Oliveira Jr. and L. d. F. Costa, “Probing the Statistical Properties of Unknown Texts: Application to the Voynich Manuscript,” PLoS ONE, vol. 8, no. 7, pp. 1-10, 2013.</ref>

<ref name=Data> S. Chakrabarti, M. Ester, U. Fayyad, J. Gehrke, J. Han, S. Morishita, G. Piatetsky-Shapiro and W. Wang, “Data Mining Curriculum: A Proposal (Version 1.0),” 12 April 2015. [Online]. Available: http://www.kdd.org/curriculum/index.html.</ref>

<ref name=Currier> P. Currier, “New Research on the Voynich Manuscript: Proceedings of a Seminar,” 30 November 1976. [Online]. Available: http://www.voynich.nu/extra/curr_main.html.</ref>

<ref name=Aronoff> M. Aronoff and K. Fudeman, “What is Morphology,” vol. 8, John Wiley & Sons, pp. 1-25, 2011.</ref>

<ref name=Melcuk> I. A. Mel’čuk, “Collocations and Lexical Functions,” in Phraseology: Theory, Analysis, and Applications, Oxford: Clarendon Press, 1998, pp. 23-53.</ref>

<ref name=Smadja> F. Smadja, “Retrieving Collocations from Text: Xtract,” Computational Linguistics, vol. 19, no. 1, pp. 143-177, 1993.</ref>

<ref name=WermterHahn> J. Wermter and U. Hahn, “You Can't Beat Frequency (Unless You Use Linguistic Knowledge) - A Qualitative Evaluation of Association Measures for Collocation and Term Extraction,” in ACL-44: Proceedings of the 21st International Conference on Computational Linguistics and the 44th Annual Meeting of the Association for Computational Linguistics, Stroudsburg, 2006.</ref>

<ref name=ThanopoulosFakotakisKokkinakis> A. Thanopoulos, N. Fakotakis and G. Kokkinakis, “Comparative Evaluation of Collocation Extraction Metrics,” LREC, vol. 2, pp. 620-625, 2002.</ref>

}}

=Back=

*[https://www.eleceng.adelaide.edu.au/students/wiki/projects/index.php/Main_Page Projects Main Page]

Latest revision as of 12:12, 23 October 2015

This wiki page gives a small overview and summary of the project, briefly detailing the project information, approach, deliverables, concluding statements on results and future pathways. For further details on the results and analyses please visit our final report page.

Contents

Introduction

An unknown script that has been a mystery for several decades, powerful computational tools and access to a large array of data on the internet. This project looks at the famous linguistic and cryptologic mystery, The Voynich Manuscript, and attempts to find possible relationships to other languages from a basic statistical perspective. Utilizing a digital transcription of the Voynich, statistics are data-mined and analysed in various techniques and compared with any previous research available on the internet and a corpus of texts from various languages.

Team

Supervisors

Honours Students

Project Information

Background

The Voynich Manuscript is a document written in an unknown script that has been carbon dated back to the early 15th century [1] and believed to be created within Europe [2]. Named after Wilfrid Voynich, whom purchased the folio in 1912, the manuscript has become a well-known mystery within linguistics and cryptology. It is divided into several different sections based on the nature of the drawings [3]. These sections are:

The folio numbers and examples of each section are outlined in appendix section A.2. In general, the Voynich Manuscript has fallen into three particular hypotheses [4]. These are as follows:

- Cipher Text: The text is encrypted.

- Plain Text: The text is in a plain, natural language that is currently unidentified.

- Hoax: The text has no meaningful information.

Note that the manuscript may fall into more than one of these hypotheses [4]. It may be that the manuscript is written through steganography, the concealing of the true meaning within the possibly meaningless text.

Technical Background

The vast majority of the project relies on a technique known as data mining. Data mining is the process of taking and analysing a large data set in order to uncover particular patterns and correlations within said data thus creating useful knowledge [5]. In terms of the project, data shall be acquired from the Interlinear Archive, a digital archive of transcriptions from the Voynich Manuscript, and other sources of digital texts in known languages. Data mined from the Interlinear Archive will be tested and analysed for specific linguistic properties using varying statistical methods.

The Interlinear Archive, as mentioned, will be the main source of data in regards to the Voynich Manuscript. It has been compiled to be a machine readable version of the Voynich Manuscript based on transcriptions from various transcribers. Each transcription has been translated into the European Voynich Alphabet (EVA).

Aim

The aim of the research project is to determine possible features and relationships of the Voynich Manuscript through the analyses of basic linguistic features and to gain knowledge of these linguistic features. These features can be used to aid in the future investigation of unknown languages and linguistics.

The project does not aim to fully decode or understand the Voynich Manuscript itself. This outcome would be beyond excellent but is unreasonable to expect in a single year project from a small team of student engineers with very little initial knowledge on linguistics.

Motivation

The project attempts to find relationships and patterns within unknown text through the usage of basic linguistic properties and analyses. The Voynich Manuscript is a prime candidate for analyses as there is no known accepted translations of any part within the document. The relationships found can be used help narrow future research and to conclude on specific features of the unknown language within the Voynich Manuscript.

Knowledge produced from the relationships and patterns of languages and linguistics can be used to further the current linguistic computation and encryption/decryption technologies of today [6].

While some may question as to why an unknown text is of any importance to Engineering, a more general view of the research project shows that it deals with data acquisition and analyses. This is integral to a wide array of businesses, including engineering, which can involve a basic service, such as survey analysis, to more complex automated system.

Significance

There are many computational linguistic and encryption/decryption technologies that are in use today. As mentioned in section 1.3, knowledge produced from this research can help advance these technologies in a range of different applications [6]. These include, but are not limited to, information retrieval systems, search engines, machine translators, automatic summarizers, and social networks [6].

Particular technologies, that are widely used today, that can benefit from the research, include:

- Turn-It-In (Authorship/Plagiarism Detection)

- Google (Search Engines)

- Google Translate (Machine Runnable Language Translations)

Approach

The project was broken down into several phases where each phase considered a specific feature of the Voynich Manuscript and/or linguistics while attempting to build onto what was learned in the previous phase. The results found were used to compare and complement other results from previous research where possible.

All phases were implemented in code and therefore included testing, as all code must be verified before its results can be considered accurate.

Code was written in C++ and MATLAB, as the project members had experience with both languages. MATLAB, in particular, was chosen because it provides a simple, easy-to-use mathematical toolbox that is readily available on the University systems. Other programming languages could be used if found to be more suitable.

BASH scripts were also used for fast sorting of the Interlinear Archive files.

Phase 1 - Characterization of the Text

Characterization of the text involved determining the first-order statistics of the Voynich Manuscript. The first step was to pre-process the Interlinear Archive into a simpler machine-readable format.

The pre-processed files were then characterized with MATLAB code by determining:

- Unique word tokens

- Unique character tokens

- Frequency of word tokens

- Frequency of character tokens

- Word token length frequency

- Character tokens that only appear at the start, end, or middle of word tokens

A token is considered 'unique' if it is different from every other token. For character tokens, a difference means the token itself is visually (machine-readably) different from another. For word tokens, a difference is attributed to the structure of the word.

The resulting statistics were then compared with those of other known languages by running the same code on various translations of the Universal Declaration of Human Rights. Unfortunately, the Universal Declaration of Human Rights is, by comparison, a small document, which limits the results. However, it gives a basis for follow-up research into languages that may be related to the Voynich Manuscript based on first-order statistics.

Phase 2 - English Investigation

The English investigation looks into the elementary structure of English text. It specifically examines the representation of the English alphabet and how alphabetical tokens can be extracted from an English text using statistics. The aim is to gain a better understanding of how character tokens are used within text, and of how data and statistics relating to these tokens can be used to characterize each one.

Initially, a corpus of English texts was passed through the characterization code of Phase 1 to determine the first-order statistics of each text. These were compared to gain a basic understanding of how each token can be statistically represented and how these statistics differ between texts. The tokens considered include alphabetical, numerical, and punctuation tokens.

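The characterization code was then expanded to include character-token bigrams, to further define the differences between character tokens. Bigrams give the conditional probability, P, of a token, T_n, given the preceding token, T_{n-1}. This is given by the following formula:

$$P(T_n \mid T_{n-1}) = \frac{C(T_{n-1}\,T_n)}{C(T_{n-1})}$$

where C(·) denotes the number of times a token or token sequence occurs in the text.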

In general, the alphabet extractor used simple rules to determine whether a character token belongs to a specific category. These include:

- Does the character token appear only (or in the vast majority of cases) at the end of a word token?

In a large sample text or corpus, tokens that appear only at the end of a word token are generally punctuation characters. However, depending on the type of text, some punctuation characters may appear before another punctuation character, hence a majority criterion was used.

- Does the character token only appear at the start of a word token and does it have a high relative frequency when compared to others only appearing at the start of a word token?

In English, character tokens that only appear at the start of a word token are generally upper-case alphabet characters. Some punctuation characters may also only appear at the start of a word token, hence the relative frequencies were also taken into account.

- Does the character token have a high relative frequency?

Tokens with a high relative frequency are generally alphabet characters, with the highest consisting of the vowels and commonly used consonants.

- Does the character token have a high bigram ‘validity’?

Over a large English corpus, alphabetical characters generally appear alongside many more distinct tokens than non-alphabetical characters do. A bigram is considered valid if it occurs with a frequency greater than zero, so a token with high validity forms valid bigrams with many different tokens. Low validity suggests the character token is probably a non-alphabet character.

It was expected that the probabilities of the different tokens, along with the first-order statistics obtained through the Phase 1 code, would show definitive differences between alphabetical, numerical, and punctuation tokens. However, it was found that these statistics could differ significantly depending on the writing style and domain of a text. The results therefore depend on the input text.

It was shown that it is possible to extract and categorize the character tokens of a text to a certain extent. However, when raw statistics are used, the results are generally biased towards the type of input text and language. Larger sample sizes and additional statistics, along with knowledge of the specific language, may need to be included to increase the accuracy and precision of alphabet extraction.

Code will be written that takes these statistical findings into account to attempt to extract the English alphabet from any given English text with no prior knowledge of English itself. This will be used to examine the Voynich Manuscript to search for any character token relationships.

Phase 3 - Morphology Investigation

Linguistic morphology, broadly speaking, deals with the study of the internal structure of words, particularly the meaningful segments that make up a word [7]. Specifically, Phase 3 looks into the possibility of affixes within the Voynich Manuscript from a strictly concatenative perspective.

Previous research has found the possibility of morphological structure within the Voynich Manuscript [2]. Within this small experiment, the most common affixes in English are found and compared with those found within the Voynich Manuscript. Due to the unknown word structure and small relative size of the Voynich, this experiment defines an affix as a sequence of characters that appear at the word edges. Using this basic affix definition, a simple ranking of the affixes of various lengths could reveal potential relationships between the Voynich and other known languages.

The core of the code extracts and counts each possible character sequence of a specific length within a word. These sequences are then ranked by frequency and compared across all languages tested. By comparing the relative frequencies and their difference ratios, we can determine whether any of the tested languages share a possible relationship with the Voynich Manuscript.

The results here assume concatenative affixes within the Voynich Manuscript and the other languages. As many languages show, morphology is full of language-dependent ambiguities. As such, the results here can only give very baseline experimental data and will require further research.

The code was written in MATLAB so that it can be run on the Interlinear Archive. It can also be run on English and other-language texts, to provide a quantitative comparison of the differing languages.

Phase 4 - Illustration Investigation

The illustration investigation looks into the illustrations contained in the Voynich Manuscript and examines the possible relationship between texts, words and illustrations. The different sections of the Voynich Manuscript are defined by the drawings and illustrations on their pages. Almost all sections contain text with illustrations, the exception being the recipes section.

In Phase 4, the code is intended to perform the following functions:

- Finding unique word tokens in each page and section

- Determining the location of a given word token

- Determining the frequency of a given word token

The resulting statistics from the code can then be used in the investigation. However, it should be noted that the manuscript may have been written by multiple authors and in multiple languages [8]. Sections of the manuscript will need to be investigated separately, particularly those written in different languages, along with the manuscript as a whole.

Phase 5 - Collocations

Collocations have no universally accepted formal definition [9], but the term broadly refers to words within a language that co-occur more often than would be expected by chance [10]. Natural languages are full of collocations [10]. The collocations identified can vary significantly depending on the metric, such as length or pattern, used to define them [11].

In this research experiment, a collocation is defined as two words occurring directly next to each other. Collocations have varying significance in different languages. Extracting and comparing all possible collocations within the Voynich Manuscript and the corpus could therefore reveal a relationship based on word association, or provide evidence for the possibility of a hoax.

Collocations are first ranked by frequency, from most to least frequent, and then by a Pointwise Mutual Information (PMI) metric. PMI is a widely accepted method of quantifying the strength of word association [11] [12] and is mathematically defined as:

$$\mathrm{PMI}(x,y) = \log_2 \frac{P(x,y)}{P(x)\,P(y)}$$

where P(x,y) is the probability of the two words x and y appearing together (here, directly next to each other) in the text, and P(x) and P(y) are the probabilities of each word appearing in the text.

Results

For details and discussions of the results please visit our report. Concluding statements of these results can be found below.

Deliverables

The project deliverables and their respective deadlines are summarized in the table below.

| Deliverable | Deadline |
|---|---|
| Proposal Seminar | 31st of March, 2015 |
| Research Proposal Draft | 17th of April, 2015 |
| Research Proposal and Progress Report | 5th of June, 2015 |
| Final Seminar | 13th of October, 2015 |
| Thesis | 21st of October, 2015 |
| Wiki Report | Week 11, Semester 2 |
| Expo Poster | 23rd of October, 2015 |
| Expo Presentation | 26th and 27th of October, 2015 |
| YouTube Video | Week 13, Semester 2 |
| USB Flash Drive of all Code and Work | Week 13, Semester 2 |

Concluding Statements

Using simple statistical measures of written texts, it is possible to indicate potential linguistic relationships between the Voynich and other languages across several linguistic properties.

- The Voynich appears to follow Zipf’s Law, suggesting it is a natural language, while the binomial distribution of its word lengths suggests it may be a type of code, cipher or abjad.

- Basic character and bigram frequencies can be used to identify possible alphabet and non-alphabet characters but can be influenced by the sample size and the writing-style of a given text.

- Using a simple affix definition, it was determined that the Voynich may have weak relationships with French, Greek and Latin. This, however, relies on a simple, restrictive definition of affixes, whereas real morphological structure is neither necessarily simple nor as restrictive.

- Similarly, a simple definition of collocations shows possible relationships between the Voynich and Hebrew. As with the affixes, this relies on a simple definition. The analysis also showed that, even with the same definition, the metric used to rank the collocations could greatly change the apparent relationships between languages, while the Voynich kept a very low word association measure throughout. This again suggests that the Voynich may be a type of code, cipher or even a hoax.

Without much more in-depth research and testing, the relationships found using simple statistical measures lack conclusive evidence. The results found for each different linguistic property tested showed features that could be related to multiple languages or hypotheses, but this can be used to narrow down possible options for future research.

Future Pathways

With a project such as this, unless the Voynich Manuscript is finally 'cracked', further research will always be required. This may include:

- Investigating codes and ciphers from a similar time period to determine whether there are any possible relationships.

- Improving an alphabet extraction algorithm by incorporating other languages and statistics if possible.

- Investigating stylometry: since writing style can affect statistics, is there a particular writing style or author to which the Voynich may relate?

- Expanding on morphology to use a more realistic and less restrictive definition while possibly including word stem extraction.

- Attempting a different style of collocation or using a different metric for comparison.

It may be advisable to focus on fewer areas so that research and testing can go further in depth, though this will depend on how well ideas translate into possible results.

Resources

- Standard University Computers

- MATLAB Computing Environment

- C++ Programming Language

- BASH Scripts

- Electronic Voynich Transcriptions

- Universal Declaration of Human Rights in various languages

- Various electronic English texts

Further Project Information

References

[1] D. Stolte, “Experts determine age of book 'nobody can read',” 10 February 2011. [Online]. Available: http://phys.org/news/2011-02-experts-age.html. [Accessed 12 March 2015].

[2] S. Reddy and K. Knight, “What We Know About The Voynich Manuscript,” in LaTeCH '11: Proceedings of the 5th ACL-HLT Workshop on Language Technology for Cultural Heritage, Social Sciences, and Humanities, pp. 78-86, 2011.

[3] G. Landini, “Evidence Of Linguistic Structure In The Voynich Manuscript Using Spectral Analysis,” Cryptologia, pp. 275-295, 2001.

[4] A. Schinner, “The Voynich Manuscript: Evidence of the Hoax Hypothesis,” Cryptologia, pp. 95-107, 2007.

[5] S. Chakrabarti, M. Ester, U. Fayyad, J. Gehrke, J. Han, S. Morishita, G. Piatetsky-Shapiro and W. Wang, “Data Mining Curriculum: A Proposal (Version 1.0),” 12 April 2015. [Online]. Available: http://www.kdd.org/curriculum/index.html.

[6] D. R. Amancio, E. G. Altmann, D. Rybski, O. N. Oliveira Jr. and L. d. F. Costa, “Probing the Statistical Properties of Unknown Texts: Application to the Voynich Manuscript,” PLoS ONE, vol. 8, no. 7, pp. 1-10, 2013.

[7] M. Aronoff and K. Fudeman, “What is morphology,” vol. 8, John Wiley & Sons, pp. 1-25, 2011.

[8] P. Currier, “New Research on the Voynich Manuscript: Proceedings of a Seminar,” 30 November 1976. [Online]. Available: http://www.voynich.nu/extra/curr_main.html.

[9] I. A. Mel’čuk, “Collocations and Lexical Functions,” in Phraseology: Theory, Analysis, and Applications, Oxford: Clarendon Press, 1998, pp. 23-53.

[10] F. Smadja, “Retrieving Collocations from Text: Xtract,” Computational Linguistics, vol. 19, no. 1, pp. 143-177, 1993.

[11] A. Thanopoulos, N. Fakotakis and G. Kokkinakis, “Comparative Evaluation of Collocation Extraction Metrics,” LREC, vol. 2, pp. 620-625, 2002.

[12] J. Wermter and U. Hahn, “You Can't Beat Frequency (Unless You Use Linguistic Knowledge) - A Qualitative Evaluation of Association Measures for Collocation and Term Extraction,” in ACL-44: Proceedings of the 21st International Conference on Computational Linguistics and the 44th Annual Meeting of the Association for Computational Linguistics, Stroudsburg, 2006.