Projects:2017s1-176 Smart Mirror with Raspberry Pi

Latest revision as of 14:10, 29 October 2017

Abstract

The future is here! Imagine yourself reading a news feed or checking today's weather while dressing or shaving in front of the mirror. The aim of the project is to build such a device and incorporate cutting-edge features. The Pi will connect to the internet to retrieve real-time information and display it on a monitor. By opening up the monitor and placing a one-way mirror on top of the screen, a smart mirror can be achieved!

Background

There have been many smart mirror projects conducted by others. They have predominantly used the Python and C programming languages to develop the software for their mirrors, and operating systems such as Raspbian and Ubuntu. These mirrors are able to show a basic GUI and information retrieved from websites, such as the weather and links to the latest news. In addition, some smart mirror projects offer voice control, which is supported by Amazon Alexa.

Our primary aim was to incorporate face and voice recognition technology into a smart mirror. To the best of our knowledge, this had not been done in previous smart mirror projects. We also wanted to run Android OS on the Raspberry Pi, and to use each user's usage behaviour to customise their home screen.

Motivation

Such a device is expected to have a beneficial effect on society. It will streamline the lives of its users by letting them view the information that matters to them and by providing easy access to the services they may require. Furthermore, being able to control various household electronic systems from a single device will remove the need for a separate control for each one.

System Description

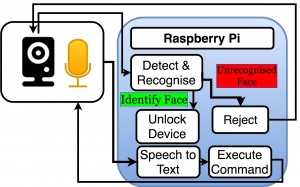

Both voice and image streams are obtained from the webcam. The image stream is processed by the face recognition algorithm. If the face is identified, the device is unlocked; otherwise, access to the smart mirror is denied.

Voice commands are processed once the device is unlocked. The voice stream is converted to text by the application programming interface (API). If the text matches an existing command, that command is executed.
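The unlock-then-match flow described above can be sketched as follows. This is a minimal illustration rather than the project's Android code: the command phrases, the `COMMANDS` table and the `handle_transcript` function are hypothetical names, and the transcript is assumed to arrive as a plain string from the speech-to-text API.

```python
from typing import Callable, Dict, Optional


def normalise(text: str) -> str:
    """Lower-case and collapse whitespace so matching ignores case and spacing."""
    return " ".join(text.lower().split())


# Hypothetical command table: spoken phrase -> action returning a status string.
COMMANDS: Dict[str, Callable[[], str]] = {
    "show weather": lambda: "displaying weather",
    "show news": lambda: "displaying news",
}


def handle_transcript(text: str, unlocked: bool) -> Optional[str]:
    """Run the matching command; return None if locked or if nothing matches."""
    if not unlocked:
        # Voice commands are only processed after face recognition has unlocked the device.
        return None
    action = COMMANDS.get(normalise(text))
    return action() if action else None
```

An exact-match lookup keeps the sketch short; a real application might prefer keyword or fuzzy matching to tolerate transcription errors.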

Face Recognition

Zhao et al. provide a critical survey of still- and video-based facial recognition research, and offer some insights into studies of the machine recognition of faces [2]. The authors establish that the problem of automatic facial recognition involves three key steps [2]:

• detection and rough normalisation of faces,

• feature extraction and accurate normalisation of faces, and

• identification/verification.

Face detection is deemed successful if the presence and rough location of a face have been identified correctly. However, without accurate face and feature location, recognition performance is noticeably degraded.

The face detection and recognition algorithms tested were the Viola-Jones algorithm, the Eigenface method, the Local Binary Pattern (LBP) algorithm, and Histogram of Oriented Gradients (HOG) with a neural network. These were tested in Matlab and Python, using the FERET Sep96 testing technique.

The Local Binary Pattern (LBP) Histogram and Viola-Jones algorithms performed best, and were selected for the Android implementation. The LBP algorithm achieved a recognition accuracy rate of 93%, and Viola-Jones achieved a detection accuracy of 97.9%.

LBP works by splitting an image into neighbourhoods of pixels and comparing the intensity of the centre pixel of each neighbourhood with that of the surrounding pixels. Each surrounding pixel is assigned a binary value depending on whether its intensity is greater or lower than that of the centre pixel. The binary values of the surrounding pixels are concatenated to create a binary encoding of the entire neighbourhood, which is then combined with the encodings of the other neighbourhoods to create a histogram representation of the image. The histograms of different images are compared to determine whether they contain the same face.
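The LBP encoding described above can be sketched in plain Python. This is an illustrative sketch, not the implementation tested in the project. It assumes 3×3 neighbourhoods, the common convention that a neighbour at least as bright as the centre is encoded as 1, a single histogram over the whole image, and a chi-square distance for comparing histograms. All function names are hypothetical.

```python
from typing import List, Sequence

Image = List[List[int]]  # grey-scale image as rows of pixel intensities

# Offsets of the 8 neighbours, clockwise starting from the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image: Image, row: int, col: int) -> int:
    """Return the 8-bit LBP code of the interior pixel at (row, col)."""
    centre = image[row][col]
    code = 0
    for dr, dc in NEIGHBOURS:
        # Assumed convention: a neighbour at least as bright as the centre gives 1.
        bit = 1 if image[row + dr][col + dc] >= centre else 0
        code = (code << 1) | bit  # concatenate the bits into one number
    return code


def lbp_histogram(image: Image) -> List[float]:
    """Normalised 256-bin histogram of the LBP codes of all interior pixels."""
    counts = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            counts[lbp_code(image, r, c)] += 1
    total = sum(counts)
    return [n / total for n in counts] if total else [0.0] * 256


def chi_square(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Chi-square distance between two histograms (0.0 means identical)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)
```

Two face images would then be treated as a match when the distance between their histograms falls below a chosen threshold. Practical LBP histogram recognisers usually split the face into a grid of cells and concatenate the per-cell histograms, which this sketch omits.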

Voice Recognition

Two voice recognition algorithms were implemented and tested in Matlab. Dynamic Time Warping (DTW) was found to be more accurate and simpler when applied to isolated word recognition, while the Hidden Markov Model (HMM) was more reliable for sentence recognition. The DTW algorithm implemented in Matlab achieved an accuracy rate of 95%.
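For readers unfamiliar with DTW, the sketch below shows the standard dynamic-programming recurrence and how it can be used for isolated word recognition by nearest template. It is not the project's Matlab code. For brevity each frame is a single number, whereas a real recogniser would compare feature vectors per frame; `dtw_distance` and `recognise` are hypothetical names.

```python
from typing import Dict, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Cost of the cheapest monotonic alignment between sequences a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cost of aligning the first i frames of a with the first j of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local distance between two frames
            # Extend the cheapest of: stretch a, stretch b, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognise(sample: Sequence[float], templates: Dict[str, Sequence[float]]) -> str:
    """Return the word whose template has the smallest DTW distance to the sample."""
    return min(templates, key=lambda word: dtw_distance(sample, templates[word]))
```

Because the alignment may stretch either sequence, a word spoken more slowly than its template can still match with zero or low cost, which is why DTW suits isolated word recognition.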

Android Development

The Google Voice Search function was used to convert voice into text. The Java class android.speech.RecognizerIntent activates Google Voice Search from within the Android application, which then receives the text commands returned by the API.

Open source libraries were used to access the video stream from the USB webcam. However, the OpenCV libraries required for face recognition did not interface correctly with the video stream, so face recognition was not successful on the Pi. A demo was nevertheless developed in a Unix environment.

Problems Encountered

Some problems occurred during the implementation of the HMM in Matlab. Because HMM-based voice recognition also uses Gaussian probability distributions, a multi-dimensional probability density function is involved. This density function was implemented incorrectly, so the Gaussian distribution could not be combined successfully with the HMM, and HMM-based voice recognition did not work properly in Matlab.
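The source does not say what was wrong with the density function, so the following is only a reference sketch of one conventional way to evaluate a multi-dimensional Gaussian for use as an HMM emission probability. It assumes a diagonal covariance matrix and works in the log domain, a common choice because products of many small densities can underflow. The function name `diag_gaussian_logpdf` is hypothetical.

```python
import math
from typing import Sequence


def diag_gaussian_logpdf(x: Sequence[float],
                         mean: Sequence[float],
                         var: Sequence[float]) -> float:
    """Log-density of x under a Gaussian with the given mean and per-dimension variances.

    log N(x) = -0.5 * sum_d [ log(2 * pi * var_d) + (x_d - mean_d)^2 / var_d ]
    """
    if not (len(x) == len(mean) == len(var)):
        raise ValueError("x, mean and var must have the same length")
    total = 0.0
    for xd, md, vd in zip(x, mean, var):
        if vd <= 0:
            raise ValueError("variances must be positive")
        total += math.log(2 * math.pi * vd) + (xd - md) ** 2 / vd
    return -0.5 * total
```

With a diagonal covariance the density factorises over dimensions, so no matrix inverse or determinant is needed. A full covariance matrix would require both.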

Future Work and Recommendations

Voice control was implemented successfully. The progress made can be leveraged to incorporate a wider variety of voice commands.

Similarly, the research, tests and development carried out for the face recognition aspect can be used to incorporate face recognition, and other functionality such as multiuser capability, into the system.

It is also possible to realise the ultimate aim of the project, which is to integrate the device into a smart home system.

Project Team

Team Members

- Anbang Huang

- Syed Maruful Aziz

Project Supervisors

- Dr Withawat Withayachumnankul

- Dr Hong Gunn Chew