Projects:2018s1-103 Improving Usability and User Interaction with KALDI Open-Source Speech Recogniser

(→Improvement On GUI) |

(→Improvement On GUI) |

||

| (36 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| + | '''Introduction''' | ||

| + | |||

| + | ---- | ||

| + | |||

| + | |||

| + | KALDI is an open source speech transcription toolkit intended for use by speech recognition researchers. The software allows the utilisation of integration of newly developed speech transcription algorithms. The software usability is limited due to the requirements of using complex scripting language and operating system specific commands. In this project, a Graphical User Interface (GUI) has been developed, that allows non-technical individuals to use the software and make it easier to interact with. The GUI allows for different language and acoustic models selections and transcription either from a file or live input – live decoding. Also, two newly trained models have been added, one which uses Gaussian Mixture Models, while the second uses a Neural Network model. The first one was selected to allow for benchmark performance evaluation, while the second to demonstrate an improved transcription accuracy. | ||

| + | |||

== '''Project Team''' == | == '''Project Team''' == | ||

| Line 22: | Line 29: | ||

This project will involve the use of Deep Learning algorithms (Automatic Speech Recognition related), software development (C++) and performance evaluation through the Word Error Rate formula. Very little hardware will be involved through its entirety. | This project will involve the use of Deep Learning algorithms (Automatic Speech Recognition related), software development (C++) and performance evaluation through the Word Error Rate formula. Very little hardware will be involved through its entirety. | ||

| − | |||

| − | |||

| − | |||

| − | |||

This project is a follow-on from previous year's project of the same name. | This project is a follow-on from previous year's project of the same name. | ||

| Line 33: | Line 36: | ||

===The KALDI Toolkit=== | ===The KALDI Toolkit=== | ||

| − | ---- | + | Initially developed in 2009 by Dan Povey and several others <ref>KALDI, “History of the KALDI Project”, KALDI, n.d. [Online] Available at: http://kaldi-asr.org/doc/history.html [Accessed 22 May 2018].</ref>, KALDI has been aimed at being a low development cost, high quality speech recognition toolkit. Having undergone several improvements over the years, it now consists of recipes on creating custom Acoustic and Language Models that are based on several large corpuses such as the Wall Street Journal, Fisher, TIMIT etc. It's latest addition has been Neural Network Acoustic/Language models that are still in development <ref>KALDI, “Deep Neural Networks in Kaldi - Introduction”, KALDI, n.d. [Online] Available at: http://kaldi-asr.org/doc/dnn.html [Accessed 22 May 2018]. |

| + | </ref>. | ||

| + | |||

| + | KALDI's directory structure, and overall code layout is quite complex. It also requires the user to have a sound knowledge on Ubuntu terminal commands as well as knowledge on C++ and Python. It is, in fact, this complexity that the first-time users face when approaching the toolkit that has led to the inception of the previous iterations of the ASR program. | ||

| + | |||

| + | ===Automatic Speech Recognition (ASR)=== | ||

| + | |||

| + | [[File:ASR.png|thumb|upright=2.0|ASR Process[3]]] | ||

| + | |||

| + | Automatic Speech Recognition (ASR) is the process of converting audio into text <ref>R.E. Gruhn, W. Minker, S. Nakamura, Statistical Pronunciation Modeling for Non-Native Speech Processing, ch. 2, p. 5, Harman/Becker Automotive Systems GmbH, Ulm, Germany: 2011 [E-book]. Available: Springer, https://goo.gl/peFMrZ [Accessed 22 May 2018].</ref>. In general, ASR occurs in the following process: | ||

| + | |||

| + | *Feature Representation | ||

| + | *Phoneme mapping the features through the Acoustic Model (AM) | ||

| + | *Word mapping the phonemes through the Dictionary Model (Lexicon) | ||

| + | *Sentence construction the words through the Language Model (LM) | ||

| + | |||

| + | The final three processes are collectively known as 'Decoding', which is highlighted more clearly through the diagram provided. | ||

| + | |||

| + | |||

| + | |||

| + | ====Feature Representation==== | ||

| + | |||

| + | [[File:Spectrogram.jpg|border|400px]] | ||

| + | |||

| + | |||

| + | Feature Representation is the process of extracting important bits and pieces of the frequencies of sound files, mainly using Spectrograms and other frequency analysis tools. A good Feature Representation manages to capture only salient spectral characters (features that are not speaker-specific). | ||

| + | |||

| + | Obtaining such features can be done in several ways. The method used by the KALDI program is the Mel-frequency Cepstrum Coefficients (MFCC) method. | ||

| + | |||

| + | [[File:MFCC.jpg|thumb|upright=2.5|Method of obtaining MFCC<ref>J. Chiu. GHC 4012. Class Lecture, Topic: “Design and Implementation of Speech Recognition Systems – Feature Implementation”, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, 27 Jan. 2014.</ref>]] | ||

| + | |||

| + | ====The Acoustic Model==== | ||

| + | |||

| + | The Acoustic Model (AM) essentially converts the values of the parameterised waveform into phonemes. Phonemes, by definition, are a unit of sound in speech <ref>M. Breum, “Playing with Sounds in Words- Part 3 {Phoneme Segmentation}.” thisreadingmama.com, para. 3, 26 Sept. 2016. [Online]. Available: thisreadingmama.com/playing-with-phonemes-part-3/ [Accessed 22 May 2018].</ref>. They do not have any inherent meaning by themselves, but words are constructed when they are considered collectively in different patterns. English is estimated to consist of roughly 40 phonemes. | ||

| + | |||

| + | ====The Dictionary Model==== | ||

| + | |||

| + | The Dictionary Model, also known as a Lexicon, maps the written representations of words or phrases with the pronunciations of them. The pronunciations are described using phonemes that are relevant to the specific language the lexicon is built upon <ref>“About Lexicons and Phonetic Alphabets (Microsoft.Speech)” Microsoft Research Blog, 2018. [Online]. Available: https://goo.gl/SDqZtU [Accessed 22 May 2018].</ref>. | ||

| + | |||

| + | ====The Language Model==== | ||

| + | |||

| + | The task of a Language Model (LM) is to predict the next character/word that may occur in a sentence, given the previous words that have been spoken <ref>R. J. Mooney. CS 388. Class Lecture, Topic: “Natural Language Processing: N-Gram Language Models”, School of Computer Science, University of Texas at Austin, Austin, TX, 2018.</ref>. A good LM can result in contrastingly different results. | ||

| − | + | [[File:LM.png|thumb|upright=2.5|Transcription before and after LM addition<ref>A. Hannun, C. Case, J. Casper, B. Catanzaro, G. Diamos, E. Elsen, R. Prenger, S. Sateesh, S. Sengupta, A. Coates, A. Y. Ng, “Deep Speech: Scaling up end-to-end speech recognition”, p. 3, 19 Dec. 2014 [Abstract]. Available: https://arxiv.org/abs/1412.5567?context=cs [Accessed 22 May 2018].</ref>]] | |

| − | + | The above mentioned elements function together to produce an automatic speech recogniser/transcriber. This methodology is also adapted by the KALDI ASR toolkit used. | |

| − | ==Qt Creator== | + | ===Qt Creator=== |

In order to improve the GUI to suit the current iteration of the program, it was decided to use Qt Creator. This was also the GUI toolkit of choice for the previous students due to several reasons; it's open source, based on C++, backed by a large enterprise that updates the toolkit regularly, available on Linux and is simple to use. | In order to improve the GUI to suit the current iteration of the program, it was decided to use Qt Creator. This was also the GUI toolkit of choice for the previous students due to several reasons; it's open source, based on C++, backed by a large enterprise that updates the toolkit regularly, available on Linux and is simple to use. | ||

| − | Qt | + | [[File:Qt.png|thumb|upright=2.5|The Qt Interface]] |

| − | + | Qt Creator includes a code editor and integrates Qt Designer which allows for designing and building GUI's from Qt widgets. It's also possible to compose and customize your own widgets as required. The developers of Qt Creator, Qt Project, have also ensured that a large library of documentation, tutorials, example code and FAQ’s is kept up to date with newer developments, bugs and bug fixes <ref>Qt, “Category:Tools::QtCreator”, Qt Wiki, 15 Sep. 2016 [Online]. Available: http://wiki.qt.io/Category:Tools::QtCreator [Accessed 22 May 2018].</ref>. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== '''Results''' == | == '''Results''' == | ||

=== Improvement On GUI === | === Improvement On GUI === | ||

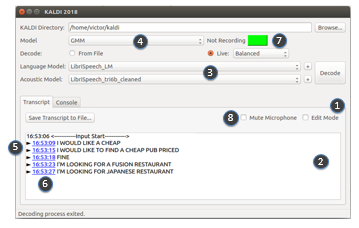

| − | [[File:GUI.png| | + | [[File:GUI.png|border|centre|500px]] |

#Editable transcript | #Editable transcript | ||

#*An edit mode is added to the GUI. If the edit mode checkbox is checked, user will be able to edit the transcription directly from the display window. This allow the user to correct the transcription if a mistake is found. | #*An edit mode is added to the GUI. If the edit mode checkbox is checked, user will be able to edit the transcription directly from the display window. This allow the user to correct the transcription if a mistake is found. | ||

#Interactive display window | #Interactive display window | ||

| + | #*A display window with several interactive functions is integrated into the GUI of the program for ease of use. User is able to choose from the 'Transcript' or 'Console' window tab. The 'Transcript' window allow users to read the transcription directly from the GUI, without having them to open a text file externally. The 'Console' window capture all system output. This is useful for program developer to check the behaviour of the program and identify errors if exist. | ||

#Ability to choose Acoustic and Language Models | #Ability to choose Acoustic and Language Models | ||

#*Instead of having a fixed AM and LM, user is able to choose different AM and LM for their decoding session. | #*Instead of having a fixed AM and LM, user is able to choose different AM and LM for their decoding session. | ||

#Audio recording & playback | #Audio recording & playback | ||

| + | #*For every live decoding session, the audio input will be recorded. After the session, user would be able to playback the utterances corresponding to the transcribed line. This allows the user to check whether the speech has been transcribed accurately. | ||

#Timestamp usage on utterances | #Timestamp usage on utterances | ||

| + | #*A timestamp would be shown for every utterances during live decoding. | ||

#Recording status indicator | #Recording status indicator | ||

| + | #*An indicator for the recording status. It turns red if the program is recording and green if the program is not recording. This would allow the user to check the recording status of the program and avoid having confidential information being recorded accidentally. | ||

#Microphone mute/unmute control | #Microphone mute/unmute control | ||

| − | # | + | #*A control to mute and unmute the microphone. If checked, the microphone would be disable. During a recording session, user can mute the microphone if he/she do not want to his/her speech to be recorded. |

=== Improvement of Functionality of the ASR === | === Improvement of Functionality of the ASR === | ||

| Line 83: | Line 119: | ||

Detailed Documentation of decoding session | Detailed Documentation of decoding session | ||

#For every decoding session, all details from the session will be recorded and saved along with the transcription. These includes the Acoustic and Language Model used for the session, data and time of the session, and also whether a GMM or NNET2 model is used for the session. | #For every decoding session, all details from the session will be recorded and saved along with the transcription. These includes the Acoustic and Language Model used for the session, data and time of the session, and also whether a GMM or NNET2 model is used for the session. | ||

| + | |||

| + | [[File:Results.PNG|border|centre|500px]] | ||

| + | |||

| + | ===Addition of a Feedback Loop=== | ||

| + | |||

| + | Theoretically, the feedback loop should enable the user to improve an existing model using the audio recorded and transcription generated during a live decoding session. We try to use the data from live decoding session, and feed the data back to the Acoustic Model to improve the ASR accuracy. The audio and transcription recorded from live decoding session would be used as input data for the further training of the existing Acoustic Model. | ||

| + | |||

| + | Adaption technique is used to implement the feedback loop due to the similarity of the definition. | ||

| + | We run MAP adaption on an existing model with features trained previously. Instead of retraining the whole tree of the Acoustic Model, only one iteration is ran on top of the existing features. Forced alignment on the training data is needed before the training begin. This is to align the phonemes of the transcription to the speech. | ||

| + | |||

| + | Two experiment is carried out to test the adaption technique, both adaption is ran on a low accuracy Acoustic Model (Vystadial Model). | ||

| + | *Self-recorded audio with a total duration of one minute is used as input data. There is no improvement to the decoding result. | ||

| + | *Audio from LibriSpeech with a total duration of five minutes is used as input data. Minor improvement is observed from the result. | ||

| + | |||

| + | One of the reason for the result might be due to the low accuracy of Vystadial model. (transcription for live decoding on Vystadial Model is very inacurate) | ||

| + | |||

| + | By considering how the feedback training works, large number of feedback loop iteration, or large amount of feedback input data might be needed to get some obvious improvement on the Acoustic Model | ||

| + | |||

| + | Further experiment has not been carried out due to the time constrains. | ||

| + | |||

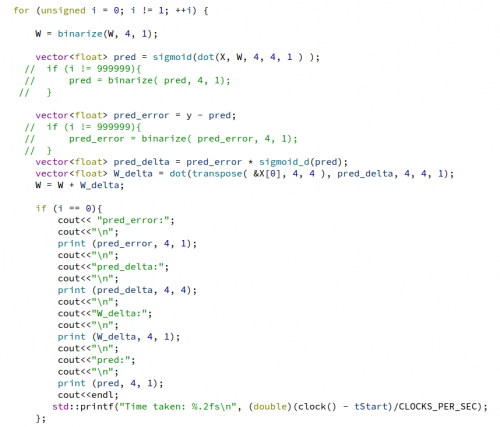

| + | ===Usage of Binarized Neural Networks=== | ||

| + | |||

| + | In order to apply BNN's to KALDI's code, it was decided to | ||

| + | |||

| + | * Research Neural Networks | ||

| + | * Understand KALDI's NN code structure | ||

| + | * Apply BNN to a simple NN | ||

| + | * Proceed to apply BNN to KALDI, if previous step was successful | ||

| + | |||

| + | Neural Networks were researched upon and significant knowledge gained. KALDI NN were researched into great detail. A simple NN in the form of C++ code was chosen to experiment with, in order to apply BNN at a small scale. Qt Creator was used as the coding interface, mainly due to it's ease of access at this point of the project. | ||

| + | |||

| + | [[File:NN2.png|border|500px]] | ||

| + | |||

| + | Time delays through mathematical calculations were purposefully induced in order to clearly see the successful application of BNN to the network. A code that would automatically binarize the code was also written. | ||

| + | [[File:NN.png|thumb|upright=2.0|Vector Binarizing Code]] | ||

| + | This, however, was not successful, with the accuracy of the final result deviating quite a bit from the expected. More research on BNN and it's application was done, but not useful in producing a significant result. It was also understood that the structure used in KALDI's NN was different to the traditional NN's. This posed a significant problem, as the traditional method was crucial to the success of BNN, as it had not been experimented on in other types of structures. | ||

| + | |||

| + | It was decided that application of BNN was not successful for the small scale NN, and hence was not pursued in KALDI as a result. | ||

== '''Conclusion''' == | == '''Conclusion''' == | ||

| + | |||

| + | Many of the pre-planned GUI features were successfully implemented. Two new decoding recipes were added to the program, with one of them being neural network based. The traditional (non-NN) recipe was locally trained, while the NN was obtained pre-trained due to hardware constraints. The accuracy of the two were measured using both speaker dependent and independent data, and the results compared. Research was also done on implementing binarized neural network algorithms in order to cut short training times. Research into a feedback loop was also conducted and applied. | ||

| + | |||

| + | == '''References''' == | ||

| + | <references/> | ||

Latest revision as of 16:18, 21 October 2018

Introduction

KALDI is an open source speech transcription toolkit intended for use by speech recognition researchers. The software allows newly developed speech transcription algorithms to be integrated and used. Its usability is limited, however, because it requires complex scripting languages and operating-system-specific commands. In this project, a Graphical User Interface (GUI) has been developed that allows non-technical individuals to use the software and makes it easier to interact with. The GUI allows the selection of different language and acoustic models, and transcription either from a file or from live input (live decoding). In addition, two new models have been added: one uses Gaussian Mixture Models, while the other uses a Neural Network. The first was selected to provide a performance benchmark, and the second to demonstrate improved transcription accuracy.

Project Team

Students

- Shi Yik Chin

- Yasasa Saman Tennakoon

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Abstract

This project aims to refine and improve the capabilities of KALDI (an Open Source Speech Recogniser). This will require:

- Improving the current GUI's flexibility

- Introducing new elements or replacing older elements in the GUI for ease of use

- Including a methodology that users (of any skill level) can use to improve or introduce Language or Acoustic models into the software

- Refining current Language and Acoustic models in the software to reduce the Word Error Rate (WER)

- Introducing a neural network in the software to reduce the Word Error Rate (WER)

- Introducing a feedback loop into the software to reduce the Word Error Rate (WER)

- Introducing Binarized Neural Networks into the training methods to reduce training times and increase efficiency

This project will involve the use of Deep Learning algorithms (related to Automatic Speech Recognition), software development (C++) and performance evaluation using the Word Error Rate formula. Very little hardware will be involved throughout the project.
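As a rough illustration of the Word Error Rate formula mentioned above, the sketch below computes WER = (S + D + I) / N, where S, D and I are the word substitutions, deletions and insertions needed to turn the reference into the hypothesis, and N is the number of reference words. The function name and the whitespace tokenisation are assumptions made for this example; the project's own scoring scripts are not shown in this page.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)
```

For example, a hypothesis that drops one word from a six-word reference scores 1/6. Note that WER can exceed 1 when the hypothesis contains many insertions.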

This project is a follow-on from the previous year's project of the same name.

Background

The KALDI Toolkit

Initially developed in 2009 by Dan Povey and several others [1], KALDI aims to be a low development cost, high quality speech recognition toolkit. Having undergone several improvements over the years, it now includes recipes for creating custom Acoustic and Language Models based on several large corpora such as the Wall Street Journal, Fisher and TIMIT. Its latest addition is Neural Network Acoustic/Language models, which are still in development [2].

KALDI's directory structure and overall code layout are quite complex. It also requires the user to have a sound knowledge of Ubuntu terminal commands as well as C++ and Python. It is this complexity, faced by first-time users approaching the toolkit, that led to the inception of the previous iterations of the ASR program.

Automatic Speech Recognition (ASR)

Automatic Speech Recognition (ASR) is the process of converting audio into text [3]. In general, ASR proceeds through the following steps:

- Feature Representation

- Mapping the features to phonemes through the Acoustic Model (AM)

- Mapping the phonemes to words through the Dictionary Model (Lexicon)

- Constructing sentences from the words through the Language Model (LM)

The final three steps are collectively known as 'Decoding', as shown in the diagram provided.

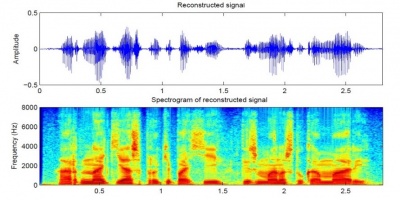

Feature Representation

Feature Representation is the process of extracting the important frequency content of sound files, mainly using spectrograms and other frequency analysis tools. A good Feature Representation captures only the salient spectral characteristics (features that are not speaker-specific).

Such features can be obtained in several ways. The method used by the KALDI program is the Mel-frequency Cepstral Coefficients (MFCC) method.
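One ingredient of MFCC extraction is the mel scale, which spaces frequency bands roughly the way human hearing does. The sketch below uses a common form of the mel formula, mel = 2595 log10(1 + f / 700), and places filter centres evenly on that scale. It is only an illustration of this one step: the function names and the number of filters are assumptions, and the full MFCC pipeline (framing, FFT, filterbank, logarithm, DCT) is not reproduced here.

```python
import math


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (common 2595*log10 form)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filter_centres(low_hz: float, high_hz: float, n_filters: int) -> list:
    """Centre frequencies (Hz) of n_filters bands spaced evenly in mels."""
    low_mel = hz_to_mel(low_hz)
    high_mel = hz_to_mel(high_hz)
    step = (high_mel - low_mel) / (n_filters + 1)
    return [mel_to_hz(low_mel + step * (i + 1)) for i in range(n_filters)]
```

Because the spacing is even in mels rather than in Hz, the centres are packed closely at low frequencies and spread out at high frequencies.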

The Acoustic Model

The Acoustic Model (AM) essentially converts the values of the parameterised waveform into phonemes. A phoneme is, by definition, a unit of sound in speech [5]. Phonemes do not have any inherent meaning by themselves, but words are constructed when they are combined in different patterns. English is estimated to consist of roughly 40 phonemes.

The Dictionary Model

The Dictionary Model, also known as a Lexicon, maps the written representations of words or phrases to their pronunciations. The pronunciations are described using the phonemes of the specific language the lexicon is built for [6].
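A lexicon can be pictured as a table from words to one or more phoneme sequences. The sketch below is a toy version with a handful of hand-written entries in an ARPAbet-like notation; the entries, the `LEXICON` name and the helper functions are illustrative assumptions and are not taken from the project's actual dictionary files.

```python
# Each word maps to a list of pronunciations; each pronunciation is a
# list of phoneme symbols. Entries are illustrative only.
LEXICON = {
    "speech": [["S", "P", "IY", "CH"]],
    "read": [["R", "IY", "D"], ["R", "EH", "D"]],
    "reed": [["R", "IY", "D"]],
    "the": [["DH", "AH"], ["DH", "IY"]],
}


def pronunciations(word: str) -> list:
    """Return all known pronunciations of a word (empty if unknown)."""
    return LEXICON.get(word.lower(), [])


def words_for(phonemes: list) -> list:
    """Return every word that has the given phoneme sequence."""
    target = list(phonemes)
    return sorted(w for w, prons in LEXICON.items() if target in prons)
```

The lookup in `words_for` shows why a lexicon alone cannot finish the job: the same phoneme sequence can match several words, and choosing between them is left to the Language Model.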

The Language Model

The task of a Language Model (LM) is to predict the next character or word that may occur in a sentence, given the previous words that have been spoken [7]. Adding a good LM can change the resulting transcription markedly.
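To make the idea of next-word prediction concrete, the sketch below builds a tiny 3-gram (trigram) model from raw counts: the probability of a word is estimated from how often it followed the two preceding words in the training text. The function names and the `<s>` / `</s>` sentence markers are assumptions for this example, and no smoothing or back-off is applied, which a practical LM would need.

```python
from collections import Counter, defaultdict


def train_trigram(sentences):
    """Count which word follows each pair of consecutive words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Pad so the first real word also has a two-word history.
        words = ["<s>", "<s>"] + sentence.lower().split() + ["</s>"]
        for first, second, third in zip(words, words[1:], words[2:]):
            counts[(first, second)][third] += 1
    return counts


def next_word_probs(counts, first, second):
    """Relative frequency of each word seen after (first, second)."""
    following = counts.get((first, second))
    if not following:
        return {}
    total = sum(following.values())
    return {word: n / total for word, n in following.items()}
```

With a training text in which "recognise speech" is followed twice by "today" and once by "now", the model assigns "today" a probability of 2/3 after that history.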

The elements described above function together to produce an automatic speech recogniser/transcriber. This methodology is also adopted by the KALDI ASR toolkit used in this project.

Qt Creator

In order to improve the GUI to suit the current iteration of the program, it was decided to use Qt Creator. This was also the GUI tool of choice for the previous students, for several reasons: it is open source, based on C++, backed by a large enterprise that updates the toolkit regularly, available on Linux and simple to use.

Qt Creator includes a code editor and integrates Qt Designer, which allows GUIs to be designed and built from Qt widgets. It is also possible to compose and customise your own widgets as required. The developers of Qt Creator, the Qt Project, also keep a large library of documentation, tutorials, example code and FAQs up to date with newer developments, bugs and bug fixes [9].

Results

Improvement On GUI

- Editable transcript

  - An edit mode is added to the GUI. If the edit mode checkbox is checked, the user can edit the transcription directly in the display window. This allows the user to correct the transcription if a mistake is found.

- Interactive display window

  - A display window with several interactive functions is integrated into the GUI for ease of use. The user can choose between the 'Transcript' and 'Console' window tabs. The 'Transcript' window allows users to read the transcription directly in the GUI, without having to open a text file externally. The 'Console' window captures all system output. This is useful for program developers to check the behaviour of the program and identify any errors.

- Ability to choose Acoustic and Language Models

  - Instead of having a fixed AM and LM, the user can choose a different AM and LM for each decoding session.

- Audio recording & playback

  - For every live decoding session, the audio input is recorded. After the session, the user can play back the utterance corresponding to each transcribed line. This allows the user to check whether the speech has been transcribed accurately.

- Timestamp usage on utterances

  - A timestamp is shown for every utterance during live decoding.

- Recording status indicator

  - An indicator for the recording status. It turns red if the program is recording and green if it is not. This allows the user to check the recording status of the program and avoid confidential information being recorded accidentally.

- Microphone mute/unmute control

  - A control to mute and unmute the microphone. If checked, the microphone is disabled. During a recording session, users can mute the microphone if they do not want their speech to be recorded.
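The timestamp and playback features above suggest that each transcribed line is tied to a time span in the recorded audio. The sketch below shows one way such a record could look. It is purely hypothetical: the `Utterance` class, its field names, the timestamp format and the 16 kHz sample rate are assumptions for illustration, and the project's actual GUI code is written in C++ with Qt and is not shown on this page.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    """One transcribed line and the span of audio it came from (hypothetical)."""
    start_s: float  # seconds from the start of the recording
    end_s: float
    text: str


def format_timestamp(seconds: float) -> str:
    """Format a time offset as HH:MM:SS, dropping fractions of a second."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def transcript_line(utterance: Utterance) -> str:
    """Render an utterance as a timestamped transcript line."""
    return f"[{format_timestamp(utterance.start_s)}] {utterance.text}"


def sample_range(utterance: Utterance, sample_rate_hz: int = 16000) -> tuple:
    """Sample indices to play back for this utterance (assumed sample rate)."""
    return (int(utterance.start_s * sample_rate_hz),
            int(utterance.end_s * sample_rate_hz))
```

Keeping the start and end times with each line is what would let a playback control jump straight to the audio behind a suspicious transcription.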

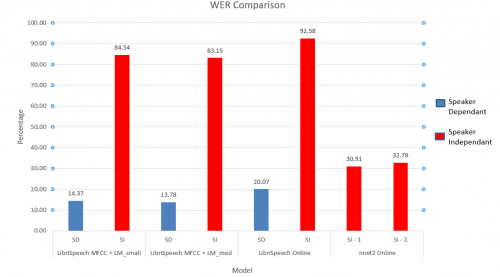

Improvement of Functionality of the ASR

New Acoustic and Language Models are added to the existing program:

- Two new Acoustic Models

  - The first Acoustic Model is a GMM model, trained on the LibriSpeech corpus. LibriSpeech is a large (1000 hour) corpus of read English speech derived from audiobooks, sampled at 16 kHz. The accents are varied and not marked, but the majority are US English. Several models were trained from this corpus, including both a monophone model and triphone models (tri2b, tri3b, ..., tri6b).

  - The other Acoustic Model is an NNET2 model. This model is pre-trained on more than 2000 hours of the Fisher corpus and is available for download from the KALDI website.

- A new Language Model

  - This Language Model is a 3-gram LM trained on 34 MB of plain text.

Detailed Documentation of decoding session

- For every decoding session, all details of the session are recorded and saved along with the transcription. These include the Acoustic and Language Models used for the session, the date and time of the session, and whether a GMM or NNET2 model was used.
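The session details listed above could be bundled into a single record that is written next to the transcription. The sketch below is one possible shape for such a record, serialised as JSON. The field names, the JSON format and the example model names are assumptions made for illustration; the format actually used by the program is not described on this page.

```python
import json
from datetime import datetime


def session_record(acoustic_model, language_model, model_type,
                   transcript, when=None):
    """Bundle the details of one decoding session (hypothetical layout)."""
    if model_type not in ("GMM", "NNET2"):
        raise ValueError("model_type must be 'GMM' or 'NNET2'")
    when = when or datetime.now()
    return {
        "started": when.isoformat(timespec="seconds"),
        "acoustic_model": acoustic_model,
        "language_model": language_model,
        "model_type": model_type,
        "transcript": list(transcript),
    }


def to_text(record) -> str:
    """Serialise the record so it can be saved alongside the transcription."""
    return json.dumps(record, indent=2)
```

Storing the model names with every transcript makes it possible to compare results from different AM and LM combinations after the fact.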

Addition of a Feedback Loop

In theory, the feedback loop should enable the user to improve an existing model using the audio recorded and the transcription generated during a live decoding session. The data from a live decoding session is fed back to the Acoustic Model to improve ASR accuracy: the recorded audio and transcription are used as input data for further training of the existing Acoustic Model.

An adaptation technique is used to implement the feedback loop, since adaptation closely matches what the feedback loop is meant to do. MAP (maximum a posteriori) adaptation is run on an existing model with previously trained features. Instead of retraining the whole tree of the Acoustic Model, only one iteration is run on top of the existing features. Forced alignment of the training data is needed before training begins, in order to align the phonemes of the transcription to the speech.
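The effect of MAP adaptation on a single Gaussian mean can be sketched with the textbook update: new mean = (tau * prior mean + sum of posterior-weighted frames) / (tau + sum of posteriors), where tau controls how strongly the existing model is trusted. The code below applies this to one scalar feature. It is a simplified illustration under stated assumptions: the function name, the scalar features and the value of tau are chosen for the example, and it does not reproduce the KALDI tools used in the project.

```python
def map_adapt_mean(prior_mean, frames, posteriors, tau=10.0):
    """MAP update of one Gaussian mean from aligned adaptation frames.

    prior_mean -- mean of the existing (already trained) Gaussian
    frames     -- feature values aligned to this Gaussian
    posteriors -- occupation probability of the Gaussian for each frame
    tau        -- weight of the prior; larger values change the mean less
    """
    if len(frames) != len(posteriors):
        raise ValueError("frames and posteriors must have the same length")
    occupancy = sum(posteriors)
    weighted_sum = sum(g * x for g, x in zip(posteriors, frames))
    return (tau * prior_mean + weighted_sum) / (tau + occupancy)
```

With tau = 10, a single adaptation frame moves the mean only about 9% of the way towards the new data, ten frames move it halfway, and a thousand frames move it about 99% of the way. This is consistent with the expectation that a small amount of feedback data produces little visible change in the model.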

Two experiments were carried out to test the adaptation technique. Both adaptations were run on a low-accuracy Acoustic Model (the Vystadial model).

- Self-recorded audio with a total duration of one minute was used as input data. There was no improvement in the decoding result.

- Audio from LibriSpeech with a total duration of five minutes was used as input data. A minor improvement was observed in the result.

One reason for this result might be the low accuracy of the Vystadial model (transcription from live decoding with the Vystadial model is very inaccurate).

Considering how the feedback training works, a large number of feedback loop iterations, or a large amount of feedback input data, might be needed to obtain an obvious improvement in the Acoustic Model.

Further experiments were not carried out due to time constraints.

Usage of Binarized Neural Networks

In order to apply Binarized Neural Networks (BNNs) to KALDI's code, it was decided to:

- Research Neural Networks

- Understand KALDI's NN code structure

- Apply BNN to a simple NN

- Proceed to apply BNN to KALDI if the previous step was successful

Neural Networks were researched and significant knowledge was gained. KALDI's NN code was studied in great detail. A simple NN written in C++ was chosen to experiment with, in order to apply BNN at a small scale. Qt Creator was used as the coding environment, mainly due to its ease of access at this point of the project.

Time delays were purposely induced through mathematical calculations so that the effect of applying BNN to the network could be seen clearly. Code that automatically binarizes vectors was also written.
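The core operation of a BNN is replacing real-valued weights or activations with +1 or -1 according to their sign. The sketch below shows this deterministic binarization and the cheap dot product it enables. It is a generic illustration of the technique, not the project's vector binarizing code (which was written in C++); the function names and the convention that zero maps to +1 are assumptions for this example.

```python
def binarize(vector):
    """Map each value to +1 (non-negative) or -1 (negative)."""
    return [1 if x >= 0 else -1 for x in vector]


def binary_dot(a, b):
    """Dot product of two +1/-1 vectors by counting matching signs.

    Each matching pair contributes +1 and each mismatch -1, so the
    result is 2 * matches - length. On hardware this corresponds to
    an XNOR followed by a bit count instead of multiplications.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return 2 * matches - len(a)
```

The saving comes from `binary_dot`: once both operands are binarized, no floating-point multiplication is needed, at the cost of discarding the magnitude of every value.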

This, however, was not successful: the accuracy of the final result deviated considerably from what was expected. More research on BNN and its application was done, but it did not help produce a significant result. It was also found that the structure used in KALDI's NN differs from that of traditional NNs. This posed a significant problem, because BNN had only been tried on the traditional structure and had not been experimented with on other types of structures.

Because the application of BNN to the small-scale NN was not successful, it was not pursued in KALDI.

Conclusion

Many of the pre-planned GUI features were successfully implemented. Two new decoding recipes were added to the program, one of them neural network based. The traditional (non-NN) recipe was trained locally, while the NN was obtained pre-trained due to hardware constraints. The accuracy of both was measured using speaker-dependent and speaker-independent data, and the results were compared. Research was also done on implementing binarized neural network algorithms in order to shorten training times. A feedback loop was also researched and applied.

References

- [1] KALDI, “History of the KALDI Project”, KALDI, n.d. [Online]. Available: http://kaldi-asr.org/doc/history.html [Accessed 22 May 2018].

- [2] KALDI, “Deep Neural Networks in Kaldi - Introduction”, KALDI, n.d. [Online]. Available: http://kaldi-asr.org/doc/dnn.html [Accessed 22 May 2018].

- [3] R.E. Gruhn, W. Minker, S. Nakamura, Statistical Pronunciation Modeling for Non-Native Speech Processing, ch. 2, p. 5, Harman/Becker Automotive Systems GmbH, Ulm, Germany: 2011 [E-book]. Available: Springer, https://goo.gl/peFMrZ [Accessed 22 May 2018].

- [4] J. Chiu. GHC 4012. Class Lecture, Topic: “Design and Implementation of Speech Recognition Systems – Feature Implementation”, School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, 27 Jan. 2014.

- [5] M. Breum, “Playing with Sounds in Words- Part 3 {Phoneme Segmentation}.” thisreadingmama.com, para. 3, 26 Sept. 2016. [Online]. Available: thisreadingmama.com/playing-with-phonemes-part-3/ [Accessed 22 May 2018].

- [6] “About Lexicons and Phonetic Alphabets (Microsoft.Speech)” Microsoft Research Blog, 2018. [Online]. Available: https://goo.gl/SDqZtU [Accessed 22 May 2018].

- [7] R. J. Mooney. CS 388. Class Lecture, Topic: “Natural Language Processing: N-Gram Language Models”, School of Computer Science, University of Texas at Austin, Austin, TX, 2018.

- [8] A. Hannun, C. Case, J. Casper, B. Catanzaro, G. Diamos, E. Elsen, R. Prenger, S. Sateesh, S. Sengupta, A. Coates, A. Y. Ng, “Deep Speech: Scaling up end-to-end speech recognition”, p. 3, 19 Dec. 2014 [Abstract]. Available: https://arxiv.org/abs/1412.5567?context=cs [Accessed 22 May 2018].

- [9] Qt, “Category:Tools::QtCreator”, Qt Wiki, 15 Sep. 2016 [Online]. Available: http://wiki.qt.io/Category:Tools::QtCreator [Accessed 22 May 2018].