Projects:2014s2-79 FPGA-base Hardware Iimplementation of Machine-Learning Methods for Handwriting and Speech Recognition


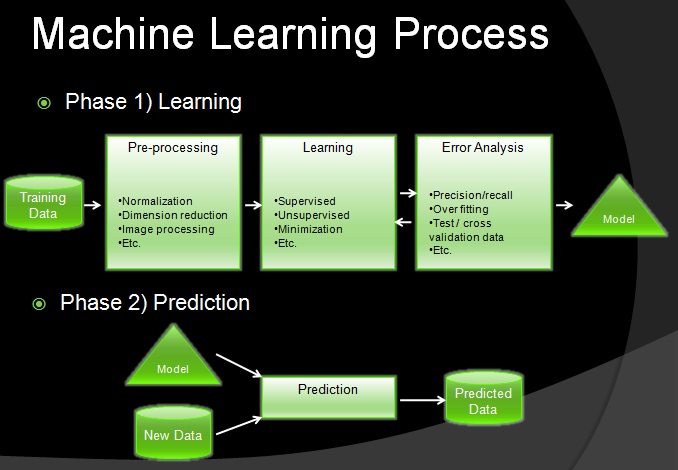

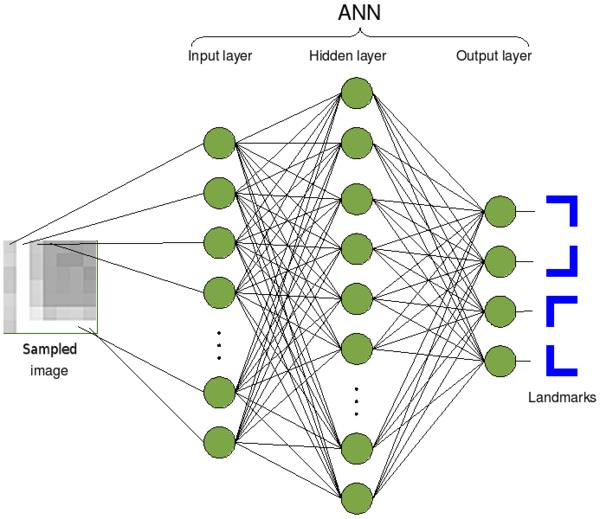

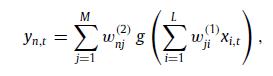


Latest revision as of 09:12, 5 June 2015

Introduction

Automatic (machine) recognition, description, classification, and image processing are important problems in a variety of engineering and scientific disciplines such as biology, psychology, medicine, marketing, computer vision, artificial intelligence, and remote sensing [1]. Handwriting recognition is the ability of a machine to receive and interpret intelligible handwritten input from sources such as handwriting pads, photographs and other devices. Neural networks are among the most common ways to perform pattern classification and image recognition. Handwriting recognition systems are usually implemented in software. However, software-based implementations are often not fast enough, and they rely on a computer, which makes them unsuitable where high portability is needed [2]. An FPGA-based implementation of handwriting recognition is a good way to solve both problems. FPGAs are programmable logic devices: like software, they can be erased and reconfigured, which makes them flexible for designing and realizing algorithms, but they also run certain algorithms very fast, especially parallel ones, because the FPGA fabric can execute many operations in parallel.

Motivation

Nowadays, handwriting and speech recognition technologies are widely used in daily life, for example in Siri and in Google's speech recognition system on smartphones, and over 90% of portable devices have a handwriting recognition function. People enjoy writing input but are frustrated by recognition errors. Although recognition rates today are much better than many years ago, they still do not fully satisfy users. Fortunately, with combined algorithms, clever use of modern computing power, and the availability of very large training datasets, benchmarks on accuracy and efficiency for automatic recognition of handwriting and speech will continue to be surpassed.

Background

Handwriting input

Handwriting data is converted to digital form either by scanning writing on paper or by writing with a special pen on an electronic surface, such as a digitizer combined with a liquid crystal display.

Machine learning

Machine learning is the practice of building suitable model structures and algorithms on a machine so that it can learn from data and make predictions on it.

Artificial neural network

Artificial neural networks (ANNs) are inspired by the sophisticated functionality of the human brain, in which on the order of a hundred billion interconnected neurons process information in parallel; with them, researchers have successfully demonstrated certain levels of intelligence on silicon.

An ANN is a structure whose basic elements, the neurons, are connected into networks with massive parallelism, which is why ANNs can benefit greatly from hardware implementation. A typical network has three layers: input, hidden and output. Data enter the system through the input layer, are processed in the hidden layer, and the result comes out at the output layer.
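The three-layer structure described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the project's MATLAB or hardware model: the layer sizes (784 inputs for a flattened 28×28 image, a hypothetical 100 hidden neurons, 10 outputs for the digits 0 to 9), the sigmoid hidden activation and the purely linear output layer are all chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation 1 / (1 + e^-z) (an assumed choice of hidden activation)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, w_in, w_out):
    """Propagate one input vector through the input, hidden and output layers.

    x     : (n_in,) input vector, e.g. a flattened 28x28 image (n_in = 784)
    w_in  : (n_hidden, n_in) input-to-hidden weights
    w_out : (n_out, n_hidden) hidden-to-output weights
    """
    hidden = sigmoid(w_in @ x)   # each hidden neuron: weighted sum, then activation
    return w_out @ hidden        # output layer: linear weighted sum of hidden outputs

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 784, 100, 10          # hypothetical sizes
x = rng.random(n_in)                          # stand-in for one normalized image
w_in = rng.standard_normal((n_hidden, n_in)) * 0.05
w_out = rng.standard_normal((n_out, n_hidden))

scores = forward(x, w_in, w_out)
print(scores.shape)              # (10,)
print(int(np.argmax(scores)))    # index of the predicted digit class
```

The predicted digit is simply the output neuron with the largest value; every hidden neuron performs the same multiply-and-sum, which is the parallelism that hardware can exploit.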

FPGA

A field-programmable gate array (FPGA) is an integrated circuit designed to be configured by the customer or designer after manufacturing, hence "field-programmable". Designers usually specify the configuration in a hardware description language (HDL), similar to that used for an application-specific integrated circuit (ASIC).

Simulink & System generator

Simulink is a block diagram environment for multidomain simulation and Model-Based Design. It supports simulation, automatic code generation, and continuous test and verification of embedded systems.

System Generator is a digital signal processing (DSP) design tool from Xilinx that enables the use of the MathWorks model-based Simulink design environment for FPGA design.

Designs are captured in the DSP-friendly Simulink modeling environment using a Xilinx-specific blockset. All downstream FPGA implementation steps, including synthesis and place and route, are performed automatically to generate an FPGA programming file.

Algorithm

MNIST database

The MNIST database (Modified National Institute of Standards and Technology database) is a large database of handwritten digits that is commonly used for training and testing in machine learning. It has a training set of 60,000 examples and a test set of 10,000 examples, each image being 28×28 pixels. It is a subset of a larger set available from NIST. The digits have been size-normalized and centered in a fixed-size image.
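The MNIST files distributed at the URL in [6] use the simple IDX binary format: a big-endian header (magic number 2051 for image files or 2049 for label files, then the item count and, for images, the row and column counts), followed by one unsigned byte per pixel or label. The sketch below assumes the files have already been decompressed and shows one way to load them and flatten each 28×28 image into the 784-element input vector a network expects; the function names are hypothetical.

```python
import struct
import numpy as np

def parse_idx_images(data: bytes) -> np.ndarray:
    """Parse an MNIST image file (IDX3) into an (N, rows*cols) float array in [0, 1]."""
    magic, n, rows, cols = struct.unpack(">IIII", data[:16])   # big-endian header
    if magic != 2051:
        raise ValueError(f"not an IDX3 image file (magic={magic})")
    pixels = np.frombuffer(data, dtype=np.uint8, count=n * rows * cols, offset=16)
    # Flatten each image row by row into one input vector, scaled to [0, 1].
    return pixels.reshape(n, rows * cols).astype(np.float64) / 255.0

def parse_idx_labels(data: bytes) -> np.ndarray:
    """Parse an MNIST label file (IDX1) into an (N,) integer array of digits 0-9."""
    magic, n = struct.unpack(">II", data[:8])
    if magic != 2049:
        raise ValueError(f"not an IDX1 label file (magic={magic})")
    return np.frombuffer(data, dtype=np.uint8, count=n, offset=8).astype(np.int64)
```

For example, with `from pathlib import Path`, `parse_idx_images(Path("train-images-idx3-ubyte").read_bytes())` should give a 60,000 × 784 array, and the `t10k-` files give the 10,000 test images and labels.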

Mathematical function

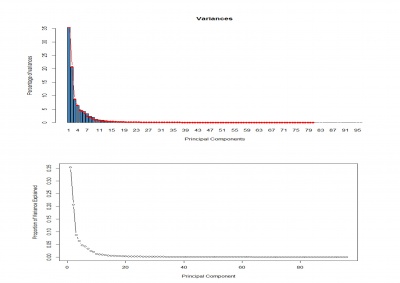

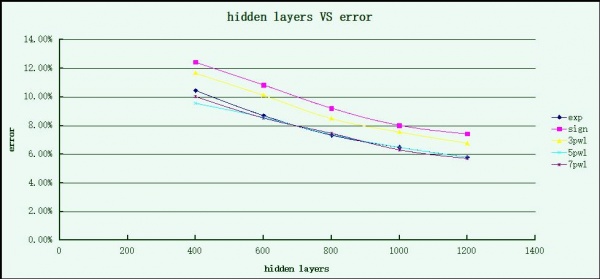

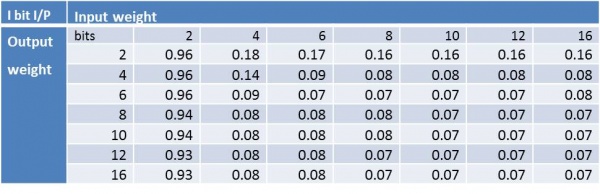
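The equations on this page survive only as images, but the Summary states that the weights were found with a pseudoinverse solution, following [1]. A common form of that idea keeps random, untrained input weights, computes the hidden-layer outputs H for all training images, and solves H·W = T for the output weights in the least-squares sense as W = H⁺T, where H⁺ is the Moore-Penrose pseudoinverse and T holds one-hot targets. The sketch below assumes exactly that form (uniform random input weights in [-0.5, 0.5] and a sigmoid hidden layer are illustrative choices); it is not a transcription of the project's equations.

```python
import numpy as np

def hidden_layer(X, w_in):
    """Sigmoid hidden-layer outputs H, one row per input image."""
    return 1.0 / (1.0 + np.exp(-(X @ w_in.T)))

def train_output_weights(X, labels, n_hidden, n_classes=10, seed=0):
    """Fit output weights by pseudoinverse, keeping random input weights fixed.

    X      : (N, n_in) training inputs, one row per image
    labels : (N,) integer class labels
    Returns w_in (n_hidden, n_in) and w_out (n_classes, n_hidden).
    """
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_hidden, X.shape[1]))  # random, never trained
    H = hidden_layer(X, w_in)                   # (N, n_hidden)
    T = np.eye(n_classes)[labels]               # (N, n_classes) one-hot targets
    w_out = (np.linalg.pinv(H) @ T).T           # least-squares solution of H W = T
    return w_in, w_out

def predict(X, w_in, w_out):
    """Predicted class = output neuron with the largest value."""
    return np.argmax(hidden_layer(X, w_in) @ w_out.T, axis=1)
```

Under these assumptions the recognition rate on the 10,000 test images would be `np.mean(predict(X_test, w_in, w_out) == y_test)`, with `X_test` and `y_test` loaded from the MNIST test files.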

Results in MATLAB

The table shows that the error rate is too high when the weights are quantized to 2 bits; at 4 bits, the error drops dramatically, to around 10%. When the input weights use 4 bits and the output weights 6 bits, or the input weights 6 bits and the output weights 4 bits, the error rate falls below 10%. These two combinations are therefore suitable for implementation.

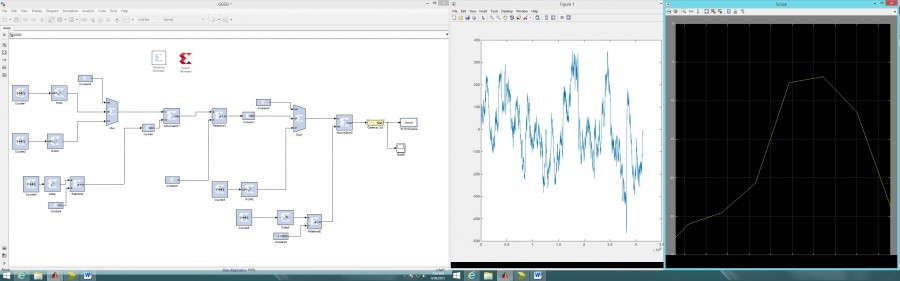
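The page does not say how the weights were reduced to 2, 4 or 6 bits. One simple possibility, assumed here purely for illustration, is uniform symmetric quantization with one scale factor per weight matrix: each weight becomes a signed integer code (what the ROM would hold) and is recovered as code × scale.

```python
import numpy as np

def quantize(w, bits):
    """Uniform symmetric quantization of a weight array to signed `bits`-bit codes.

    Returns (codes, scale) with w ~= codes * scale; codes are what a ROM would store.
    """
    q_max = 2 ** (bits - 1) - 1                # largest code: 1 for 2 bits, 7 for 4, 31 for 6
    peak = float(np.max(np.abs(w)))
    if peak == 0.0:                            # all-zero weights: any scale works
        return np.zeros(np.shape(w), dtype=np.int32), 1.0
    scale = peak / q_max
    codes = np.clip(np.round(np.asarray(w) / scale), -q_max, q_max).astype(np.int32)
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to real-valued weights."""
    return codes.astype(np.float64) * scale

rng = np.random.default_rng(0)
w = rng.standard_normal((10, 100))             # stand-in for a trained weight matrix
for bits in (2, 4, 6):
    codes, scale = quantize(w, bits)
    err = np.max(np.abs(dequantize(codes, scale) - w))
    print(f"{bits} bits: max weight error {err:.4f}")
```

To check a row of the table under this assumption, one would quantize the input weights to 4 bits and the output weights to 6 bits, dequantize them, and re-measure the test error of the trained network. Note that this symmetric scheme uses only 2^bits - 1 of the available codes.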

Hardware Design

Following the basic neural network structure, each neuron's calculation consists of weight multiplication, summation and a linear mapping. In the FPGA design, the weights are stored in ROM, and each weight has an address that matches the address of its corresponding input. Because the ROM blocks can run at clock frequencies of hundreds of MHz, the multiplications can be performed by the FPGA's internal multipliers. The figure below shows the whole structure as designed in System Generator.
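The datapath described above (an address counter reading the input and the weight ROM in step, a multiplier, an accumulator and a final linear mapping) can be modelled cycle by cycle in Python before it is built in System Generator. In this sketch the accumulator width, the right-shift scaling and the clipping range of the linear mapping are hypothetical parameters, not values taken from the project.

```python
def neuron_mac(inputs, weight_rom, acc_bits=24):
    """Cycle-by-cycle model of one neuron: one multiply-accumulate per clock.

    inputs     : integer input samples, e.g. 8-bit pixel values 0..255
    weight_rom : signed integer weight codes, stored at the same addresses as the inputs
    acc_bits   : hypothetical accumulator width in bits (two's complement)
    """
    if len(inputs) != len(weight_rom):
        raise ValueError("each input needs a weight at the matching address")
    acc = 0
    for addr in range(len(inputs)):               # address counter drives input memory and ROM
        acc += inputs[addr] * weight_rom[addr]    # embedded multiplier feeding the adder
    # Wrap to acc_bits bits. Two's-complement addition is arithmetic modulo
    # 2**acc_bits, so wrapping once here equals wrapping after every addition.
    acc &= (1 << acc_bits) - 1
    if acc >= 1 << (acc_bits - 1):
        acc -= 1 << acc_bits
    return acc

def linear_map(acc, shift, out_max):
    """Saturating linear mapping: divide by 2**shift (floor), then clip to [0, out_max]."""
    return max(0, min(out_max, acc >> shift))

# Example: a 3-input neuron with small signed weight codes (values are made up).
acc = neuron_mac([10, 20, 37], [3, -2, 7])
print(acc, linear_map(acc, shift=2, out_max=255))   # prints: 249 62
```

Sharing one multiplier across all addresses trades speed for resources; the fast ROM clock mentioned above is what makes this serial scheme practical.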

Conclusion

Summary

Currently, neural networks are one of the significant ways to realize artificial intelligence. The project described how to build a neural network and presented its most important parameters, the weights. At the start of the paper, the theory of using pseudoinverse solutions to optimize the weights was introduced as the mathematical part. The designers then used the MNIST database as the experimental resource to test how the network works: 60,000 training images were used with the pseudoinverse method to find suitable weights, and 10,000 test images were used to verify the recognition rate. To calculate the weights, the designers used an exponential function and five kinds of linear functions as the activation function, and selected the most suitable result as the weights for the project.

Next, based on a Xilinx Virtex-5 FPGA board, the designers demonstrated how to implement the test in hardware. The paper presents an automated implementation of the neuron using System Generator: the designers only need to configure blocks through the interface, and the tool produces the description of the circuit in a hardware description language (VHDL or Verilog).
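The Summary mentions an exponential activation function and five kinds of linear functions, but the page does not list the linear ones. As a stand-in, the sketch below compares the logistic sigmoid with PLAN, a well-known piecewise-linear sigmoid approximation due to Amin, Curtis and Hayes-Gill, whose slopes are powers of two and therefore map to shifts and adds in hardware. It is an example of the technique, not one of the project's five functions.

```python
import numpy as np

def sigmoid(x):
    """Exponential (logistic) activation: needs exp() and a divider in hardware."""
    return 1.0 / (1.0 + np.exp(-x))

def plan(x):
    """PLAN piecewise-linear sigmoid approximation (power-of-two slopes)."""
    a = np.abs(x)
    y = np.where(a >= 5.0, 1.0,
        np.where(a >= 2.375, 0.03125 * a + 0.84375,
        np.where(a >= 1.0, 0.125 * a + 0.625,
                 0.25 * a + 0.5)))
    return np.where(x >= 0, y, 1.0 - y)    # symmetry: sigmoid(-x) = 1 - sigmoid(x)

xs = np.linspace(-8, 8, 16001)
print(float(np.max(np.abs(plan(xs) - sigmoid(xs)))))   # worst-case approximation error
```

Replacing the exponential by such a function removes the need for an exponent unit and a divider, at the cost of a small approximation error that can be checked against the recognition rate in MATLAB before committing to hardware.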

Project completion

Overall, the project has been completed and has reached the aims and requirements set out in the proposal. At this stage, the project's achievements are as follows:

1. Finding the speech training database for machine learning

2. Adding hidden-layer neurons

3. Decreasing the error rate to below 3%

Skills training and professional development

During the simulation stage, the required skills included neural network analysis and modelling, MATLAB simulation programming (particularly with System Generator) and knowledge of hardware resource allocation. Over the project period, teamwork, communication and negotiation with the supervisors, literature review, time management, project management and other professional skills were developed. All of these skills are essential for further research and can be applied in a future career.

Future work

The most important future task is speech recognition. Due to time limitations, we were not able to find a suitable speech database. According to neural network theory, once a training database is available, speech recognition should be straightforward to realize in the same way. For the handwriting part, our network can currently only recognize the digits 0 to 9; a letter database could be used to train the network and test it in the same way as for the digits.

Furthermore, once the whole network operates successfully on the FPGA, the designers should consider how to build peripherals such as a writing pad, a microphone and a VGA screen.

Moreover, hardware resource estimation still needs to be discussed, because more resources mean a higher budget in a real-life implementation. The designers should use as few hardware resources as possible while keeping the error rate as low as possible.
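A first-order estimate of on-chip weight storage needs only the layer sizes and bit widths. The sketch below assumes all weights sit in block RAM configured as ROM, uses 784 inputs and 10 outputs from the MNIST setup, the two bit-width pairs from the MATLAB results, and hypothetical hidden-layer sizes. The 36 Kb block size matches Virtex-5 block RAM, but the usable capacity depends on the configured port width, so the block count is only a lower bound.

```python
import math

def weight_memory(n_in, n_hidden, n_out, in_bits, out_bits, bram_bits=36 * 1024):
    """Rough ROM estimate for storing all network weights on chip.

    Returns (total weight bits, minimum number of block RAMs of bram_bits each).
    """
    total = n_in * n_hidden * in_bits + n_hidden * n_out * out_bits
    return total, math.ceil(total / bram_bits)

for hidden in (100, 400, 1000):                 # hypothetical hidden-layer sizes
    for in_b, out_b in ((4, 6), (6, 4)):        # bit-width pairs from the MATLAB results
        bits, brams = weight_memory(784, hidden, 10, in_b, out_b)
        print(f"hidden={hidden:5d} in={in_b}b out={out_b}b -> {bits:8d} bits, >= {brams} BRAMs")
```

Because the input layer holds almost all of the weights, the 4-bit input / 6-bit output pair needs noticeably less memory than the 6-bit / 4-bit pair at the same error rate.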

Group members

Boqian Zhang

Xuan Wang

Supervisors

Dr. Said Al-Sarawi

Dr. Mark McDonnell

References

[1] J. Tapson and A. van Schaik, "Learning the pseudoinverse solution to network weights," Neural Networks, vol. 45, pp. 94-100, 2013.

[2] A. K. Jain, R. P. W. Duin and J. Mao, "Statistical pattern recognition: A review," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 22, no. 1, Jan. 2000.

[3] Y. LeCun, L. Bottou, Y. Bengio and P. Haffner, "Gradient-based learning applied to document recognition," Proceedings of the IEEE, vol. 86, pp. 2278-2324, 1998.

[4] Xilinx, "Virtex-5 Family Overview," Product Specification, v5.0, Feb. 6, 2009.

[5] Xilinx, "System Generator for DSP: Getting Started Guide," v13.3, Oct. 19, 2011.

[6] Y. LeCun and C. Cortes, "The MNIST database of handwritten digits," <http://yann.lecun.com/exdb/mnist/>.