Difference between revisions of "Projects:2018s1-122 NI Autonomous Robotics Competition"


Revision as of 17:42, 29 August 2018


Supervisors

Dr Hong Gunn Chew

Dr Braden Phillips

Honours Students

Alexey Havrilenko

Bradley Thompson

Joseph Lawrie

Michael Prendergast

Project Description

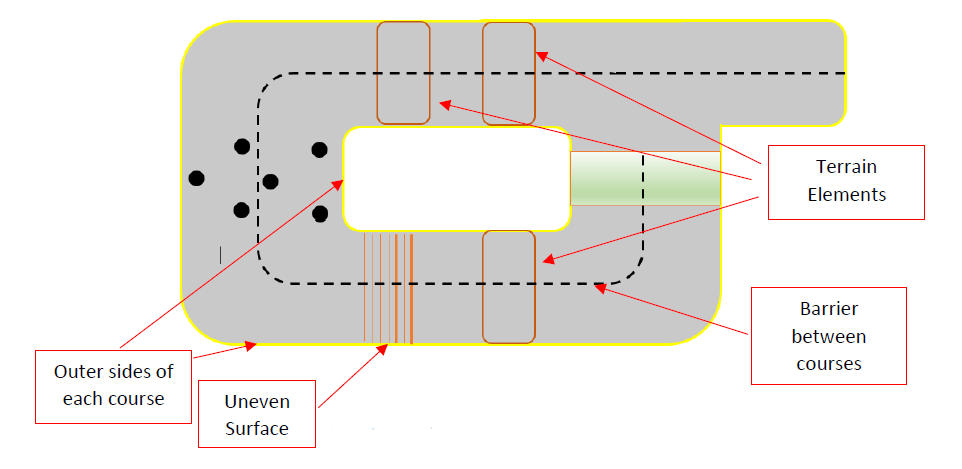

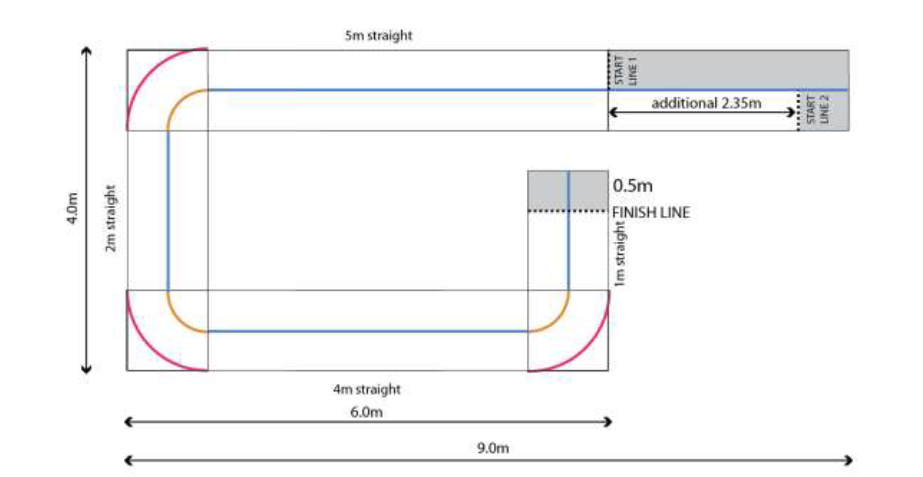

Each year, National Instruments (NI) sponsors a competition in which students showcase their robotics capabilities by building autonomous robots using one of NI's microcontroller and FPGA products. In 2018, the competition focuses on the theme 'Fast Track to the Future', where team robots will perform various tasks on a track that incorporates off-road and slippery terrain, and unforeseen obstacles to be overcome or avoided autonomously. This project investigates the use of the NI myRIO-1900 FPGA/LabVIEW platform for autonomous vehicles. The group will apply for the competition in March 2018, with the competition taking place in September 2018.

Robot Sensors

The robot requires a variety of sensors so that it may "see" track obstacles and boundaries, and so the robot may know its location within the track.

The sensors used:
- Image sensor: Microsoft Kinect
- Range sensors: ultrasonic, time of flight
- Motor rotation sensors: motor encoders

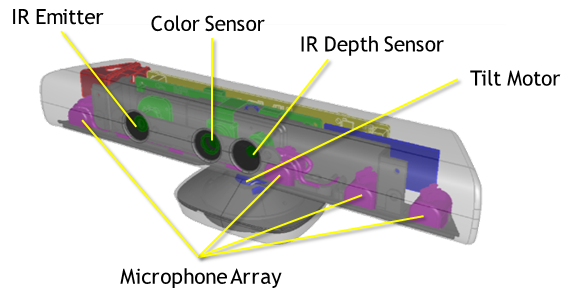

Image Sensor

A camera is one of the sensors used to estimate the robot's position and the location of targets accurately. The Microsoft Xbox 360 Kinect Sensor USB 2.0 camera is an ideal candidate for the robot's vision because of its capabilities, availability, and cost. This camera produces two types of images: RGB images and depth (range) images. The RGB images consist of three channels: red, green, and blue. The depth images have a single channel, in which each pixel value represents the distance from the camera to the first obstruction encountered.

RGB Image Processing

The competition requires the robot to use the colour images for the following purposes:
1. Identify boundaries on the floor that are marked with 50mm wide coloured tape
2. Identify wall boundaries

Because the competition track boundaries are marked on the floor with 50mm wide coloured tape, the RGB images are processed to isolate the tape colour and extract the boundary lines. Before any image processing was attempted on the myRIO, the pipeline was determined using Matlab and its image processing toolbox.

Determining Image Processing Pipeline in Matlab

An overview of the pipeline is as follows:
1. Import/read captured RGB image
2. Convert RGB (Red-Green-Blue) to HSV (Hue-Saturation-Value)
   The HSV representation of images allows us to easily isolate particular colours (Hue range), select colour intensity (Saturation range), and select brightness (Value range)
3. Produce mask around desired colour
4. Erode mask to reduce noise regions to nothing
5. Dilate mask to return mask to correct size
6. Isolate edges of masked colour region
7. Calculate equations of remaining lines and line endpoints
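
Steps 2 to 6 of the pipeline can be illustrated with a minimal sketch. This is not the project's Matlab or myRIO code: it is a pure-Python illustration using only the standard library, and the test image, the yellow tape colour, the hue/saturation/value thresholds, the 3x3 structuring element, and all function names are assumptions chosen for the example. Step 7 (fitting line equations, for instance with a Hough transform) is left out.

```python
import colorsys

def rgb_to_hsv_image(rgb):
    """Step 2: convert rows of (r, g, b) 0-255 tuples to (h, s, v) floats in 0-1."""
    return [[colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in row]
            for row in rgb]

def colour_mask(hsv, h_range, s_min, v_min):
    """Step 3: True where hue is inside h_range and S and V reach their minimums."""
    lo, hi = h_range
    return [[lo <= h <= hi and s >= s_min and v >= v_min for h, s, v in row]
            for row in hsv]

def _morph(mask, combine):
    """Slide a 3x3 window over the mask; positions outside the image are ignored."""
    rows, cols = len(mask), len(mask[0])
    out = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            window = [mask[j][i]
                      for j in range(max(0, y - 1), min(rows, y + 2))
                      for i in range(max(0, x - 1), min(cols, x + 2))]
            out_row.append(combine(window))
        out.append(out_row)
    return out

def erode(mask):
    """Step 4: keep a pixel only if every pixel in its window is set."""
    return _morph(mask, all)

def dilate(mask):
    """Step 5: set a pixel if any pixel in its window is set."""
    return _morph(mask, any)

def edges(mask):
    """Step 6: mask pixels that vanish under erosion form the region's edge."""
    eroded = erode(mask)
    return [[m and not e for m, e in zip(mask_row, eroded_row)]
            for mask_row, eroded_row in zip(mask, eroded)]

# Hypothetical 7x7 test image: grey floor, a 3-pixel-wide yellow tape stripe
# in columns 2-4, and one isolated yellow noise pixel in the top-right corner.
GREY, YELLOW = (90, 90, 90), (230, 200, 30)
image = [[YELLOW if 2 <= x <= 4 else GREY for x in range(7)] for y in range(7)]
image[0][6] = YELLOW

# Thresholds are assumed values that bracket the example yellow (hue ~0.14).
mask = colour_mask(rgb_to_hsv_image(image), h_range=(0.10, 0.20),
                   s_min=0.5, v_min=0.5)
cleaned = dilate(erode(mask))   # erosion removes the noise, dilation restores size
edge = edges(cleaned)

for row in edge:
    print("".join("#" if pixel else "." for pixel in row))
```

In this example the isolated noise pixel is present in `mask` but absent from `cleaned`, while the tape stripe keeps its original width. The edge image retains only the two outer columns of the stripe, which are the pixels a line-fitting step would then work from.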