Difference between revisions of "Projects:2015s1-26 Autonomous robotics using NI MyRIO"

Revision as of 17:26, 20 July 2015

Introduction

This project proposes the construction of an autonomous robot designed around the National Instruments (NI) processing unit called myRIO. The robot will be entered into the National Instruments Autonomous Robotics Competition (NIARC) and will be designed to autonomously navigate an environment with obstacles, collect cargo and deliver it to target terminals. Areas of specific focus include obstacle avoidance, navigation and localisation. Obstacle avoidance will be implemented through ultrasonic sensors, while navigation and localisation will use the A* (A star) algorithm and the Kinect sensor. The project will also draw on project management skills such as budget estimation and risk analysis. The expected outcome is a demonstration that the proposed methods for obstacle avoidance, localisation and navigation work on the competition track. The final robot is due for completion by 16 September 2015.

Supervisors

Dr Hong Gunn Chew

A/Prof Cheng-Chew Lim

Honours Students

Undergraduate Group:

Adam Mai

Adrian Mac

Song Chen

Postgraduate Group:

Bin Gao

Xin Liu

Yiyi Wang

Project Details

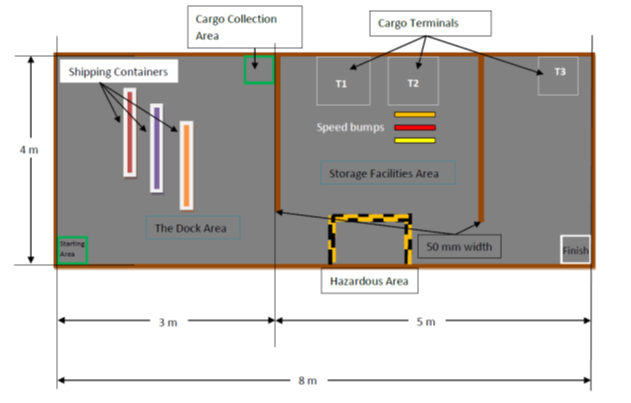

2.1. Aims and Objectives

The aim of this project is to develop an autonomous robot using the National Instruments myRIO together with the graphical programming software LabVIEW. The robot requires intelligence in obstacle avoidance, navigation and pathfinding, localisation, and object handling [14] in order to traverse the environment depicted in Figure 2.4.1. Implementation of these modules will be divided between two groups: the undergraduate group and the postgraduate group. The undergraduates will carry out the majority of the software responsibilities, while the postgraduates will deal with the mechanical aspects of the robot. With regard to the implementation, this project aims to address three hypotheses:

1. The Kinect sensor combined with ultrasonic sensors, or ultrasonic sensors alone, can accomplish obstacle avoidance for both dynamic and static obstacles.

2. The A* algorithm can be implemented in such a way that the robot can freely move in any direction.

3. Odometry used in conjunction with filters and sensors will be able to localise a robot accurately in a defined environment.

With a total budget of $750, this project will further aim to minimise costs and produce a robot that is cost effective and reliable. The overall final product should be able to avoid both static and dynamic obstacles, determine its own route and transport goods to target locations. As this transportation process will be timed during the competition, maximising time efficiency will be important as well as ensuring the safety of the robot’s environment.

2.2. Significance of Competition

This project exhibits the capabilities of the National Instruments myRIO as well as the CompactRIO (refer to Appendix F for images). The CompactRIO is a real-time embedded industrial controller that National Instruments offers to industry [15]. The myRIO serves a similar purpose and acts as a lighter version of this controller for students to use in smaller applications [16]. Consequently, the competition markets these capabilities for National Instruments while allowing students to work with robots. The project illustrates the potential of autonomous robots as a means of cargo transportation in a shipyard. Like previous competitions held by National Instruments, it ultimately displays the benefits of using autonomous robots for industrial applications. The world has already seen the power of autonomous robotics in domestic households, where robotic vacuums such as the Roomba have become increasingly popular [2]. Although such robots are more primitive than the competition robots, the same fundamental idea of demonstrating the power of autonomous robotics carries over to industry. Furthermore, displaying the skills of future engineers emphasises what the world can expect in the field of robotics in the years to come.

2.3. Motivation for Competition

There are three fundamental motivations behind this project. Firstly, on a national scale, allowing universities from around Australia and New Zealand to compete with each other gives aspiring students the chance to apply themselves in the field of robotics. Consequently, it encourages growth and innovation within this field of work [1] and demonstrates the capabilities of this generation’s engineers. Secondly, because this is a competition funded by the university, successful completion of the project will allow universities to showcase not only the capabilities of their students, but also the quality of their education. Lastly, on a personal level, the undergraduate students benefit from the knowledge and experience gained by completing the competition. It allows them to become familiar with integrating different systems that work in conjunction with each other, as well as with project management processes. Ultimately, it allows students to gain an understanding of what is required of a professional engineer.

2.4. Introductory Background

Figure 2.4.1: Map of the National Instruments competition track (sourced from [14])


National Instruments (NI) is a company that produces automated test equipment and virtual instrumentation software. Every year, it sponsors the National Instruments Autonomous Robotics Competition (NIARC) to allow tertiary students to challenge their capabilities in the robotics field. The theme of the competition changes annually, and for 2015 the theme is “Transport and Roll-Out!” [1]. This theme simulates the use of an autonomous robot to collect and transport cargo in a shipyard. As such, this project requires the development of an autonomous robot built around National Instruments’ myRIO device, pictured in Appendix F.

The following describes the procedure the robot will need to follow in the competition. It has been sourced from the NIARC Task and Rules [14], and Figure 2.4.1 illustrates the overall course to be traversed. Firstly, the robot will be required to navigate from the starting location to the cargo collection area. Between these zones will be a maximum of 3 static obstacles (shipping containers), which the robot will need to avoid. Once it reaches the collection area, a team member will simulate a crane and load the cargo onto the robot. Afterwards, the robot will unload cargo at any of the cargo terminals whilst avoiding any obstacles on its way. Here, the robot will need to deal with walls, speed bumps and a dynamically moving obstacle (another vehicle) in the hazardous area. Once it has no more cargo to unload, it will navigate to the finish area. The robot will have a three-minute time limit to complete all of these tasks.

2.5. Technical Background of Robot System

The autonomous robot system will utilise a range of techniques and components in order to implement its various features. The following is a list of the components, techniques and algorithms that will be used within this project. It also includes any relevant theories that apply to the robot system’s implementation.

Image/Ultrasonic/Infrared Sensors: The Xbox Kinect Sensor is an image sensor intended for use with the Xbox gaming console. It consists of an RGB (red-green-blue) camera and a pair of infrared optical sensors. It is able to track human movement and implements depth imaging by triangulation using its dual infrared sensors [4]. Ultrasonic sensors are range detection sensors which work by transmitting high-frequency sound. They calculate distance by measuring the time it takes for reflections to return to the sensor. This calculation relies on the speed of sound, which is approximately 340 m/s at sea level but varies with conditions such as temperature and humidity. Infrared sensors are another form of range detection sensor; they measure infrared light radiating from objects in their field of view. They can also be used to detect colour and calculate the distance of objects. Because they measure the intensity of the light they sense, other light sources such as sunlight can affect their readings.
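The time-of-flight calculation used by ultrasonic sensors can be sketched as follows. This is only an illustration in Python (the project itself is programmed in LabVIEW). The function names are hypothetical, and the linear temperature correction is a common textbook approximation for dry air rather than anything specified in the project.

```python
def speed_of_sound(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius.

    Assumption: the common linear approximation 331.3 + 0.606 * T.
    Humidity is ignored in this sketch.
    """
    return 331.3 + 0.606 * temp_c


def echo_to_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Convert a round-trip echo time (s) into a one-way distance (m)."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return speed_of_sound(temp_c) * echo_time_s / 2.0


if __name__ == "__main__":
    # A 10 ms round trip at 20 degrees Celsius is roughly 1.7 m.
    print(round(echo_to_distance(0.010), 3))
```

Because the speed of sound changes with temperature, the same echo time maps to slightly different distances on a cold and a warm day, which is one reason the readings need calibration on the competition track.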

Data Fusion: Data fusion is a theory and technique applied to a system in order to synthesise all of its raw sensor data into a common form [12]. It makes use of conversion and transformation formulas in order to do this. Its implementation becomes particularly important when a system utilises multiple sensors and needs to translate all of this information to make a decision. Data fusion ultimately gives the output values more significance, as it improves the meaning of the data [12].
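As one small illustration of bringing sensor data into a common form, the sketch below converts range readings from differently mounted sensors into (x, y) points in a shared robot frame. The `SensorMount` type, the mounting positions and the function name are all hypothetical. The source does not describe the robot's actual sensor layout or its fusion formulas.

```python
import math
from typing import NamedTuple, Tuple


class SensorMount(NamedTuple):
    """Hypothetical description of where a range sensor sits on the robot."""
    x: float        # sensor position in the robot frame (m)
    y: float
    heading: float  # direction the sensor faces (rad, anticlockwise from robot x-axis)


def range_to_robot_frame(mount: SensorMount, distance: float) -> Tuple[float, float]:
    """Project a range reading along the sensor's axis into robot-frame (x, y)."""
    return (mount.x + distance * math.cos(mount.heading),
            mount.y + distance * math.sin(mount.heading))


if __name__ == "__main__":
    front = SensorMount(x=0.10, y=0.0, heading=0.0)
    left = SensorMount(x=0.0, y=0.10, heading=math.pi / 2)
    # Both readings now share one coordinate frame and can be compared directly.
    print(range_to_robot_frame(front, 0.50))
    print(range_to_robot_frame(left, 0.40))
```

Once every reading is expressed in the same frame, later stages such as obstacle avoidance can treat points from different sensors uniformly.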

Image Registration: Image registration is the process of aligning multiple images such that corresponding data between the images are matched to the same coordinates [13]. This technique allows for image stitching of multiple photographs, 3D imaging and depth imaging. It is most notably used in computer vision systems and medical imaging.

Path Planning and Path Following Algorithms: Path planning algorithms determine and plan paths that the robot can traverse to reach a target destination. These include the A* algorithm, which solves for the shortest path [10], and the local tangent bug algorithm, which requires the direction of the target in order to operate [11]. They are to be used in conjunction with path following algorithms to allow the robot to move to a target point.
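A minimal sketch of A* on an occupancy grid is given below to illustrate the idea. It assumes a 4-connected grid with unit step costs and a Manhattan-distance heuristic. These choices, along with the names `a_star` and `Cell`, are illustrative assumptions and not the project's actual map representation or implementation.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def a_star(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest 4-connected path from start to goal, or None.

    grid[r][c] == 1 marks an obstacle; 0 marks free space.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > best_g[current]:
            continue  # stale queue entry; a cheaper route was already found
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None


if __name__ == "__main__":
    track = [[0, 0, 0],
             [1, 1, 0],
             [0, 0, 0]]
    print(a_star(track, (0, 0), (2, 0)))
```

A 4-connected grid restricts motion to four directions. Allowing the robot to move freely in any direction, as the second hypothesis proposes, would need a richer neighbour set or a post-processing step, which this sketch does not attempt.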

Pose: The pose of the robot refers to its position and orientation within an area [21]. It is expressed as coordinates (x, y) relative to the origin of a map (0, 0), together with an angle (°) measured in an anticlockwise direction.

Odometry: Odometry refers to the use of encoders to measure the rotation of a robot’s wheels so that its pose can be estimated [23].
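To show how encoder readings can turn into a pose estimate, the sketch below updates (x, y, heading) from left and right wheel encoder ticks. It assumes a differential-drive robot, which the source does not state, and all names and parameters (`update_pose`, `ticks_per_rev`, `wheel_radius`, `wheel_base`) are hypothetical. The heading is kept in radians for the arithmetic, whereas the text above quotes it in degrees.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x in m, y in m, heading in rad, anticlockwise)


def update_pose(pose: Pose, left_ticks: int, right_ticks: int,
                ticks_per_rev: int, wheel_radius: float, wheel_base: float) -> Pose:
    """Dead-reckoning update for an assumed differential-drive robot."""
    x, y, theta = pose
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick

    d_centre = (d_left + d_right) / 2.0        # distance moved by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change in heading

    # Use the heading halfway through the step to approximate the arc travelled.
    mid_heading = theta + d_theta / 2.0
    x += d_centre * math.cos(mid_heading)
    y += d_centre * math.sin(mid_heading)
    theta = (theta + d_theta) % (2.0 * math.pi)
    return (x, y, theta)


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    # One full revolution of both wheels drives straight ahead.
    pose = update_pose(pose, 100, 100, ticks_per_rev=100,
                       wheel_radius=0.05, wheel_base=0.20)
    print(pose)
```

Errors from wheel slip and encoder resolution accumulate with every update, which is why the third hypothesis pairs odometry with filters and additional sensors.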

Simultaneous Localisation and Mapping (SLAM) Algorithms: SLAM algorithms localise a robot and map its surrounding area at the same time. They are generally used with visual systems to create 3D environment models [22].

Feature Extraction: Feature extraction refers to the classification of objects in an image by extracting specific features of the image and comparing them using a pattern classifier [21][25]. For example, it allows a robot to distinguish between a wall and an obstacle.