Difference between revisions of "Projects:2018s1-103 Improving Usability and User Interaction with KALDI Open-Source Speech Recogniser"

Revision as of 15:52, 20 October 2018

Project Team

Students

- Shi Yik Chin

- Yasasa Saman Tennakoon

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Abstract

This project aims to refine and improve the capabilities of KALDI (an Open Source Speech Recogniser). This will require:

- Improving the current GUI's flexibility

- Introducing new elements or replacing older elements in the GUI for ease of use

- Including a methodology that users (of any skill level) can use to improve or introduce Language or Acoustic models into the software

- Refining current Language and Acoustic models in the software to reduce the Word Error Rate (WER)

- Introducing a neural network in the software to reduce the Word Error Rate (WER)

- Introducing a feedback loop into the software to reduce the Word Error Rate (WER)

- Introducing Binarized Neural Networks into the training methods to reduce training times and increase efficiency

This project will involve Deep Learning algorithms related to Automatic Speech Recognition, software development in C++, and performance evaluation using the Word Error Rate formula. Very little hardware will be involved throughout the project.
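As an illustration of the performance measure mentioned above, the sketch below computes the Word Error Rate in its commonly used form, WER = (S + D + I) / N, where S, D and I are the numbers of substituted, deleted and inserted words and N is the number of words in the reference transcript. It is written in Python for brevity and is not taken from the project's code. The function name `word_error_rate` and the example sentences are assumptions made for this example.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (S + D + I) / N from a word-level edit distance.

    Hypothetical helper for illustration; not part of KALDI or the project GUI.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One deletion ("the") and one substitution ("on" -> "off") over 4 words.
    print(word_error_rate("turn the microphone on", "turn microphone off"))  # 0.5
```

Because insertions are counted, the value can exceed 1.0 when the hypothesis is much longer than the reference.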

Introduction

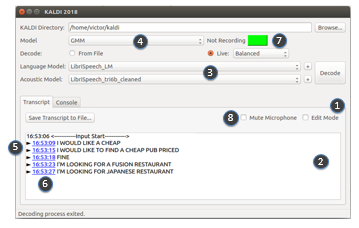

KALDI is an open source speech transcription toolkit intended for use by speech recognition researchers. The software allows newly developed speech transcription algorithms to be integrated and used. Its usability is limited, however, because it requires complex scripting languages and operating-system-specific commands. In this project, a Graphical User Interface (GUI) has been developed that makes the software easier to interact with and allows non-technical individuals to use it. The GUI supports the selection of different language and acoustic models, and transcription either from a file or from live input (live decoding). In addition, two newly trained models have been added: one uses Gaussian Mixture Models, while the other uses a Neural Network. The first was selected as a benchmark for performance evaluation, while the second demonstrates improved transcription accuracy.

Background

Automatic Speech Recognition (ASR)

Automatic Speech Recognition (ASR) is the process of converting audio into text [1]. In general, ASR proceeds through the following stages:

- Feature representation

- Mapping the features to phonemes through the Acoustic Model (AM)

- Mapping the phonemes to words through the Dictionary Model (Lexicon)

- Constructing sentences from the words through the Language Model (LM)

The final three stages are collectively known as 'Decoding', as illustrated in the diagram provided.
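The decoding stages listed above can be sketched with a deliberately tiny example. Everything in it is hypothetical: the frame labels, the lookup tables standing in for the acoustic model, lexicon and language model, and the function names are all assumptions made for illustration. Feature extraction is assumed to have already happened, and the frames are assumed to be grouped per word. A real recogniser such as KALDI uses statistical or neural models and a search over many hypotheses instead of lookups.

```python
from itertools import product

# Hypothetical toy models (assumptions for illustration only).
ACOUSTIC_MODEL = {"f1": "HH", "f2": "AY", "f3": "DH", "f4": "EH", "f5": "R"}
LEXICON = {
    ("HH", "AY"): ["hi", "high"],
    ("DH", "EH", "R"): ["there", "their"],
}
LANGUAGE_MODEL = {  # bigram scores; "<s>" marks the start of the sentence
    ("<s>", "hi"): 0.6,
    ("<s>", "high"): 0.4,
    ("hi", "there"): 0.7,
    ("hi", "their"): 0.1,
    ("high", "there"): 0.2,
    ("high", "their"): 0.1,
}


def map_to_phonemes(frames):
    """Acoustic model stage: map each feature frame to a phoneme."""
    return tuple(ACOUSTIC_MODEL[frame] for frame in frames)


def map_to_words(phonemes):
    """Lexicon stage: list the words that share this pronunciation."""
    return LEXICON[phonemes]


def build_sentence(candidates_per_word):
    """Language model stage: pick the word sequence with the highest score."""
    best_words, best_score = (), -1.0
    for words in product(*candidates_per_word):
        score, previous = 1.0, "<s>"
        for word in words:
            # Unseen bigrams get a small floor score instead of zero.
            score *= LANGUAGE_MODEL.get((previous, word), 1e-6)
            previous = word
        if score > best_score:
            best_words, best_score = words, score
    return " ".join(best_words)


def decode(frames_per_word):
    """Run the three decoding stages on frames already grouped per word."""
    candidates = [map_to_words(map_to_phonemes(f)) for f in frames_per_word]
    return build_sentence(candidates)


if __name__ == "__main__":
    print(decode([["f1", "f2"], ["f3", "f4", "f5"]]))  # hi there
```

In this toy the lexicon alone cannot choose between words that sound the same, such as "there" and "their"; the language model scores resolve that ambiguity.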

Research and Development

Results

Improvement On GUI

- Editable transcript

- Interactive display window

- Ability to choose AM/LM

- Audio recording & playback

- Timestamp usage on utterances

- Recording status indicator

- Microphone mute/unmute control

- Detailed documentation of decoding session