Projects:2018s1-108 Machine Learning Multi-Spectral Simulation

Revision as of 13:25, 22 October 2018

Introduction

This project aims to develop a machine learning system for generating simulated real-world environments from satellite imagery. The generated environments will be incorporated into VIRSuite, a multi-spectral real-time scene generator. The system will need to generate material properties that allow VIRSuite to render the environment realistically from the visible to the long-wave infrared spectrum. It will also need to regenerate existing environments as new satellite images become available, but does not need to be real-time or closed-loop. Secondary phases of the project include building a validation process that checks that the generated environments match their real-world counterparts across all spectra. The available satellite imagery has a resolution of up to 0.3 m per pixel, and the generated environments should reflect this. This project requires students to gain knowledge of deep and convolutional machine learning technologies, develop and adapt machine learning techniques for satellite imagery (GIS data), and conduct validation experiments using multi-spectral knowledge and real-life data.

Image Segmentation

In order to identify objects in the imagery, the objects must first be separated. This is done by partitioning the imagery into regions. A region merging approach, based on the techniques described in [1], is used to perform the image segmentation. The image is first oversegmented using a low-level method; candidate low-level segmentation algorithms include the watershed transform, Felzenszwalb’s graph-based segmentation, and simple linear iterative clustering (SLIC). A region adjacency graph (RAG) and a nearest neighbour graph (NNG) are then created from the oversegmented regions.
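
As an illustration of how the adjacency structure of the RAG can be read off a label image once a low-level method has produced one, here is a minimal sketch. The function name `build_rag` is hypothetical, the oversegmentation itself (watershed, Felzenszwalb or SLIC) is not shown, and the sketch assumes two regions are neighbours when any of their pixels touch horizontally or vertically (4-connectivity).

```python
def build_rag(labels):
    """Return the region adjacency graph of a 2-D label image.

    `labels` is a list of equal-length rows of integer region labels, as
    produced by a low-level oversegmentation. The result maps each region
    label to the set of labels of its neighbouring regions.
    """
    rows, cols = len(labels), len(labels[0])
    rag = {}
    for r in range(rows):
        for c in range(cols):
            here = labels[r][c]
            rag.setdefault(here, set())  # keep isolated regions as nodes too
            # Check only the right and lower neighbours so every pair of
            # touching pixels is examined exactly once (4-connectivity).
            for rr, cc in ((r, c + 1), (r + 1, c)):
                if rr < rows and cc < cols:
                    there = labels[rr][cc]
                    if there != here:
                        rag[here].add(there)
                        rag.setdefault(there, set()).add(here)
    return rag


# Four regions in a 3 x 4 label image; regions 0 and 3 only touch diagonally.
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
]
rag = build_rag(labels)
print(rag)
```

Under the 4-connectivity assumption, regions 0 and 3 are not adjacent because they meet only at a corner; switching to 8-connectivity would also add the diagonal neighbours.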

RAG and NNG

A RAG is an undirected graph consisting of nodes and edges. Each node represents a region in the image, and two nodes are connected by an edge if their regions are adjacent. The weight of an edge is the similarity distance between the two regions, which combines the spectral, texture and shape feature distances. An NNG is a directed graph that can be constructed from the RAG by keeping, for each node, only its lowest-weight edge. Each node therefore has an edge pointing to its most similar neighbour. If two nodes point to each other, an NNG cycle is formed.
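
The NNG construction just described can be sketched as follows, assuming the edge weights have already been computed. The names `build_nng` and `nng_cycles` are hypothetical, and breaking ties by the smaller neighbour label is an assumption of this sketch rather than something the method specifies.

```python
def build_nng(weights):
    """Build a nearest neighbour graph from a weighted RAG.

    `weights` maps each undirected RAG edge, stored as frozenset({u, v}), to
    its similarity distance (lower means more similar). Each node keeps only
    its lowest-weight edge, so the result maps node -> most similar neighbour.
    """
    best = {}
    for edge, w in weights.items():
        u, v = tuple(edge)
        for src, dst in ((u, v), (v, u)):
            # Compare (weight, label) so ties go to the smaller label.
            if src not in best or (w, dst) < best[src]:
                best[src] = (w, dst)
    return {node: dst for node, (w, dst) in best.items()}


def nng_cycles(nng):
    """Return the node pairs that point to each other (NNG cycles)."""
    return {tuple(sorted((u, v))) for u, v in nng.items() if nng.get(v) == u}


weights = {
    frozenset({0, 1}): 0.2,
    frozenset({0, 2}): 0.5,
    frozenset({1, 3}): 0.3,
    frozenset({2, 3}): 0.4,
}
nng = build_nng(weights)
print(nng, nng_cycles(nng))
```

Here node 3 points to node 1, but node 1 points to node 0, so only the pair (0, 1) forms an NNG cycle.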

Merging Process

Create the RAG and NNG, and calculate the edge weights based on the similarity distance described below.

Spectral Feature

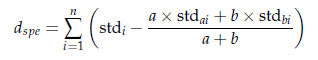

Spectral feature distance can be calculated by considering standard deviations of pixels in the regions. The following equation is used to calculate spectral feature distance.

Here $a$ and $b$ denote the areas of regions $a$ and $b$, $\mathrm{std}_{ai}$ and $\mathrm{std}_{bi}$ are the standard deviations of regions $a$ and $b$ in band $i$, and $\mathrm{std}_i$ is the standard deviation of regions $a$ and $b$ combined in band $i$.
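
Because the equation is only available as an image, the sketch below assumes it takes the area-weighted "increase in heterogeneity" form used in multiresolution region merging, $\sum_i \left[(a+b)\,\mathrm{std}_i - \left(a\,\mathrm{std}_{ai} + b\,\mathrm{std}_{bi}\right)\right]$, with equal band weights and population standard deviations. Check it against the equation image before relying on it; the function name `spectral_distance` is hypothetical.

```python
from statistics import pstdev


def spectral_distance(pixels_a, pixels_b):
    """Hypothetical spectral feature distance between two regions.

    `pixels_a` and `pixels_b` are lists of pixel vectors (one value per band).
    ASSUMED form: sum over bands i of
        (a + b) * std_i - (a * std_ai + b * std_bi)
    where a and b are the region areas in pixels, and population standard
    deviations are used (also an assumption).
    """
    a, b = len(pixels_a), len(pixels_b)
    total = 0.0
    for i in range(len(pixels_a[0])):
        band_a = [p[i] for p in pixels_a]
        band_b = [p[i] for p in pixels_b]
        std_ai = pstdev(band_a)
        std_bi = pstdev(band_b)
        std_i = pstdev(band_a + band_b)  # both regions combined
        total += (a + b) * std_i - (a * std_ai + b * std_bi)
    return total


# Two uniform one-band regions: identical values cost nothing to merge,
# different values increase the heterogeneity of the merged region.
print(spectral_distance([[10], [10]], [[10], [10]]))
print(spectral_distance([[10], [10]], [[20], [20]]))
```

Under this assumed form the distance is never negative: the variance of the merged region is at least the area-weighted mean of the two regional variances, so merging similar regions costs little and merging dissimilar ones costs more.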

Texture Feature

The nonsubsampled contourlet transform (NSCT) is used to obtain the texture features. The texture feature distance is obtained through the following steps:

- Perform principal component analysis (PCA) on the multispectral image. The first principal component is taken as the input to NSCT.

- Perform NSCT on the input image with $k$ scales. The number of directional decompositions at scale $m$ is $d_m = 2^m$ for $1 \le m \le k$. This generates $k$ bandpass images, one per scale, denoted $I_1, I_2, \ldots, I_k$. For each bandpass image $I_m$ ($m = 1, 2, \ldots, k$), $d_m$ directional subimages are generated, denoted $I_m^1, I_m^2, \ldots, I_m^{d_m}$.

- From the directional subimages, form a texture feature vector for every pixel.

- Perform fuzzy C-means clustering on all texture feature vectors. Suppose $g$ clusters are created; the cluster centres are denoted $CC_1, CC_2, \ldots, CC_g$.
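
The first step, taking the first principal component of the multispectral image as the NSCT input, can be sketched without external libraries. The function name is hypothetical, and power iteration on the band covariance matrix stands in for a full eigen-solver. The image is assumed to be flattened into a list of per-pixel band vectors, to be reshaped back into 2-D before NSCT.

```python
import math


def first_principal_component(pixels, iterations=100):
    """Project multispectral pixels onto their first principal component.

    `pixels` is a list of pixel vectors (one value per band), e.g. an image
    flattened row by row. Returns one scalar per pixel.
    """
    n, bands = len(pixels), len(pixels[0])
    means = [sum(p[j] for p in pixels) / n for j in range(bands)]
    centred = [[p[j] - means[j] for j in range(bands)] for p in pixels]
    cov = [[sum(x[i] * x[j] for x in centred) / n for j in range(bands)]
           for i in range(bands)]
    # Power iteration; the start vector is assumed not to be orthogonal
    # to the leading eigenvector.
    vec = [1.0] * bands
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(bands))
               for i in range(bands)]
        norm = math.sqrt(sum(v * v for v in nxt))
        if norm == 0:  # all pixels identical: there is no variance to capture
            break
        vec = [v / norm for v in nxt]
    return [sum(x[j] * vec[j] for j in range(bands)) for x in centred]


# Two perfectly correlated bands: the first component captures all variance.
pc = first_principal_component([[1, 2], [2, 4], [3, 6]])
print(pc)
```

The sign of a principal component is arbitrary, so an implementation should not depend on whether bright areas map to large or small values.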
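
For the feature-vector step, with $d_m = 2^m$ directional subimages at each of $k$ scales, each pixel's vector has $\sum_{m=1}^{k} 2^m = 2^{k+1} - 2$ entries. The sketch below only stacks subimages that have already been computed; NSCT itself is not implemented. Using the absolute coefficient value as each entry is an assumption, since the exact per-pixel feature is not specified above, and the function name is hypothetical.

```python
def pixel_feature_vectors(subbands):
    """Stack NSCT directional subimages into one feature vector per pixel.

    `subbands[m - 1]` holds the d_m = 2**m directional subimages of scale m,
    each a 2-D list of coefficients with the image's shape.
    ASSUMPTION: each feature is the absolute coefficient value at that pixel.
    """
    for m, scale in enumerate(subbands, start=1):
        if len(scale) != 2 ** m:
            raise ValueError(f"scale {m} should have {2 ** m} subimages")
    rows, cols = len(subbands[0][0]), len(subbands[0][0][0])
    features = []
    for r in range(rows):
        for c in range(cols):
            # Scale 1 subimages first, then scale 2, and so on.
            features.append([abs(img[r][c]) for scale in subbands
                             for img in scale])
    return features


# Dummy constant subimages for k = 2 scales on a 2 x 3 image.
k = 2
subbands = [
    [[[-(m * 10 + d)] * 3 for _ in range(2)] for d in range(2 ** m)]
    for m in range(1, k + 1)
]
feats = pixel_feature_vectors(subbands)
print(len(feats), feats[0])
```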
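
The clustering step can be illustrated with a minimal fuzzy C-means loop that alternates the standard membership and centre updates; the returned centres play the role of $CC_1, \ldots, CC_g$. The function name, the initialisation from evenly spaced input points, and the default fuzzifier value are assumptions. The parameter `m` is the usual FCM fuzzifier and is unrelated to the scale index $m$ used in the NSCT step.

```python
import math


def fuzzy_c_means(points, g, m=2.0, iterations=100, tol=1e-6):
    """Minimal fuzzy C-means clustering of feature vectors.

    points: list of equal-length feature vectors; g: number of clusters;
    m > 1: fuzzifier controlling how soft the memberships are.
    Returns (centres, memberships), where memberships[p][j] is the degree to
    which point p belongs to cluster j (each row sums to 1).
    """
    n, dim = len(points), len(points[0])
    # Initialise the centres with g evenly spaced input points (an assumption).
    centres = [list(points[(j * n) // g]) for j in range(g)]
    memberships = []
    for _ in range(iterations):
        # Membership update: u_pj = 1 / sum_k (d_pj / d_pk) ** (2 / (m - 1)).
        memberships = []
        for x in points:
            d = [math.dist(x, c) for c in centres]
            if 0.0 in d:
                # The point sits on a centre: give it crisp membership there.
                hits = [1.0 if dj == 0.0 else 0.0 for dj in d]
                row = [h / sum(hits) for h in hits]
            else:
                row = [1.0 / sum((dj / dk) ** (2.0 / (m - 1.0)) for dk in d)
                       for dj in d]
            memberships.append(row)
        # Centre update: mean of all points weighted by u_pj ** m.
        new_centres = []
        for j in range(g):
            w = [row[j] ** m for row in memberships]
            total = sum(w)
            new_centres.append(
                [sum(wp * x[t] for wp, x in zip(w, points)) / total
                 for t in range(dim)])
        shift = max(math.dist(a, b) for a, b in zip(centres, new_centres))
        centres = new_centres
        if shift < tol:
            break
    return centres, memberships


points = [[0.0], [0.2], [0.1], [10.0], [10.2], [9.9]]
centres, u = fuzzy_c_means(points, g=2)
print(sorted(c[0] for c in centres))
```

Unlike k-means, every feature vector keeps a graded membership in every cluster, which is what makes the clustering "fuzzy"; for well-separated groups such as the example, the memberships are close to 0 or 1.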

Project Members

Supervisors

Students

References

[1] D. Liu, L. Han, X. Ning, and Y. Zhu, “A segmentation method for high spatial resolution remote sensing images based on the fusion of multifeatures”, IEEE Geoscience and Remote Sensing Letters, vol. 15, no. 8, pp. 1274–1278, 2018, ISSN: 1545-598X. DOI: 10.1109/LGRS.2018.2829807