Projects:2015s2-216 Feral Cat Detector

1 Motivation

The Australian Government ranks feral cats as an invasive species for two main reasons. Firstly, feral cats are exceptional hunters and pose a significant threat to the survival of many native species, including small mammals, birds and reptiles. They have been implicated in extinctions of Australian native animals and have contributed to the failure of reintroduction programs for endangered species (e.g. numbat, bilby). About 80 endangered and threatened species are at risk from feral cat predation in Australia, according to Australia’s Environment Protection & Biodiversity Conservation Act (1999) and threat abatement plan (2008). Secondly, feral cats pose a serious health risk to humans, livestock and native animals as carriers of diseases such as toxoplasmosis and sarcosporidiosis. Cat-related toxoplasmosis can cause debilitation, miscarriage and congenital birth defects in humans and other animals. Feral cats would also represent a high-risk reservoir for exotic diseases such as rabies if an outbreak were to occur in Australia.

2 Approach

Inspired by the work of Yu et al. (2013), we have built a camera-trap system equipped with thermal and IR cameras, as displayed in the following figures. Triggered by motion, the system automatically takes grey-scale pictures of objects walking across the camera's field of view. Image data is periodically transferred from the disk on the ARM device to a PC, where a MATLAB implementation of the classification algorithm runs on the collected image set to detect feral cats, or any other animals of interest in the local area, and distinguish them from the rest. The key idea of the detection algorithm is to generate one global feature that represents the input image in a high-dimensional space. In that space, a classification model separates the feature points of different categories with a hyperplane, so new samples can be classified according to which side of the hyperplane they fall on.

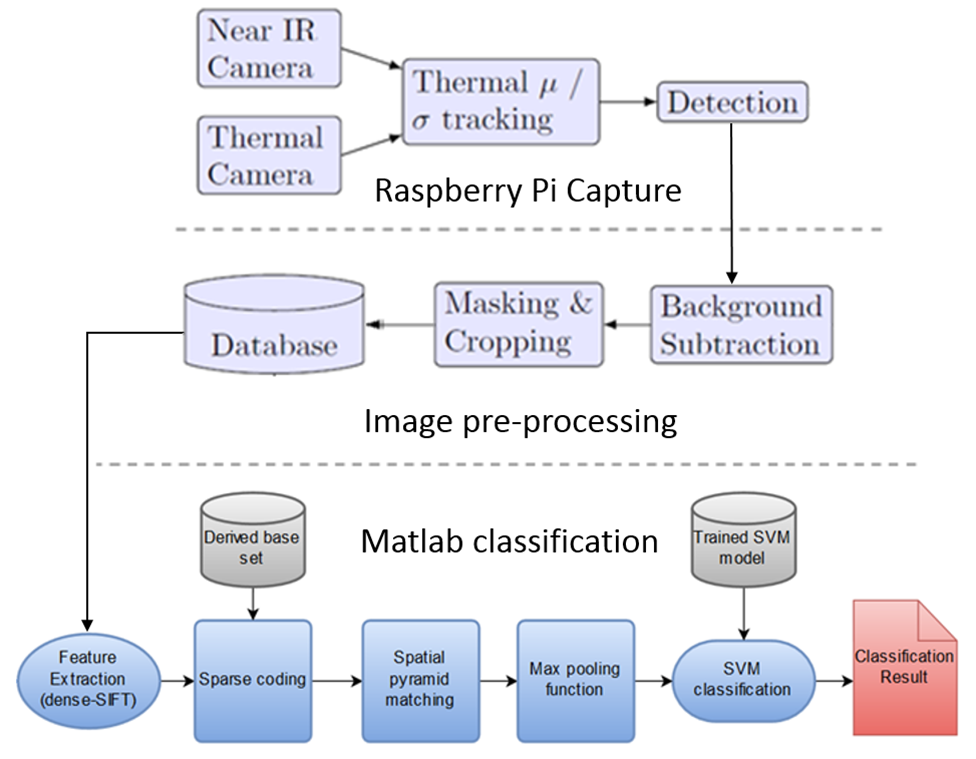

3 System Overview

As shown above, the system process can be broken down into the following steps:
• Data collection in the field using a Raspberry Pi 2
• In-field real-time processing of thermal data to detect animals
• Image capture using thermal and infrared cameras
• Background tracking using data fusion of thermal and IR images
• Background subtraction, segmentation and cropping to isolate the animal
• Feature extraction using dense SIFT
• Dictionary matching, sparse coding and spatial-pyramid matching to create one global feature per image
• Classification against a database of captured images using a support-vector machine
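The background subtraction and cropping step can be illustrated with a minimal sketch. It assumes simple frame differencing against a single static background image, which is a simplification and not the thermal/IR data-fusion tracking used in the project. The function name `crop_foreground` and the `threshold` value are hypothetical.

```python
def crop_foreground(frame, background, threshold=30):
    """Crop the bounding box of pixels that differ from the background.

    frame, background: equally sized 2-D lists of grey levels (0-255).
    threshold: hypothetical minimum absolute difference that counts as
    foreground. Returns None when no pixel exceeds the threshold.
    """
    height, width = len(frame), len(frame[0])
    # Rows and columns that contain at least one foreground pixel.
    rows = [r for r in range(height)
            if any(abs(frame[r][c] - background[r][c]) > threshold
                   for c in range(width))]
    cols = [c for c in range(width)
            if any(abs(frame[r][c] - background[r][c]) > threshold
                   for r in range(height))]
    if not rows:
        return None
    top, bottom = rows[0], rows[-1]
    left, right = cols[0], cols[-1]
    return [row[left:right + 1] for row in frame[top:bottom + 1]]


# Toy scene: a flat background with a bright object on rows 1-2, columns 2-3.
background = [[10] * 6 for _ in range(5)]
frame = [row[:] for row in background]
frame[1][2] = 200
frame[2][3] = 180
crop = crop_foreground(frame, background)
```

Only the cropped region would then be passed on to feature extraction, so the descriptors describe the animal and not the surrounding scene.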

Our camera trap, as shown below, can easily be set up in a variety of environmental conditions, since it does not require much space and can be adjusted to specific requirements.

The layout of the embedded system is shown on the right-hand side. The attached devices include the IR and thermal cameras (capturing devices), an IR lamp (illuminates when a detection registers), batteries (in-field power supply) and a USB stick (storage for captured images).

4 Image Representation and Classification

4.1 Feature Extraction

First, dense-SIFT feature descriptors are extracted from patches sampled densely across the image to serve as local features.
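The idea of dense sampling can be sketched as follows. This is a simplified illustration and not a full SIFT implementation: each patch gets a single 8-bin gradient-orientation histogram, whereas a real SIFT descriptor concatenates such histograms over a 4x4 grid of cells. The patch size, step and function names are assumptions.

```python
import math


def dense_patch_origins(height, width, patch=16, step=8):
    """Top-left corners of all patches on a regular grid that fit the image."""
    return [(y, x)
            for y in range(0, height - patch + 1, step)
            for x in range(0, width - patch + 1, step)]


def orientation_histogram(image, y0, x0, patch=16, bins=8):
    """L2-normalised gradient-orientation histogram of one patch.

    Each interior pixel votes for the bin of its gradient direction,
    weighted by its gradient magnitude (central differences).
    """
    hist = [0.0] * bins
    for y in range(y0 + 1, y0 + patch - 1):
        for x in range(x0 + 1, x0 + patch - 1):
            dx = image[y][x + 1] - image[y][x - 1]
            dy = image[y + 1][x] - image[y - 1][x]
            magnitude = math.hypot(dx, dy)
            angle = math.atan2(dy, dx) % (2 * math.pi)
            hist[int(angle / (2 * math.pi) * bins) % bins] += magnitude
    norm = math.sqrt(sum(h * h for h in hist))
    return [h / norm for h in hist] if norm > 0 else hist


# Toy image: a horizontal intensity ramp, so every gradient points along +x.
image = [[float(x) for x in range(32)] for _ in range(32)]
origins = dense_patch_origins(32, 32)
descriptors = [orientation_histogram(image, y, x) for y, x in origins]
```

Because the patches overlap and cover the whole image, every region contributes local features, regardless of whether it contains distinctive keypoints.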

4.2 Sparse Coding

Next, sparse coding is applied to the extracted dense-SIFT features so that each one is represented as a weighted linear combination of a few basis vectors from a learned dictionary (the basis vectors can be seen as stimulus patterns from the dataset).
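As a minimal sketch of this step, the code below computes the sparse code of one descriptor with the iterative shrinkage-thresholding algorithm (ISTA). It assumes the common L1-regularised formulation, minimising 0.5*||x - D a||^2 + lam*||a||_1 over the weights a, and a dictionary that is already learned. The solver choice, the value of `lam` and the toy dictionary are assumptions, not the project's actual settings.

```python
def soft_threshold(value, t):
    """Shrink value towards zero by t; values within [-t, t] become 0."""
    if value > t:
        return value - t
    if value < -t:
        return value + t
    return 0.0


def sparse_code(x, atoms, lam=0.1, iterations=2000):
    """ISTA for min_a 0.5*||x - sum_k a[k]*atoms[k]||^2 + lam*||a||_1.

    x: descriptor (list of floats); atoms: dictionary as a list of basis
    vectors, each the same length as x.
    """
    # The squared Frobenius norm bounds the largest eigenvalue of D^T D,
    # so 1 / lipschitz is a safe step size.
    lipschitz = sum(v * v for atom in atoms for v in atom)
    codes = [0.0] * len(atoms)
    for _ in range(iterations):
        recon = [sum(a * atom[i] for a, atom in zip(codes, atoms))
                 for i in range(len(x))]
        residual = [xi - ri for xi, ri in zip(x, recon)]
        codes = [
            soft_threshold(
                a + sum(r * v for r, v in zip(residual, atom)) / lipschitz,
                lam / lipschitz)
            for a, atom in zip(codes, atoms)
        ]
    return codes


# Overcomplete toy dictionary: three unit-norm atoms in two dimensions.
atoms = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
codes = sparse_code([1.2, 1.6], atoms)  # the input equals 2 * atoms[2]
```

In this toy case the L1 penalty drives the weights of the first two atoms to zero and keeps only the third, which is the sparsity the representation relies on.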

4.3 Spatial Pyramid Matching

To preserve the spatial information of the image and allow the image data to be classified linearly in feature space, we apply a spatial pyramid matching kernel to the image. It partitions the image into a number of spatial subregions at several scales; the sparse codes that fall within each subregion are pooled, and the pooled vectors are combined to generate one global feature.
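The pooling described above can be sketched as follows, assuming max pooling of absolute code values over 1x1, 2x2 and 4x4 grids, a common choice in sparse-coding pipelines. The pooling function, the pyramid levels and the name `spatial_pyramid_pool` are assumptions.

```python
def spatial_pyramid_pool(codes, positions, height, width, levels=(1, 2, 4)):
    """Max-pool sparse codes in every cell of every pyramid level.

    codes: one sparse-code vector per local descriptor.
    positions: matching (y, x) descriptor centres in pixels.
    levels: grid size per level; (1, 2, 4) gives 1 + 4 + 16 = 21 cells.
    Returns the concatenation of all pooled cell vectors.
    """
    dim = len(codes[0])
    feature = []
    for cells in levels:
        pooled = [[0.0] * dim for _ in range(cells * cells)]
        for code, (y, x) in zip(codes, positions):
            row = min(int(y * cells / height), cells - 1)
            col = min(int(x * cells / width), cells - 1)
            cell = pooled[row * cells + col]
            for k, value in enumerate(code):
                cell[k] = max(cell[k], abs(value))
        for cell in pooled:
            feature.extend(cell)
    return feature


# Two descriptors in a 16x16 image, pooled over a 1x1 and a 2x2 grid.
codes = [[0.0, 1.5], [-0.7, 0.0]]
positions = [(2, 2), (12, 12)]
feature = spatial_pyramid_pool(codes, positions, 16, 16, levels=(1, 2))
```

The coarsest level summarises the whole image, while the finer levels record where in the image each dictionary element responded, so the global feature keeps a rough spatial layout.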

4.4 Classification

We use a binary support vector machine classifier as the classification model. At the training stage, it learns a hyperplane that divides the global features of the two categories in feature space. New examples captured in the field can then be classified into one of the two classes depending on which side of the hyperplane they fall on.
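A minimal sketch of a linear binary SVM is shown below. It assumes training by full-batch sub-gradient descent on the regularised hinge loss, which is only one of several ways to fit an SVM and is not necessarily the solver used in the project's implementation. The hyperparameters, the toy data and the label convention (+1 for the class of interest, -1 for everything else) are assumptions.

```python
def train_linear_svm(samples, labels, lam=0.01, lr=0.1, epochs=200):
    """Minimise lam/2*||w||^2 + mean(hinge loss) by sub-gradient descent.

    samples: feature vectors; labels: +1 or -1 per sample.
    Returns the hyperplane parameters (w, b).
    """
    dim = len(samples[0])
    n = len(samples)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wk for wk in w]
        grad_b = 0.0
        for x, y in zip(samples, labels):
            margin = y * (sum(wk * xk for wk, xk in zip(w, x)) + b)
            if margin < 1:  # the sample violates the margin
                for k in range(dim):
                    grad_w[k] -= y * x[k] / n
                grad_b -= y / n
        w = [wk - lr * gk for wk, gk in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 or -1 depending on the side of the hyperplane x falls on."""
    return 1 if sum(wk * xk for wk, xk in zip(w, x)) + b >= 0 else -1


# Toy two-dimensional "global features" for the two classes.
samples = [[2.0, 2.0], [3.0, 3.0], [2.0, 3.0],
           [-2.0, -2.0], [-3.0, -3.0], [-2.0, -3.0]]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(samples, labels)
new_predictions = [predict(w, b, [4.0, 3.0]), predict(w, b, [-3.0, -4.0])]
```

Once `w` and `b` are learned, classifying a new image costs only one dot product with its global feature.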