Projects:2016s1-146 Autonomous Robotics using NI MyRIO

Introduction

Supervisors

Dr Hong Gunn Chew

A/Prof Cheng-Chew Lim

Honours Students

Thomas Harms

James Kerber

Mitchell Larkin

Chao Mi

Project Details

Each year, National Instruments (NI) sponsors a competition in which students showcase their robotics capabilities by building autonomous robots using one of NI's microcontroller and FPGA products. In 2016, the competition theme is 'Hospital of the future': team robots must complete tasks such as navigating to different rooms in a hospital setting to deliver medicine while avoiding various obstacles. This project investigates the use of the NI FPGA/LabVIEW platform for autonomous vehicles. The group will apply for the competition in March 2016. More information about the NI robotics competition can be found at http://australia.ni.com/ni-arc. LabVIEW programming knowledge would be a key advantage in this project. This project builds on the platform designed and built in 2015.

Project Proposal

To have a better understanding of the project and to distribute the workload amongst the team, the robot system has been broken down into the following sections:

Mechanical Construction, Artificial Intelligence (Software), Robot Vision and Range Finding, Localisation, Movement system, Hardware design and installation, Medicine dispenser unit, Pathfinding/Navigation Control, Object detection, Mapping, Movement tracking.

By creating a set of clearly defined work activities, each team member will be able to design and develop the required functionality for their respective sections.

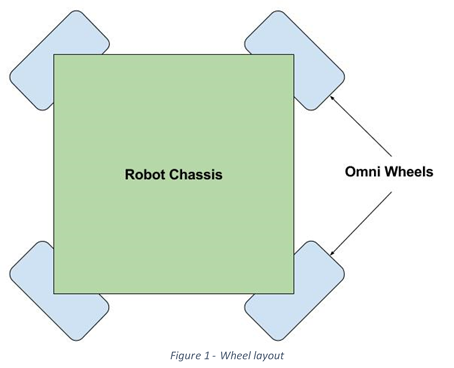

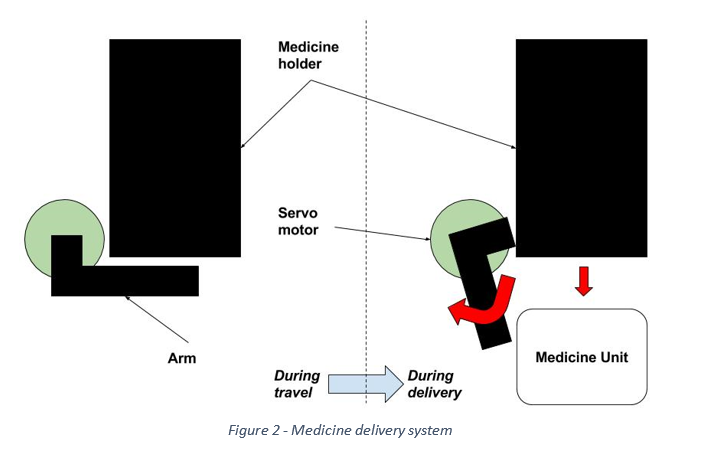

The mechanical construction will focus on the design and assembly of the robot itself. This process will involve deciding on the robot dimensions, motors, sensors and medicine delivery system. Research and development to date has focused on the movement system, and a design using four omnidirectional wheels is being prototyped. This prototype will demonstrate a robot that can move in any direction without needing to rotate before translating. Figure 1 shows the layout that will be used for this robot design. The mechanism for delivering the medicine units shall be similar to the design used by last year’s University of Adelaide team. Figure 2 demonstrates how this medicine delivery shall work.
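The ability to translate in any direction without rotating first can be illustrated with a short inverse-kinematics sketch. It rests on assumptions not stated in the project text: four omni-wheels spaced at 90° intervals around the robot centre, each driving tangentially, at a hypothetical distance `WHEEL_OFFSET_M` from the centre. The actual layout in Figure 1 may differ.

```python
import math

# Assumed geometry (hypothetical values, not taken from the project).
WHEEL_ANGLES_DEG = (45.0, 135.0, 225.0, 315.0)  # wheel positions around the centre
WHEEL_OFFSET_M = 0.15  # distance from the robot centre to each wheel


def wheel_speeds(vx, vy, omega):
    """Return the rim speed (m/s) each wheel must produce.

    vx, vy: desired body velocity in m/s, in the robot frame.
    omega:  desired rotation rate in rad/s (counter-clockwise positive).
    """
    speeds = []
    for angle_deg in WHEEL_ANGLES_DEG:
        a = math.radians(angle_deg)
        # Project the velocity of the wheel's mounting point onto the
        # wheel's drive direction, the tangent (-sin a, cos a).
        speeds.append(-math.sin(a) * vx + math.cos(a) * vy + WHEEL_OFFSET_M * omega)
    return speeds
```

Under these assumptions, calling `wheel_speeds(1.0, 0.0, 0.0)` gives a pure sideways-free translation along the robot's x-axis with no rotation term, while `wheel_speeds(0.0, 0.0, 1.0)` drives every wheel at the same speed to spin on the spot.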

The vision and range finding systems for this robot shall consist of an RGB-D camera and an array of ultrasonic sensors. The camera shall be used to implement object detection, while the sensors shall measure proximity to the surroundings. Working in conjunction, these systems shall allow collision avoidance to be achieved. The localisation system shall be realised through the use of optical flow sensors. These sensors shall measure the apparent motion of the surface below the robot in order to determine the direction in which the robot is moving, providing localisation functionality.
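As a rough illustration of how the ultrasonic readings might feed collision avoidance, the sketch below scales the commanded speed by the nearest measured range. The thresholds and the function name are hypothetical, and this is not necessarily the approach used by the team.

```python
STOP_DISTANCE_M = 0.10  # hypothetical: halt if anything is closer than this
SLOW_DISTANCE_M = 0.50  # hypothetical: start slowing inside this range


def speed_scale(ranges_m):
    """Map a list of ultrasonic ranges (metres) to a speed multiplier in [0, 1]."""
    nearest = min(ranges_m)
    if nearest <= STOP_DISTANCE_M:
        return 0.0
    if nearest >= SLOW_DISTANCE_M:
        return 1.0
    # Linear ramp between the stop and slow thresholds.
    return (nearest - STOP_DISTANCE_M) / (SLOW_DISTANCE_M - STOP_DISTANCE_M)
```

The multiplier would be applied to the commanded velocity before it reaches the motors, so the robot slows as it approaches an obstacle and stops before contact.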

The delivery system, combined with the ease of movement provided by the wheel layout, shall allow the robot to traverse the competition track quickly and efficiently and deliver the medicine units to the required areas. The use of the mentioned sensors shall provide collision avoidance and localisation, resulting in a robot that can safely and reliably complete the goals required for the competition. In conclusion, this design aims to achieve fully autonomous behaviour in a hospital setting.

Project Progress

Movement System

Current Progress

After initial research early in the project, the group decided on the four-wheeled setup. If this system can be built, it should allow the robot to move about the map quickly and so dispense medicine faster.

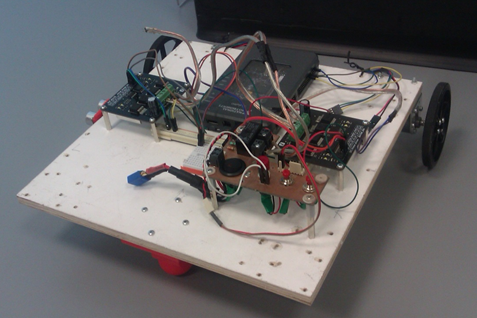

An initial prototype has been constructed on a single 300 mm x 300 mm wooden base, to which various wheels and sensors have been attached. The wheel configuration in this prototype is a system of two bi-directional wheels coupled with a ball bearing slider. The omni-wheeled design was not implemented first because the team wanted a simple prototype for testing all the systems. In addition, there was a considerable delay in acquiring the omni-wheels needed.

Manual control was implemented soon after the prototype robot was developed, allowing the team to test the sensors and their effectiveness while the robot is moving. In addition, a form of automatic control has been developed that detects obstacles and steers around them.

Future Progress

The next step is the design and construction of a new prototype for testing the four-wheel configuration. It will be separate from the current prototype, enabling the team to compare the two designs and agree on the configuration to be used for the final robot. The new prototype will consist of a 300 mm x 300 mm aluminium or Perspex base and the four-wheel omni-wheel design discussed above.

A likely addition to the new prototype is an upgrade to a two-tier design. This will involve an additional 300 mm x 300 mm aluminium or Perspex panel mounted a short distance directly above the first base, providing an upper level onto which various sensors and the medicine delivery system can be mounted.

Localisation System

The localisation system is built on optical flow and computer vision. Optical flow provides a high update rate but is susceptible to noise and drift. Computer vision observations are registered to the competition track; while their update rate is much lower, they do not accumulate drift. A hybrid system was built to obtain a fast update rate from the optical flow system while counteracting drift errors with the computer vision system. Both components are described in the following sections.
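One simple way to combine a fast but drifting estimate with a slow absolute one is a complementary-style correction, sketched below. The project text does not say which fusion method was used, so the class name, the `vision_gain` parameter and the decision to ignore heading are all assumptions made for illustration.

```python
class HybridLocaliser:
    """Dead-reckons on optical-flow deltas and corrects with vision fixes."""

    def __init__(self, x=0.0, y=0.0, vision_gain=0.5):
        self.x = x
        self.y = y
        # Hypothetical blend factor: 1.0 trusts a vision fix completely,
        # 0.0 ignores vision and relies on optical flow alone.
        self.vision_gain = vision_gain

    def on_flow(self, dx, dy):
        """High-rate update: add a measured displacement (metres, track frame)."""
        self.x += dx
        self.y += dy

    def on_vision(self, x_fix, y_fix):
        """Low-rate update: move the estimate part-way towards the absolute fix."""
        self.x += self.vision_gain * (x_fix - self.x)
        self.y += self.vision_gain * (y_fix - self.y)
```

Between vision fixes the estimate follows optical flow at its full update rate; each fix then removes a fraction of whatever drift has accumulated since the last one.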

Optical Flow Sensors

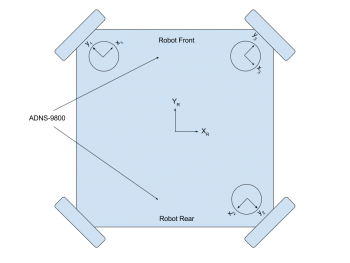

Three ADNS-9800 optical flow sensors were used in the project. These illuminate the surface with a laser, which gives higher accuracy than other illumination methods. They can also track speeds of travel up to 3.81 metres per second, which comfortably exceeds the robot's top speed (approximately 1 metre per second). A photo of the sensor module is shown here.
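The speed figures can be checked with some quick arithmetic: 3.81 m/s is 150 inches per second (150 × 0.0254 m), giving a margin of roughly 3.8 times the robot's top speed. The sketch below also shows how raw motion counts could be converted to metres. The function names are hypothetical, and the counts-per-inch (CPI) value depends on how the sensor is configured, so it is passed in rather than assumed.

```python
INCH_M = 0.0254  # metres per inch (exact)


def counts_to_metres(counts, cpi):
    """Convert raw motion counts to metres for a sensor set to `cpi` counts per inch."""
    return counts / cpi * INCH_M


def speed_margin(sensor_max_mps=3.81, robot_max_mps=1.0):
    """Ratio of the sensor's maximum tracking speed to the robot's top speed."""
    return sensor_max_mps / robot_max_mps
```

For example, a reading equal to the configured CPI corresponds to exactly one inch of travel, whatever the CPI setting is.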

Through testing it was found that the maximum clearance between the bottom of the sensor lens and the ground surface is 3 mm. This left little room for bumps in the surface. To keep the sensors clear of bumps and maintain a near-constant height above the surface, the sensors are mounted directly beneath the motors. Whenever a wheel travels over a bump, the sensor is raised with it and therefore clears the bump. To fit the cables attached to the sensor module PCBs, the sensors were mounted with their y-axes pointing out along the axles of the wheels (see the figure below).