Projects:2018s2-270 Autonomous Ground Vehicles Self-Guided Formation Control

Abstract

This project introduces a distributed system that can handle multiple agents at once. Each agent in the system carries its own camera and makes its own decisions. These decisions also depend on communication between the agents: the data exchanged includes current position coordinates, wheel angles, velocities, and the distances from one position to another. By integrating this information, the system determines the best solution for the current scenario and commands each agent to move to its desired position.

Project Team

Group Members:

Liang Xu

Fan Yang

Qiao Sang

Advisors:

Xin Yuan

Yutong Liu

Yuan Sun

Supervisors:

Prof. Peng Shi

Prof. Cheng-Chew Lim

Objectives

Based on what we know so far, the objectives of this project in the first semester can be summarised as follows:

1. Vision-based sensing.

2. Route planning.

3. Formation control.

4. Develop a distributed control system.

5. Integrate all members’ work into the virtual environment and test it.

6. Check for more possible formations.

7. Obstacle avoidance after formation.

8. Transfer from the virtual environment to the physical platform and test.

System Design

At the highest level, the multi-agent system contains four agents. It is a distributed system, which means each agent can make its own decisions. Even with this ability, the four agents still need to communicate with each other about their velocity, current position, destination, and angle. Exchanging this data allows them to reach an agreement so that they can achieve the desired formation. This part was done by Fan.
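The agreement step described above can be illustrated with a consensus-style update. The following is a minimal sketch, not the team’s actual algorithm: it assumes a fully connected network, point-mass agents, and hypothetical names (`consensus_step`, `run_formation`, `gain`). Each agent shares its position minus its slot offset in the formation and nudges itself towards the values it receives from the others.

```python
from typing import List, Tuple

Vec = Tuple[float, float]


def consensus_step(positions: List[Vec], offsets: List[Vec], gain: float = 0.2) -> List[Vec]:
    """One synchronous update using only values the agents exchange with each other."""
    # Each agent's estimate of the formation centre: its position minus its slot offset.
    centres = [(p[0] - d[0], p[1] - d[1]) for p, d in zip(positions, offsets)]
    new_positions = []
    for i, (p, c) in enumerate(zip(positions, centres)):
        # Sum of disagreements with every other agent (fully connected network assumed).
        ex = sum(cj[0] - c[0] for j, cj in enumerate(centres) if j != i)
        ey = sum(cj[1] - c[1] for j, cj in enumerate(centres) if j != i)
        new_positions.append((p[0] + gain * ex, p[1] + gain * ey))
    return new_positions


def run_formation(positions: List[Vec], offsets: List[Vec],
                  steps: int = 50, gain: float = 0.2) -> List[Vec]:
    """Repeat the update until the agents (approximately) agree on a formation centre."""
    for _ in range(steps):
        positions = consensus_step(positions, offsets, gain)
    return positions


# Four agents starting at arbitrary points, asked to form a square of side 2 (illustrative values).
start = [(0.0, 0.0), (5.0, 1.0), (-2.0, 3.0), (1.0, -4.0)]
square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
final = run_formation(start, square)
```

With four agents and a gain of 0.2, the disagreement shrinks by a factor of five per step. For a fully connected network of n agents, the gain needs to stay below 2/n for this update to converge.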

Limited by the tools available to us, we had to forgo some more convenient sensing options, such as an ultrasonic sensor or an infrared distance meter. Instead, we use the camera to establish a coordinate system for measuring the distances between objects and the agents. We also apply image recognition to the camera feed so that each agent can recognise the objects in its “sight”. This information is passed to the processor, where our algorithm provides an optimised path to the agent, and the agent then gives commands to the motors. The description above covers only a single agent.
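The page does not say how the camera measurements are turned into distances. One common approach, shown here purely as an assumed illustration, is the pinhole similar-triangles relation: an object of known physical width appears smaller in the image the further away it is. The function names, the focal length in pixels, and the object width below are all hypothetical.

```python
def distance_from_width(focal_px: float, real_width_m: float, pixel_width: float) -> float:
    """Forward distance (m) to an object of known width, by similar triangles."""
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_px * real_width_m / pixel_width


def object_position(focal_px: float, centre_u: float, real_width_m: float,
                    pixel_width: float, u: float) -> tuple:
    """(forward, lateral) position in the camera frame, in metres.

    centre_u is the image column of the optical axis; u is the column of the
    object's centre. Positive lateral means the object is to the right.
    """
    forward = distance_from_width(focal_px, real_width_m, pixel_width)
    lateral = forward * (u - centre_u) / focal_px
    return forward, lateral


# Illustrative numbers only: a 0.3 m wide object that spans 90 pixels,
# seen by a camera with a 600-pixel focal length and a 640-pixel-wide image.
forward, lateral = object_position(focal_px=600.0, centre_u=320.0,
                                   real_width_m=0.3, pixel_width=90.0, u=420.0)
```

In practice the focal length in pixels would come from a camera calibration, and the known width from the object recognised in the image.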

To test whether our algorithm works, I implemented a scene in V-REP, like the one in the figure, using the built-in Pioneer3dx model, a two-wheel rover that obeys basic physical laws. On top of that, we developed a motor controller to drive the Pioneer3dx. When Fan’s control algorithm executes, it outputs a set of trajectory coordinates; V-REP takes those coordinates in and feeds them to the respective rovers, and the motor controller then spins the wheels by calculating the velocity and angle needed to get from the starting position to the destination. After validation in the virtual environment, testing was transferred from V-REP to a physical model developed by Anh. Just as V-REP runs the output of Fan’s algorithm, the real rovers also take in a set of coordinates; given two different points, the on-board Arduino controller drives the rover to the desired position, eventually completing the formation.
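The motor controller step described above, turning a start pose and a destination into wheel speeds, can be sketched for a generic two-wheel differential-drive rover. This is an assumed proportional go-to-goal controller, not the project’s implementation; the wheel dimensions, gains, and function names are placeholders, and no V-REP or Arduino calls are involved.

```python
import math

WHEEL_RADIUS = 0.1  # metres, placeholder value
WHEEL_BASE = 0.3    # distance between the two wheels in metres, placeholder value


def wheel_speeds(pose, goal, k_lin=0.5, k_ang=2.0, tolerance=0.01):
    """Return (left, right) wheel angular speeds in rad/s for pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy = goal[0] - x, goal[1] - y
    distance = math.hypot(dx, dy)
    if distance < tolerance:
        return 0.0, 0.0  # close enough: stop
    # Angle between the current heading and the direction to the goal, wrapped to [-pi, pi].
    error = math.atan2(dy, dx) - theta
    error = math.atan2(math.sin(error), math.cos(error))
    # Drive forward only when roughly facing the goal; turn in proportion to the error.
    v = k_lin * distance * max(0.0, math.cos(error))
    w = k_ang * error
    left = (v - w * WHEEL_BASE / 2.0) / WHEEL_RADIUS
    right = (v + w * WHEEL_BASE / 2.0) / WHEEL_RADIUS
    return left, right


def simulate(pose, goal, dt=0.05, steps=600):
    """Integrate simple differential-drive kinematics with Euler steps."""
    x, y, theta = pose
    for _ in range(steps):
        left, right = wheel_speeds((x, y, theta), goal)
        v = WHEEL_RADIUS * (left + right) / 2.0
        w = WHEEL_RADIUS * (right - left) / WHEEL_BASE
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
    return x, y, theta


end_x, end_y, end_theta = simulate((0.0, 0.0, 0.0), (2.0, 1.0))
```

Feeding the controller one trajectory coordinate after another would move a rover along the path; a real rover would also need speed limits and pose feedback, which this sketch leaves out.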

Final Results

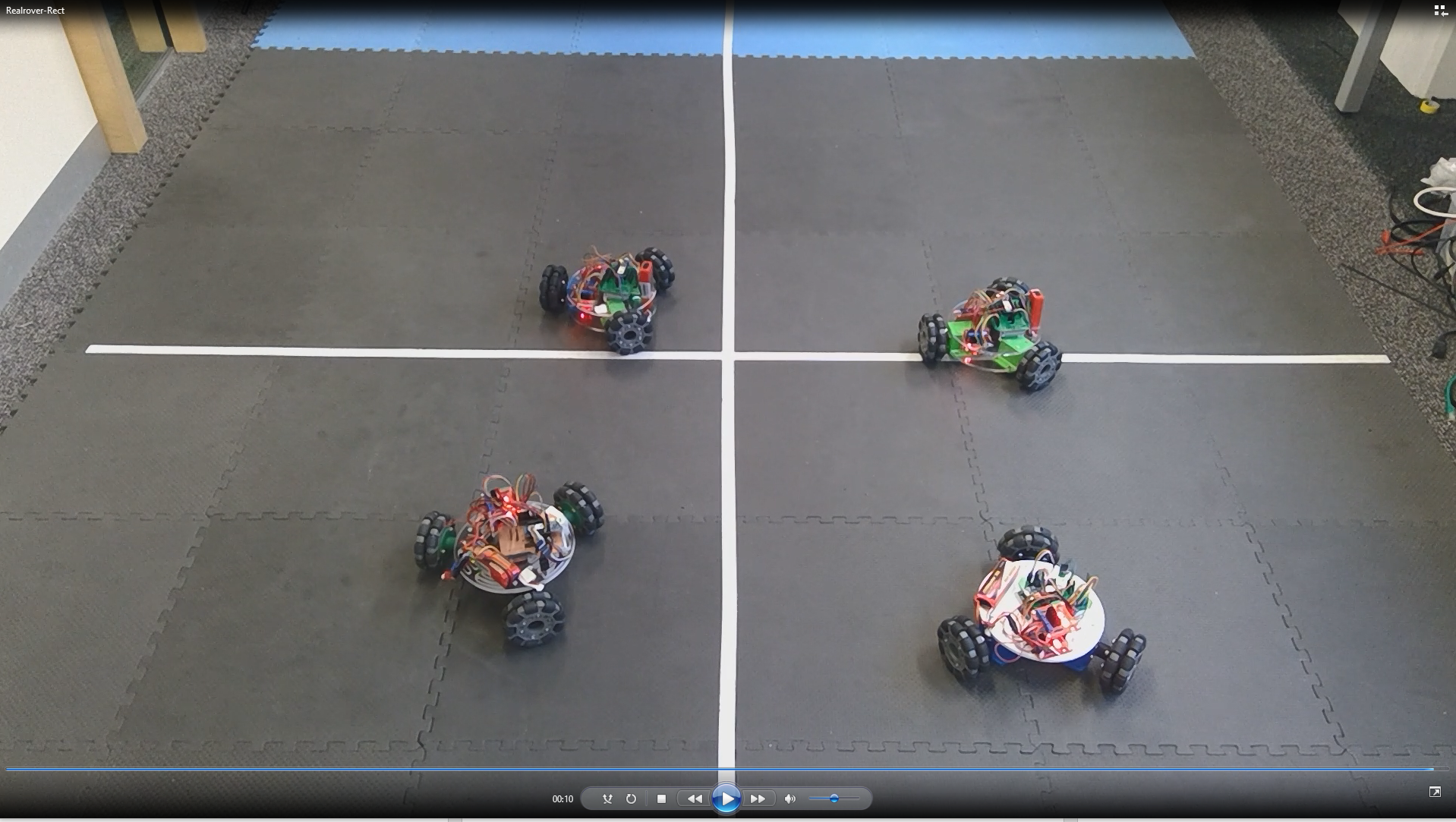

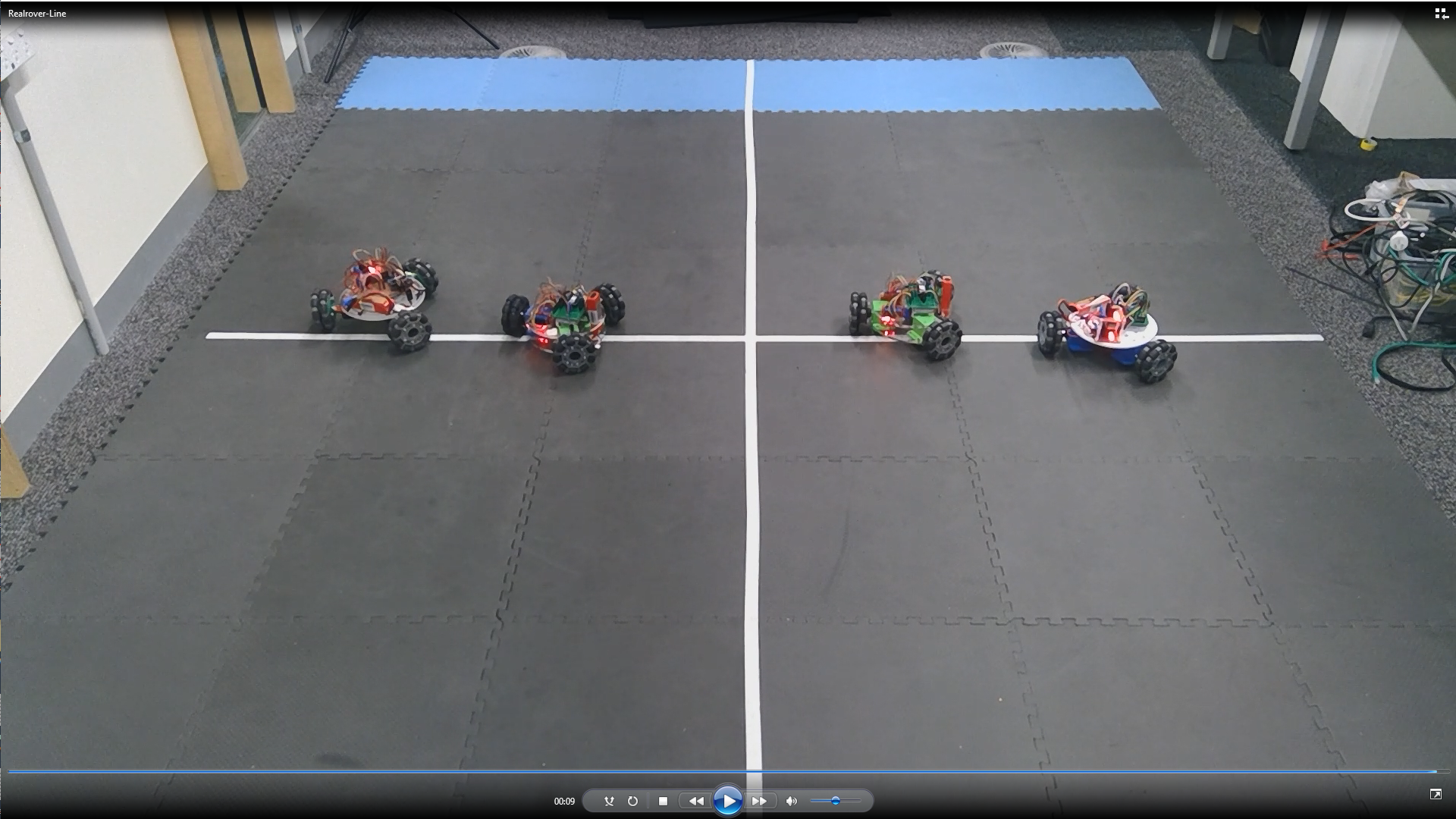

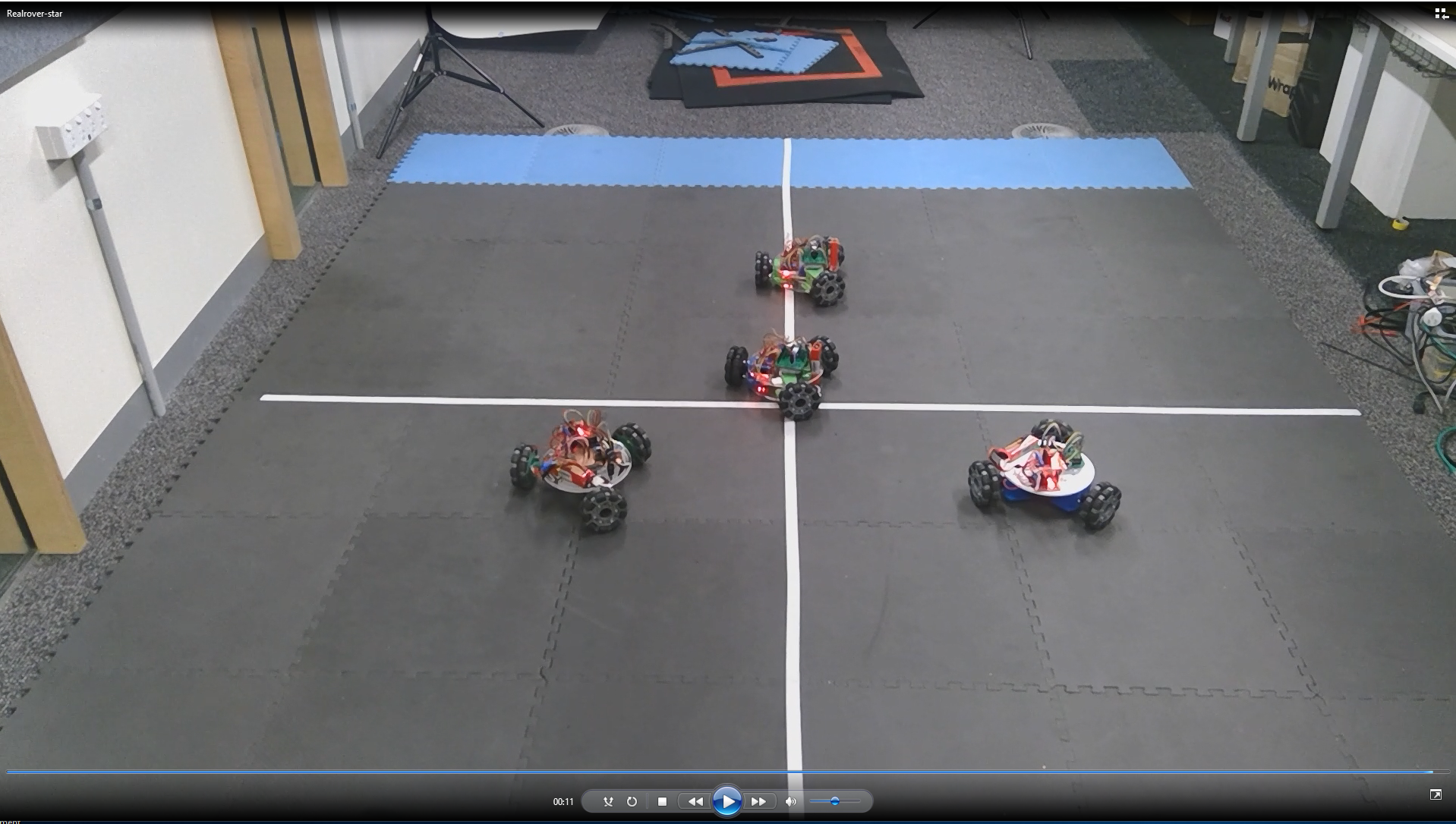

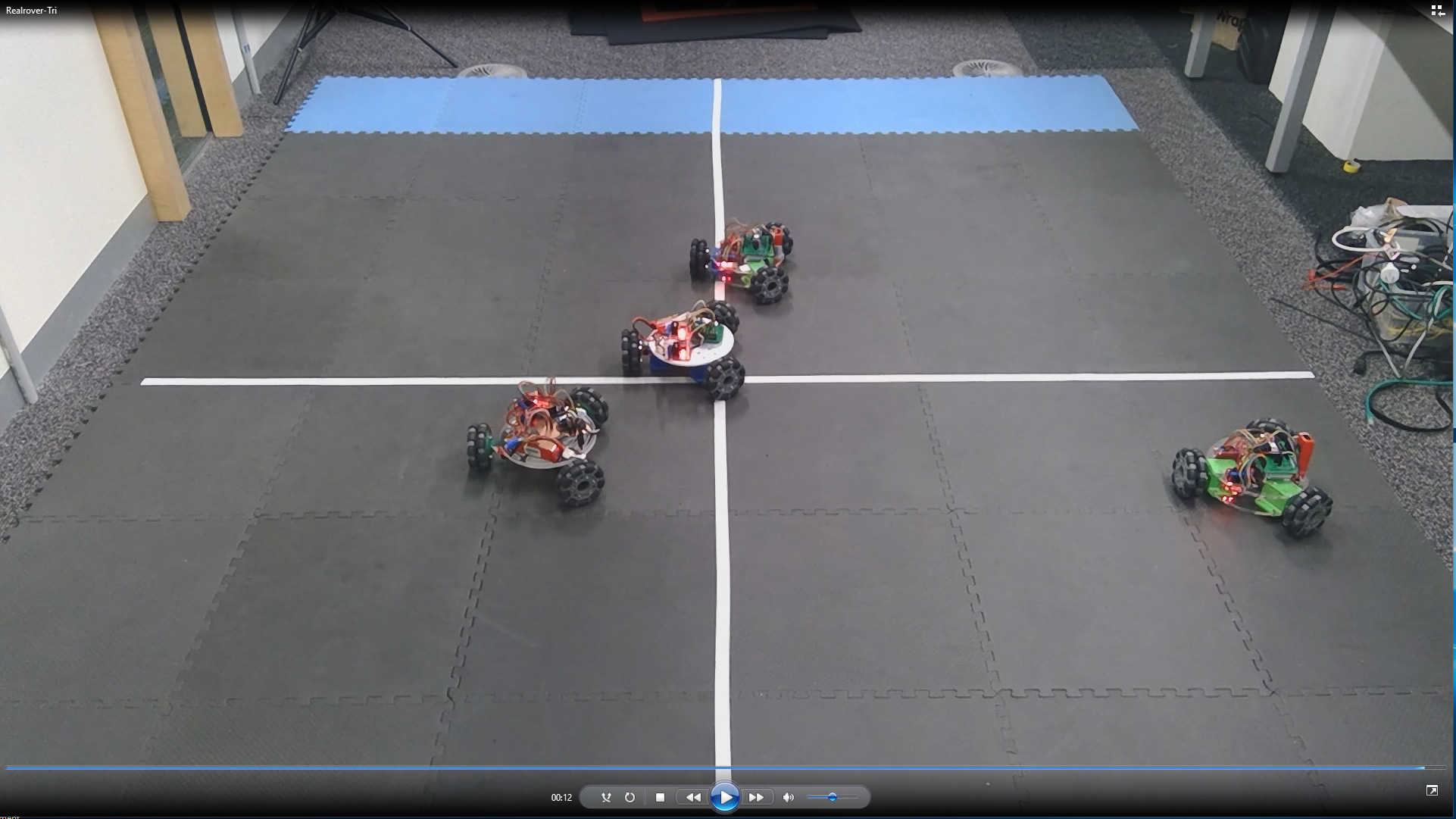

We successfully used four rovers to form multiple formations, as shown above.

We found that the formations available with four rovers are very limited, so we increased the number of rovers to six, which allowed us to produce more formations.

Conclusion

By the end of this project, we had an algorithm capable of formation control: it takes in the desired coordinates and outputs the movement trajectories of the rovers. We ran it in both the virtual environment and the real world. Although the algorithm is real-time and decentralised, we could not make the real rovers operate in real time because of the time required and schedule delays. We were, however, able to run the real-time simulation in the virtual environment, which indicates that our algorithm is also feasible in the real world. In both the virtual and physical environments, we were able to control four rovers to form the shapes we wanted. We also expanded the number of rovers to 9 in the virtual environment and to 6 in the physical environment.