Projects:2019s2-23501 Multi-Drone Tracking and Formation Control Platform

This wiki explores solutions to real-world problems in the domain of multi-drone control and formation through the development of a generalised platform for indoor UAVs in a confined space. It details the implementation of the multi-drone platform through its progressive development and justifies the key decisions made along the way, with a particular focus on the collision avoidance module and rigidbody motion tracking. Dynamic obstacle avoidance is addressed through an artificial potential fields approach with adjustments for various known shortcomings. Reliable rigidbody streaming is achieved through a modified iterative closest point implementation.

Introduction

Over the past decade, the use of UAVs has expanded into industries including defence, consumer services, agriculture, and transport. Despite this growth, several key challenges have hindered the ability of researchers to innovate and provide generalised multi-drone solutions. This project aims to resolve those challenges through the development of a generalised platform for indoor UAVs in a confined space.

Project team

Project students

- Jacob Stollznow

- Alexander Woolfall

Supervisors

- Dr. Hong Gunn Chew

- Dr. Duong Duc Nguyen

Motivation

A key issue in multi-drone development is that each drone type has its own implementation and operation protocols, which adds unnecessary overhead. Algorithms end up being developed against unique interfaces and control schemes that depend on the drone type, and porting a given algorithm across a range of drones is labour-intensive work that does not advance the research itself. As a result, researchers develop sophisticated multi-drone algorithms, backed by theoretical reasoning and simulation results, with no evidence of operation in a generalised physical environment with various drone types.

Another shortcoming in multi-drone development is poor usability for users with little experience flying UAVs. Drones are difficult to control due to their many degrees of freedom and flight parameters, which can overwhelm users and algorithms alike. This creates a hazardous environment in which the user, obstacles, or other drones could be damaged. The safety concerns of flying drones in a physical environment are another factor that limits researchers. Safety constraints are tedious to implement for each algorithm but are necessary to avoid potential damage to expensive drone hardware. Physical constraints can be used to limit harm to the user, but do little to prevent drone-obstacle or drone-drone collisions.

These key issues result in researchers developing multi-drone algorithms with very limited physical testing. If the challenges detailed above are addressed, researchers will be able to offer complete solutions to real-world multi-drone applications, supported by safe and generalised physical testing with minimal implementation adjustments.

Significance

The solution to these key issues will affect two target audiences: researchers and general users. By removing the need for a different platform for each drone type, it allows researchers to implement a general solution applicable to all drone types. This will reduce the overhead associated with separate implementations and the preparation time required for each unique platform. Collision avoidance management will reduce the amount of user implementation required to ensure drones are not damaged in physical testing scenarios. Researchers are then able to focus their energy on implementing their algorithms rather than their own mechanisms for managing potential collisions. Collision management also significantly reduces the risks associated with multi-drone operation, turning a hazardous, high-risk physical environment into one operable by users ranging from trained pilots to high school students.

Objectives

The objectives were developed from the motivation above and specify the essential and desired features of the multi-drone platform:

Required

- Integration of more than one drone type.

- Generalised and robust API to control all drone types as a swarm or individually. The API must support MATLAB.

- Collision avoidance management for drones.

- Researcher-specific features, such as extensive feedback and visualisation with intuitive control of drones.

Extension

- API support in Python and C++.

- Reduction in the setup and preparation time required for each drone implementation.

Platform

User API

The user API was designed to make it as easy as possible to create new scripts for the platform. Drone formation scripts are created in C++ simply by including the user_api header, and in MATLAB by including the user API files in the application's PATH. From there, drone scripts can be developed by calling the functions which make up this API. The following paragraphs refer directly to the C++ user API. They apply to the MATLAB API as well, as it was a goal to keep the API as similar as possible between languages.

Initialisation and Termination

The first step of any drone script is to initialise the API program. This is done by calling the mdp::initialise(...) function, passing in the desired update rate and the name of the program. To conclude a program, the script calls the mdp::terminate() function, which automatically sends all in-flight drones to their designated home locations to land.

All drones active on the platform are identified by a user script through the mdp::id class. This class contains the numeric id of the drone on the server and an identifying name, which is supplied as the rigidbody tag through Optitrack. To receive a list of all active drones on the server, the script calls the function mdp::get_all_rigidbodies(), which returns a list of mdp::ids representing each active drone. This function refers to rigidbodies because it also returns ids for other rigidbodies identified by the platform, such as any declared and marked non-drone obstacles.

Movement and Retrieval of Drone Properties

The API distils the movement of drones down to two functions, one for setting a drone's new desired position and another for setting a drone's desired velocity. mdp::set_drone_position(...) takes an mdp::id referencing a specific drone and an instance of mdp::position_msg indicating the desired end point and the duration the drone should take to arrive. mdp::set_drone_velocity(...) similarly takes an mdp::id referencing a specific drone and an instance of mdp::velocity_msg indicating the desired velocity and the duration for which the drone should hold it. By default, a new call to one of these functions overrides all previous calls. For instance, if a drone is performing a velocity command when it receives a new position command, it abandons the velocity command immediately and performs the position command. It is possible, however, to have the system queue commands instead, so that each command is only performed once the previous one has completed. This option can be enabled during script initialisation.
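The difference between the default override behaviour and the optional queueing behaviour can be sketched as follows. This is a minimal illustration rather than the platform's implementation: `Command`, `CommandBuffer`, and the `queueing_enabled` flag are hypothetical names, and the buffer only models which commands remain outstanding.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>

// Hypothetical stand-in for a position or velocity command.
struct Command {
    std::string kind;  // "position" or "velocity"
    double duration;   // seconds the command should take or be held for
};

// Hypothetical per-drone buffer of outstanding commands.
class CommandBuffer {
public:
    explicit CommandBuffer(bool queueing_enabled) : queueing_(queueing_enabled) {}

    // Override mode (default): the new command replaces everything outstanding.
    // Queue mode: the new command waits behind the earlier ones.
    void push(const Command& cmd) {
        assert(cmd.duration >= 0.0);
        if (!queueing_) outstanding_.clear();
        outstanding_.push_back(cmd);
    }

    // Fetches the next command to perform; returns false if none is outstanding.
    bool pop_next(Command& out) {
        if (outstanding_.empty()) return false;
        out = outstanding_.front();
        outstanding_.pop_front();
        return true;
    }

    std::size_t size() const { return outstanding_.size(); }

private:
    bool queueing_;
    std::deque<Command> outstanding_;
};
```

With queueing disabled, pushing a velocity command followed by a position command leaves only the position command outstanding. With queueing enabled, both remain and are returned in the order they were issued.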

The API also allows the script to read the current position and velocity of all rigidbodies tracked by the drone management server. This information is retrieved by calling either mdp::get_position(...) or mdp::get_velocity(...) and passing in an instance of mdp::id referencing the desired rigidbody.

Higher Level Flight Commands

The user API also exposes common drone flight functionality such as hovering, taking off from the ground, and landing. These functions are called through mdp::cmd_takeoff(...), mdp::cmd_land(...), and mdp::cmd_hover(...). The platform keeps track of a home position for each drone. This home position is designated on drone initialisation as the starting x and y coordinates of the drone. The user API can interact with this location by setting it to a new position, retrieving it, or calling a specified drone to return to and land at its home position. This functionality is available through the following functions: mdp::set_home(...), mdp::get_home(...), and mdp::goto_home(...).

Drone Flight States

During operation, each drone on the platform is continuously identified as being in one of the following five flight states: LANDED, HOVERING, MOVING, DELETED, and UNKNOWN. The state is determined by the rigidbody class and is discussed in that section. The drone's identified state can be retrieved through the user API at any point during operation by calling mdp::get_state(...) and passing in the mdp::id of the rigidbody. The get state function operates by looking up a ROS parameter representing the current state of the requested drone. By using this method instead of ROS services, the API is able to return the drone's current state almost immediately, with minimal retrieval delay.

Control Functions

To save the user from implementing their own quantised control loop with operating system sleep functions, the user API includes the mdp::spin_until_rate() function. This function returns once one 'rate' period has passed since the last call to mdp::spin_until_rate(). The 'rate' value is given by the user during script initialisation as a frequency in hertz, generally between 10 Hz and 100 Hz. For example, if the script's designated rate is 10 Hz (0.1 seconds between quantised 'frames') and it has been 0.06 seconds since the last call to mdp::spin_until_rate(), calling mdp::spin_until_rate() will cause the script to wait for 0.04 seconds to hit the desired update rate of 10 Hz and then return so that the script can continue operation. If 0.13 seconds have passed since the last call to mdp::spin_until_rate(), the function returns immediately because the desired update period has already elapsed.
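The timing arithmetic in the example above can be written as a small helper. This is only a sketch of the calculation: `remaining_wait_s` is a hypothetical name, and the real mdp::spin_until_rate() blocks for this duration rather than returning it.

```cpp
#include <algorithm>
#include <cassert>

// Seconds still to wait before the next frame, given the loop rate in hertz
// and the time already elapsed since the previous call.
// Hypothetical helper used for illustration only.
double remaining_wait_s(double rate_hz, double elapsed_s) {
    assert(rate_hz > 0.0);
    const double period_s = 1.0 / rate_hz;       // 10 Hz gives a 0.1 s period
    return std::max(0.0, period_s - elapsed_s);  // never wait a negative time
}
```

At 10 Hz, an elapsed time of 0.06 seconds leaves roughly 0.04 seconds to wait, while an elapsed time of 0.13 seconds leaves nothing to wait, matching the two cases described above.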

Combining state retrieval with spinning allows the creation of a very useful control function, mdp::sleep_until_idle(...), which spins the program using mdp::spin_until_rate() until the specified drone enters the HOVERING, LANDED, or DELETED state. This lets a script wait until the previous command has completed before continuing execution. For example, if the script makes a call to set a drone's position over 4 seconds and then calls mdp::sleep_until_idle(...) on that same drone, the script will pause execution until the drone has entered an idle state after completing the 4 second set position call. With this system, a simple waypoint program can be created by interleaving drone set position commands with calls to the sleep until idle function.
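The wait loop described above can be sketched as follows. The names here are hypothetical stand-ins: `get_state` plays the role of mdp::get_state(...) for one drone, `spin_once` plays the role of mdp::spin_until_rate(), and the returned frame count exists only to make the behaviour observable.

```cpp
#include <cassert>
#include <functional>

// The five flight states named on this page.
enum class FlightState { LANDED, HOVERING, MOVING, DELETED, UNKNOWN };

// A drone counts as idle once it is hovering, landed, or deleted.
bool is_idle(FlightState s) {
    return s == FlightState::HOVERING || s == FlightState::LANDED ||
           s == FlightState::DELETED;
}

// Hypothetical sketch of the blocking wait: poll the state once per frame
// and return how many frames were spent waiting.
int wait_until_idle(const std::function<FlightState()>& get_state,
                    const std::function<void()>& spin_once) {
    assert(get_state && spin_once);
    int frames = 0;
    while (!is_idle(get_state())) {
        spin_once();  // wait out the remainder of the current frame
        ++frames;
    }
    return frames;
}
```

A waypoint script would then alternate between issuing a position command and calling this wait, so that each waypoint is only sent once the drone has settled.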

Drone Management Server

The drone management server is the central system operating the multi-drone platform and contains the list of active rigidbodies currently in operation. The server also has a primary role in receiving user API commands and distributing these commands to the relevant rigidbodies operating on the platform. Platform initialisation, shutdown, and platform-wide operations such as emergency requests are performed at the level of the drone management server.

The drone management server holds a list of rigidbody classes, where each rigidbody class represents a single drone in operation on the platform. Whereas the rigidbody conducts all functionality relevant to a single drone, the drone management server conducts functionality that affects multiple drones at once. The adding and removing of drones is handled through services that exist as part of the drone management server class.

The drone management server has been through many iterations since the start of development. It was originally responsible for processing almost all user API messages, but much of this functionality has since been shifted onto the rigidbodies themselves to make use of asynchronous operation. The server's main task in handling user API messages is now to act as an intermediary, translating the encoded standard ROS messages into an easier to handle custom message that is eventually received by the rigidbody classes.

Rigidbody class

The rigidbody base class represents a single rigidbody operating on the platform. It holds the base functionality that applies to all rigidbodies regardless of their drone 'type'; examples of drone types are Crazyflie, Tello, and a virtual drone (vflie). A drone type is defined on the platform as a class that inherits from the rigidbody class and builds upon its functionality. The rigidbody class holds the vast majority of platform functionality for the generic flight of drones. The following is a list of the major functionality it handles:

- Per drone initialisation of various ROS structures

- Safe shutdown of the drone

- Timely reading and conversion of API commands received from the drone management server into functions implemented by drone wrapper classes.

- Handling and conversion of 'relative' movement options of the user API

- Validation and trimming of input API commands such that drone wrappers are not overwhelmed by vast numbers of incoming identical commands.

- Handling of per drone data including current pose and velocity, home positions, identification data, etc.

- Recalculation of drone velocities when new motion capture information is received.

- Publishing of a number of per drone information streams to relevant ROS topics.

- Queuing and de-queuing of API commands such that multiple commands may be completed one after another in order.

- General logging of drone activity.

- Handling and detection of drone flight states.

- Command safety timeouts such that a drone cannot be locked hovering in flight.
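As an illustration of the velocity recalculation item in the list above, one simple way to derive a velocity from motion capture data is a finite difference between two consecutive pose samples. The page does not state which method the rigidbody class uses, so this is an assumption, and `Sample`, `Velocity`, and `estimate_velocity` are hypothetical names.

```cpp
// Hypothetical pose sample: position in metres with a timestamp in seconds.
struct Sample {
    double x, y, z, t;
};

// Hypothetical velocity estimate in metres per second.
struct Velocity {
    double vx, vy, vz;
};

// Finite-difference velocity between two consecutive samples.
// Returns zero velocity if the timestamps do not advance, which avoids
// dividing by zero when a duplicate frame arrives.
Velocity estimate_velocity(const Sample& prev, const Sample& curr) {
    const double dt = curr.t - prev.t;
    if (dt <= 0.0) return {0.0, 0.0, 0.0};
    return {(curr.x - prev.x) / dt,
            (curr.y - prev.y) / dt,
            (curr.z - prev.z) / dt};
}
```

In practice a raw finite difference amplifies tracking noise, so a real implementation would likely smooth the result; that step is omitted here.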

Drone Flight States

The flight state system looks directly at a drone's current position and velocity to determine its physical state during flight. The possible states are identical to those discussed in the user API section above: LANDED, HOVERING, MOVING, DELETED, and UNKNOWN. With this system, commands missed due to packet loss can be identified by observing a physical state that differs from the expected one. For instance, if a drone receives a set_position command but remains in the HOVERING state, it can be determined that the position message was lost and another can be sent. The system also resolves the issue of early transition: instead of estimating when the next command may start from the previous command's duration, the platform treats the detection of a HOVERING state after a successful movement message as the signal that the drone is available for the next command. The result is more accurate and reliable execution of API commands on drones, improving the reliability and usability of the platform.
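A minimal sketch of how a state could be derived from position and velocity is given below. The thresholds, the `tracked` flag, and the function name are assumptions made for illustration; the page does not give the platform's actual rules. DELETED is not derived here because it reflects removal from the server rather than a physical condition.

```cpp
#include <cmath>

enum class FlightState { LANDED, HOVERING, MOVING, DELETED, UNKNOWN };

// Hypothetical thresholds, chosen only for illustration.
constexpr double kLandedHeightM = 0.05;   // below this height, treat as on the ground
constexpr double kMovingSpeedMps = 0.10;  // above this speed, treat as moving

// Classifies a drone from its height above the floor and its velocity.
// `tracked` is false when no valid motion capture data is available.
FlightState classify(bool tracked, double height_m,
                     double vx, double vy, double vz) {
    if (!tracked) return FlightState::UNKNOWN;
    const double speed = std::sqrt(vx * vx + vy * vy + vz * vz);
    if (speed > kMovingSpeedMps) return FlightState::MOVING;
    if (height_m < kLandedHeightM) return FlightState::LANDED;
    return FlightState::HOVERING;
}
```

Under these assumptions, a drone that still classifies as HOVERING shortly after a position command was sent is a candidate for a lost message, which is the check described above.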

User Feedback and Drone Visualisations

Live View GUI

Compressed

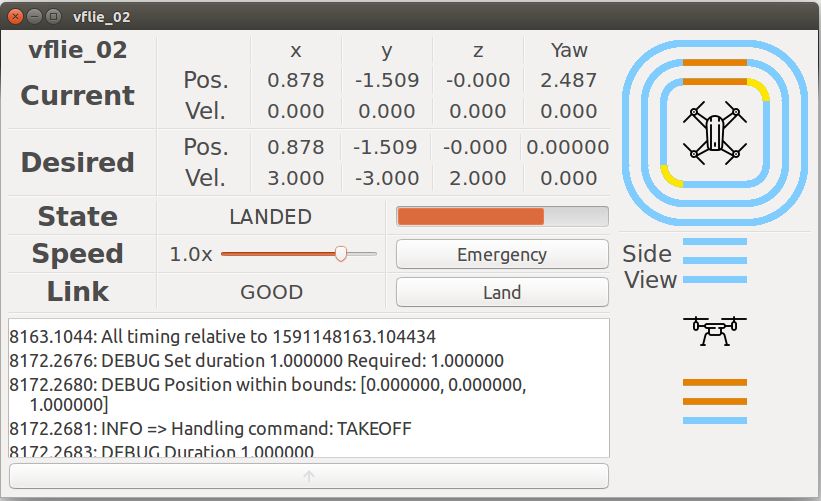

The live window of each drone automatically updates according to the set update rate (currently 2 Hz by default). As this is only for the user's benefit, it is not necessary to run these interfaces at the same operating frequency as other critical components. The GUI rounds each numerical value to increase readability and allow the user to quickly scan the appropriate values. Towards the bottom half of the interface, other relevant readings and controls are included: state, speed, link condition, battery level, emergency override, and land override. The state displayed is consistent with that accessible through the user API and can take one of five values. The speed slider applies a speed multiplier to the specified drone; this value is only adjustable when the drone is in the 'LANDED' state. The user is able to adjust the multiplier from as low as 0.2 (20%) to as high as 2.0 (200%). The link condition variable communicates whether there are any communication breakdowns for the given drone. A break at any point in the communication flow will trigger this condition to change. The battery progress bar monitors the battery level of the given drone if that value is available. The final two controls allow the user to override the platform when they notice something it has not, triggering either an emergency request or a land request.

Using the live view application program, the user is able to launch the live view GUI for each rigidbody on the drone server. These windows spawn in a logical fashion and are spaced to maximise the number of windows that can be observed at any one time. Once overlap is inevitable, the windows are indented to allow the user to easily select lower nested windows; an example of this can be seen below. Due to the lower operating frequency and the fact that only minor graphics are altered each iteration, many live view windows can remain open throughout platform operation.

Expanded

The user is able to set an option for the live view window to launch in expanded mode, or to expand it manually by selecting the button along the bottom of the basic live view window. The expanded mode can be seen below and adds two key features to the compressed window: drone-specific logging output and safeguarding obstacle indicators.

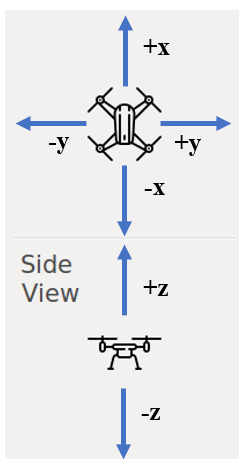

The default colour of light blue indicates there is no obstacle in that vicinity. A yellow bar indicates that an obstacle exists in that direction but is not within the influence distance of the collision avoidance algorithm. Orange indicates an obstacle is within the influence distance, prompting an increase in intensity (two bars). Red indicates an obstacle is within the drone's restricted distance and is shown with three bars. These top and side view real-time diagrams provide an accurate visualisation of all obstacle influences on each drone. The dynamic diagrams use a number of drone dimension parameters, provided through ROS parameters, to decide in which region an obstacle influences the given drone. The drone's orientation in the diagram is static and all obstacle influences are evaluated relative to the drone. The coordinate system adopted in the safeguarding visual feedback diagrams reflects the coordinate system of the physical Optitrack environment. In the top view diagram, the drone faces the positive x direction and the left side of the drone is in the negative y region. In the side view diagram, above the drone is positive z, whilst below the drone is negative z. A diagram has been provided below for clarity.
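The colour scheme above amounts to a small threshold lookup, sketched here. The function and type names are hypothetical, the distances would come from the drone's ROS parameters, and the bar counts for light blue (zero) and yellow (one) are inferred from the description rather than stated.

```cpp
#include <cassert>
#include <string>

// Hypothetical description of one indicator segment in the diagram.
struct Indicator {
    std::string colour;
    int bars;
};

// Picks the indicator for one direction around the drone.
// obstacle_present: whether any obstacle lies in this direction.
// distance_m: distance to the nearest obstacle in this direction.
Indicator indicator_for(bool obstacle_present, double distance_m,
                        double influence_m, double restricted_m) {
    assert(restricted_m <= influence_m);
    if (!obstacle_present) return {"light blue", 0};       // nothing in this direction
    if (distance_m <= restricted_m) return {"red", 3};     // inside the restricted distance
    if (distance_m <= influence_m) return {"orange", 2};   // inside the influence distance
    return {"yellow", 1};                                  // present but not yet influencing
}
```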

If the expanded option is selected as default, each live view window will be spaced according to the expanded size of the window, as can be seen below. Each live view window can be expanded and compressed as the user requires.

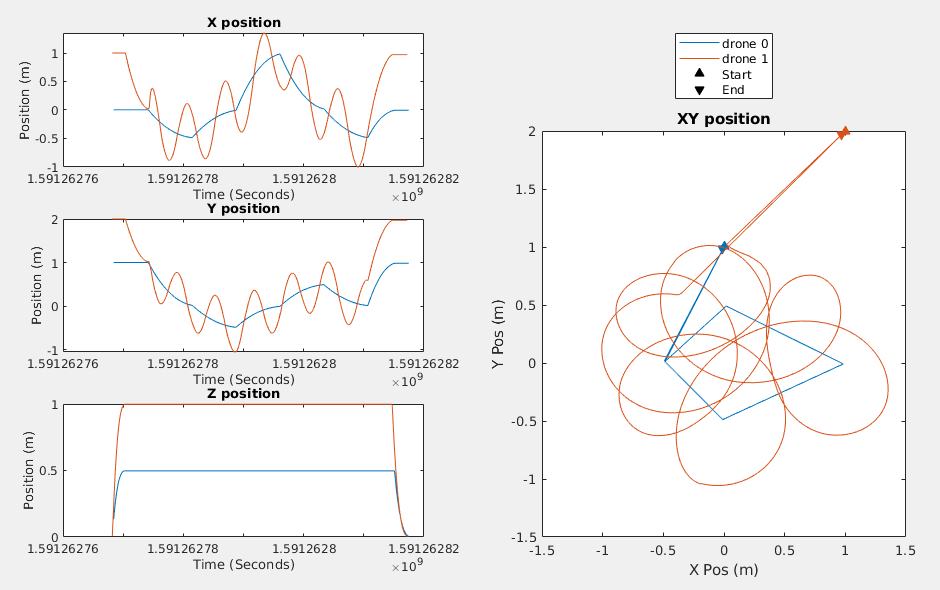

Post-flight Sessions Folder

In addition to real-time feedback, users need a way to understand what went wrong in a flight or why a given drone did not arrive at its goal position. This led to the development of a session folder, which captures all the flight details of each drone up until a server shutdown is called and the session is ended. When the multi-drone platform is shut down, the user is able to review the session through the automatic generation of a summary plot. The summary plot contains generic information regarding the position of each drone over the course of the session and the distance to the closest obstacle. This plot provides useful feedback to the user in case something unexpected occurred or the user was not able to observe an event in real time. The session folder contains the log files of each drone, the MATLAB data used to generate all information on the summary plot, and a .fig file for the summary plot in case the user would like to analyse the data further. The sessions directory is generated locally in the root folder of the multi-drone platform package and each session contains the files seen below.

The summary plot appears as follows:

Visualisation

All drones can be visualised through rviz within ROS. Here is a visualisation example of some vflie drone objects.

Safeguarding

The safeguarding module provides a number of features to reduce the hazards involved in multi-drone physical testing. These include the following:

- Safe Shutdown procedure - The platform can be shut down safely by adjusting the ROS parameter mdp_should_shut_down. This procedure will land all drones prior to a platform shutdown.

- Emergency Shutdown procedure - The platform can be shut down immediately through the relevant ROS service. Any drones in the air will fall, so this procedure should only be used in absolute emergencies.

- Safety timeouts - Provide individual drone safety when no command is queued. The drone first enters the stage 1 timeout, which is a hover at the current position. If no command is queued in the next 20 seconds, the drone enters the stage 2 timeout and lands.

- Smart guards for API Commands - Each user API request is checked to see whether the drone can physically complete the command, considering physical environment limits and individual drone velocity limitations. If it cannot, the position or velocity and the duration are adjusted accordingly.

- Collision avoidance - The platform provides collision avoidance through the implemented potential fields method.

- Reliable motion tracking - The platform aims to provide reliable motion tracking through the use of a modified iterative closest point implementation to realise rigidbodies.
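For readers unfamiliar with the potential fields method named in the list above, the textbook form combines an attractive term towards the goal with a repulsive term that acts only inside an influence distance. The sketch below shows that classic formulation under assumed gains. It is not the platform's implementation, which the page says includes adjustments for known shortcomings, and all names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    double x, y, z;
};

Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 v) { return {s * v.x, s * v.y, s * v.z}; }
double length(Vec3 v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

// Attractive term: pulls the drone towards the goal in proportion to the offset.
Vec3 attractive(Vec3 pos, Vec3 goal, double gain) {
    return gain * (goal - pos);
}

// Repulsive term: zero at or beyond the influence distance, growing rapidly
// as the drone approaches the obstacle, and directed away from the obstacle.
Vec3 repulsive(Vec3 pos, Vec3 obstacle, double gain, double influence_m) {
    assert(influence_m > 0.0);
    const Vec3 away = pos - obstacle;
    const double d = length(away);
    if (d >= influence_m || d <= 0.0) return {0.0, 0.0, 0.0};
    const double magnitude = gain * (1.0 / d - 1.0 / influence_m) / (d * d);
    return (magnitude / d) * away;  // scale the unit vector away / d
}
```

Summing the attractive term with the repulsive terms from every nearby obstacle gives the direction the drone is steered in. A plain sum of this kind can stall in local minima, which is one of the commonly cited shortcomings of the method.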

Results

Included below are some platform demonstrations providing evidence of the various features described above.

Simple Collision

The summary plot below depicts the potential collision between two drones with drone 0 attempting to navigate to (1, 1, 0.5) and drone 1 attempting to navigate to (1, -1, 0.5).

The summary plot below depicts the potential collisions faced by a drone navigating between a number of static obstacles.

The summary plot below shows the interactive nature of the user API. The program navigates drone 0 in a diamond formation using waypoint control. Drone 1 then tracks drone 0's position in a circular motion 50cm above it. This demonstrates the manner in which the user API can be used for both drone feedback and drone action methods.

The plot below summarises a complex dynamic potential collision between three drones. As can be seen, the collision avoidance module ensures the drones do not collide, as supported by the distance to closest obstacle graph.

Conclusion

Overall, the platform provides a generalised and simple approach to physical testing with significant reductions in preparation time, increased user clarity through the feedback suite, and advanced safety measures to reduce the hazards associated with the physical environment.