Projects:2020s1-1410 Speech Enhancement for Automatic Speech Recognition

This project is sponsored by DST Group

Speech recognition is becoming increasingly widely used, but the audio fed to these systems is rarely clean. A number of techniques [1] [2] have been developed to reduce background noise in speech clips, using both deep neural networks and more traditional filters.

The overall objective of this project is to compare a number of speech enhancement techniques in a fair environment, and to also compare the results of each technique after its output is fed through an automatic speech recogniser.

Project team

Project students

- Patrick Gregory

- Zachary Knopoff

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Advisors

- Ms. Emily Gao (UniSA)

- Mr. Paul Jager (DST Group)

Introduction

This project follows from work done previously by University of Adelaide students Jordan Parker, Shalin Shah, and Nha Nam (Harry) Nguyen as a summer scholarship project.

Background

Machine Learning

Deep Neural Networks

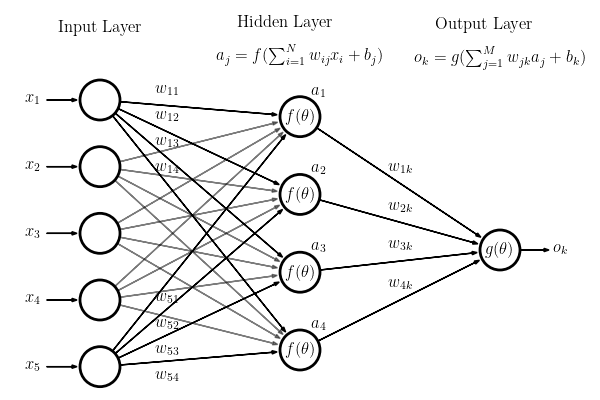

A neural network is a type of algorithm loosely modelled on the function of the brain. It consists of layers of “neurons”, each of which holds a numeric value. The values of neurons in the first layer, called the “input layer”, are determined by the input to the network. The value of each neuron in a later layer is determined by calculating a weighted sum of the values of neurons in the previous layer, adding a bias (which can help bring the value into an appropriate range), and then passing the result through a non-linear function called an “activation function”. This non-linearity is what separates a neural network from a simple system of linear equations. The accompanying figure shows a small neural network and how its values are calculated. The final layer of the network is called the “output layer”. Layers between the input layer and the output layer are called “hidden layers”. A Deep Neural Network (DNN) is any neural network which has more than one hidden layer.
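The layer-by-layer calculation described above can be sketched in a few lines of NumPy. This is only an illustrative example, not the network used in the project: the layer sizes, the weights and biases, and the choice of ReLU as the activation function are all assumptions made for the sake of a small worked case.

```python
import numpy as np

def relu(x):
    """A common activation function: negative values become zero."""
    return np.maximum(0.0, x)

def forward(x, layers):
    """Propagate an input vector through a list of (weights, bias) layers."""
    values = x
    for weights, bias in layers:
        # Weighted sum of the previous layer's values, plus a bias,
        # passed through the non-linear activation function.
        values = relu(weights @ values + bias)
    return values

# Illustrative (assumed) weights: 2 inputs -> 2 hidden neurons -> 1 output.
layers = [
    (np.array([[0.5, -1.0], [1.0, 1.0]]), np.array([0.0, -1.0])),
    (np.array([[1.0, 0.5]]), np.array([0.5])),
]
x = np.array([1.0, 2.0])
output = forward(x, layers)
print(output)
```

With these numbers the hidden layer evaluates to `[0.0, 2.0]`, because the first neuron's weighted sum is negative and ReLU clips it to zero, and the single output neuron evaluates to `1.5`.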

Training

As described above, the value of each neuron is calculated by taking a weighted sum of values from the previous layer plus a bias. Determining the optimal values of these weights and biases lies at the heart of machine learning, and is done in the training process. A single iteration of training involves feeding the network an input (with a value assigned to each input neuron) and observing how close the output is to some desired output. The comparison is made using a “loss function”, which is small when the output is close to the desired output and large when it is far from it. The weights and biases of all neurons are then adjusted to reduce the loss for that particular input. The training process typically involves a large number of iterations over a large set of inputs called the “training dataset”. The dataset must contain example inputs paired with their desired outputs, so that the loss function has something to compare the network's output against. One pass over the entire training dataset is called an “epoch”. The training dataset is usually very large, so the number of computations per epoch is also very large, and training therefore requires a lot of time and computing power.
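The training loop described above can be illustrated with the smallest possible "network": a single neuron with one weight and one bias. The sketch below assumes a squared-error loss, plain gradient descent, and a toy dataset generated from y = 2x + 1; none of these choices come from the project itself, and the function name `train` and the learning rate are placeholders.

```python
import numpy as np

def train(inputs, targets, epochs=200, learning_rate=0.05):
    """Fit one weight and one bias by per-example gradient descent."""
    weight, bias = 0.0, 0.0
    loss_history = []
    for _ in range(epochs):              # one epoch = one pass over the dataset
        epoch_loss = 0.0
        for x, desired in zip(inputs, targets):
            output = weight * x + bias   # feed the input through the "network"
            error = output - desired
            epoch_loss += error ** 2     # squared-error loss for this example
            # Move the weight and bias against the gradient of the loss.
            weight -= learning_rate * 2 * error * x
            bias -= learning_rate * 2 * error
        loss_history.append(epoch_loss / len(inputs))
    return weight, bias, loss_history

# Toy training dataset: inputs paired with their desired outputs.
inputs = np.array([0.0, 1.0, 2.0, 3.0])
targets = 2.0 * inputs + 1.0

weight, bias, loss_history = train(inputs, targets)
print(weight, bias, loss_history[0], loss_history[-1])
```

The loss recorded for the last epoch is far smaller than for the first, and the learned weight and bias approach the values used to generate the data. A real DNN follows the same loop, but with many more parameters and with gradients computed by back-propagation.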

Convolutional Neural Networks

A Convolutional Neural Network (CNN) is a variety of DNN which predominantly contains convolutional layers rather than fully-connected layers. A convolutional layer is a layer where each neuron is only connected to a fraction of the neurons in the previous layer. These connections are defined by a convolution of the previous layer with a kernel. The values in this kernel act as the weights of the CNN, and are adjusted during the training process through back-propagation. CNNs are preferred for systems with large, regular inputs (especially image and audio), since a CNN requires only a small fraction of the weights of a fully-connected DNN, dramatically reducing computational cost. They perform especially well on image and audio because a sample of image or audio is typically very strongly related to its neighbouring samples and has little relation to distant samples.
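To make the weight saving concrete, the sketch below applies a three-value kernel to a five-sample input and compares the number of weights with a fully-connected layer of the same input and output size. The signal, kernel values and the helper name `conv1d_valid` are assumptions for illustration. As in most deep learning libraries, the kernel is slid without being flipped, which makes no difference for the symmetric kernel used here.

```python
import numpy as np

def conv1d_valid(signal, kernel):
    """Slide the kernel along the signal; each output sees only nearby inputs."""
    width = len(kernel)
    n_outputs = len(signal) - width + 1
    return np.array([
        np.dot(signal[i:i + width], kernel)   # the same weights at every position
        for i in range(n_outputs)
    ])

signal = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
kernel = np.array([0.25, 0.5, 0.25])          # assumed example kernel (smoothing)

conv_output = conv1d_valid(signal, kernel)

# Weight counts for mapping the same 5 inputs to the same 3 outputs.
conv_weights = len(kernel)                        # shared across all positions
dense_weights = len(signal) * len(conv_output)    # one weight per connection
print(conv_output, conv_weights, dense_weights)
```

Here the convolutional layer needs 3 weights where the fully-connected layer needs 15. The gap widens quickly with input size, since the kernel size stays fixed while the fully-connected weight count grows with the product of the layer sizes.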

Method

Objectives

Obtain a dataset

Each speech enhancement method has been demonstrated by its creator(s) using a different audio dataset. Despite this, the general approach to building a dataset is very similar:

- Collect a large amount of "noise" audio

- Collect a large amount of clean speech audio - if a transcription exists too, this is called a corpus

- Combine the two datasets to synthesise noisy speech audio

The goal for this objective is to develop a means of generating a very large (approximately 1000 hours) dataset of mixed audio while keeping a record of the original clean and noise files, as some methods use these during training. This dataset and its generation methodology can then be used by all methods for a fair comparison.
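One common way to combine clean speech and noise is to scale the noise so that the mixture has a chosen signal-to-noise ratio (SNR). The sketch below shows that idea under stated assumptions: it is not the project's actual generation pipeline, the sine wave and random noise merely stand in for real recordings, and the function name `mix_at_snr`, the 16 kHz sample rate, the 5 dB target and the file names in `record` are all hypothetical.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db):
    """Add noise to clean speech so the mixture has the requested SNR (in dB)."""
    # Repeat or truncate the noise so it matches the clean clip's length.
    noise = np.resize(noise, clean.shape)
    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)   # assumed non-zero (noise is not silent)
    # Scale so that clean_power / scaled_noise_power equals 10^(snr_db / 10).
    scale = np.sqrt(clean_power / (noise_power * 10 ** (snr_db / 10)))
    scaled_noise = scale * noise
    return clean + scaled_noise, scaled_noise

rng = np.random.default_rng(0)
sample_rate = 16000                          # assumed sample rate in Hz
t = np.arange(sample_rate) / sample_rate
clean = np.sin(2 * np.pi * 220 * t)          # stand-in for a clean speech clip
noise = rng.normal(size=sample_rate // 2)    # stand-in for a shorter noise clip

mixed, scaled_noise = mix_at_snr(clean, noise, snr_db=5.0)

# Keep a record of the sources so methods that need them can find them later.
# The file names here are hypothetical placeholders.
record = {"clean_file": "clean_0001.wav", "noise_file": "noise_0001.wav", "snr_db": 5.0}

measured_snr_db = 10 * np.log10(np.mean(clean ** 2) / np.mean(scaled_noise ** 2))
print(measured_snr_db)
```

Returning the scaled noise alongside the mixture, and storing the source file names with each mixture, keeps the clean and noise components recoverable for methods that use them during training.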

Train and optimise

A number of promising techniques are selected and their models are trained on the dataset from the previous objective. For non-learning methods, the algorithms may be tuned or altered slightly to produce the best results.