Projects:2020s1-1410 Speech Enhancement for Automatic Speech Recognition

This project is sponsored by DST Group

Speech recognition is becoming more and more widely used, though the input audio to these systems is rarely clean. Performance of an automatic speech recognition (ASR) system tends to suffer in the presence of noise and distortion. In the past few years, a number of machine learning techniques [1] [2] have been developed to reduce the background noise of speech clips.

The objective of this project is to determine the suitability of machine learning based speech enhancement techniques as a pre-processing step for ASR.

Project team

Project students

- Patrick Gregory

- Zachary Knopoff

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Advisors

- Ms. Emily Gao (UniSA)

- Mr. Paul Jager (DST Group)

Introduction

This project follows from work done previously by University of Adelaide students Jordan Parker, Shalin Shah, and Nha Nam (Harry) Nguyen as a summer scholarship project.

Background

Machine Learning

Deep Neural Networks

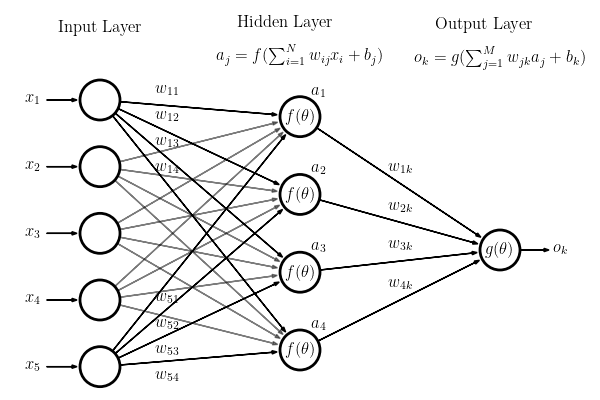

A neural network is a type of algorithm loosely modelled on the function of the brain. A neural network consists of layers of “neurons”, each of which holds a numeric value. The values of neurons in the first layer are determined by the input to the network, and this layer is called the “input layer”. The value of each neuron in subsequent layers is determined by calculating a weighted sum of the values of neurons in the previous layer, adding a bias (which can help bring the value into an appropriate range), and then passing this result through a non-linear function called an “activation function”. This non-linearity is what separates a neural network from a simple system of linear equations. The figure above shows a small neural network and how its values are calculated. The final layer of the network is called the “output layer”. Layers between the input layer and the output layer are called “hidden layers”. A Deep Neural Network (DNN) is any neural network which has more than one hidden layer.
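As a minimal sketch of the calculation described above, the following Python computes the values of one layer as weighted sums plus biases, passed through an activation function. The sigmoid activation, the function names, and all weights and inputs are illustrative assumptions and are not taken from this project.

```python
import math


def sigmoid(z):
    """A common non-linear activation function (chosen here as an example)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation=sigmoid):
    """Compute the values of one fully-connected layer.

    weights[j][i] is the weight from input neuron i to output neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the previous layer's values, plus the bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        # The activation function introduces the non-linearity.
        outputs.append(activation(z))
    return outputs


# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output.
x = [0.5, -1.0]
hidden = dense_layer(x, [[1.0, 2.0], [-1.0, 0.5], [0.0, 0.0]], [0.0, 1.0, 0.0])
output = dense_layer(hidden, [[1.0, -1.0, 0.5]], [0.1])
print(hidden, output)
```

Stacking further calls to `dense_layer` between the input and output would add more hidden layers.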

Training

The value of each neuron in a neural network is calculated by taking a weighted sum of values from the previous layer and adding a bias. Determining the optimal values of these weights and biases lies at the heart of machine learning, and is done in the training process. A single iteration of training involves feeding the network an input (with a value assigned to each input neuron) and observing how close the output is to some desired output. The comparison is made using a “loss function”, which is small when the output is close to the desired output and large when it is far from it. The weights and biases of the network are then adjusted to reduce the loss for that particular input. The training process typically involves a large number of iterations over a large set of inputs called the “training dataset”. The dataset must contain example inputs paired with their desired outputs, so that the loss function has something to compare the network output against. One pass over the entire training dataset is called an “epoch”. Because the training dataset is usually very large, the number of computations per epoch is also very large, so training requires a lot of time and computing power.
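The training loop described above can be sketched for the simplest possible case: a single neuron with one weight, one bias, and no activation function, trained by gradient descent on a squared-error loss. The dataset, learning rate, and number of epochs are assumptions chosen for illustration. Real networks apply the same idea to millions of weights.

```python
def train(dataset, epochs=200, learning_rate=0.05):
    """Fit prediction = w * x + b to (input, desired output) pairs."""
    w, b = 0.0, 0.0
    for _ in range(epochs):            # one epoch = one pass over the dataset
        for x, target in dataset:      # one iteration = one training example
            prediction = w * x + b
            error = prediction - target
            # Loss for this example is error ** 2, so its gradients are
            # d(loss)/dw = 2 * error * x and d(loss)/db = 2 * error.
            w -= learning_rate * 2 * error * x
            b -= learning_rate * 2 * error
    return w, b


# Hypothetical training dataset generated from target = 2 * x + 1.
dataset = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(dataset)
print(f"w = {w:.3f}, b = {b:.3f}")
```

With this noise-free dataset the learned weight and bias approach 2 and 1 respectively.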

Convolutional Neural Networks

A Convolutional Neural Network (CNN) is a variety of DNN which predominantly contains convolutional layers rather than fully-connected layers. A convolutional layer is a layer where each neuron is only connected to a fraction of the neurons in the previous layer. These connections are defined by a convolution of the previous layer with a kernel. The values in this kernel act as the weights of the convolutional layer, and are adjusted during the training process through back-propagation. CNNs are preferred for systems with large, regular inputs (especially image and audio), since a CNN requires only a small fraction of the weights of a fully-connected DNN, dramatically reducing computational costs. They perform especially well on image and audio because a sample of image or audio is typically very strongly related to its neighbouring samples and has little relation to distant samples.
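To illustrate the saving in weights, here is a minimal sketch of a one-dimensional convolutional layer without bias or activation. As is common in deep learning, it slides the kernel without flipping it. The signal and kernel values are arbitrary assumptions.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal; each output sees only len(kernel) inputs."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


signal = [1.0, 2.0, 3.0, 4.0, 5.0]
kernel = [0.5, 0.0, -0.5]        # the only trainable weights of this layer
out = conv1d(signal, kernel)
print(out)                        # [-1.0, -1.0, -1.0]

# Weight count: the kernel is shared across all positions, whereas a
# fully-connected layer needs one weight per (input, output) pair.
conv_weights = len(kernel)                  # 3
dense_weights = len(signal) * len(out)      # 5 * 3 = 15
```

The gap between the two counts grows with the input length, which is why the saving is so large for audio waveforms with many thousands of samples.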

Metric Scores

Word Error Rate

The Word Error Rate (WER) is a commonly used metric for analysing the performance of an ASR system. Its use in evaluating speech enhancement systems is novel for this project.

The WER score is a means of comparing a hypothesis transcript (usually the output of an ASR system) to a reference transcript (the actual words spoken). The score is the number of word errors in the hypothesis expressed as a percentage of the number of words in the reference (i.e. a lower WER score indicates better performance).

The WER is calculated as follows:

$$WER = \frac{S + I + D}{N}$$

where S is the number of words substituted, I is the number of words inserted, D is the number of words deleted, and N is the total number of words in the reference transcript.

Since the hypothesis can contain arbitrarily many inserted words relative to the reference, there is no upper limit on WER. However, for a reasonable speech sample and ASR system, we expect WER to fall between 0 and 100%.
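The total number of substitutions, insertions and deletions is usually found as the word-level edit (Levenshtein) distance between the two transcripts. The sketch below is one way to compute it, assuming whitespace-separated words and no text normalisation. The function name and example sentences are hypothetical and this is not the scoring tool used in the project.

```python
def word_error_rate(reference, hypothesis):
    """Return WER as a fraction: (S + I + D) / N, with N words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # d[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words.
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i                      # i deletions
    for j in range(len(hyp) + 1):
        d[0][j] = j                      # j insertions
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # match or substitution
            )
    return d[len(ref)][len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"{wer:.1%}")   # 33.3%

# Insertions can push WER above 100%.
wer_insertions = word_error_rate("hello", "hello there big world")   # 3.0
```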

Perceptual Evaluation of Speech Quality

The Perceptual Evaluation of Speech Quality (PESQ) is a score originally developed by Rix et al. [3] as a metric for evaluating the quality of speech after degradation caused by telecommunications systems. It is now a common metric in the speech enhancement community, since the literature shows that the score consistently reflects human opinion of improvements in speech quality.

PESQ is a real number between 1 and 4.5, with a higher PESQ indicating better quality of speech.

Short-Time Objective Intelligibility

The Short-Time Objective Intelligibility (STOI) index was developed and published in 2011 by Taal et al. [4] to reduce the time and cost involved in using human listening experiments to determine speech intelligibility. The speech enhancement community has since adopted it as a common metric.

STOI is a real number between 0 and 1, with a higher STOI index indicating more intelligible speech.

Segmental Signal-to-Noise Ratio

The Segmental Signal-to-Noise Ratio (SSNR) is a modification of the standard definition of Signal-to-Noise Ratio (SNR). It is calculated by splitting an audio clip into small (~20 ms) segments, taking the SNR of each segment, and taking the mean of those SNRs. Since SNR has no upper or lower bound, the SNR of particularly noisy or quiet segments can weigh heavily on the mean, so the SNR of each individual segment is limited to an upper bound of 35 dB and a lower bound of -10 dB.

The SSNR can be calculated as follows:

$$SNR_m = 10 \log_{10} \frac{\sum\limits_{n=mN}^{(m+1)N-1} x_r[n]^2}{\sum\limits_{n=mN}^{(m+1)N-1} (x_d[n]-x_r[n])^2}$$

$$SSNR = \frac{1}{M} \sum_{m=0}^{M-1} \begin{cases} -10 & SNR_m < -10\\ SNR_m & -10 \leqslant SNR_m \leqslant 35\\ 35 & SNR_m > 35 \end{cases}$$

where $x_r$ is the reference (desired) signal, $x_d$ is the degraded (noisy) signal, N is the number of samples in each segment, and M is the number of segments.

SSNR is a number (in dB) between -10 and 35, with a higher SSNR indicating less noise.
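The SSNR definition above can be sketched in a few lines of Python. The function name is hypothetical, and two choices are assumptions made here rather than part of the definition: any incomplete final segment is discarded, and a segment with zero noise energy (or zero signal energy) is assigned the upper (or lower) bound directly, since its SNR is otherwise undefined.

```python
import math


def segmental_snr(reference, degraded, segment_length, lower=-10.0, upper=35.0):
    """Mean of per-segment SNRs (in dB), each clamped to [lower, upper]."""
    num_segments = len(reference) // segment_length
    if num_segments == 0:
        raise ValueError("signal is shorter than one segment")

    total = 0.0
    for m in range(num_segments):
        start = m * segment_length
        stop = start + segment_length
        ref_seg = reference[start:stop]
        deg_seg = degraded[start:stop]
        signal_energy = sum(r * r for r in ref_seg)
        noise_energy = sum((d - r) ** 2 for r, d in zip(ref_seg, deg_seg))
        if noise_energy == 0.0:
            snr = upper      # assumption: a noise-free segment takes the upper bound
        elif signal_energy == 0.0:
            snr = lower      # assumption: a silent reference takes the lower bound
        else:
            snr = 10.0 * math.log10(signal_energy / noise_energy)
        total += min(max(snr, lower), upper)
    return total / num_segments


# Two segments of 4 samples: the first has a constant 0.1 offset (about 20 dB),
# the second is identical to the reference (clamped to 35 dB).
reference = [1.0, -1.0, 1.0, -1.0] * 2
degraded = [1.1, -0.9, 1.1, -0.9, 1.0, -1.0, 1.0, -1.0]
ssnr = segmental_snr(reference, degraded, segment_length=4)
print(f"{ssnr:.2f} dB")   # approximately 27.5 dB
```

At a 16 kHz sample rate, a 20 ms segment would correspond to `segment_length=320`.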

Method

Data Preparation

Each speech enhancement method in the literature was demonstrated by its creator(s) on a different audio dataset. Despite this, the general approach to building a dataset is very similar:

- Collect a large amount of "noise" audio

- Collect a large amount of clean speech audio (when transcriptions are included as well, this is called a corpus)

- Combine the two datasets to synthesise noisy speech audio

The goal for this subsystem is to provide a means of creating a very large (approximately 1000 hours) dataset of mixed audio, while maintaining a record of the original clean and noise files, since some methods use these during training. This dataset and its generation methodology can then be used by all methods for a fair comparison.
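One common way to combine the two datasets is to scale a noise clip so that the mixture has a chosen SNR and then add it to the clean speech. The sketch below illustrates that idea on plain lists of samples. The function name, the cropping of the noise to the speech length, and the example values are assumptions and do not describe the exact procedure used in this project.

```python
import math


def mix_at_snr(clean, noise, snr_db):
    """Add noise to clean speech, scaled so that the mixture has the given SNR (dB)."""
    if len(noise) < len(clean):
        raise ValueError("noise clip must be at least as long as the clean clip")
    noise = noise[:len(clean)]          # assumption: crop noise to the speech length

    clean_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum(x * x for x in noise) / len(noise)
    if clean_power == 0.0 or noise_power == 0.0:
        raise ValueError("clean and noise clips must both be non-silent")

    # SNR (dB) = 10 * log10(clean_power / scaled_noise_power)
    target_noise_power = clean_power / (10.0 ** (snr_db / 10.0))
    gain = math.sqrt(target_noise_power / noise_power)
    return [c + gain * n for c, n in zip(clean, noise)]


# Toy example: mix at 10 dB SNR, keeping the clean and noise inputs for reference.
clean = [1.0, -1.0, 1.0, -1.0]
noise = [0.5, 0.5, -0.5, -0.5, 0.3]
noisy = mix_at_snr(clean, noise, snr_db=10.0)
```

Keeping `clean` and `noise` alongside `noisy` mirrors the requirement above to retain a record of the original files.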

Speech Enhancement

Two promising techniques were selected – SEGAN+ and Wave-U-Net – and their models trained on the Training dataset produced in the Data Preparation subsystem.

The Test dataset was then enhanced by the trained SEGAN+ and Wave-U-Net models. A Wiener filter was also used to enhance the Test dataset, serving as a traditional baseline speech enhancement method against which the performance of the neural networks could be compared.

Performance Evaluation

The enhanced Test datasets produced by each speech enhancement method mentioned above were then evaluated against the four selected metrics. The original clean and noisy Test datasets were used as the reference material for metric comparison.

Results

Conclusion

Wave-U-Net was the only method able to improve performance in WER. The improvement was only marginal, but the fact that there was any improvement answers the research question: yes, machine learning based speech enhancement techniques are suitable as a pre-processing step for ASR.

As speech enhancement methods, both SEGAN+ and Wave-U-Net were able to outperform the baseline Wiener filter, and both networks improved PESQ, STOI and SSNR. However, Wave-U-Net was the clear standout across all metrics.

References

- [1] S. Pascual, A. Bonafonte, and J. Serrà, “SEGAN: Speech enhancement generative adversarial network,” arXiv preprint arXiv:1703.09452, 2017.

- [2] D. Stoller, S. Ewert, and S. Dixon, “Wave-U-Net: A multi-scale neural network for end-to-end audio source separation,” in 19th International Society for Music Information Retrieval Conference (ISMIR), 2018.

- [3] A. W. Rix, J. G. Beerends, M. P. Hollier, and A. P. Hekstra, “Perceptual evaluation of speech quality (PESQ): a new method for speech quality assessment of telephone networks and codecs,” in 2001 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No.01CH37221), vol. 2, 2001, pp. 749–752.

- [4] C. H. Taal, R. C. Hendriks, R. Heusdens, and J. Jensen, “An algorithm for intelligibility prediction of time–frequency weighted noisy speech,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 19, no. 7, pp. 2125–2136, Sep. 2011.