Projects:2021s1-13332 Artificial General Intelligence in fully autonomous systems

Project team

Project students

- Chaoyong Huang

- Jingke Li

- Ruslan Mugalimov

- Sze Yee Lim

Supervisors

- Prof. Peng Shi

- Prof. Cheng-Chew Lim

Advisors

- Dr. Xin Yuan

- Yang Fei

- Zhi Lian

Introduction

Artificial Intelligence (AI) has driven many innovations across industries in recent years. According to Elon Musk’s interview with the New York Times, within five years we will have machines vastly smarter than humans in narrow functions and applications, such as recognition and prediction. However, this is only the first stage of “the AI revolution”. Smarter machines will need to achieve human-level intelligence and recursive self-improvement. This category of AI is called Artificial General Intelligence (AGI), which extends machine intelligence to a broader range of tasks. AGI could be implemented in autonomous systems to make machines think, react and perform as humans do.

Motivation

The field of AGI has seen many recent developments. However, there remains a gap between Artificial Narrow Intelligence (ANI) and human intelligence because of ANI’s limited performance and functions. This project explores the use of AGI in an autonomous system and investigates the collaboration of two agents under AGI and under conventional autonomous algorithms.

Objectives

This project aims to apply a rudimentary form of AGI in a fully autonomous system. In this project, AGI will be demonstrated by reproducing basic human behaviours that are understandable and explainable to humans. This will be achieved by designing a heterogeneous, multi-agent maze-solving system in which an Unmanned Aerial Vehicle (UAV) and an Unmanned Ground Vehicle (UGV) cooperate. A non-AGI system will also be developed so that its performance can be evaluated against that of the AGI system. Both the AGI and non-AGI systems will be developed on virtual and physical platforms to facilitate testing and demonstration of the concepts developed by the team.

Literature Review

AGI Relevant Literature

ANI Relevant Literature

Background

In the days when technology was far less advanced, hardly anyone imagined that machines would one day be capable of matching or even surpassing human intelligence. In the 21st century, however, even the most ambitious technological dreams have at least a slight chance of turning into reality.

We are currently in a later stage of AI development, with many researchers and technology companies starting to venture into the next field of AI: AGI, also known as strong AI. According to Kaplan and Haenlein in [1], AGI is the ability to reason, plan and solve problems autonomously for tasks that a system was never designed for. As of today, AGI has not been realised; however, according to a survey in [2], AI experts predict its debut by the year 2060.

Artificial intelligence

We have heard the term AI many times, but what actually is AI? Intelligence takes many forms: human intelligence, computer intelligence, animal intelligence, group intelligence, and alien intelligence. The working assumption of AI researchers is that the “intelligence” exhibited by human beings is a special form of a universal phenomenon, and that another form of it can be constructed or exhibited by a computer; this is AI. Most scientists classify AI into three types: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI) and Artificial Super Intelligence (ASI).

Artificial Narrow Intelligence, or weak AI, is the only type of AI that has been achieved to date. It is considered to be an agent that can perform a single specific task but nothing else. Most of the AI we hear about today belongs to this category; AlphaGo, Siri, and Google are famous examples. People generally equate AI with ANI, but this is not accurate, because AI has forms other than ANI.

Artificial General Intelligence, also called human-level AI, strong AI or universal AI, is the type of AI that can perform a variety of tasks in a variety of environments. In 2017 there were 45 known "active research and development projects" spread across 30 countries on 6 continents [*]. Many of these projects are based in major corporations and academic institutions, and their two most common goals are humanitarian and intellectualist [*]. The three largest projects are DeepMind, the Human Brain Project and OpenAI [*]. The following points are some key characteristics of general intelligence on which the AGI community broadly agrees [/]:

- AGI should have the ability to achieve a variety of goals, and carry out a variety of tasks, in a variety of different contexts and environments.

- It should be able to handle problems and situations quite different from those anticipated by its creators.

- A generally intelligent system should be good at generalizing the knowledge it’s gained, so as to transfer this knowledge from one problem or context to others.

System Design

High Level Diagram

The high-level design of the project incorporates a system with AGI and a system without AGI. The key difference between the two systems is where the Information Processing System module is located. In the system without AGI, the UAV assumes control over the UGV, directing it precisely through the maze. In the system with AGI, however, the UGV is equipped with a decision-making ability while the UAV only provides additional information as a guide. Each of these systems consists of three main modules: the Operations Control Centre (OCC), the UAV, and the UGV.

System without AGI

In this system, the UAV and UGV are designed to work together while carrying out separate roles. The system without AGI relies more heavily on the performance of the UAV. The UAV acts as the UGV’s eyes by providing a wider field of view and more information, and the UGV needs to follow the specific navigation information it receives to arrive at its destination. The UAV is equipped with image processing and information collection capabilities. The collection system uses a monocular camera to capture images during flight. The collected images are processed and converted into real-world coordinates relative to the UGV’s location to guide the UGV through the maze. The UGV complies with the information received while using its collision avoidance function to navigate towards the exit. This mimics the human behaviour of navigating to a specific destination with a mapping platform. This system is purely autonomous and has lower general intelligence than the system with AGI.

System with AGI

In comparison with the aforementioned ANI system, the AGI system comprises a custom maze-traversal algorithm. The UAV and UGV still work together to solve the maze; however, the primary goal of this system is to mimic human maze-solving behaviour. Humans are not optimal creatures, so it can be expected that this system may fall short in aspects that benefit from raw logical input and deduction. Humans are, however, capable of adapting easily to a plethora of environments and conditions. This is where the system with AGI should excel: adapting to different mazes dynamically and solving the maze through exploration without failure. In this system, rather than having the UAV assert full control over the UGV, the UAV only serves to provide the UGV with guiding information. The UAV roughly tells the UGV where the landmarks are located in the maze, and these landmarks guide the UGV towards the solution path. This is akin to how a human being might use tall buildings or road signs to navigate the streets of an unfamiliar city.

Methodology

This section covers the methodologies that were implemented to build the ANI and AGI systems. The project was initiated on a virtual platform in CoppeliaSim and gradually transitioned to a physical platform for more practical and thorough testing. The simulation code was mainly written in the Python programming language. The UAV used on the physical platform is the DJI Tello Edu Drone and the UGV is the Robomaster EP core.

Virtual Platform

UAV

Maze Image Reconstruction

Due to the limited field of view of the UAV, several images needed to be taken at different positions to cover the entire maze structure. The UAV motion control algorithm was integrated with the vision sensor to capture an image at every new set position from start to end. The images in each of the three rows are then concatenated horizontally to form three strips, which are finally concatenated vertically to form the complete maze image.
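The concatenation step can be sketched in a few lines. This is only an illustrative sketch, not the project's code: images are modelled as nested lists of pixel values, the function names are hypothetical, and it assumes that all tiles have the same size, do not overlap, and are already ordered left to right within each row.

```python
from typing import List

Image = List[List[int]]  # a grayscale image as rows of pixel values


def hconcat(tiles: List[Image]) -> Image:
    """Join tiles of equal height side by side."""
    height = len(tiles[0])
    if any(len(tile) != height for tile in tiles):
        raise ValueError("tiles in a row must share the same height")
    return [[px for tile in tiles for px in tile[r]] for r in range(height)]


def vconcat(strips: List[Image]) -> Image:
    """Stack strips of equal width on top of each other."""
    width = len(strips[0][0])
    if any(len(row) != width for strip in strips for row in strip):
        raise ValueError("strips must share the same width")
    return [list(row) for strip in strips for row in strip]


def assemble_maze(tile_grid: List[List[Image]]) -> Image:
    """tile_grid[i][j] is the tile captured at row i, column j (assumed layout)."""
    return vconcat([hconcat(row) for row in tile_grid])
```

If the tiles are captured in a back-and-forth flight pattern, every second row would need to be reversed before calling assemble_maze.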

Path Planning Algorithm

Once the maze has been captured and processed, it needs to be solved to provide a path that guides the UGV out of the maze. Before the processed maze image can be used directly, it is converted to a binary grid map, that is, a map composed of 0s and 1s in which obstacles are represented by 1. The maze-solving algorithms used are Breadth-First Search (BFS) and A*. BFS scans the maze outward from the start point, recording the distance from the start point to each position it visits. Once the endpoint is found, the algorithm traces back to the start point to recover the shortest path. A* is a best-first search algorithm: it favours moves that reduce the straight-line distance from the current position to the endpoint, and this distance is counted as a cost in the calculation. The A* algorithm therefore evaluates the movement cost first and then decides the direction of movement. As a result, A* can avoid overshooting the desired path and generate more accurate movement information. Furthermore, the configuration space of the UGV needs to be considered, which represents the map of available movement given the robot’s size and degrees of freedom. To apply the configuration space in the path planning algorithm, the obstacles in the maze can be expanded in the BFS algorithm, while in the A* algorithm the configuration space can be expressed as an additional movement cost.
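A minimal sketch of BFS on a binary grid map, with obstacle expansion standing in for the configuration space, might look as follows. The function names, the 4-connected neighbourhood and the square expansion radius (measured in grid cells) are assumptions made for illustration and are not taken from the project code.

```python
from collections import deque


def inflate(grid, radius):
    """Expand every obstacle cell (1) by `radius` cells in all directions."""
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                    for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                        out[rr][cc] = 1
    return out


def bfs_path(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # trace back to the start point
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None
```

Calling bfs_path(inflate(grid, 1), start, goal) plans on the expanded map, so the returned path keeps at least one cell of clearance from every wall.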

Landmark Detection

Template matching was used to detect landmarks in the maze where the landmarks were represented by resizable concrete blocks. An HSV colour range was defined to enable the algorithm to segment the green colour on the landmarks from the maze.

Following that, to avoid multiple detections of a single landmark, the Non-Maximum Suppression (NMS) technique was used. It works by selecting the best match out of all the overlapping bounding boxes, using the Intersection over Union (IOU) to measure overlap. The IOU is the ratio of the overlap between two boxes, such as a ground truth detection box and a prediction box, to their combined area. Expressed mathematically:

IOU(Box1, Box2) = Intersection Size(Box1, Box2) / Union Size(Box1, Box2)

The IOU is then used in the NMS technique to filter the detections, keeping only one bounding box per landmark. The method selects the prediction with the highest confidence score and suppresses all other predictions that overlap it.
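The IOU computation and the suppression loop can be sketched as below. This is an illustrative sketch only: boxes are assumed to be (x1, y1, x2, y2) tuples, and the function names and the 0.3 overlap threshold are hypothetical values rather than the ones used in the project.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.3):
    """Return indices of the boxes kept, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap an already kept, better box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept
```

For example, two strongly overlapping matches on the same landmark collapse to the higher-scoring one, while a match elsewhere in the maze is kept.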

This method has also been applied in the physical platform with some slight modifications to the ratio.

Coordinate Conversion

Coordinate conversion is needed to convert the pixel coordinates used by the UAV into real-world coordinates that the UGV can use to traverse the maze. This is one of the most essential steps in ensuring the success of both the system with AGI and the system without AGI, because without an accurate coordinate conversion the UGV risks moving towards the wrong location and, in the worst-case scenario, this may cause the UAV to crash into walls.

The final reconstructed maze was first plotted on a graph spanning from -2.5m to +2.5m on both the x and y axes. This extent was chosen because it matches the actual maze size of 5m x 5m in the virtual environment. A ratio comparison was then made using several reference points chosen from the plotted maze and the real environment.
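As a rough sketch of such a conversion, the function below maps a pixel to metres by linear scaling. It assumes that the image covers exactly the -2.5m to +2.5m extent, that the pixel origin is the top-left corner with y increasing downwards, and that no lens distortion needs correcting; the function name and parameters are hypothetical.

```python
def pixel_to_world(px, py, width, height, extent=2.5):
    """Map pixel (px, py) to world (x, y) in metres by linear scaling.

    Assumes the image spans [-extent, +extent] on both axes, with the pixel
    origin at the top-left corner and the world y axis pointing up.
    """
    x = (px / (width - 1)) * 2 * extent - extent
    y = extent - (py / (height - 1)) * 2 * extent
    return x, y
```

With a 501 x 501 pixel image, the centre pixel (250, 250) maps to the world origin and the top-left pixel maps to (-2.5, 2.5).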

UGV

Pioneer_p3dx

Pioneer_p3dx is a built-in mobile rover provided by CoppeliaSim. It has 16 ultrasonic sensors surrounding it to provide 360-degree object avoidance. A vision sensor was added for image recognition. A front view of this rover can be seen below. As it is a two-wheeled rover, its motion is simple and easy to understand. However, it is limited in its movement and self-balancing.

Sensors overshooting

In the simulation, each ultrasonic sensor can only detect objects within a certain range and outputs a value from 0 to 1. When the rover enters an open area, the sensors return spurious, extremely small values such as 2e-47. This affected the function that finds the smallest sensor reading and caused the rover to turn in the opposite direction to the one intended. To prevent this issue, a threshold was set for all the sensors: when a sensor value is lower than 0.0001, it is corrected to 1. With this fix, the rover can successfully avoid obstacles and navigate through the maze without collision.
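The correction can be illustrated with a short sketch. The 0.0001 threshold and the replacement value of 1 come from the description above, while the function names are hypothetical.

```python
def clean_readings(readings, floor=1e-4, no_detection=1.0):
    """Replace spurious near-zero readings with the 'nothing detected' value."""
    return [no_detection if value < floor else value for value in readings]


def closest_sensor(readings):
    """Index of the sensor reporting the nearest obstacle after cleaning."""
    cleaned = clean_readings(readings)
    return min(range(len(cleaned)), key=lambda i: cleaned[i])
```

Without the cleaning step, a reading such as 2e-47 would always win the minimum search and steer the rover away from empty space.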

Two-Wheel Rover

The two-wheel rover motion control was done through simple proportional control inputs to the left and right motors. The steering direction was derived from the collision avoidance sensor with the lowest reading. This value was stored in the steer variable:

steer = -1/sensor_loc[min_ind]

The left and right inputs to the respective motors follow from above:

vl = v + kp*steer, vr = v - kp*steer

Omnidirectional

The four-wheel rover motion control covered four motor inputs, rather than two, in a similar fashion to how motion control was handled for the two-wheel rover:

vlf = v + kp*steer

vrf = v - kp*steer

vlr = v + kp*steer

vrr = v - kp*steer

It is important to note that for both the two-wheel and four-wheel UGVs, the proportional gain kp was kept at a moderate level so as not to cause overshoot when corrections were needed.
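Putting the steering and motor equations together gives the sketch below. The names steer, sensor_loc, min_ind, v and kp follow the snippets above; the function names are hypothetical, and the contents of sensor_loc are not given in this report, so the values used in any example are assumptions (they must be non-zero).

```python
def steer_from_sensors(readings, sensor_loc):
    """Steering term derived from the sensor with the lowest reading."""
    min_ind = min(range(len(readings)), key=lambda i: readings[i])
    return -1.0 / sensor_loc[min_ind]


def two_wheel_speeds(v, kp, steer):
    """Left and right motor inputs (vl, vr) for the two-wheel rover."""
    return v + kp * steer, v - kp * steer


def four_wheel_speeds(v, kp, steer):
    """Motor inputs (vlf, vrf, vlr, vrr) for the four-wheel rover."""
    left, right = two_wheel_speeds(v, kp, steer)
    return left, right, left, right
```

A larger kp turns the rover away from the nearest obstacle more sharply, which is why a moderate value helps to avoid overshoot.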

UGV Rudimentary AGI Algorithm

During the conception phase of the project, we proposed the following high-level description of the rudimentary AGI algorithm, with a description of its behaviours:

General methodology:

- Divide the whole maze into 4 quadrants.

- Once the rover moves from one quadrant to another, avoid backtracking until the current quadrant has been fully explored.

- Backtracking will occur if a landmark has not been found in this quadrant.

- Once a landmark has been found within a specific quadrant, disregard previously explored quadrants. This ensures progression.

- Add a camera module that is able to differentiate between dead-end landmarks and progression landmarks.

- Recognisable objects are put at dead ends which will help the rover identify them.

Experimental variables:

- Checkpoint landmark quantity.

- Checkpoint landmark location.

- Maze complexity.

- Relative maze size.

What information is the landmark providing?

- Correct path is being followed in order to reach the exit.

Rules for positioning landmarks:

- Do not position landmarks at the exit.

- Do not position landmarks at the start.

- Do not position a checkpoint landmark at dead ends.

- Try to position a landmark near the centre of the maze.

- Try to position a landmark in the same quadrant as the exit.

- Landmarks must be positioned along the exit path.

- Use additional landmarks to indicate dead ends, for the visual sensor to recognise.

Landmarks categorised by colour:

- Green: Checkpoint landmark (progression)

- Red: Dead end (avoid)

Most of the methods listed above were realised by the UGV team in the simulation phase of the project. One method that was not fully implemented, owing to both time and resource constraints, was the complete backtracking logic. The final implementation was considerably more sporadic and less methodical: the UGV could in theory return to explored sections of the maze, but this was a rare occurrence. The algorithm works irrespective of maze size and complexity, as long as the ‘Rules for positioning landmarks’ are followed correctly. It is important to add or remove checkpoint landmarks in proportion to the maze size. A greater number of checkpoint landmarks would more closely resemble a distinct solution path, which is to be avoided for the AGI system. The same algorithm was later used for the physical experiment.

Image recognition

A vision camera is attached to the rover in the simulation to provide image recognition. Using the camera output data, we are able to recognise an object’s colour and shape using image processing. A filter can be applied to the original image to remove background noise for better image quality. A more advanced AI camera could be used to help the UGV make better decisions. In the simulation, when the camera detects a red landmark, the rover turns 180 degrees immediately and does not proceed toward the dead end. This is similar to how humans turn away and take another path when they see a stop sign at the entry of a path.

Orientation correction

When the rover enters an open area, the orientation correction function is activated and aligns the rover’s orientation with the direction of the next landmark. This is done by first taking the arctangent of the coordinate differences between the rover and the landmark in the y and x directions:

ugvLandmarkTrans = [0, 0, math.atan2(nextlandmarkPos[1] - ugvPos[1], nextlandmarkPos[0] - ugvPos[0])]

The third element is the heading angle, in radians, of the vector pointing from the rover to the landmark. The correction angle, in degrees, is then:

correction = (ugvLandmarkTrans[2])*180/PI - ugvOrien[2]*180/PI

When the magnitude of this correction angle is greater than 5 degrees, the orientation of the rover is corrected by turning left or right.
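The correction can be sketched as a small function. Two details here are assumptions added for illustration and are not stated in the text: the difference is wrapped into the range [-180, 180) so that the rover takes the shorter turn, and a positive correction is interpreted as a counter-clockwise (left) turn. The function names are hypothetical.

```python
import math


def heading_correction(ugv_pos, ugv_yaw, landmark_pos):
    """Signed angle in degrees the rover must turn to face the landmark.

    ugv_yaw is the current heading in radians.
    """
    target = math.atan2(landmark_pos[1] - ugv_pos[1], landmark_pos[0] - ugv_pos[0])
    diff = math.degrees(target - ugv_yaw)
    return (diff + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)


def turn_command(correction_deg, tolerance_deg=5.0):
    """Decide the action from the correction angle and the 5 degree tolerance."""
    if abs(correction_deg) <= tolerance_deg:
        return "straight"
    return "left" if correction_deg > 0 else "right"
```

Without the wrapping step, a rover heading at 170 degrees towards a landmark at about -174 degrees would compute a correction of roughly -344 degrees instead of a turn of about 16 degrees.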

Real-time landmark update

As the rover proceeds toward a landmark, it marks the landmark as reached once it is within a certain radius of it. The rover then calculates the next closest landmark based on its current position and moves toward it. This information is updated in real time, which allows the rover to react to unexpected changes in the environment. This is done by the function find_index_colsest_landmark() and by updating the landmarks list.
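The update logic might be sketched as follows. This is not the project's find_index_colsest_landmark() implementation, which is not shown here; the function name, the dictionary layout of the landmarks list and the 0.3m reach radius are hypothetical.

```python
import math


def next_landmark(ugv_pos, landmarks, reach_radius=0.3):
    """Mark landmarks within reach as reached, then pick the closest pending one.

    landmarks is a list of dicts {"pos": (x, y), "reached": bool} that is
    updated in place. Returns the index of the next landmark, or None when
    every landmark has been reached.
    """
    def distance(lm):
        return math.hypot(lm["pos"][0] - ugv_pos[0], lm["pos"][1] - ugv_pos[1])

    for lm in landmarks:
        if not lm["reached"] and distance(lm) <= reach_radius:
            lm["reached"] = True
    pending = [i for i, lm in enumerate(landmarks) if not lm["reached"]]
    if not pending:
        return None
    return min(pending, key=lambda i: distance(landmarks[i]))
```

Because the function is re-evaluated from the current position at every step, a landmark that is moved or added during the run is taken into account on the next call.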

Physical Platform

UAV

UAV Motion Control

Two different approaches were taken to control the UAV motion for image capturing, because the method applied in the virtual environment is not viable without modification: the camera on the Tello drone is front-facing. The first, single image approach was to fly sufficiently far and high to capture one image that covers the entire maze. The second approach was to attach an acrylic mirror sheet in front of the camera to reflect the image of the ground, which emulates a downward-facing camera.

Single Image Approach

This approach involves taking off to a specified height to capture an image of the entire maze. Due to space constraints, the UAV can only fly up to a maximum height of 2.4m, so the maze was shrunk to 1.5m x 1.5m to demonstrate the feasibility of the idea. For practical applications in the real world, however, the UAV will be required to scan an environment many times larger than the current maze size, so the second approach may be more practical.

Multiple Image Approach

This approach involves a more tedious procedure of defining fixed intervals between captured images for a fixed maze size. The traversal path follows the same ‘S’ pattern as the method applied in the virtual platform. However, due to the underperforming gyroscopes and the acrylic mirror sheet, the quality of the images taken from the UAV during flight was largely compromised. The images were relatively pixelated, which makes combining them a challenging task.

Due to time and budget constraints, an alternative of hanging the drone from safety nets was used to prove the feasibility of the initial concept while eliminating these issues. The UAV was hung with the front camera facing vertically downwards to eliminate the reflection issue from the mirror sheet. A total of 9 images were taken (3 per row, 3 per column) to be combined with the image stitching algorithm detailed in the next section.

Image stitching

In the virtual simulation environment, the UAV movement is accurate and precise, so the entire maze image can be formed simply by joining the separate maze images. The situation is different on the physical platform, where the main problem is that the UAV movement is not stable. Unstable movement causes errors and deviations from the desired position, so the simple image concatenation used in the virtual environment fails. Therefore, instead of relying on strict UAV motion control, an image stitching method was used. The method is based on the Scale-Invariant Feature Transform (SIFT), a feature detection and matching algorithm. Using the matched feature points in two overlapping images, a spatial transformation can be applied to stitch the images together. The basic image stitching algorithm is adapted from [1]. Because the maze environment is visually uniform and therefore lacks features, image stitching algorithms cannot work appropriately on it. In addition, the Tello’s camera quality is not sufficient to capture maze images with enough features. Hence, additional features with simple shapes, such as stars and ovals, and colours significantly different from the maze were added manually.

Image processing

After the entire maze image has been stitched, it needs to be processed so that it contains only the maze information, such as the walls, path, and landmarks. To remove the unwanted content, the first step is to extract the information that we want. Relying on the colour differences between the maze wall and most unwanted objects, a script from [x] can be used to determine the maze wall colour as a range in HSV colour space. After most unwanted content has been removed, some shapes from the extra features may still remain, because the lighting conditions and similar colour ranges leave thin edges in the image. A Canny detector and Hough Line Transform can be applied to remove those thin edges. Because line detection retains only the line information, the wall area between two edges needs to be filled in. A morphological filter with a closing operation can fill the small empty areas and produce an image that can be converted to a binary grid map.
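To show what the closing operation does, the sketch below implements it on a binary grid in plain Python as a dilation followed by an erosion. It is a stand-in for an image processing library rather than the project's code: the 3 x 3 square structuring element, the handling of the image border (cells outside the grid are ignored) and the function names are assumptions.

```python
def _neighbourhood_filter(grid, reducer):
    """Apply `reducer` (max or min) over each cell's 3 x 3 neighbourhood."""
    rows, cols = len(grid), len(grid[0])
    return [
        [
            reducer(
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def dilate(grid):
    """A cell becomes wall (1) if any neighbour is wall."""
    return _neighbourhood_filter(grid, max)


def erode(grid):
    """A cell stays wall (1) only if every neighbour is wall."""
    return _neighbourhood_filter(grid, min)


def close_gaps(grid):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(grid))
```

Applied to a wall with a one-cell gap, close_gaps fills the gap while leaving the wall thickness and the surrounding free space unchanged.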

UGV

Robomaster

Robomaster EP core is an educational robot from DJI. It has a robotic arm and a gripper that allow users to grab and place small objects. The Mecanum wheels allow for omnidirectional movement. Multiple extension modules, including a Servo, Infrared Distance Sensor and Sensor Adapter, are available to increase the capabilities of the rover. In our project, we attached 4 Infrared Distance Sensors to the rover to enable object avoidance: 2 sensors at the front and 2 on the left and right sides. Robomaster is also compatible with third-party sensors to further expand its functions. Scratch programming is available for beginner-level programmers and Python programming for more advanced programmers.

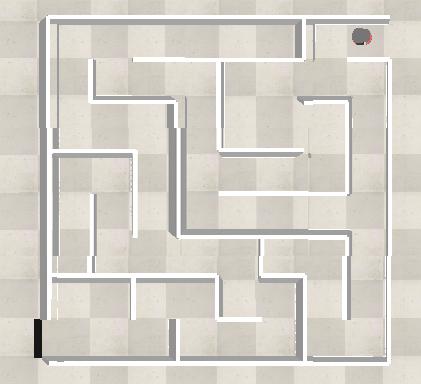

Maze

The maze is constructed from extruded polystyrene (XPS) and has a start gate and an exit. Several green and red landmarks were placed in the maze for object recognition. The maze contains multiple dead ends, straight sections and “s”-shaped turns.

Object avoidance

Using the on-board Infrared Distance Sensors, we are able to achieve this function to a certain extent. Below is the pseudo-code of the object avoidance function:

If (front sensors > certain threshold)

-- Move straight

Else if (front sensors < certain threshold & left sensor >= right sensor)

-- Turn left

Else if (front sensors < certain threshold & left sensor < right sensor)

-- Turn right

Else

-- Turn 180 degrees
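The pseudo-code above can be turned into a small runnable sketch. Read literally, its final branch is reached only when the front reading equals the threshold exactly, so this sketch makes an assumption that is not in the original: the rover turns around when the front and both sides are all blocked. The function name, the use of the smaller of the two front readings and the 0.3 threshold are also hypothetical.

```python
def avoidance_action(front_left, front_right, left, right, threshold=0.3):
    """Choose a movement from four distance readings (larger means more space)."""
    front = min(front_left, front_right)  # be conservative about the front
    if front > threshold:
        return "straight"
    # Assumption: a dead end is when the front and both sides are blocked.
    if max(left, right) <= threshold:
        return "turn_around"
    return "left" if left >= right else "right"
```

For example, avoidance_action(0.2, 1.0, 0.8, 0.4) chooses a left turn because the front is blocked and the left side has more free space.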

Unfortunately, the complete collision avoidance subsystem could not be implemented on the physical platform due to the lack of available sensors. The platform that was selected also did not facilitate seamless integration of any additional sensors. The final AGI simulation depended on 16 sensors in total, in order to function properly, compared to the physical platform’s 4 sensors.

Image recognition

Image recognition on the physical platform is done in a similar way to how it was done in the simulation. There is a vision camera that is attached to the chassis of the UGV, that is able to differentiate between different numeric values. In the real-world maze environment, even numbers represent checkpoint landmarks, while odd numbers represent dead-end landmarks. This is different from the colour-based image recognition implemented in the simulation platform but is almost identical in its functionality. The result of this is that the UGV is able to clearly and quickly identify dead ends, and turn away from them in order to find an alternate path.

Results

UAV Maze Reconstruction

Three images each corresponding to the start, mid, and end rows of the maze have been combined to form the final maze image. Several adjustments have been made to produce the best concatenation result of three images with a minimal amount of overlaps.

Single Image Approach

The physical maze for this approach has been limited to a size of 1.5m x 1.5m, which is half the actual size the team was after. This approach was used to merely demonstrate the single image idea while working with a front-facing camera. This approach may not be feasible in performing real-world search and rescue missions that involve large areas. This is because the UAV is unable to cover the entire area with a single image while still maintaining an acceptable level of resolution.

After applying the corner detector, more than four corners were detected in the maze. To obtain the four corners, all detected corners were sorted into an array in ascending order, and the first two and last two elements of the sorted array were taken. These form the four corners of the maze needed for the perspective transformation.

Multiple Image Approach

The wobbling of the drone, together with its low camera resolution, resulted in output images that were relatively pixelated and blurry. A waiting time was introduced into the algorithm to let the drone stabilise; however, this was insufficient for the stitching function. Therefore, the alternative method of using a stationary UAV without the mirror sheet was implemented.

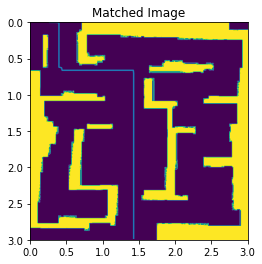

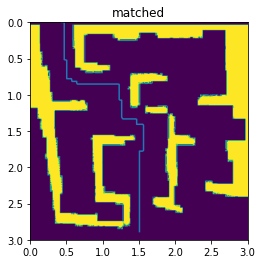

Maze content extraction

To remove the unwanted objects, the colour ranges used for the key components in the image are (116, 115, 75) to (220, 239, 226) and (244, 211, 127) to (254, 255, 245) in HSV colour space. After the unwanted objects have been removed, some unwanted edges still remain.
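How such ranges select pixels can be illustrated with a plain-Python mask. The two ranges are the ones quoted above; the function names, the inclusive bounds and the representation of the image as rows of (H, S, V) tuples are assumptions made for this sketch.

```python
KEY_RANGES = [
    ((116, 115, 75), (220, 239, 226)),
    ((244, 211, 127), (254, 255, 245)),
]


def in_range(pixel, low, high):
    """True if every channel of the pixel lies within [low, high]."""
    return all(lo <= ch <= hi for ch, lo, hi in zip(pixel, low, high))


def key_component_mask(image, ranges=KEY_RANGES):
    """Binary mask: 1 where a pixel falls in any of the ranges, else 0."""
    return [
        [1 if any(in_range(px, lo, hi) for lo, hi in ranges) else 0 for px in row]
        for row in image
    ]
```

Pixels outside both ranges become 0 in the mask, which is how the unwanted objects are dropped before edge filtering.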

Through the filtering of Canny detector and Hough line transformation, the maze image becomes clearer.

Path planning illustration

Each of the two path planning algorithms has its own advantages and disadvantages. Because of the characteristics of the A* algorithm, it can be time-consuming when solving a complex maze. The BFS algorithm is good at finding a path in a large, empty space.
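For reference when reading this comparison, a minimal A* sketch on a binary grid is given below. It is an illustration rather than the project's implementation: it assumes 4-connected moves of unit cost and uses the straight-line distance to the endpoint as the heuristic, and the function name is hypothetical.

```python
import heapq
import math


def astar_path(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Straight-line distance to the endpoint.
        return math.hypot(cell[0] - goal[0], cell[1] - goal[1])

    cost_so_far = {start: 0.0}
    parent = {start: None}
    frontier = [(heuristic(start), 0.0, start)]  # (estimated total, cost, cell)
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if cost > cost_so_far[cell]:
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1.0
                if new_cost < cost_so_far.get(nxt, math.inf):
                    cost_so_far[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

An additional movement cost near walls, as described for the configuration space, could be added to new_cost without changing the rest of the search.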

According to the processed maze image, the BFS algorithm can be applied.

The maze structure differs slightly between the above images. Both images show the path out of the maze. The left image looks fine for the UGV, but the right one has some distortion in the bottom half of the image, which can affect the UGV movement. The current image stitching algorithm does not perform well enough to provide correct information to the UGV in the system without AGI.

ANI & AGI Comparison

From conducted experiments and tests it is evident that a clear distinction between characteristics of the ANI system and AGI system can be drawn.

AGI is less efficient but adapts to changing maze environments and avoids obstacles. This is due to the fact that the path for the AGI Rover is not preplanned. It reacts, using onboard sensors and extensive logic, to overcome any obstacles it may encounter. The path taken is not always optimal, nor is it the most direct or successful in many instances. The advantage is that there is no requirement for strenuous pre-planning or accurate mapping.

ANI is direct and precise, but the path must be planned anew every time the environment changes in any way. This is tedious and time consuming. Additionally, systems may not always be online to facilitate this planning or, more likely, the situation would not accommodate such a tedious and time-consuming process. ANI needs precise information to work; AGI, on the other hand, does not.

Perhaps comparing these two approaches in a maze-solving application is not the ideal test case, but it did serve to demonstrate the open-endedness of AGI as a subfield of artificial intelligence, and the incredible potential that this field holds. It is important to continue contributing research to this field, and to promote it to the forefront whenever emerging technologies are discussed.

Conclusion & Future Work

The performance comparison of the two systems shows that the non-AGI system is more robust and efficient than the AGI system. However, the AGI system has higher adaptability in solving problems in varying environments. There is vast potential for improvement and boundless possibilities, from the rudimentary form of AGI designed here to an AGI system equipped with human-like capabilities.

References

[1] A. Kaplan and M. Haenlein, "Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence", Business Horizons, vol. 62, no. 1, pp. 15-25, 2019.

[2] A. Zhao et al., "Aircraft Recognition Based on Landmark Detection in Remote Sensing Images", IEEE Geoscience and Remote Sensing Letters, vol. 14, no. 8, pp. 1413-1417, 2017. Available: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=7970161.

[3] "HSV-Color-Picker/HSV Color Picker.py at master · alieldinayman/HSV-Color-Picker", GitHub, 2020. [Online]. Available: https://github.com/alieldinayman/HSV-Color-Picker/blob/master/HSV%20Color%20Picker.py. [Accessed: 24- Oct- 2021].

[4] L. Thomas and J. Gehrig, "Multi-template matching: a versatile tool for object-localization in microscopy images", BMC Bioinformatics, vol. 21, no. 1, p. 1, 2020. Available: https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-020-3363-7.

[5] S. D. Baum, B. Goertzel and T. G. Goertzel, "How Long Until Human-Level AI? Results from an Expert Assessment", Technological Forecasting and Social Change, vol. 78, no. 1, pp. 185-195, 2011. Available: https://sethbaum.com/ac/2011_AI-Experts.pdf.

[6] V. Kommineni, "Image Stitching Using OpenCV", Medium, 2021. [Online]. Available: https://towardsdatascience.com/image-stitching-using-opencv-817779c86a83. [Accessed: 17- Oct- 2021].