Projects:2014s2-75 Formation Control of Two Autonomous Smart Cars

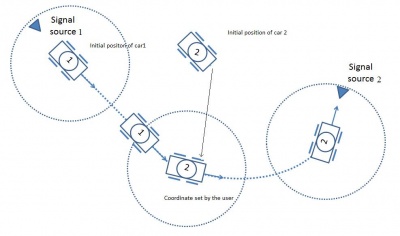

The aim of this project is to build a model of two smart cars that move independently, without any human intervention. Each car should be autonomous, recognise its destination and move to it along a definite path. The cars operate in two major modes: the control mode and the signal relay mode. In control mode, the cars follow a chase-dodge model. One car chases the other at a fixed speed, while the other car recognises the chase and tries to evade it. When the distance between them reaches a particular value, the cars change their behaviour: the dodger turns back and pursues the chaser, and the former chaser turns away to evade it. In signal relay mode, the movements of both cars are coordinated to carry a signal from a source to a destination. Car 1 carries a signal or information to a particular location, and Car 2 moves from its initial position to the same location. Car 1 passes the data to Car 2, which then carries it from that location to the final destination.


Background and Significance

Over the years, driving has become one of the biggest threats to life. The rapid growth in the number of vehicles has brought a proportional increase in the number of accidents. Studies show that about 1.24 million people die in traffic accidents across the globe each year. Carelessness, drink driving and speeding are the major causes of these accidents. Despite safety advances such as ABS, airbags and anti-collision systems, cars remain more dangerous than other modes of transport such as buses, aircraft or trains. Apart from accidents, driving has further disadvantages such as stress and fatigue, and cases of mental illness have even been reported as a result of long-distance driving in congested traffic.

Autonomous cars could be a solution to this situation. If a sufficiently sophisticated system can be built, smart cars can reduce the accidents caused by human error, and passengers can relax without the strain of driving. Research on smart cars has advanced significantly in recent years, and Google’s autonomous car project has gone on to test its cars in real environments. GPS is an important factor in this implementation: the positioning system should provide not only the car's location and coordinates but also detailed information about the environment in which the car is driving, such as the colour of traffic lights, the curb width and the height of speed bumps. Google uses its maps and satellite images for path finding, inertial sensors to measure motion, and wheel encoders to calculate speed. Because of difficulties in recognising traffic-light colours in glare or rain, in mapping the whole world in detail, and in decision making, smart car technology is still in its development stage.

The intelligent car is a branch of intelligent robotics; it is a system that combines automatic control, artificial intelligence, mechanical engineering, image processing and computer science. The main difficulty in an intelligent car is image processing, because its accuracy directly affects the car's driving direction, driving speed and ability to dodge obstacles. Detecting and tracking moving targets are the main parts of this image processing. Image processing has improved considerably thanks to better software, greater processing capability, digital image processing techniques and better hardware, and several methods have been introduced for processing images and finding moving targets. Images are usually captured in RGB and are converted to HSV for processing. The CamShift algorithm, pixel processing, background subtraction, Gaussian distribution models and noise elimination are some of the modern techniques used in image processing.

Motivation

This project explores some of the ways in which the autonomous movement of cars can be designed and managed. The robotics and artificial intelligence techniques proposed here can be applied in robotics and in traffic management. The project also gives hands-on experience with Arduino robotic technology, which is a starting point for more advanced robotics. Traffic signalling and camera systems have made significant use of image processing in the recent past, and the image processing used in this project serves as a guideline for finding and detecting moving targets and controlling robots towards them. The same technology can be used in machine vision and medical imaging. With more accurate sensors such as gyroscopes, the project could in future be extended to remotely guide robots into inaccessible and congested areas to investigate them.

Requirements

The project requirements can be classified into hardware design and implementation, and software application. The major requirements of the project are:

• Research and design a hardware system which can serve as the model of two smart cars and a control system which is to be used as the nerve center of the process

• The hardware design should consist of a model of two smart cars which must move independently

• Design and implementation of a core algorithm to perform the chase dodge model

• Implementation of a tracking system to track the locations of the cars in real time

• Implementation of a communication system through which the cars receive their locations from the tracking system, and of a drive and power system so that the cars move according to the algorithm.

Hardware

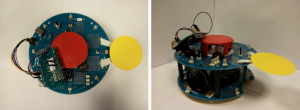

Arduino Robot

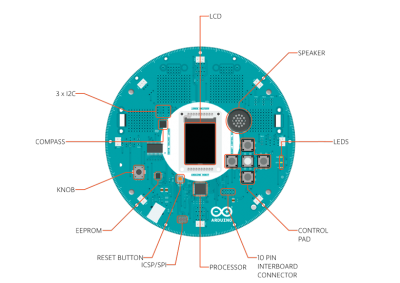

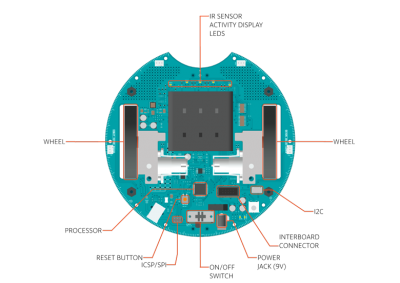

Arduino robots serve as the models of the cars in this project. The Arduino Robot is a robot on wheels from the Arduino family of microcontroller-based products. Arduino is open source, which means its documentation and software are freely available. The Arduino family includes boards built on microcontrollers, such as the Arduino Uno, Arduino Leonardo, Arduino Esplora and Arduino Mega; shields such as the GSM, Ethernet, Wi-Fi and Motor shields; and accessory kits such as the Arduino ISP, serial adapter and proto shield.

Components

The Arduino robot mainly consists of a control board and a motor board. Each board has its own Atmel ATmega32u4 microcontroller, and the two boards communicate over a serial connection. The major components of the Arduino robot are:

• On board Infra-red sensors

• Compass

• TFT-LCD module

• Potentiometer voltage dividers

• External SD card

• 5V brushed DC motors

• Speakers (8 ohm)

• LED indicators

• Input keypad

The infra-red sensors on the robot are used to sense irregularities in the surface on which it moves. The compass measures the direction in which the robot is heading, although electrical interference from power lines and machines can reduce its accuracy. The thin-film-transistor liquid crystal display (TFT-LCD) shows images and the robot's outputs and is driven over the SPI serial interface. Potentiometer voltage dividers divide the voltage between the two wheels of the robot. A second potentiometer knob can be used to vary a voltage that the program reads, so that different outputs can be selected by programming. The external SD card stores images, sounds and data; the images can be shown on the display, and the speaker can play sounds and music. The 5V brushed DC motors convert electrical energy from the battery into mechanical energy that drives the wheels. There are three LED indicators, including one each for data transmission and reception. The keypad is used to give inputs to the robot.

Memory

The Arduino robot can be programmed on either the control board or the motor board. Each ATmega32u4 microcontroller has 32KB of flash memory, of which 4KB is used by the boot loader, leaving 28KB per board for the user program.

Input and Output

The Arduino has a number of input and output pins that can be used to add external parts such as sensors, inputs and switches. On the control board, pins TK0 to TK7 are multiplexed onto a single TK input of the microcontroller and are used to connect sensors and actuators. Pins TKD0 to TKD5 on the control board and TK1 to TK4 on the motor board are connected directly to the ATmega32u4 and are used as digital inputs and outputs. The Arduino also has 10 pins for serial communication and four I2C connectors.

Camera

AXIS P3364-VE cameras can be used both indoors and outdoors. The camera uses SVGA technology, has a resolution of up to 5 megapixels and supports SMPTE HDTV video at 720p and 1080p, giving excellent image quality. It includes Axis Lightfinder technology and is highly sensitive to light, which enables it to capture high-quality images in low light. It also uses P-Iris control, which sets the position of the iris to optimise depth of field. The AXIS P33 series provides H.264 (baseline profile) and Motion JPEG streams, which can be configured individually. The cameras support Power over Ethernet (IEEE 802.3af) and can work in extreme weather conditions. Its high-quality imaging makes the camera well suited to image processing. The image sensor is a progressive-scan RGB CMOS sensor. The camera is available with 6mm and 12mm lenses; the 6mm model, which provides an angle of view of up to 105°, is used in this project. An IR-cut filter allows day and night operation. The shutter time is 1/24500s when operated on a 50Hz power supply, and the frame rate is 25fps. The camera supports the HTTP, HTTPS, FTP, SMTP, TCP, NTP, UDP, DNS, RTCP, SOCKS and DHCP protocols.

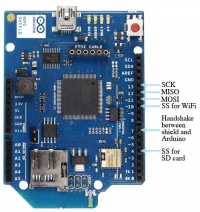

Wi-Fi module

The Arduino Wi-Fi shield, built around the HDG204 wireless module, connects the Arduino robot to the internet and to local area networks without wires. The shield communicates with the Arduino over the Serial Peripheral Interface (SPI) through the ICSP header. It uses the 802.11b/g wireless standard and supports WEP and WPA2 encryption. The shield also carries an AT32UC3 microcontroller that provides the network (IP) stack, supporting both the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP). The WiFi library in the Arduino IDE is needed to program the shield. The shield also has an SD card slot and a USB port for firmware updates. Its four LED indicators show the status of digital pin 9 of the Arduino, a successful connection (link), an error in the connection (error) and the sending or receiving of data (data).

Network router

The network router connects the camera's Wi-Fi network to the computer's Wi-Fi network, so that the camera can send images to the computer as packets of data. The router used in the project is a TP-Link wireless router, model G54. It supports a maximum data rate of 54Mbps, provides long-range wireless connectivity and supports WPA and WPA2 encryption as well as WEP. It supports the 802.11b/g wireless standards and operates in the 2.4GHz band.

Computer

The computer processes the images received from the camera, and this processing must happen in real time. At 25fps with 1.3MP of pixel data in each frame, the computer needs high processing capability. The recommended configuration is an Intel Core i7 processor with a clock speed of 3.20GHz and 8GB of RAM; the processor has 2 cores and 4 threads. Intel HD Graphics 6000 integrated graphics is also recommended for better image-processing performance; it supports resolutions up to 2560 x 1600 at 60Hz. The operating system can be Windows, Linux or Mac OS.
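As a rough check on that load, the raw pixel rate follows from the figures above. This assumes each decoded frame is held as 8-bit RGB (3 bytes per pixel), which the original does not state. The camera itself sends compressed H.264 or Motion JPEG, so the network carries far less than this, but every frame has to be decoded to raw pixels before colour conversion.

```latex
\begin{aligned}
25\ \tfrac{\text{frames}}{\text{s}} \times 1.3\times10^{6}\ \tfrac{\text{pixels}}{\text{frame}} &= 3.25\times10^{7}\ \tfrac{\text{pixels}}{\text{s}}\\
3.25\times10^{7}\ \tfrac{\text{pixels}}{\text{s}} \times 3\ \tfrac{\text{bytes}}{\text{pixel}} &= 97.5\ \tfrac{\text{MB}}{\text{s}}
\end{aligned}
```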

Software

Arduino IDE

The Arduino Integrated Development Environment (IDE) is the open-source software used to program the Arduino robot. It is an interactive environment for developing Arduino programs. The IDE is derived from the Processing development environment and supports coding in C and C++. The IDE itself is written in Java and runs on Windows, Linux, Mac OS and other platforms. It compiles programs with the GNU toolchain and the AVR libraries, and uses avrdude to upload them. The Arduino boards used here are built on Atmel AVR microcontrollers, so they can also be programmed with plain AVR programming.

Many libraries are associated with the Arduino IDE. These libraries contain code that connects the Arduino to the hardware inside the robot and to external devices; available libraries include drivers for accelerometers, analogue-to-digital converters, sensors, Wi-Fi, LEDs, I2C connections, SD cards, SPI displays, gyroscopes, PWM servos, EEPROMs, motors, Ethernet, GSM and other devices that can be connected to an Arduino.

The robot is connected to a particular port for uploading the program, and both the port and the type of Arduino board are selected in the IDE. The IDE also provides options for burning the boot loader and for fixing file encoding. The boot loader is stored in the microcontroller's memory and allows the Arduino to accept new code without any additional hardware.

Each program written for an Arduino is called a sketch; sketches are written in the IDE's text editor and saved as .ino files. A sketch has a setup( ) part and a loop( ) part: setup( ) runs once and is where initialisations and constants are placed, while loop( ) runs repeatedly until the robot is switched off. A console window shows messages, outputs and error details. The Arduino language is organised into three major parts: values, functions and structure.
The serial monitor displays the values sent over the serial connection between the robot and the computer, which makes it possible to check values even without an LCD screen. Notable applications developed with the Arduino IDE include Xoscillo (an oscilloscope), OBDuino (an interface to the on-board computer of modern cars), ArduPilot (for drones) and ArduinoPhone.

OpenCV

OpenCV is a programming library that mainly focuses on real-time computer vision. It is written in C and C++ and can be used from Microsoft Visual Studio. The colour detection and tracking programs in this project are based on the OpenCV library, version 2.4.1, which processes the images sent from the camera to the computer[1]. OpenCV is open source, was originally developed by Intel Corporation and is freely available. It is a cross-platform library that supports Windows, Linux and Mac OS X and is well suited to image processing. It has C++, C, Python and Java interfaces, and its major advantage is computational efficiency, with a focus on real-time applications. OpenCV can use OpenCL (Open Computing Language) to run computations across diverse platforms, and it has both high-level and low-level application programming interfaces[1]. Its uses range from art, mining and inspection to mapping with robots.

High dynamic range (HDR) imaging uses more than 8 bits per channel and combines less exposure in the bright areas of an image with more exposure in the dark areas, producing a wider dynamic range. The major features of OpenCV include conversion, copying, allocation and setting of images; reading input and writing output as images and video; vector analysis and linear algebra; and data structures such as graphs, blocks, sequences and lists. The library is organised into modules for the major applications: core functionality, which defines the basic structures and variables; image processing, including histograms and colour space conversions; video processing, including motion estimation, object tracking and background subtraction; video codecs; object detection; highgui, for the graphical user interface; and GPU-accelerated algorithms.
The applications of OpenCV include face recognition, image filtering, robotics, tracking and object identification.

Hardware Design and Methodology

The Arduino robots serve as the models of the smart cars. Colour plates, red (RGB value) and green (RGB value), are fixed above the two robots respectively. The camera captures images of the two robots and the background, and these images are sent to the computer. A network router passes the data from the camera's Wi-Fi network to the computer's Wi-Fi network; the computer receives each image as packets of data and reassembles them into the image. The OpenCV program then locates the colour plates, and the centre-of-mass method gives the centre point of each plate, which is also the centre point of its robot. The coordinates of these centre points are sent to the robots through the computer's local Wi-Fi network. Through its Arduino Wi-Fi shield, each robot receives the coordinates of its own location and of the other robot's location. The robots are programmed in the Arduino IDE to carry out the chaser-dodger model and the relay race model. To determine the direction in which each robot is facing, the robots use the lantern fish method, which derives the orientation from the camera image. In the relay race model, the Wi-Fi shield of one robot receives the data from the computer and the robot moves to a particular location, where it passes the data to the other robot. The receiving robot in turn moves from its initial position to that location and then on to the destination, where it delivers the data.
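The chaser-dodger behaviour described in the introduction, where the roles swap when the cars come within a particular distance, can be sketched as a small state machine. This is a hypothetical illustration, not the project's Arduino code: the names `Role`, `ChaseState` and `updateRoles`, and the swap and re-arm distances, are assumptions. The re-arm distance adds hysteresis so that the roles do not flip back and forth while the cars stay close together.

```cpp
#include <cmath>

// Hypothetical role-switching logic for the chaser-dodger mode.
enum class Role { Chaser, Dodger };

struct ChaseState {
    Role car1 = Role::Chaser; // car 2 always holds the opposite role
    bool armed = true;        // a swap is allowed only while armed
};

// Euclidean distance between the two robot centres, in image pixels.
double distanceBetween(double ax, double ay, double bx, double by) {
    return std::hypot(ax - bx, ay - by);
}

// Swap roles once when the cars come within swapDist, then refuse further
// swaps until they have separated beyond rearmDist (hysteresis).
void updateRoles(ChaseState& s, double dist, double swapDist, double rearmDist) {
    if (s.armed && dist < swapDist) {
        s.car1 = (s.car1 == Role::Chaser) ? Role::Dodger : Role::Chaser;
        s.armed = false;
    } else if (!s.armed && dist > rearmDist) {
        s.armed = true;
    }
}
```

With, for example, a swap distance of 30 and a re-arm distance of 60 pixels, a single close approach swaps the roles exactly once.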

Determining Camera Specifications

Requirements

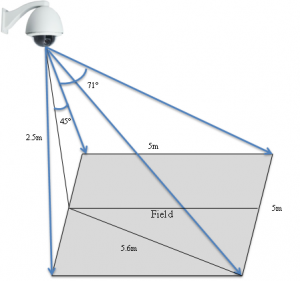

Height of the camera above the ground = 2.5m

Length x breadth of the field = 5m x 5m

Area to be covered by the camera = 5m x 5m = 25m²

Ground resolution: 1cm per pixel or finer

Frame rate ≥ 20fps

Wi-Fi support: 802.11b/g wireless LAN with the TCP/IP network protocol

Calculations

Field of view

Height of the camera from field = 2.5m

Distance to the farthest view on X axis = 2.5m

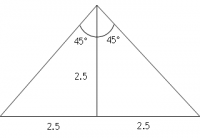

Angle from camera to farthest side = arctan(2.5/2.5) = 45°

Field of view in x axis = 2×45° = 90°

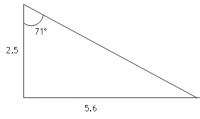

Angle from camera to farthest side = arctan(5.6/2.5) ≈ 66°

Field of view in y axis ≈ 66°

So minimum camera field of view = 90°

Resolution

Area to be covered = 25m²

Required ground resolution = 1cm per pixel

So, the minimum resolution is 5m / 1cm = 500 pixels along each side of the field, i.e. 500 x 500 = 250,000 pixels ≈ 0.25MP
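The field-of-view and resolution calculations above can be reproduced with two helper functions. This is a sketch with illustrative names; it assumes the camera looks straight down, so that the angle from the vertical to a point at horizontal distance d is arctan(d/h), as in the x-axis calculation.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Full field of view (degrees) needed by a downward-facing camera at height
// `height` to see points `halfWidth` away on either side of the point
// directly below it: 2 * arctan(halfWidth / height).
double requiredFovDeg(double halfWidth, double height) {
    return 2.0 * std::atan(halfWidth / height) * 180.0 / kPi;
}

// Pixels needed along one side of the field for a given ground resolution.
// Both arguments must use the same unit (e.g. centimetres).
long pixelsPerSide(double side, double resolution) {
    return static_cast<long>(std::ceil(side / resolution));
}
```

For the 5m field, requiredFovDeg(2.5, 2.5) gives about 90°, and pixelsPerSide(500, 1) gives 500 pixels per side, or 250,000 pixels in total.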

Experiments

Lighting LEDs

One requirement of the project is a reliable way for the camera to detect the robots. The initial plan was to mount LEDs above each robot so that the camera could detect them, and the programming and wiring to light the LEDs were completed. In practice, however, the camera had difficulty detecting the LEDs, especially in daylight glare, so colour plates were used instead.

Wheel Calibration

A wheel calibration program is used to make the robot move as straight as possible by dividing the voltage between the two wheel motors in the right proportion. The potentiometer trim on the motor board acts as a voltage divider between the wheels, and by adjusting it the robot can be made to move reasonably straight. The program reads the trim value and displays it on the screen, together with the voltages sent to the wheels and instructions telling the user how to make the adjustment. The compass is read continuously to check whether the robot keeps a constant direction. The trim is turned clockwise or anticlockwise with a screwdriver to redistribute the voltage between the motors.

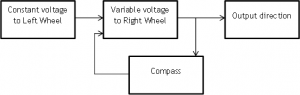

Robot Orientation

Even after wheel calibration, the robot deviates from a straight path, especially over long distances. This is due to imperfections in the shape of the tyres and small differences in size between the two tyres. To eliminate these deviations, constant feedback is needed: the direction in which the robot is heading is measured and compared with the previous direction. In this experiment the feedback comes from the compass, which is read in each loop of the program. Initially, equal voltages are applied to both wheels. The program then reads the compass and compares the value with the previous reading. If there is a difference, the voltage to the right wheel is adjusted while the voltage to the left wheel is kept constant: a positive error voltage is added to the right wheel if the robot drifts right, and a negative error voltage, which lowers the original voltage, is applied otherwise. The larger the deviation, the larger the error voltage. This brings the robot back to its original orientation. The experiment was done in a controlled environment free from external magnetic interference.
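The compass feedback loop above can be sketched as a proportional correction. It assumes compass readings in degrees that increase clockwise (0 to 359); the gain `gainVoltsPerDeg` is a hypothetical tuning parameter and the function names are illustrative, not taken from the project's sketch. Wrapping the difference into [-180, 180) matters because a drift from 359° to 1° is a 2° turn, not a 358° one.

```cpp
#include <cmath>

// Signed heading change in degrees, wrapped to [-180, 180).
// Positive means the robot has turned clockwise (drifted right).
double headingError(double previousDeg, double currentDeg) {
    double e = std::fmod(currentDeg - previousDeg, 360.0);
    if (e < -180.0) e += 360.0;
    if (e >= 180.0) e -= 360.0;
    return e;
}

struct WheelVoltages { double left; double right; };

// Keep the left wheel at the base voltage and add gain * error to the right
// wheel. A drift to the right (positive error) raises the right-wheel
// voltage, which turns the robot back to the left.
WheelVoltages correctHeading(double baseVolts, double previousDeg,
                             double currentDeg, double gainVoltsPerDeg) {
    const double err = headingError(previousDeg, currentDeg);
    return {baseVolts, baseVolts + gainVoltsPerDeg * err};
}
```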

Eliminating oscillations

The feedback applied to the robot motors can make the robot unstable and drive it along an oscillating path, so that it wobbles from side to side. This happens because of how the wheels respond to the feedback: the inertia of the motors makes them overcompensate for deviations from the straight path, which pushes the robot off in the opposite direction. The oscillation can be eliminated by limiting the error voltage supplied to the wheels. When the error voltage exceeds a set value, the program clamps it to that maximum, which in turn limits the voltage applied to the wheel. This lets the robot move smoothly and eliminates the oscillations. The maximum error voltage was determined by trial and error to be 0.39V.
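Limiting the error voltage amounts to a clamp. The minimal sketch below uses the 0.39V value found in the experiment; applying the same limit to negative errors is an assumption, since the text only describes the maximum. The function name is illustrative.

```cpp
#include <algorithm>

// Maximum correction found by trial and error in the experiment.
constexpr double kMaxErrorVolts = 0.39;

// Clamp the correction to [-maxVolts, +maxVolts] so that a large deviation
// cannot produce a large voltage step, which caused overshoot and wobble.
double limitErrorVoltage(double errorVolts, double maxVolts = kMaxErrorVolts) {
    return std::max(-maxVolts, std::min(errorVolts, maxVolts));
}
```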

Orientation control

The images sent by the camera are processed by the computer to identify the locations of the robots, and these locations are sent to the robots over Wi-Fi as coordinates. From these coordinates the program finds the direction in which the robot is pointing and the direction in which the chaser robot should turn in order to chase the dodger robot; the orientation to turn to is calculated from the coordinates using the tangent function. Once this orientation is determined, the program applies a positive voltage to one wheel and a negative voltage to the other, so that one wheel rolls forward and the other backward and the robot rotates on its axis. Feedback from the compass shows whether the robot has reached the required orientation. When it has, both wheels are given positive voltages and the robot moves in a straight line. During rotation, the voltage applied to the wheels is proportional to the remaining rotation, so as the robot nears the target orientation the motors slow down, reducing torque and inertia and preventing overshoot. The program also chooses the direction of rotation from the required angle: if the robot needs to rotate less in the anticlockwise direction than in the clockwise direction, it rotates anticlockwise, and vice versa.
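The orientation control steps above (compute the required heading from the coordinates, pick the shorter rotation direction, and slow the spin as the target heading approaches) can be sketched as follows. It assumes image coordinates with y increasing downwards, so that `atan2(dy, dx)` gives a bearing that increases clockwise on screen, like a compass; `maxVolts` and all function names are hypothetical. Matching a camera bearing to a compass heading would also need a calibration offset, which is not shown.

```cpp
#include <cmath>

constexpr double kPi = 3.14159265358979323846;

// Bearing in degrees [0, 360) from (ax, ay) to (bx, by), measured from the
// image +x axis; with y pointing down it increases clockwise on screen.
double bearingDeg(double ax, double ay, double bx, double by) {
    const double deg = std::atan2(by - ay, bx - ax) * 180.0 / kPi;
    return deg < 0.0 ? deg + 360.0 : deg;
}

// Signed rotation from current to target heading, choosing the shorter way:
// positive = clockwise, negative = anticlockwise, result in (-180, 180].
double shortestTurn(double currentDeg, double targetDeg) {
    double d = std::fmod(targetDeg - currentDeg, 360.0);
    if (d > 180.0) d -= 360.0;
    if (d <= -180.0) d += 360.0;
    return d;
}

struct Spin { double left; double right; };

// Spin in place with opposite wheel voltages whose magnitude shrinks in
// proportion to the remaining angle, so the robot slows near the target.
Spin spinCommand(double turnDeg, double maxVolts) {
    const double v = maxVolts * std::fabs(turnDeg) / 180.0;
    // Clockwise: left wheel forward, right wheel backward.
    return turnDeg >= 0.0 ? Spin{v, -v} : Spin{-v, v};
}
```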

Implementation

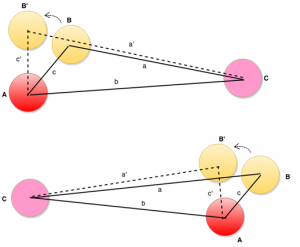

Lantern-Fish method

One of the major issues in the project is finding the direction in which each robot is facing. Because the compass is inaccurate in the presence of magnetic interference, an alternative called the lantern fish method is implemented. In this method, an additional colour plate is mounted on the robot. The image-processing program finds the centre of mass of each of the two colour plates and draws a straight line between the centres, which shows the direction in which the robot is facing. Some arithmetic then determines the direction in which the robot should move, using the distances between the centres of mass of the colour plates measured in the camera images. The figure shows how the distances are calculated and the nomenclature used. The value of c is constant, because colour plates A and B are both fixed on the same robot. The values of a and b change with the distance between the robots and with the directions in which they face. Initially the robot turns to the left of its current heading, giving new values a’ and c’. As the figures show, if c’ + a’ > c + a, the target is on the right, and the robot is programmed to turn right. If c’ + a’ < c + a, the target is on the left and the robot turns left. When a + c is no greater than b + 0.01b, the robot is facing the other robot and moves forward. The term 0.01b is the allowed error, because finding the exact orientation would be extremely difficult and time consuming.
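The lantern fish decision rule can be expressed with plain distance calculations. The sketch assumes this reading of the nomenclature: A is the front plate and B the rear plate of the turning robot, T is the centre of the target robot, a = |AT|, b = |BT| and c = |AB|. The figures are not reproduced here, so this reading is an assumption. Under it, the triangle inequality gives a + c ≥ b, with equality only when B, A and T lie on one line with A in the middle, which is why a + c ≤ 1.01b works as a test for facing the target. All names are illustrative.

```cpp
#include <cmath>

struct Point { double x; double y; };

double dist(Point p, Point q) { return std::hypot(p.x - q.x, p.y - q.y); }

// Facing test: a + c may exceed b by at most tol * b (0.01 in the project).
bool facingTarget(Point A, Point B, Point T, double tol = 0.01) {
    const double a = dist(A, T), b = dist(B, T), c = dist(A, B);
    return a + c <= b + tol * b;
}

enum class Turn { Left, Right, Forward };

// A, B: plate centres before a small trial turn to the left;
// A2, B2: plate centres after it. If a' + c' grew, the target is to the right.
Turn nextMove(Point A, Point B, Point T, Point A2, Point B2, double tol = 0.01) {
    if (facingTarget(A, B, T, tol)) return Turn::Forward;
    const double before = dist(A, T) + dist(A, B);   // a + c
    const double after = dist(A2, T) + dist(A2, B2); // a' + c'
    return after > before ? Turn::Right : Turn::Left;
}
```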

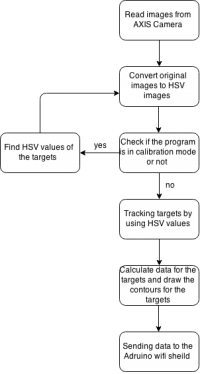

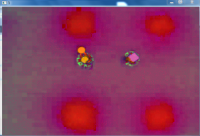

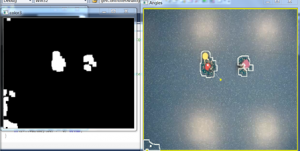

Target tracking algorithm

The target tracking algorithm finds the location of the target through image processing. It reads the images from the camera and then chooses an initial search window in the image. The images are captured in RGB, where each colour is a different mixture of red, green and blue, and digital image processing handles this mixture as numerical values. These RGB values are converted into HSV values, which describe a colour by its hue, saturation and value. HSV can be represented as a cylinder with hue as the angular dimension. Hue represents the type of colour: in the cylinder, primary red is at 0°, primary green at 120° and primary blue at 240°. Saturation is the degree of chroma, or purity, and value is the degree of brightness. The algorithm finds the HSV values of all the pixels and builds a histogram that serves as a probability distribution, so that the whole image is represented in terms of its HSV values. The HSV ranges of the targets are then initialised and the image is searched for the target colours. Finally, the centre of mass of each detected region gives the coordinates at which the corresponding robot lies.
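The histogram step can be sketched in a few lines. This standalone version works on a plain vector of pixels rather than a cv::Mat (in OpenCV itself this would typically be done with cv::calcHist) and uses OpenCV's 8-bit hue range of 0 to 179; the bin count is a hypothetical template parameter and the names are illustrative. Dividing each count by the number of pixels turns the histogram into a probability distribution.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// One HSV pixel in OpenCV's 8-bit convention (H 0..179, S and V 0..255).
struct HSV { std::uint8_t h, s, v; };

// Normalised hue histogram: bins[i] is the fraction of pixels whose hue
// falls in bin i, so the bins sum to 1 for a non-empty image.
template <std::size_t Bins>
std::array<double, Bins> hueHistogram(const std::vector<HSV>& pixels) {
    std::array<double, Bins> bins{};
    if (pixels.empty()) return bins;
    for (const HSV& p : pixels) {
        std::size_t idx = static_cast<std::size_t>(p.h) * Bins / 180;
        if (idx >= Bins) idx = Bins - 1; // guard against hue values above 179
        bins[idx] += 1.0;
    }
    for (double& b : bins) b /= static_cast<double>(pixels.size());
    return bins;
}
```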

Image processing through OpenCV

OpenCV is used to process the images from the camera. The program consists of the libraries it includes and the functions that carry out its procedures and algorithms.

Libraries

High level GUI: - The program uses OpenCV's high-level GUI module, included through the highgui.hpp header.

Imgproc: - The image processing module, included through the imgproc.hpp header, provides the image processing algorithms.

Stdafx.h: - The precompiled header, which reduces compilation time by reusing previously compiled headers.

Stdio.h: - The C standard input/output library.

IOstream.h: - Input and output functions for streams of data.

UDPcon.h: - User Datagram Protocol functions for wireless transmission.

IOmanip.h: - Parameterized stream manipulators, including those for setting and resetting format flags.

Math.h: - Numeric calculation functions.

String: - The string class, used for handling text and for string input and output.

Vector: - The vector container and its operations.

Functions

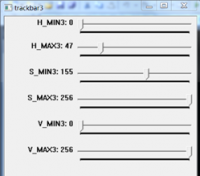

The functions used in the program are either library functions or user-defined functions. They are used for creating track bars, finding colours, drawing contours, converting RGB to HSV, finding centres of mass and so on.

CreateTrackbars: - This function creates the track bars used to set the HSV ranges of the colours to be tracked. Track bars are created for the three colours used in the image processing. Each track bar sets an HSV value within a range from 0 to 256, which covers every value (0 to 255) that an 8-bit channel of a pixel can take.

Morphops: - This function implements mathematical morphology, which processes binary images by probing them with a small shape called a structuring element. The program uses a 3px by 3px rectangular structuring element, with erodeElement used for erosion and dilateElement for dilation. Erosion shrinks each region and removes specks smaller than the structuring element; dilation grows each region, adding a strip around its boundary and filling small gaps.
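Erosion and dilation with a 3 x 3 rectangular structuring element can be sketched without OpenCV as follows. The sketch works on a binary mask stored as nested vectors and treats pixels outside the image as background for simplicity (OpenCV's default border handling differs); the names are illustrative. Running erosion and then dilation (an opening) removes isolated specks while roughly preserving larger regions.

```cpp
#include <vector>

using Mask = std::vector<std::vector<int>>; // 1 = foreground, 0 = background

// Shared 3x3 neighbourhood scan. Erosion: a pixel stays 1 only if all nine
// neighbourhood pixels are 1. Dilation: a pixel becomes 1 if any of them is 1.
// Pixels outside the image count as background (simplifying assumption).
static Mask morph3x3(const Mask& in, bool erode) {
    const int rows = static_cast<int>(in.size());
    const int cols = rows > 0 ? static_cast<int>(in[0].size()) : 0;
    Mask out(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            bool all = true, any = false;
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr, cc = c + dc;
                    const bool on = rr >= 0 && rr < rows && cc >= 0 &&
                                    cc < cols && in[rr][cc] != 0;
                    all = all && on;
                    any = any || on;
                }
            }
            out[r][c] = erode ? (all ? 1 : 0) : (any ? 1 : 0);
        }
    }
    return out;
}

Mask erode3x3(const Mask& in) { return morph3x3(in, true); }
Mask dilate3x3(const Mask& in) { return morph3x3(in, false); }
```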

Mat: - In digital image processing, images are stored as matrices of numerical values. Mat is the OpenCV class that stores this data and makes it available when the processor requires it. A Mat has two main parts: a matrix header and a pointer to the pixel values.
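The header-plus-pointer design of Mat has a practical consequence: copying a Mat copies only the header, and both copies share the same pixels (cv::Mat::clone makes a deep copy). The toy type below is a hypothetical illustration of that idea using std::shared_ptr, not OpenCV's actual implementation.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical toy matrix: a small header plus a shared pixel buffer.
struct TinyMat {
    int rows = 0, cols = 0, channels = 0;               // the "header"
    std::shared_ptr<std::vector<std::uint8_t>> data;    // shared pixel buffer

    TinyMat(int r, int c, int ch)
        : rows(r), cols(c), channels(ch),
          data(std::make_shared<std::vector<std::uint8_t>>(
              static_cast<std::size_t>(r) * c * ch, std::uint8_t{0})) {}

    // Row-major access to channel k of pixel (r, c).
    std::uint8_t& at(int r, int c, int k) {
        return (*data)[(static_cast<std::size_t>(r) * cols + c) * channels + k];
    }
};
```

Copying a `TinyMat` (for example `TinyMat b = a;`) shares the buffer, so a write through `b` is visible through `a`, mirroring cv::Mat's shallow copy.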

FindColour: - The findColour function is used during calibration to find the colours within a chosen HSV range and so set the values of the targets to be detected. The erode and dilate operations of Morphops are used to clean up the detected colour regions.

Getcolour: - The getColour function detects, in the HSV image, the pixels that match the values set during calibration, so that the object can be tracked. As in findColour, the erode and dilate operations of Morphops are used to clean up the detected colour regions.
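The text does not say which OpenCV call getColour uses to match the calibrated range; cv::inRange is the usual choice, producing 255 where a pixel's H, S and V all lie within the inclusive lower and upper bounds and 0 elsewhere. The standalone sketch below mimics that per-pixel rule on a plain vector so that it runs without OpenCV; the names are illustrative.

```cpp
#include <cstdint>
#include <vector>

struct HSV { std::uint8_t h, s, v; };

// Per-pixel rule of cv::inRange: 255 if low <= pixel <= high in every
// channel (inclusive bounds), otherwise 0.
std::vector<std::uint8_t> hsvInRange(const std::vector<HSV>& pixels,
                                     HSV low, HSV high) {
    std::vector<std::uint8_t> mask;
    mask.reserve(pixels.size());
    for (const HSV& p : pixels) {
        const bool inside = p.h >= low.h && p.h <= high.h &&
                            p.s >= low.s && p.s <= high.s &&
                            p.v >= low.v && p.v <= high.v;
        mask.push_back(static_cast<std::uint8_t>(inside ? 255 : 0));
    }
    return mask;
}
```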

Cvtcolor: - The cvtColor function converts RGB colours to HSV colours. RGB describes a colour as a mixture of red, green and blue light, as produced by cameras and displays, but HSV values are more convenient for processing images digitally. HSV is a cylindrical coordinate representation, with hue as the angular coordinate, saturation as the radial distance and value as the height along the axis. Hue (the tone) runs from 0 to 360°, while saturation (the purity) and value (the brightness) run from 0 to 1. For 8-bit images, OpenCV scales saturation and value to 0 to 255 and halves hue to fit 0 to 179.
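The conversion can be written out explicitly. The sketch below follows the RGB to HSV formulas documented for cv::cvtColor and scales the result to OpenCV's 8-bit ranges; rounding uses std::lround, so individual values may differ by one from OpenCV's own output. The function name is illustrative. In OpenCV 2.4 the actual call is cv::cvtColor(src, dst, CV_BGR2HSV), because OpenCV stores colour images in BGR order by default.

```cpp
#include <algorithm>
#include <cmath>

struct HSV8 { int h, s, v; };

// RGB (0..255 per channel) to HSV in OpenCV's 8-bit ranges:
// H in 0..179 (degrees / 2), S and V in 0..255.
HSV8 rgbToHsv8(int r, int g, int b) {
    const double R = r / 255.0, G = g / 255.0, B = b / 255.0;
    const double V = std::max({R, G, B});
    const double m = std::min({R, G, B});
    const double delta = V - m;
    const double S = (V > 0.0) ? delta / V : 0.0;
    double H = 0.0; // hue is undefined for greys; use 0
    if (delta > 0.0) {
        if (V == R)      H = 60.0 * (G - B) / delta;
        else if (V == G) H = 120.0 + 60.0 * (B - R) / delta;
        else             H = 240.0 + 60.0 * (R - G) / delta;
        if (H < 0.0) H += 360.0;
    }
    return {static_cast<int>(std::lround(H / 2.0)) % 180,
            static_cast<int>(std::lround(S * 255.0)),
            static_cast<int>(std::lround(V * 255.0))};
}
```

Pure red, green and blue map to hues 0, 60 and 120 respectively in this 8-bit scale.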

FindContours: - The findContours function finds the contours (outlines) of the regions detected in the HSV image, that is, all the areas whose HSV values fall within the set range.

DrawContours: - The drawContours function draws the boundaries of the contours found when a colour plate or other target colour is detected.

Draw1: - The draw1 function finds the size of every contour of the first colour and draws them using drawContours. It also finds the centre points, or centres of mass, of these contours, which are stored as vectors of points; the centre of mass is computed with the moments function. The draw2 and draw3 functions do the same for the second and third colours.

Threshold: - The threshold function segments the image, separating the target from the background by the difference in their intensities. A threshold value is set and each pixel value is compared with it.
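The centre-of-mass calculation behind moments can be sketched for a binary mask: m00 counts the foreground pixels, m10 and m01 sum their x and y coordinates, and the centre is (m10/m00, m01/m00). cv::moments uses the same definitions; when given a contour it computes them from the polygon outline, so its values can differ slightly from this pixel count. The names below are illustrative.

```cpp
#include <cstddef>
#include <vector>

struct Centroid { double x; double y; bool valid; };

// Centre of mass of the non-zero pixels of a binary mask indexed mask[y][x].
Centroid centreOfMass(const std::vector<std::vector<int>>& mask) {
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (std::size_t y = 0; y < mask.size(); ++y) {
        for (std::size_t x = 0; x < mask[y].size(); ++x) {
            if (mask[y][x] != 0) {
                m00 += 1.0;                     // area
                m10 += static_cast<double>(x);  // first moment in x
                m01 += static_cast<double>(y);  // first moment in y
            }
        }
    }
    if (m00 == 0.0) return {0.0, 0.0, false}; // nothing detected
    return {m10 / m00, m01 / m00, true};
}
```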

VideoCapture: - VideoCapture is the OpenCV class used to capture video from a camera, a video file or a network stream.

cv::circle(): - This function draws a circle on the target.

Programming

The program starts by establishing a connection between the camera and the computer. The camera's video stream is opened on the computer with VideoCapture, using the camera's IP address and port, which in this project were 192.168.0.2:554. The function ConfigRecieveAddr is used to obtain the IP address of the camera. The program then enters a calibration mode, in which track bars are created for selecting the targets. Each of hue, saturation and value can be set in the range 0 to 256, so that any colour can be tracked. The findColour function is called here to set the initial colour values to be detected.

Outside calibration mode, the getColour function is called to detect the targets by finding the elements that lie within the set HSV range. Once all these elements have been found, the next step is to find the boundaries of the regions they form, which is done with the findContours function. findContours treats the image as binary: zero pixels remain 0 and all other pixels are treated as 1. The threshold function is called to create the black-and-white image that highlights the contours. It does this by comparing the pixel values with a set of predefined HSV values. The contours and their centre points are then drawn with the draw functions.

Next, the line function draws lines between the centres of the three contours. The lengths of these lines give the values a, b, c, a1, b1 and c1, from which the robot decides whether to turn right or left. Each length is calculated from the point coordinates with the distance formula $D=\sqrt{dx^2+dy^2}$, where $dx = x_1 - x_2$ and $dy = y_1 - y_2$. After a connection is established between the computer and the Arduino Wi-Fi shield, the coordinates of the centre points are sent to the Arduino.
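The distance formula above, applied to the lines joining the three contour centres, can be sketched as follows. The Point struct and the function names are hypothetical. The report does not say which line corresponds to a, b or c (or a1, b1, c1), so the ordering returned here is only an assumption.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

struct Point { double x, y; };

// D = sqrt(dx^2 + dy^2), with dx = x1 - x2 and dy = y1 - y2.
double pointDistance(Point p1, Point p2) {
    const double dx = p1.x - p2.x;
    const double dy = p1.y - p2.y;
    return std::sqrt(dx * dx + dy * dy);
}

// Lengths of the three lines joining the centres of the three colour contours:
// |c0 c1|, |c1 c2| and |c2 c0|. Mapping these to a, b, c is an assumption.
std::array<double, 3> sideLengths(const std::vector<Point>& centres) {
    assert(centres.size() == 3);
    return {pointDistance(centres[0], centres[1]),
            pointDistance(centres[1], centres[2]),
            pointDistance(centres[2], centres[0])};
}
```

For centres at (0, 0), (3, 0) and (3, 4), the three lengths are 3, 4 and 5 pixels.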
The steps to calculate a target’s centre of mass are shown below.

Calculate the zero-order moment:

$$M_{00}=\sum_{x}\sum_{y} I(x,y) \tag{2.1}$$

Calculate the first-order moments:

$$M_{10}=\sum_{x}\sum_{y} x\,I(x,y) \tag{2.2}$$

$$M_{01}=\sum_{x}\sum_{y} y\,I(x,y) \tag{2.3}$$

Calculate the target’s centre of mass:

$$(X_C, Y_C)=\left(\frac{M_{10}}{M_{00}},\ \frac{M_{01}}{M_{00}}\right) \tag{2.4}$$

where $I(x,y)$ is the pixel value at point $(x,y)$.
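Equations (2.1) to (2.4) translate directly into a double loop over the image. The sketch below is a plain C++ equivalent of what cv::moments provides for this purpose; the Centroid struct and centreOfMass name are hypothetical. For a binary mask with values 0 and 255, the constant 255 cancels in the ratios, so the result is simply the mean position of the target's pixels.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

struct Centroid { double x, y; };

// Raw moments of a w x h single-channel image I(x, y), stored row by row,
// and the centre of mass (X_C, Y_C) = (M_10 / M_00, M_01 / M_00).
std::optional<Centroid> centreOfMass(const std::vector<std::uint8_t>& img, int w, int h) {
    assert(img.size() == static_cast<std::size_t>(w) * h);
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const double I = img[y * w + x];
            m00 += I;      // zero-order moment, eq. (2.1)
            m10 += x * I;  // first-order moment in x, eq. (2.2)
            m01 += y * I;  // first-order moment in y, eq. (2.3)
        }
    }
    if (m00 == 0.0) return std::nullopt;  // no target pixels: centre undefined
    return Centroid{m10 / m00, m01 / m00};  // eq. (2.4)
}
```

Returning an empty result when $M_{00}=0$ avoids dividing by zero when a colour is not visible in the frame.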

Wi-Fi communication

The LCD screen needs to be removed before the Wi-Fi shield can be connected to the robot. The ICSP pins of the shield are connected to the ICSP header of the Arduino, and the TKD pins are then connected to the Wi-Fi shield. TKD5, TKD4 and TKD3 of the Arduino are connected to digital pins 7, 9 and 10 of the shield respectively. A jumper wire connects pin 2, the reference pin, to the 3.3 V pin of the Arduino.

The Wi-Fi shield is programmed through the robot itself. The UdpSendReceiveString program is used to connect the Wi-Fi shield to the network and to send and receive strings of data. The network SSID and password are supplied as character strings, and a packet buffer is allocated in memory to hold the incoming data string. The program waits for the hardware to establish the connection using the given SSID and password, allowing 10 s for the connection to be established. Once the shield is connected, it checks whether any data is available. If so, the size of the packet is read with the Udp.parsePacket() function, and the data is read into the packet buffer. An acknowledgement is sent when the data is received. The signal strength of the network is also measured and stored.

The Arduino Wi-Fi shield was connected to a network secured with WPA or WPA2. A local area network has to be set up for the connection, and its key part is a network router. The router used in this project is a TP-Link G54. It connects all the hardware in the project, including the network camera, the computer and the robots, and acts as the “heart” of the system.
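Because UDP carries raw bytes, the computer and the robot must agree on how the centre coordinates are laid out in the packet buffer. The report does not give the project's format, so the sketch below assumes a hypothetical text layout "x1,y1;x2,y2". Both encodeCentres and decodeCentres are illustrative names. On the robot, the string would come from the packet buffer filled by Udp.read() and must be null-terminated before it is parsed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

// Hypothetical message layout "x1,y1;x2,y2": integer pixel coordinates of two
// centre points. Returns false if the text does not fit into the buffer.
bool encodeCentres(char* buf, std::size_t size, int x1, int y1, int x2, int y2) {
    assert(buf != nullptr && size > 0);
    const int n = std::snprintf(buf, size, "%d,%d;%d,%d", x1, y1, x2, y2);
    return n >= 0 && static_cast<std::size_t>(n) < size;  // false if truncated
}

// Parses the same layout on the receiving side; returns false on malformed input.
bool decodeCentres(const char* buf, int& x1, int& y1, int& x2, int& y2) {
    return std::sscanf(buf, "%d,%d;%d,%d", &x1, &y1, &x2, &y2) == 4;
}
```

A short text format such as this is easy to inspect while debugging. The size check matters because the packet buffer on the Arduino is a fixed-size array.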

Results

The compass deviation caused by environmental magnetism was found to be as large as 30°. This shows that the compass cannot be relied on under any kind of magnetic interference.

The minimum voltage needed for the wheels to move on normal polyester-coated floors is 50/255 × 5 V = 0.98 V.

For the robot to move straight when the left wheel is driven at 1.96 V (value 100), the right wheel must be driven at 94/255 × 5 V = 1.843 V.

For the robot to move straight when the left wheel is driven at 1.373 V (value 70), the right wheel must be driven at 66/255 × 5 V = 1.294 V.

The maximum error voltage found for eliminating oscillations is 15/255 × 5 V = 0.294 V.
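Each result above converts a 0 to 255 motor command into an average voltage with V = value / 255 × 5 V. The sketch below reproduces that arithmetic so the figures can be checked. Interpreting the values as 8-bit PWM duty cycles (as written with Arduino's analogWrite) and assuming a 5 V supply are assumptions; commandToVolts is a hypothetical name.

```cpp
#include <cassert>

// Average voltage for an 8-bit motor command: V = value / 255 * supply.
// Assumes a 0-255 command range and a 5 V supply by default.
double commandToVolts(int value, double supplyVolts = 5.0) {
    assert(value >= 0 && value <= 255);
    return value / 255.0 * supplyVolts;
}
```

The two straight-line settings give right/left ratios of 94/100 = 0.94 and 66/70 ≈ 0.943. This suggests a roughly constant correction factor for the right wheel over this speed range.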

Conclusion

The aims of the project were achieved. The project found a solution for designing the smart cars, and the use of a camera with image processing is proposed as its output. Both modes were completed successfully: the control mode, in which the cars move independently, and the signal relay mode, in which the cars share the task. The originally proposed Bluetooth triangulation method was replaced with the camera because measuring range with Bluetooth triangulation proved infeasible. The failure of the compass as a direction sensor under magnetic interference affected the project. However, it showed that modern devices such as gyroscopes could replace the compass. Communication was originally planned over Bluetooth but was replaced with Wi-Fi because of its higher bandwidth and lower interference.

Future Work

In future, the compass module and image processing could be replaced with gyroscopes. A gyroscope measures the rate of rotation, so the direction can be tracked even without the earth’s magnetic field and regardless of interference from other magnetic equipment. The project could also be extended to more robots and to tracking them. Further sensors could be added for better accuracy and feedback: LIDAR, which uses lasers to map the surroundings; SONAR, which uses sound waves to measure distance; RADAR; and IR sensors. The image processing could be made faster to handle vehicles moving at higher speeds. A local vision system, in which a camera is mounted on each car, could track the environment.
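Unlike a compass, a gyroscope reports how fast the robot is turning rather than which way it is facing, so the heading has to be obtained by integrating that rate over time. A minimal sketch follows, assuming a single z-axis gyroscope that reports degrees per second at a fixed sample interval. The integrateHeading name and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Heading from a z-axis gyroscope: integrate the angular rate (deg/s) over
// fixed time steps dt (s) and wrap the result to [0, 360).
// Any constant bias in the rate integrates into a growing heading error.
double integrateHeading(double startDeg, const std::vector<double>& ratesDegPerSec, double dt) {
    assert(dt > 0.0);
    double heading = startDeg;
    for (double rate : ratesDegPerSec)
        heading += rate * dt;  // rectangular (Euler) integration
    heading = std::fmod(heading, 360.0);
    if (heading < 0.0) heading += 360.0;
    return heading;
}
```

Because small rate errors accumulate, an integrated heading drifts over time. In practice it would need periodic correction, which could come from the camera positions already used in this project.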

Other applications

Apart from the smart-car system, the project can be extended to other robotic applications. A robot could be controlled remotely to reach congested areas and track other targets. The image processing technology used in the project could also be extended to applications such as traffic signals and facial recognition.

References

[1] Bradski, Gary, and Adrian Kaehler. Learning OpenCV: Computer Vision with the OpenCV Library. O'Reilly Media, Inc., 2008.

[2] Almaula, Varun, and David Cheng. "Bluetooth triangulator." Final Project, Department of Computer Science and Engineering, University of California, San Diego (2006).

[3] Stauffer, Chris, and W. Eric L. Grimson. "Adaptive background mixture models for real-time tracking." Computer Vision and Pattern Recognition, 1999. IEEE Computer Society Conference on. Vol. 2. IEEE, 1999.

[4] Evans, Brian. Beginning Arduino Programming. Apress, 2011.

[5] Zivkovic, Zoran. "Improved adaptive Gaussian mixture model for background subtraction." Pattern Recognition, 2004. ICPR 2004. Proceedings of the 17th International Conference on. Vol. 2. IEEE, 2004.

[6] Lipton, Alan J., Hironobu Fujiyoshi, and Raju S. Patil. "Moving target classification and tracking from real-time video." Applications of Computer Vision, 1998. WACV'98. Proceedings., Fourth IEEE Workshop on. IEEE, 1998.

[7] “Three axis Accelerometer” viewed on 16/05/2015 <http://basbrun.com/2011/03/12/triple-axis-accelerometer>

[8] “How self-driving cars work” viewed on 15/05/2015 <http://www.makeuseof.com/tag/how-self-driving-cars-work-the-nuts-and-bolts-behind-googles-autonomous-car-program>

[9] “AXIS P3364-VE network camera” viewed on 19/05/2015 <http://www.axis.com/lk/en/products/axis-p3364-ve>

[10] “AXIS P33 network camera series” viewed on 19/05/2015 <https://www.use-ip.co.uk/datasheets/1215/axis_p3364ve_fixed_dome_network_camera_12mm_0484001.pdf>

[11] “G54 Wireless High Power Desktop Access Point” viewed on 20/05/2015 <http://www.tp-link.us/products/details/?categoryid=245&model=TL-WA5110G#spec>

[12] Agam, Gady. "Introduction to programming with OpenCV." Online Document 27 (2006) viewed on 21/05/2015 <http://www.cs.iit.edu/~agam/cs512/lect-notes/opencv-intro/opencv-intro.html>

[13] “OpenCV” viewed on 21/05/2015 <http://opencv.org/>

[14] “Image filtering” viewed on 23/05/2015 <http://docs.opencv.org/modules/imgproc/doc/filtering.html>

[15] “Structural Analysis and Shape Descriptors” viewed on 23/05/2015 <http://docs.opencv.org/modules/imgproc/doc/structural_analysis_and_shape_descriptors.html>

[16] “Distance between two points (given their coordinates)” viewed on 24/05/2015 <http://www.mathopenref.com/coorddist.html>

[17] “Arduino robot” viewed on 25/05/2015 <http://www.arduino.cc/en/main/robot>

[18] “Arduino WiFi Shield” viewed on 26/05/2015 <http://www.arduino.cc/en/Guide/ArduinoWiFiShield>

[19] “How to connect Arduino WiFi Shield to Arduino Robot” viewed on 27/05/2015 <https://arduinorobothelp.wordpress.com/>

[20] “ARDUINO ROBOT-MAD-ROBOT” viewed on 25/05/2015 <http://littlebirdelectronics.com.au/products/arduino-robot>

[21] “Global Health Observatory (GHO) data” viewed on 17/05/2015 <http://www.who.int/gho/road_safety/mortality/en/>