Projects:2015s1-22 Automatic Sorter using Computer Vision

The aim of the project was to improve the computer vision and robot arm control to produce a robust, autonomous playing-card sorter. The project is primarily a demonstration tool that shows prospective electronic engineering students, interactively, what the technology can do.


Project information

The specific aims of this year's project are:

- To improve the software subsystem of last year's project, increasing system reliability and performance by incorporating more sophisticated image processing techniques.

- To make the playing-card image processing algorithm robust enough to overcome most physical constraints, such as constraints on illumination.

- To implement algorithms that recognise playing cards by suit, rank and colour, or by any combination of these three.

The objectives identified at the start of the project were:

- Sort a full deck of standard playing cards

- Use computer vision to differentiate between cards

- Perform the following sorts:

  - Full Sort

  - Suit Sort

  - Colour Sort

  - Value Sort

- Focus on electrical engineering, particularly image processing, and minimise mechanical requirements

The project was tackled by breaking it into four subsections:

- Computer Vision

- Robotics

- Card Sorting Algorithms

- Graphical User Interface

The system consists of four hardware components:

- Camera

- Laptop PC

- Microcontroller

- Robotic Arm

Their individual functions are detailed in the image to the side.

Project Breakdown

Image Processing

The purpose of the image processing software of this project is to distinguish between different cards.

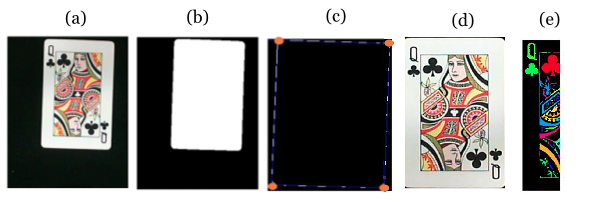

This is done in four steps:

- Find the outline of the playing card against the black background

- Crop and warp the playing card so that it becomes a perfect rectangle

- Crop the suit and value symbols from the top-left corner

- Run Optical Character Recognition software on the suit and value images
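Step 2 is a perspective (projective) transform. The four detected card corners are mapped onto the corners of an upright rectangle, and the card is then resampled. The Python sketch below illustrates this under stated assumptions and is not the project's MATLAB code:

- images are plain nested lists;
- the corners are supplied in top-left, top-right, bottom-right, bottom-left order;
- `homography`, `map_point` and `warp` are hypothetical helper names.

The sketch solves the standard eight-unknown linear system for the 3×3 homography and fills the output by nearest-neighbour sampling.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a square system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 matrix H (with H[2][2] = 1) mapping each src corner onto its dst corner."""
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def map_point(h: Matrix, p: Point) -> Point:
    """Apply H to a point in homogeneous coordinates."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def warp(image: List[List[int]], corners: Sequence[Point],
         width: int, height: int) -> List[List[int]]:
    """Resample the card into an upright width x height image (nearest neighbour)."""
    rect = [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]
    back = homography(rect, corners)  # maps output pixels back into the photo
    rows, cols = len(image), len(image[0])
    out = []
    for v in range(height):
        row = []
        for u in range(width):
            x, y = map_point(back, (u, v))
            xi, yi = round(x), round(y)
            # Pixels falling outside the photo are filled with 0 (black background).
            row.append(image[yi][xi] if 0 <= yi < rows and 0 <= xi < cols else 0)
        out.append(row)
    return out
```

In practice a library routine such as OpenCV's `getPerspectiveTransform` followed by `warpPerspective` would replace this hand-written solver. The explicit version only shows what the step computes.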

The digital image processing techniques used in these steps are:

- The Hough Transform

- Pixel Template Matching

- Adaptive Image Thresholding

- Edge Detection

- Blob Detection
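Two of these techniques, adaptive thresholding and pixel template matching, can be chained to identify a cropped corner symbol. The Python sketch below works in two stages:

- It thresholds each pixel against the mean of its local window, so the threshold follows uneven illumination.
- It picks the stored template with the highest fraction of agreeing pixels.

The window size, the offset `c`, the agreement score and all function names are illustrative assumptions. The crop is also assumed to have been resized to the templates' dimensions already.

```python
from typing import Dict, List

Image = List[List[int]]


def adaptive_threshold(grey: Image, window: int = 3, c: int = 5) -> Image:
    """Binarise: 1 (ink) where a pixel is darker than its local mean minus c, else 0."""
    rows, cols = len(grey), len(grey[0])
    half = window // 2
    out = []
    for r in range(rows):
        row = []
        for col in range(cols):
            # Local neighbourhood, clipped at the image borders.
            patch = [grey[i][j]
                     for i in range(max(0, r - half), min(rows, r + half + 1))
                     for j in range(max(0, col - half), min(cols, col + half + 1))]
            mean = sum(patch) / len(patch)
            row.append(1 if grey[r][col] < mean - c else 0)
        out.append(row)
    return out


def match_score(binary: Image, template: Image) -> float:
    """Fraction of pixels on which two equally sized binary images agree."""
    total = agree = 0
    for row_a, row_b in zip(binary, template):
        for a, b in zip(row_a, row_b):
            total += 1
            agree += int(a == b)
    return agree / total


def classify(binary: Image, templates: Dict[str, Image]) -> str:
    """Label of the template that best matches the binarised crop."""
    return max(templates, key=lambda label: match_score(binary, templates[label]))
```

A local-mean threshold can miss the interior of large, uniformly dark regions. The thin strokes of rank and suit symbols are a good fit for it.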


Results

The table below shows the results of testing carried out on the 24th of October 2014. Both a scripted batch test and tests of the system as a whole were run.

| Run Name | Cards Tested | Cards Correct |
|---|---|---|
| Batch Test | 52 | 52 |
| System Test 1 | 52 | 49 |
| System Test 2 | 52 | 52 |
| System Test 3 | 52 | 50 |
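The accuracy of each run follows directly from the table. A short, illustrative Python calculation using those figures:

```python
# (cards tested, cards correct) for each run, copied from the results table.
runs = {
    "Batch Test": (52, 52),
    "System Test 1": (52, 49),
    "System Test 2": (52, 52),
    "System Test 3": (52, 50),
}

# Percentage of cards handled correctly in each run.
accuracy = {name: 100 * correct / tested for name, (tested, correct) in runs.items()}
for name, percent in accuracy.items():
    print(f"{name}: {percent:.1f}%")

# Pool the three whole-system runs.
system = [counts for name, counts in runs.items() if name.startswith("System")]
overall = 100 * sum(correct for _, correct in system) / sum(tested for tested, _ in system)
print(f"All system tests: {overall:.1f}%")
```

This gives 100.0%, 94.2%, 100.0% and 96.2% for the four runs. Across the three system tests it gives 96.8% (151 of 156 cards).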

Robotics and Kinematics

Arduino Program

- Connected to MATLAB via USB

- Receives the new angles for all servos from MATLAB

- Signals MATLAB when the robotic arm has finished moving
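The exchange described above is a simple request/acknowledge protocol, but the page does not give the message format. The Python sketch below therefore assumes a hypothetical one:

- the host sends all servo angles as one comma-separated, newline-terminated line;
- the microcontroller replies with a `DONE` line once the arm has stopped.

A real port, such as a pySerial `Serial` object opened with a timeout, offers the same `write` and `readline` methods as the in-memory stand-in used here.

```python
import io
from typing import BinaryIO, List, Sequence


def encode_angles(angles: Sequence[int]) -> bytes:
    """Hypothetical command: servo angles in degrees, comma-separated, newline-terminated."""
    # 0..180 degrees is an assumed range for standard hobby servos.
    if any(not 0 <= a <= 180 for a in angles):
        raise ValueError("servo angles must lie between 0 and 180 degrees")
    return (",".join(str(a) for a in angles) + "\n").encode("ascii")


def decode_angles(line: bytes) -> List[int]:
    """What the microcontroller side would do with one received command line."""
    return [int(field) for field in line.decode("ascii").strip().split(",")]


def wait_until_done(port: BinaryIO, max_lines: int = 100) -> bool:
    """Read replies until the hypothetical DONE acknowledgement arrives."""
    for _ in range(max_lines):
        reply = port.readline()
        if not reply:  # empty read: timeout or closed link
            return False
        if reply.strip() == b"DONE":
            return True
    return False


if __name__ == "__main__":
    link = io.BytesIO(b"MOVING\nDONE\n")  # in-memory stand-in for the USB serial link
    command = encode_angles([90, 45, 120, 60, 10])
    print(command, decode_angles(command), wait_until_done(link))
```

Waiting for an explicit acknowledgement keeps the host from sending the next move, or capturing the next image, while the arm is still in motion.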

MATLAB Program

- Decides which set of movements to use, depending on where a card is picked up from and where it is placed

- Uses inverse kinematics to determine the angles of the robotic arm from where the card is to be placed and how high the stack is

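- Forward kinematic method:

$$x = l_1\cos\theta_1 + l_2\cos(\theta_1 - \theta_2) + l_3\cos(\theta_1 - \theta_2 - \theta_3)$$

$$y = l_1\sin\theta_1 + l_2\sin(\theta_1 - \theta_2) + l_3\sin(\theta_1 - \theta_2 - \theta_3)$$

where $l_1$, $l_2$, $l_3$ are the link lengths and $\theta_1$, $\theta_2$, $\theta_3$ are the joint angles of the arm.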

- The inverse kinematic method uses the geometry of the arm to find all of the joint angles described in the diagram to the right

- Calibrations are applied to correct the inverse kinematic solution

- Additional movements ensure that the robotic arm does not bump into anything

- Additional movements ensure that the robotic arm does not pick up two cards held together by the electrostatic force between them
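The forward kinematic equations above translate directly into code. For a three-link planar arm, the inverse problem has a closed-form geometric solution once the orientation of the last link is fixed. The Python sketch below rests on these assumptions:

- It follows the sign convention of the equations above, with angles in radians.
- The caller chooses the absolute angle φ = θ1 − θ2 − θ3 of the last link, for example pointing straight down when picking up a card.
- The link lengths in the example are placeholders, not measurements of the AL5D.
- The project's calibration corrections are not modelled.

```python
import math
from typing import Tuple


def forward(l1: float, l2: float, l3: float,
            t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """Tip position from joint angles (radians), same sign convention as above."""
    x = l1 * math.cos(t1) + l2 * math.cos(t1 - t2) + l3 * math.cos(t1 - t2 - t3)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 - t2) + l3 * math.sin(t1 - t2 - t3)
    return x, y


def inverse(l1: float, l2: float, l3: float, x: float, y: float,
            phi: float, elbow_up: bool = True) -> Tuple[float, float, float]:
    """Joint angles (t1, t2, t3) putting the tip at (x, y) with the last link
    at absolute angle phi = t1 - t2 - t3."""
    # Wrist position: step back from the tip along the last link.
    xw = x - l3 * math.cos(phi)
    yw = y - l3 * math.sin(phi)
    # Law of cosines for the shoulder-elbow-wrist triangle.
    d = (xw * xw + yw * yw - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(d) > 1 + 1e-9:
        raise ValueError("target out of reach")
    d = max(-1.0, min(1.0, d))  # absorb rounding error at full extension
    q2 = math.acos(d)  # angle of link 2 relative to link 1
    if elbow_up:
        q2 = -q2  # elbow above the shoulder-wrist line (for targets with x > 0)
    t1 = math.atan2(yw, xw) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    t2 = -q2  # the equations use t1 - t2 as the absolute angle of link 2
    t3 = t1 - t2 - phi
    return t1, t2, t3


if __name__ == "__main__":
    l1, l2, l3 = 150.0, 190.0, 90.0  # placeholder link lengths in mm
    angles = inverse(l1, l2, l3, 200.0, 50.0, -math.pi / 2)  # gripper pointing down
    print([round(math.degrees(a), 1) for a in angles])
    print(forward(l1, l2, l3, *angles))  # approximately (200.0, 50.0)
```

Feeding the inverse solution back through `forward` is a cheap self-check. Any remaining error on the real arm then comes from the hardware, which is what calibration has to correct.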

Card Sorting Algorithms

The cards are sorted using one of the following methods, selected through the GUI:

- Separate Colours

- Separate Suits

- Separate Values

- Select-A-Card (pick cards you want the robot to find)

- Full Sort (restores the order of a brand-new deck)
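The first three options partition the deck by a single attribute, and Select-A-Card is a filter. The Python sketch below assumes a hypothetical `Card` record produced by the recognition step. Its `value` field uses 1 for Ace through 13 for King, and its `suit` field is a single letter.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, NamedTuple


class Card(NamedTuple):
    value: int  # assumed encoding: 1 = Ace ... 11 = Jack, 12 = Queen, 13 = King
    suit: str   # assumed encoding: "S", "H", "D" or "C"


def colour(card: Card) -> str:
    return "red" if card.suit in ("H", "D") else "black"


def partition(cards: Iterable[Card],
              key: Callable[[Card], Hashable]) -> Dict[Hashable, List[Card]]:
    """One output pile per key value; each pile keeps the order cards were scanned in."""
    piles: Dict[Hashable, List[Card]] = defaultdict(list)
    for card in cards:
        piles[key(card)].append(card)
    return dict(piles)


def separate_colours(cards: Iterable[Card]) -> Dict[Hashable, List[Card]]:
    return partition(cards, colour)


def separate_suits(cards: Iterable[Card]) -> Dict[Hashable, List[Card]]:
    return partition(cards, lambda card: card.suit)


def separate_values(cards: Iterable[Card]) -> Dict[Hashable, List[Card]]:
    return partition(cards, lambda card: card.value)


def select_cards(cards: Iterable[Card], wanted: Iterable[Card]) -> List[Card]:
    """Select-A-Card: keep only the cards the user asked for, in scan order."""
    targets = set(wanted)
    return [card for card in cards if card in targets]
```

Only the key function changes between the three separating sorts. The arm's pick-and-place logic can therefore stay the same for each of them.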

The full sort algorithm combines a bucket (postman) sort with a selection sort and runs in three stages:

- Cards are partitioned into buckets according to their value, as shown in the image to the right

- Each bucket is emptied out, in turn, onto the board

- Cards are selected from the emptied buckets in order and placed onto sorted stacks according to their suit
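The three stages can be simulated in software to check the card order they produce. The Python sketch below rests on these assumptions:

- cards are `(value, suit)` tuples with Ace as 1;
- the target is one ascending stack per suit, filled in a hypothetical suit order;
- the exact new-deck order used by the project may differ.

The sketch models only the order in which cards move, not board positions or arm movements.

```python
from typing import Dict, List, Tuple

Card = Tuple[int, str]  # (value 1..13, suit letter): an assumed representation
SUIT_ORDER = "SHDC"     # hypothetical order in which the suit stacks are filled


def full_sort(deck: List[Card]) -> Dict[str, List[Card]]:
    """Simulate the three-stage full sort; returns one bottom-to-top stack per suit."""
    # Stage 1: bucket (postman) pass, one bucket per value, filled as cards are scanned.
    buckets: Dict[int, List[Card]] = {value: [] for value in range(1, 14)}
    for card in deck:
        buckets[card[0]].append(card)

    stacks: Dict[str, List[Card]] = {suit: [] for suit in SUIT_ORDER}
    for value in range(1, 14):
        # Stage 2: empty the next bucket, in ascending value order, onto the board.
        board = list(buckets[value])
        # Stage 3: selection pass, picking each suit's card off the board in turn
        # and placing it on that suit's stack, so every stack grows Ace to King.
        for suit in SUIT_ORDER:
            for card in [c for c in board if c[1] == suit]:
                board.remove(card)
                stacks[suit].append(card)
    return stacks
```

A full deck has only four cards per bucket, so the selection pass over the board is cheap. Most of the cost lies in the physical moves rather than the comparisons.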

Graphical User Interface (GUI)

The GUI was implemented to make the project more interactive. It shows the viewer the image processing as it happens, with live snapshots of the images alongside the computer vision system's live decision on the card's value. In addition, a table displays the entire data structure of cards that have been scanned and sorted. The user can therefore not only track the sorting process but also ‘see’ what lies beneath the top card of any stack.

Project Significance

This project acts as a proof of concept for combining computer vision and robotics. It suggests that, with more time and more advanced hardware, the combination of the two could produce systems with great potential. Industries that could benefit from such systems include manufacturing, the medical sciences, the military and artificial intelligence, among many others. The project also serves as a demonstration to attract future engineering students and to show off the possibilities of electrical engineering.

Team

Group members

- Miss Yue Zhao

- Mr Sijie Niu

- Mr Jiahui Tang

Supervisors

- Dr Brian Ng

- Dr Danny Gibbins

Team Member Responsibilities

The project responsibilities are allocated as follows:

- Miss Yue Zhao - Image Processing (Recognition) / Robotic Arm / Sorting Algorithms

- Mr Sijie Niu - Image Processing (Recognition) / Image Processing (Extraction)

- Mr Jiahui Tang - Image Processing (Recognition) / GUI

Resources

- Bench 11 in Projects Lab

- Lynxmotion AL5D Robotic Arm

- Arduino Botboarduino Microcontroller

- Microsoft Lifecam Camera

- MATLAB

- Laptop PC