Projects:2016s2-246 Feral Cat Detector

Introduction

Group members

Bolun Huang & Yan Chen

Supervisors

Dr. Danny Gibbins & Dr. Said Al-Sarawi

Background

In Australia, significant numbers of native wildlife are killed each year by feral cats and foxes. As part of their control and monitoring, field researchers and park managers are interested in low-cost automated sensor systems that could be placed in the field to detect the presence of feral cats and possibly even trigger control measures. The aim of this project is to examine a range of image and signal processing techniques that could be used to reliably detect the presence of a nearby feral cat or fox and distinguish it from native animals such as wallabies and wombats. The possible range of sensors that might be used to achieve this includes (but is not limited to) infra-red imaging cameras, ultrasonic detectors, and imaging range sensors (akin to, say, an Xbox Kinect). Both the sensors and the processing unit (say, a Raspberry Pi or an A20-based mini board) would need to be low cost and, potentially at some point in the future, able to operate in the field for days at a time. The two students are required to develop both the processing techniques and a simple hardware implementation that demonstrates their solution.

Approach

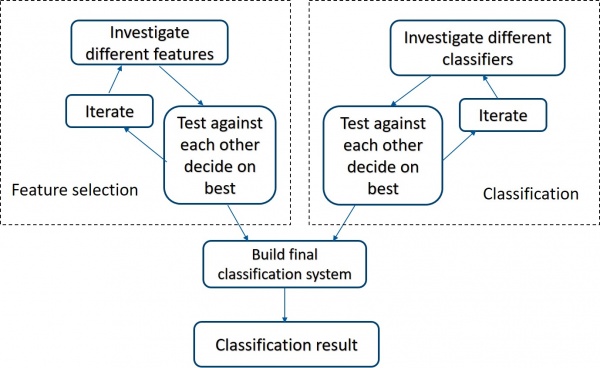

Building on last year's project by Richard & Yang, we built an alternative classification system that consists of two parts: feature selection and classification. Instead of a single feature, we use feature combinations, which have been shown to improve classification performance. We also tested several classifiers and applied the best-performing one in our system. For feature selection, we chose five base features: SIFT, PHOW, PHOG, LBP and SSIM. We first compared the performance of feature combinations against single features to determine which approach is better. We then tested all combinations of the five base features to find the one that achieves the highest accuracy. For classification, we first verified that the AdaBoost framework can improve a classifier's performance, and then identified the best of four classifiers: SVM, MKL-SVM, softmax and SVM+AdaBoost.

Technical details

Feature Selection

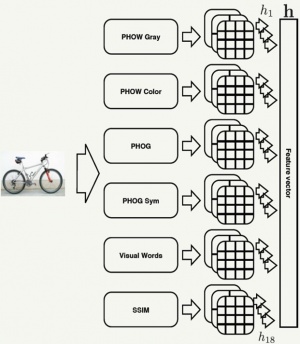

A suggestion from the previous project was that the more features are used to describe visual objects, the more accurate the classification result. We therefore selected five base features: SIFT, PHOG, PHOW, SSIM and LBP. These features describe an image from different aspects. SIFT is a shape descriptor, so it cannot capture some attributes of visual entities, such as texture, appearance and color. LBP performs well at capturing an object's texture. PHOW provides more information about position within the image. PHOG captures features at different scales. SSIM finds the same regions of interest across many images, so it helps the system find similar objects in different images.

After selecting the base features, we tested the performance of the single features. The next step was to combine single features into feature combinations and to test all of the combinations. To combine features, we build the histograms of the features being used, concatenate them into a larger histogram, and convert this histogram into a vector by quantization.
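The combination step described above can be sketched as follows. This is a minimal illustration, not the project's implementation: the function names, the toy histogram values and the L1 normalisation applied before concatenation are all assumptions made for the example, and the descriptor extraction itself is left out.

```python
from itertools import combinations

BASE_FEATURES = ["SIFT", "PHOW", "PHOG", "LBP", "SSIM"]


def all_feature_combinations(features):
    """Return every non-empty subset of the base features, smallest first."""
    combos = []
    for size in range(1, len(features) + 1):
        combos.extend(combinations(features, size))
    return combos


def l1_normalise(hist):
    """Scale a histogram so its bins sum to 1 (assumed step, see lead-in)."""
    total = sum(hist)
    return [v / total for v in hist] if total > 0 else list(hist)


def concatenate_histograms(histograms, combo):
    """Join the normalised histograms of the chosen features into one vector."""
    vector = []
    for name in combo:
        vector.extend(l1_normalise(histograms[name]))
    return vector


# Hypothetical per-image histograms (toy values, not project data).
toy_histograms = {
    "SIFT": [2, 1, 1],
    "PHOW": [0, 4],
    "PHOG": [1, 1, 1, 1],
    "LBP": [3, 1],
    "SSIM": [5],
}

combos = all_feature_combinations(BASE_FEATURES)
vector = concatenate_histograms(toy_histograms, ("SIFT", "LBP"))
print(len(combos), vector)
```

With five base features there are 2^5 - 1 = 31 non-empty combinations, including the five single features, so an exhaustive test of every combination remains cheap to enumerate.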

Classification

We implemented a new classifier, Multiple Kernel Learning SVM (MKL-SVM). It has been shown in a number of studies to be a useful tool for image classification and object recognition, where every image is represented by multiple sets of features and MKL-SVM is applied to combine the different feature sets.

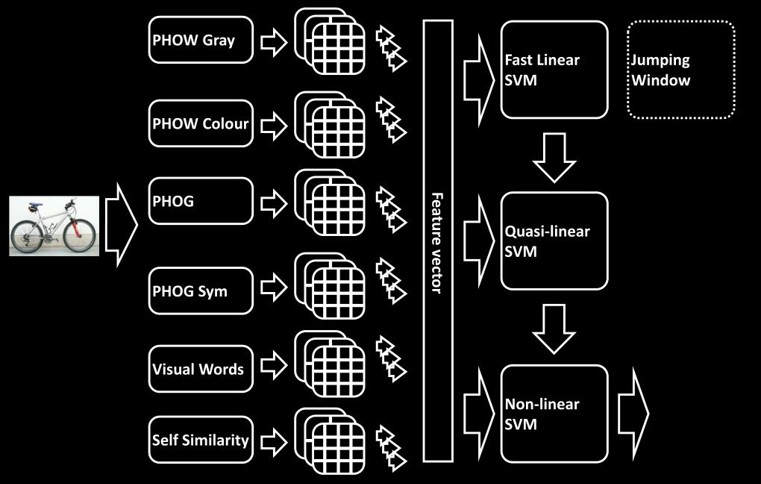

MKL-SVM is a cascaded SVM that consists of three internal SVM classifiers: a fast linear SVM, a quasi-linear SVM and a non-linear SVM. Each classifier has its own kernel. The task of MKL-SVM is to compute the kernel matrices of the training and test images. Even though histograms can represent the images, it is still impossible to build a linear classifier for every class. Thus, we use a feature map in which the regions of interest (features) are linearly separable to help us find the optimal kernel.
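To make the idea of kernel matrices over several feature sets concrete, the sketch below computes one kernel matrix per feature and merges them with a weighted sum. It rests on assumptions that are not taken from the project: the additive chi-squared kernel is used as an example kernel for histograms, the weights are fixed by hand (a real MKL method would learn them), and the histogram values are toy data.

```python
def chi2_kernel(x, y):
    """Additive chi-squared kernel between two non-negative histograms."""
    return sum(2.0 * a * b / (a + b) for a, b in zip(x, y) if a + b > 0)


def kernel_matrix(rows, cols):
    """Kernel value for every (row image, column image) pair."""
    return [[chi2_kernel(r, c) for c in cols] for r in rows]


def combine_kernels(matrices, weights):
    """Weighted sum of same-shaped kernel matrices, one per feature."""
    n_rows, n_cols = len(matrices[0]), len(matrices[0][0])
    return [
        [sum(w * m[i][j] for m, w in zip(matrices, weights)) for j in range(n_cols)]
        for i in range(n_rows)
    ]


# Hypothetical L1-normalised histograms for two features (toy data).
train = {
    "LBP": [[0.5, 0.5], [1.0, 0.0]],
    "PHOG": [[0.25, 0.75], [0.75, 0.25]],
}
test = {
    "LBP": [[0.5, 0.5]],
    "PHOG": [[0.25, 0.75]],
}
weights = {"LBP": 0.5, "PHOG": 0.5}  # assumed fixed weights, not learned

names = list(train)
w = [weights[n] for n in names]
# Train-vs-train matrix is used for fitting, test-vs-train for prediction.
K_train = combine_kernels([kernel_matrix(train[n], train[n]) for n in names], w)
K_test = combine_kernels([kernel_matrix(test[n], train[n]) for n in names], w)
print(K_train)
print(K_test)
```

Matrices of this form can be passed to any SVM implementation that accepts a precomputed kernel. Because the histograms are L1-normalised, each image has a self-similarity of 1 under this kernel, which keeps the per-feature matrices on a comparable scale before they are summed.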