Projects:2017s1-121 Learning Procedural Knowledge using Random Forests

Contents

- 1 Project Team

- 2 Abstract

- 3 Introduction & Motivation

- 4 Objectives

- 5 Background

- 6 Project Method

- 7 Project Results

- 8 Discussion

- 9 Conclusion

- 10 Future Project Recommendations

- 11 Reference

Project Team

Students: Woong-Ji Choi and Yiming Shitao

Supervisor: Dr Braden Phillips and Xin Yuan

Abstract

This project aims to produce artificially intelligent(AI) programs, or agents, that are automatically generated using a learning process. The agents are written in a production rule language and are created by inferring decision trees. A decision tree describes a series of conditions that link observed variables to a conclusion. Decision trees can be generated automatically (or inferred) from training data and random forests of inferred trees have often proven to be very effective classifiers. Although it is less common, decision trees can also be used for AI control of agents. In effect, the agent's behaviour is learned by the system rather than programmed in to it. As outcome of the project, it was possible to produce AI agents by using random forest for simple games such as Tic-Tac-toe and Connect 4. However, the behaviour of the AI agents depend strongly on the quality of training data and gathering these data is a time-consuming process. Also, the AI agent was only able to make decisions based on current environment state and can not foresee the future outcome. Hence, it was concluded that using random forest alone is not an optimal way of developing more complex AI agents. As a solution, it was suggested that a further research needs to be carried out to overcome the limitations of random forest by combining it with reinforcement learning.

Introduction & Motivation

In current technology, a computer requires a set of specific rules or an algorithm that pre- defines the process to execute any given task [1]. Therefore, successfully completing a task relies on the presence of a precise and extensive set of instructions. Existing computer systems lack the ability to: execute tasks through examples and previously executed tasks; observe and imitate experts to acquire new abilities; or improve on errors previously made [1] [2]. As computing tasks continue to become more complex, manually defining and implementing these rules is challenging and time consuming, hence demanding extra time and expertise from programmers. Machine learning methods have the potential to overcome these limitations [1] [3]. Machine learning involves the learning process of algorithms which can automatically be generated from studying data, learning from examples (models) and a process of developing computer systems or programs which automatically improve with experience [4]. In recent years there has been great progress in the use of machine learning for classification tasks [5] [3]. Using machine learning to generate agents is also possible and is beginning to emerge as an important research topic. Significant examples from recent years include developing new reinforcement learning algorithm for robot soccer [6] and applying machine learning strategy for controlling mobile robots [7] . Similar to computer programs and algorithms, developing an artificial intelligent (AI) agent is also usually rule-based, meaning it requires programmers to define a set of rules in order to perform a task. Additionally, like defining algorithms, developing AI agents are a complex and time-consuming process as its reasoning and decision-making abilities must mimic or resemble those of a human [8]. Utilising machine learning to develop AI agents can enhance fast development, reduce the workload of the developer but most importantly, results in more versatile AI agents through their ability to learn [1].

Objectives

The purpose of this project is to produce AI agents using random forests, a well-known machine learning method. The first objective is to produce a training dataset which is used as an input for random forests. The next objective is to Convert the production rules into a functional agent in Java language and develop a program to demonstrate the games using the AI agent. Here, another goal is to investigate the effects of different training data size has on the production rules, hence, the performance of AI agent. Finally, the last objective is to achieve expert behaviour of the agents.

Background

Machine Learning

Machine learning is referred to as the autonomous learning ability of a computer through series of models [8]. There are two types of machine learning: supervised and unsupervised. Unsupervised learning uses a set of data without any class information and reveals patterns or features hidden within the data [9]. On the other hand, supervised learning uses classified training data, which contains an input and a desired output to learn a function and predict a class for any valid data [9]. Supervised learning usually involves classification tasks. Decision trees and random forests are well-known examples of supervised learning methods [9].

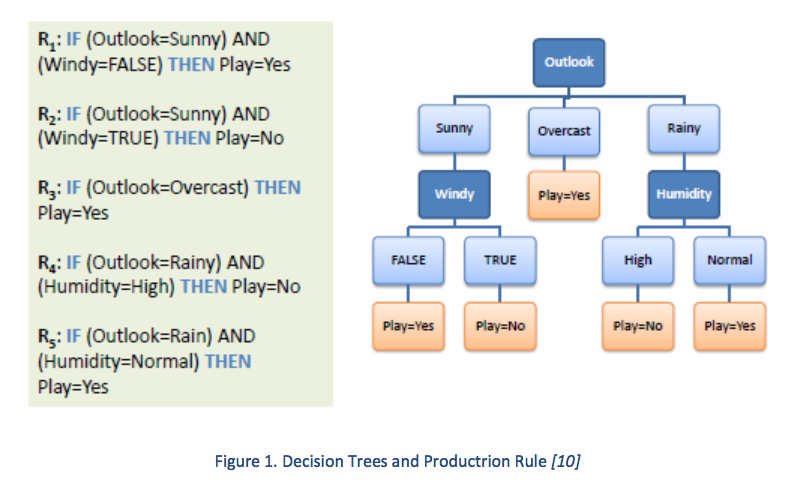

Decision Trees

A decision tree is supervised logic based machine learning method ultimately used to classify data, through categorizing information depending on values or features [3]. Decision tree contains two types of decision nodes: root nodes (also known as decision nodes); and leaf nodes [10]. Figure 1 represents an example of a decision tree. The node at the upmost part of the tree is referred to as the Root Node where the classification initiates. From the decision node are multiple branches, which then lead to leaf nodes. Leaf nodes signify a result or classification. The set of rules that results a in classification is called the production rules. The production rules can easily be defined by translating each path from a leaf node to the root node of a decision tree into the form of “if-then” statement (Figure 1).

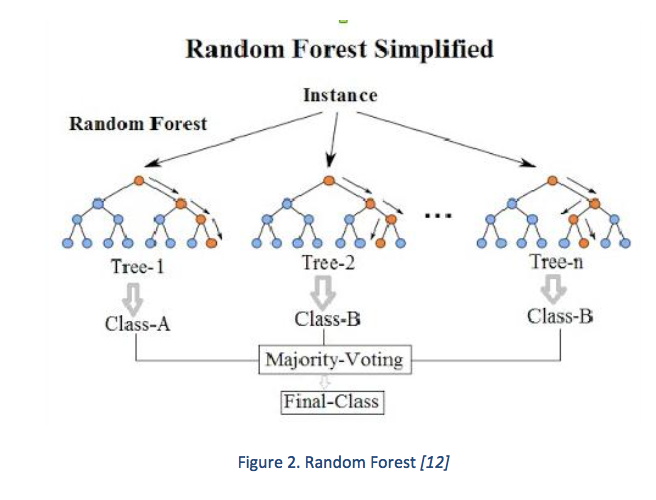

Random Forest

Random forest is a machine learning method used for classification that consists of multiple decision trees. A random selection of data (or variables) is used to generate the decision trees. This results in an ensemble of classifiers [11]. When presented with data to classify, the results from the decision trees may differ. The final classification for the input data is determined through casting a majority vote. The most popular classification among the various decision trees is output as the final result. The process is illustrated in Figure 2 [11] [12].

To achieve correct classification, it is crucial that the set of data chosen to generate the random forests [CHECK THIS] contains important features [13]. However, this is not always the case as the dataset is randomly selected and may contain impurities. Random forest provides a process called “feature selection” which involves developing a model that only includes the most important feature from a random dataset [11]. Therefore, random forest has advantages on minimising the generalization error and does not overfit (too many features effecting the overall accuracy in prediction) [13]. Other advantages of random forests include the effectiveness in computing and short amount of time required to train which makes random forest a good classifier [13].

C5.0

C5.0 is a software platform used as a data mining tool which involves a process of evaluating patterns from a set of data [14]. The evaluated patterns are categorised and constructed into classifiers [14]. C5.0 uses the classification techniques discussed in 2.2 and 2.3. A random set of input data produces classifiers in the form of decision trees and by majority voting the final output classification is generated. As a result, the set of rules (production rules) can be found automatically which describe the classifier [14].

Reinforcement Learning

RL is a type of machine learning which its agents learn the desired behaviour through interacting with the environment [6]. The objective of RL is to obtain the best action based on the current state [7]. The RL agents learns the behaviour through the process of rewarding/penalising the actions it has taken [7] and the RL algorithm aims to select actions that maximizes the expected cumulative rewards [6].

Project Method

1. Developing Training Dataset

Tic-Tac-Toe Training Dataset

The steps taken to generate the training dataset are:

1) Play the game of Tic-Tac-Toe and record current state of the board in a line followed by a number that represents the position of the board where next move will be made. Starting from the left, each letter corresponds to state of the board position 1 to 9 (e.g. N,N,N,N,N,N,N,N,N will represent the initial state of the board since no move is made by players).

2) Repeat step 1) until the game is over.

3) Include recorded results in the data file.

4) Repeat step 1) to 3) for each game played.

5) To investigate the effect data size has on the agent’s behaviour, Record 5,10,15 and 20 games of data separately to form training datasets. Each training dataset will be used to generate production rules for the AI agent in later stage.

Connect 4 Training Dataset

following steps are taken to generate the training dataset.

1) Play the game of Connect 4 and record current state of the board in a line followed by a number that represents the column of the board where next move will be made. Starting from the left, each letter corresponds to state of the board column number 1 to 7 (e.g. N,N,N,N,N,N,N will represent the initial state of the bottom row since no move is made by players). However, there are six rows in the board therefore, total of forty-two letters are required to display the board state.

2) Repeat step 1) until the game is over.

3) Include recorded results in the data file.

4) Repeat step 1) to 3) for each game played.

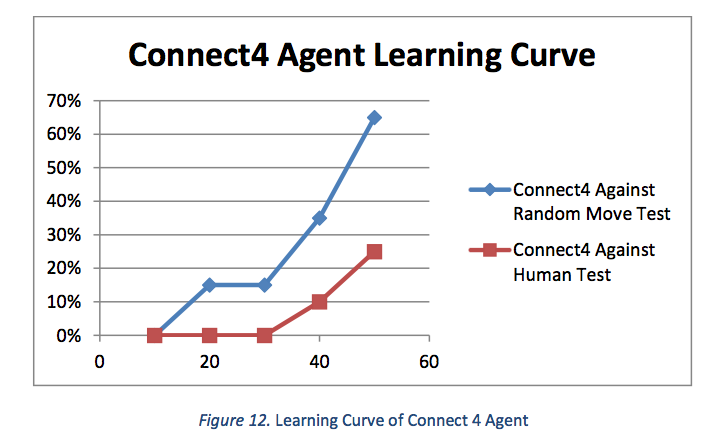

5) To investigate the effect data size has on the agent’s behaviour, Record 10,20,30,40 and 50 games of data separately to form training datasets. Each training dataset will be used to generate production rules for the AI agent in later stage.

2. Converting Decision Trees to Java Agent

Tic-Tac-Toe Agent

The steps involved in converting production rules to Tic-Tac-Toe agent in Java are:

1) Open C5.0 software and locate the training dataset from Section 4.1.1.

2) Click on “Construct Classifier” button in the toolbar. This shows the classifier construction options. Construct classifier with default setting and click OK. The resulting decision trees are displayed in a new window with details.

3) Copy and save the decision trees.

4) Repeat step 1) to 3) with different sizes of each training datasets.

5) Translate each set of production rules from resulting decision trees into Java language. This is essentially the agent for the game for each different sizes of training dataset.

Connect 4 Agent

The steps involved in converting production rules to Connect 4 agent in Java are:

1) Open C5.0 software and locate the training dataset from Section 4.1.2.

2) Click on “Construct Classifier” button in the toolbar. This shows the classifier construction options. Construct classifier with default setting and click OK. The resulting decision trees are displayed in a new window with details.

3) Copy and save the decision trees.

4) Repeat step 1) to 3) with different sizes of each training datasets.

5) Translate each set of production rules from resulting decision trees into Java language. This is essentially the agent for the game for each different sizes of training dataset.

3. Simulating & Testing the Performance of Agent

Java Programs for Game Environment

To simulate and test the game of Tic-Tac-Toe and Connect 4, gaming environment is required for both games in Java. The program developing processes are:

1) Write a program for the agent which will be used in a main program.

2) Write a program to construct the board for the game.

3) Develop a function to display and update the board state.

4) Develop a function which takes an input from user.

5) Write a main program that uses above functions and programs. This main program also implements the rules of the games.

Testing the Performance of Agents

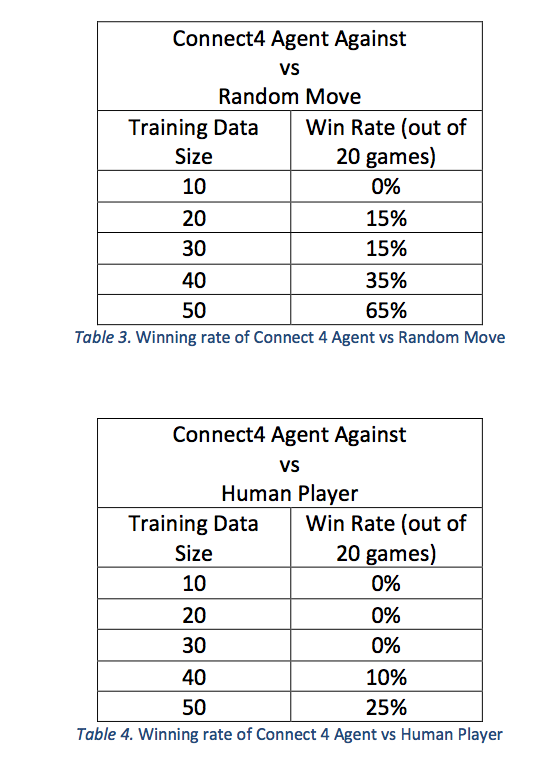

The performance of the agents will be recorded by simulating the game. The desired performance of the agent is to either win or at least, draw the game. The testing procedures are as follows:

1) Use the programs and agent developed in previous section to play both games and record the results for twenty games.

2) Repeat step 1) with each agent with different training dataset size.

3) Calculate the winning rate for each agent.

Improving Performance of Agent

Tests are performed to discover undesired behaviour of the agent. These errors are corrected by including new training data for particular error cases into existing training dataset. The updated training dataset will undergo the agent development cycle depicted in Figure 4 and as a result, the performance of the agent will be improved.

The steps for improving performance of agent are:

1) Simulate both games and test the performance of each agent.

2) When undesired behaviour of the agents occurs, record the conditions (e.g. the state of the board and next move made).

3) Include a new training case into existing training dataset. Repeat step 1) to 3) to improve the performance.

Project Results

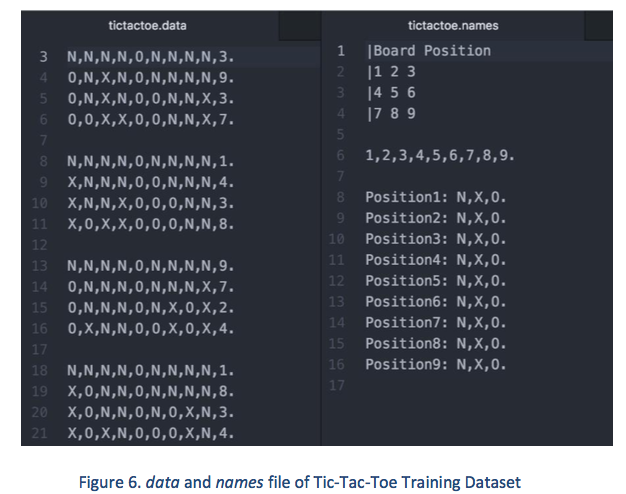

Training Dataset for Tic-Tac-Toe

Figure 6. represents an example of the training dataset produced for the game of Tic-Tac- Toe by following the procedures in Section 4.1.1. The largest training dataset contains twenty games of training data. Both data and names files are successfully defined and each training data cases are separated by space between them.

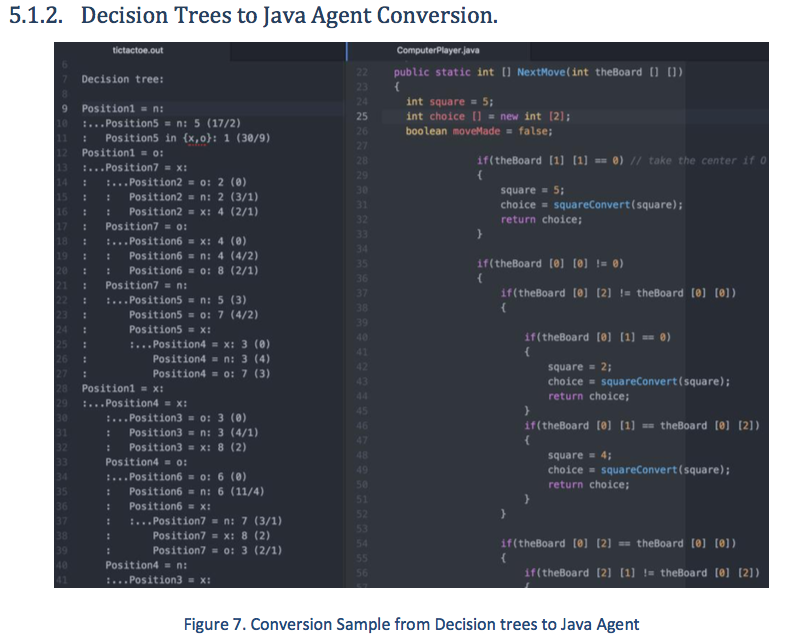

The left side of Figure 7 Is the production rules from resulting decision tree and the right is the direct translation of the production rules in Java language. Essentially, the production rules are if-then statements. Therefore, the program written in Java directly uses the conditional statements (if statements) to define the behaviour of the agent.

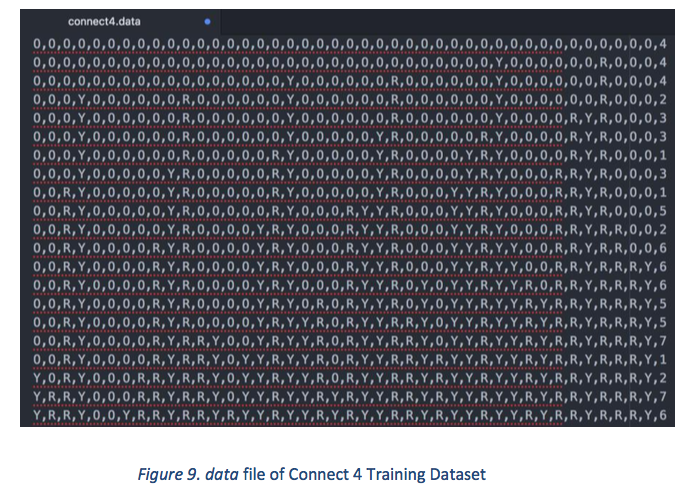

Training Dataset for Connect 4

Figure 9 and 10 represents the examples of the training dataset produced for the game of Connect 4 by following the steps in Section 4.1.2. The largest training dataset contains fifty games of training data. Both data and names files are successfully defined and each training data cases are separated by space between them.

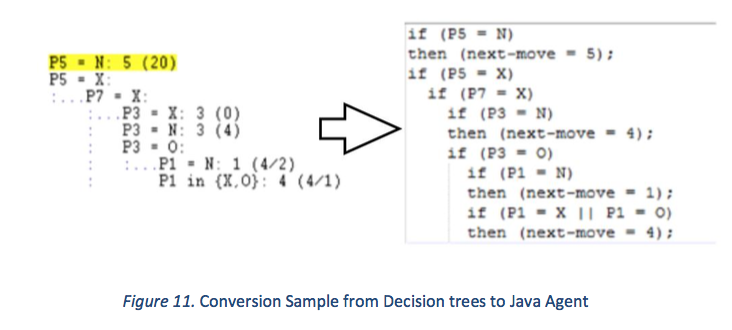

The results for this section is identical to the one in Section 5.1.1. The left side of Figure 11 Is the production rules from resulting decision tree and the right is the direct translation of the production rules in Java language. Essentially, the production rules are if-then statements. Therefore, the program written in Java directly uses the conditional statements (if statements) to define the behaviour of the agent.

The results for this section is identical to the one in Section 5.1.1. The left side of Figure 11 Is the production rules from resulting decision tree and the right is the direct translation of the production rules in Java language. Essentially, the production rules are if-then statements. Therefore, the program written in Java directly uses the conditional statements (if statements) to define the behaviour of the agent.

Discussion

Training Dataset for Tic-Tac-Toe and Connect

During the development of the training dataset for Tic-Tac-Toe, it was possible to generate dataset which covers most of the winning scenarios as size of the board is small (9 blocks in the board) and there are only 8 winning scenarios. Therefore, it required relatively small size of training dataset. The dataset of 20 games is sufficient to define the overall behaviour of the agent. On the other hand, the size of the board for connect 4 is much larger (42 blocks in the board) than the Tic-Tac-Toe, hence much more winning scenarios to consider. In fact, it was too difficult and time-consuming to cover all the possibilities. This indicates that the developing process of training dataset becomes more complicated and time consuming as the AI environment becomes more complex. For the purpose of this thesis, these datasets were produced manually but considering an alternative method for generating the training dataset would speed up the agent development process.

Converting Decision Trees to Java Agent

The inferring decision trees from random forest is the foundation for defining the rules of these agents. As it can be seen in Figure 7 & 11, the decision trees consist of production rules which are just lists of “if-then” statements that can be directly translated into any other programming language. These condition statements determine the behaviour of the agent. Hence, it emphasizes the close relationship between the training dataset and the agent.

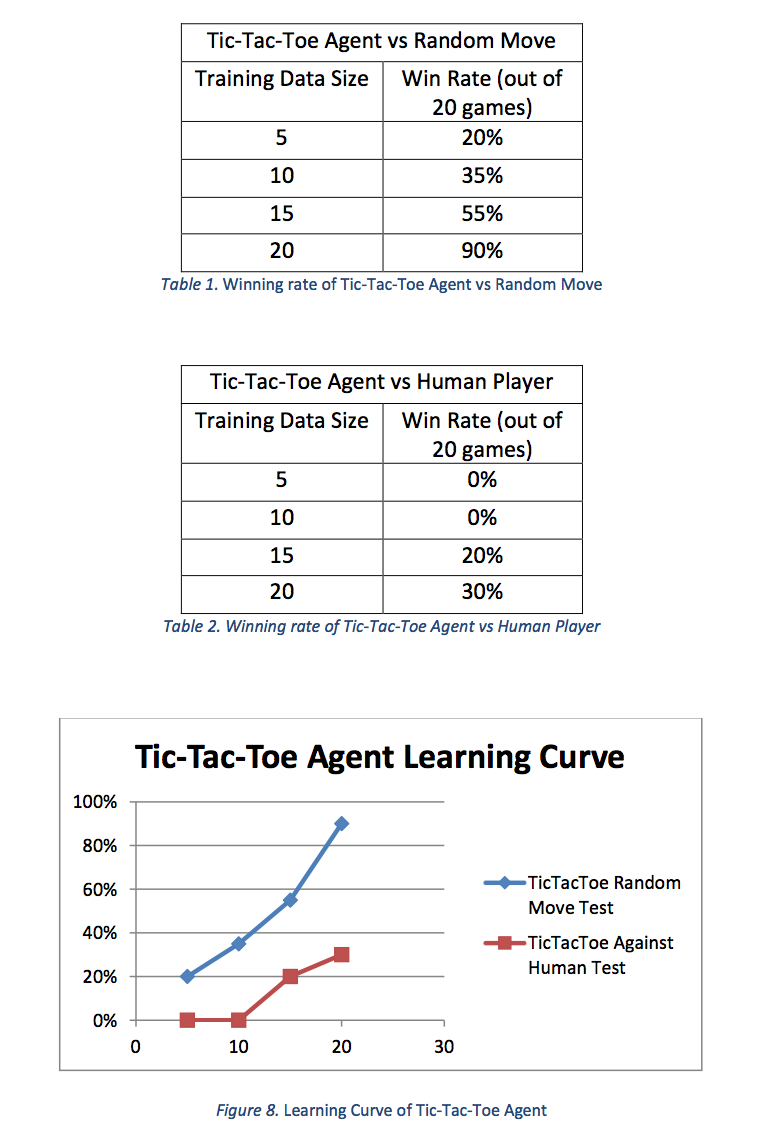

Simulating and Testing the Performance of Agents

While simulating and testing the agents, a few undesired behaviours of the agents were encountered for Tic-Tac-Toe but still maintaining acceptable level of performance. However, the Connect 4 agent was not meeting the performance expectations. This was mainly due to the fact that the size of training dataset is not sufficient to successfully train the agent. Unlike Tic-Tac-Toe case, the training dataset of 50 games weren’t enough to determine the desired behaviour of Connect 4 agent. In fact, the behaviour was very limited to the training dataset provided which agrees with Peter Stone’s statement “the behaviour was trained and tested in a limited situation, it is unclear whether it would generalize to the broader situations” [5]. Table 1, 2, 3 & 4 show that against random moves the performance of the agents are higher than the ones against human player. This means that the agents cannot yet think like human player and predict next moves. The tables also indicate that as the size of training dataset increases, the more likely to achieve desired behaviours of the agents given that the quality of the data is insured. Additionally, the graphs in Figure 8 & 12 indicates that the learning rate of the agents is increased as the size of training dataset increases.

Improving Performance of Agents

The procedures shown in Section 4.4 slightly contributed in improving the performance of agents. However, it was not an efficient way as the processes required are long and tedious. For example, if an undesired behaviour is identified, the corresponding training case is produced and included inside the existing training dataset manually. Then, a new production rule must be generated by random forest and these production rules are again translated manually into rules which defines the agent behaviour. Hence, a new way for performance improvement is required.

Conclusion

This project delivered agents capable of playing tic-tac-toe and connect 4. The agents were generated automatically using random forests and inferring decision trees. The decision trees represent the production rules which formed the basis of agent behaviours. To the best of the author’s knowledge, there is only one other example in the literature of an AI generated in this way: the application of decision tree algorithm to agent control in multi-agent environment such as robotic soccer domain [14]. The simulation and testing results showed in section 5.1 & 5.2 indicates that machine learning methods such as random forests and decision trees can be used to produce an AI agent. It also indicates that the behaviour of the agent is strongly dependent on the quality and size of the training dataset. Furthermore, the size of training dataset can be small or vast depends on the complexity of operation environment and tasks. Although these agents worked, the development exposed a number of limitations. Firstly, preparing the training dataset can be time consuming and requires high work load. All of the training datasets used for this project are constructed manually. For the purpose of this project, relatively simple game environments were chosen. However, developing AI agents with more complex environment using the manual approach would be inefficient and difficult process. Secondly, the characteristics of decision trees being a good classifier limits the AI agents to only make decisions based on current state of the environment it is in. This behaviour of agent is not considered as a “smart” agent as it cannot predict the future outcome of current decision. Finally, the agents developed using this method do not consider other possibilities in decision making. This means that the decision made by the agent is not necessarily the optimal solution to the problem. Due to these limitations, it was concluded that random forest alone is not a good way of developing AI agents. To overcome these limitations, I have proposed a new approach of combining RL with random forests and to add new functions in random forest that supports the RL methods. These new approaches could make the agents to continuously observe and learn from the environment. While this is a good possibility to an effective way of producing AI agents, limitations could also arise from difficulties in combining two methods.

Future Project Recommendations

Reference

[1] T. O. Ayodele, “Introduction to Machine Learning,” in New Advances in Machine Learning, INTECH, 2010, pp. 1-8.

[2] J. G. Carbonell, R. S. Michalski and T. M. Mitchell, “An Overview of Machine Learning,” in Machine Learning: An Artificial Intelligence approach, California, TIOGA Publishing Co., 1983, pp. 3-23.

[3] S. B. Kotsiantis, “Machine Learning: A Reiview of Classification Techniques,” in Emerging artificial intelligence applications in computer engineering Real Word AI Systems with Applications in eHealth, HCI, Information Retrieval and Pervasive Technologies, vol. 160, Amsterdam, : IOS Press, 2007, pp. 3- 24.

[4] T. O. Ayodele, “Machine Learning Overview,” in New Advances in Machine Learning, INTECH, 2010, p. 9–18.

[5] P. Stone and M. Veloso, “Using Decision Tree Confidence Factors for Multi-Agent Control,” Computer Science Department of Carnegie Mellon University, Pittsburgh, 1998.

[6] M. Yoon, J. Bekker and S. Kroon, “New reinforcement learning algorithm for robot soccer,” Orion, vol. 33, no. 1, pp. 1-20, 2016.

[7] I. Carlucho, M. D. Paula, S. A. Villar and G. G. Acosta, “Incremental Q-learning strategy for adaptive PID control of mobile robots,” Expert Systems with Applications, vol. 80, pp. 183-199, 2017.

[8] D. Michie, F. D. Spiegelhalter and C. C. Taylor, “Classification,” in MACHINE LEARNING, NEURAL AND STATISTICAL CLASSIFICATION, New York, 1994, pp. 6-16.

[9] T. O. Ayodele, “Types of Machine Learning Algorithms,” in New Advances in Machine Learning, INTEC, 2010, pp. 19-46.

[10] S. Sayad, “Decision Tree,” [Online]. Available: http://www.saedsayad.com/decision_tree.htm. [Accessed 10 March 2017].

[11] L. Breiman, “Random Forests,” in Mahince Learning, vol. 45, Kluwer Academic Publishers, 2001, pp. 5-32.

[12] N. Kumar, Linkedin, 17 June 2016. [Online]. Available: https://www.linkedin.com/pulse/random- forest-algorithm-interactive-discussion-niraj-kumar. [Accessed 15 March 2017].

[13] H. E. Osman, “Random Forest-LNS Architecture and Vision,” in New Advances in Machine Learning, INTECH, 2010, pp. 151-162.

[14] “Data Mining Tools See5 and C5.0,” Real Quest, April 2017. [Online]. Available: https://www.rulequest.com/see5-info.html. [Accessed: 04-Mar-2017. [Accessed 4 march 2017].

[15] P. Stone and M. Veloso, “Layered approach to learning client behaviours in the RoboCup soccer server.,” Applied Artificial Intelligence, vol. 12, pp. 165-188, 1998.

[16] J. Frost, M. W. Numan, M. Liebelt and B. J. Phillips, “A New Computer for Cognitive Computing,” in IEEE International Conference on Cognitive Informatics & Cognitive Computing, Beijing, 2015.

[17] M. W. Numan, J. Frost and B. J. Phillips, “A Network-based Communication Platform for a Cognitive Computer,” 2015.

[18] M. C. Choy, D. Srinivasan and R. L. Cheu, “Nueral Networks for Continuous Online Learning and Control,” IEEE, vol. 17, no. 6, 2006.