Projects:2014S1-24 AI Agent Development for an Autonomous Trash Collecting Robot


Project Information

The goal of this project was to create a software environment for the development of Artificial Intelligence [AI] agents, and an agent to operate in it.

The creation of this agent facilitates an exploration of the Soar architecture and promotes an understanding of its technical challenges and of the kinds of problems it may be suited to solving. The virtual environment allows the agent to be developed under ideal conditions, with the complications of designing and interfacing with hardware abstracted away.

Environment

The software environment consists of two main components: the Virtual Robot Development Environment [VRDE] and the Communication Layer [CL].

Virtual Robot Development Environment

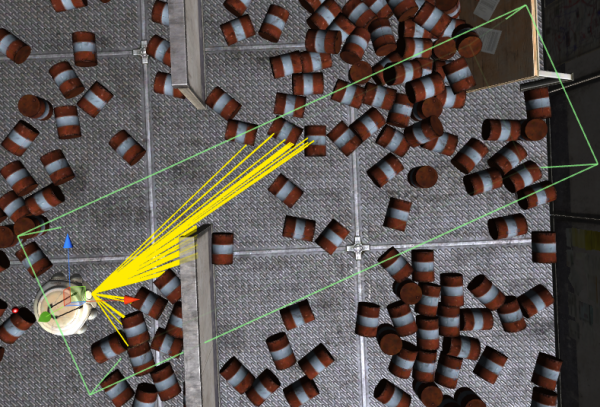

The VRDE is created in the Unity3D game engine. It contains a single, user-controllable robot, which can be driven around, pick up loose cans, and deposit them in bins.

The robot may be instructed to move, rotate, and halt, as well as to pick up and discard items. These actions can be triggered by a user via the keyboard or by an AI agent written in Soar.

To allow the AI to make appropriate decisions, the robot is equipped with a number of sensors that feed it data about the virtual environment. These sensors are designed to mimic physically realisable sensors, but are simplified so that the AI can use them easily. The currently implemented sensors are:

- Camera - Notifies the AI of any can or bin in sight, sending the name, range, and bearing of the object of interest.

- Compass - Continuously sends the robot's current orientation, relative to its spawn orientation.

- Distance - Sends the total distance moved during the move operation in progress.

- Rotation - Sends the total number of degrees rotated during the rotate operation in progress.

- Collision - Acts like eight switches surrounding the robot, each of which notifies the AI whenever it is triggered by the environment.

- Movement Status - Denotes whether the robot is currently executing a move or rotate operation.

- Arm Status - Denotes whether the arm is currently holding a can.
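For illustration, the readings listed above could be collected into one immutable snapshot on the Java side of the system. This is a minimal sketch under assumptions: the type and field names (`SensorSnapshot`, `Sighting`, `CollisionSwitch`, `sees`) are hypothetical rather than taken from the VRDE codebase, and this page does not specify the units of range and bearing.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Set;

/** The eight collision switches around the robot (names follow the Execute-movement goal). */
enum CollisionSwitch { FRONT, FRONT_RIGHT, RIGHT, REAR_RIGHT, REAR, REAR_LEFT, LEFT, FRONT_LEFT }

/** One camera sighting: object name, range and bearing (units are an assumption). */
record Sighting(String name, double range, double bearing) {}

/** Hypothetical snapshot of every sensor reading delivered in one update. */
record SensorSnapshot(
        List<Sighting> camera,            // every can or bin currently in sight
        double compassDegrees,            // orientation relative to the spawn orientation
        double distanceMoved,             // progress of the move operation in progress
        double degreesRotated,            // progress of the rotate operation in progress
        Set<CollisionSwitch> collisions,  // switches currently triggered
        boolean moving,                   // movement status: a move or rotate is executing
        boolean holdingCan) {             // arm status

    SensorSnapshot {
        // Defensive, immutable copies so a snapshot cannot change after it is taken.
        camera = List.copyOf(camera);
        collisions = Set.copyOf(collisions);
    }

    /** True if any collision switch is triggered. */
    boolean anyCollision() {
        return !collisions.isEmpty();
    }

    /** True if the camera currently sees an object with the given name, e.g. "can" or "bin". */
    boolean sees(String name) {
        return camera.stream().anyMatch(s -> s.name().equals(name));
    }

    /** A robot at rest at its spawn pose, empty-handed, with nothing in sight. */
    static SensorSnapshot idle() {
        return new SensorSnapshot(List.of(), 0.0, 0.0, 0.0,
                EnumSet.noneOf(CollisionSwitch.class), false, false);
    }
}
```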

This set of sensors is sufficient for the AI's current task of hunting down loose cans and depositing them in bins. It also supports potential future goals, such as mapping the positions of bins for more efficient disposal.

The VRDE has a well-maintained codebase, a test suite, documentation, and a developer wiki.

Communication Layer

The Communication Layer is written in Java and allows the VRDE to communicate with the Soar AI agent through JSoar, a Java implementation of Soar.

The CL attaches event listeners to certain output links in the AI agent, which allows the agent to send instructions by writing to these links. The CL writes sensor data from the VRDE onto input links in the agent's memory.
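The two directions of traffic described above can be sketched as follows. To keep the example self-contained it deliberately does not use the JSoar API: the agent's output link is modelled by a hypothetical `commandWritten` callback that dispatches to registered listeners, and the input link by a plain attribute/value map. All names here are illustrative.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical stand-in for the CL's listener and input-writing roles (not the JSoar API). */
final class CommunicationLayerSketch {
    // Listeners keyed by output-link command name, e.g. "move-forward".
    private final Map<String, Consumer<Double>> handlers = new HashMap<>();
    // Latest sensor values written onto the simulated input link.
    private final Map<String, Object> inputLink = new HashMap<>();

    /** Registers a listener for one output-link command. */
    void onCommand(String name, Consumer<Double> handler) {
        handlers.put(name, handler);
    }

    /** Called when the agent writes a command; returns false if no listener is registered for it. */
    boolean commandWritten(String name, double amount) {
        Consumer<Double> handler = handlers.get(name);
        if (handler == null) {
            return false;
        }
        handler.accept(amount);
        return true;
    }

    /** Called when the VRDE reports a sensor value; replaces the previous value on the input link. */
    void sensorUpdate(String attribute, Object value) {
        inputLink.put(attribute, value);
    }

    /** Reads back what the agent would currently see on its input link. */
    Object inputValue(String attribute) {
        return inputLink.get(attribute);
    }
}
```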

The CL and VRDE use JavaScript Object Notation [JSON] to communicate. All instructions, network messages, and sensor data are encoded as simple JSON structures and sent over a socket connection between the two.
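A minimal sketch of the JSON messaging, assuming one flat JSON object per line (newline-delimited framing) over the socket. The message fields (`type`, `name`, `amount`) and the `JsonWire` helper are hypothetical; this page does not document the actual schema, and a production implementation would more likely use a JSON library than hand-built strings.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Writer;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical newline-delimited JSON helpers: one flat JSON object per line. */
final class JsonWire {
    /** Wraps s in quotes, escaping the characters JSON does not allow raw inside a string. */
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c)); // other control characters
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.append('"').toString();
    }

    /** Encodes an instruction, e.g. move-forward 2.5, as one JSON object. */
    static String instruction(String name, double amount) {
        if (!Double.isFinite(amount)) {
            throw new IllegalArgumentException("JSON has no NaN or Infinity");
        }
        return "{\"type\":\"instruction\",\"name\":" + quote(name) + ",\"amount\":" + amount + "}";
    }

    /** Writes one message followed by a newline, which marks the message boundary. */
    static void send(Writer out, String json) throws IOException {
        out.write(json);
        out.write('\n');
        out.flush();
    }

    /** Reads newline-delimited messages until the stream ends, skipping blank lines. */
    static List<String> receiveAll(BufferedReader in) throws IOException {
        List<String> messages = new ArrayList<>();
        String line;
        while ((line = in.readLine()) != null) {
            if (!line.isBlank()) {
                messages.add(line);
            }
        }
        return messages;
    }
}
```

Over a real connection, `send` would be given a `Writer` wrapping the socket's output stream and `receiveAll` a `BufferedReader` wrapping its input stream; the line framing lets the receiver split the byte stream back into complete messages.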

AI Agent

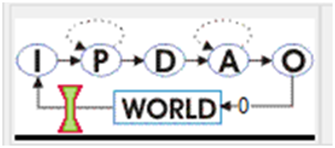

The AI agent is written in Soar, a unified architecture used particularly for developing intelligent systems. Soar is a cognitive architecture, which allows certain aspects of human cognition, such as memory and reward systems, to be modelled. The agent uses the sensor data from the VRDE to make decisions. The execution cycle of Soar is shown below:

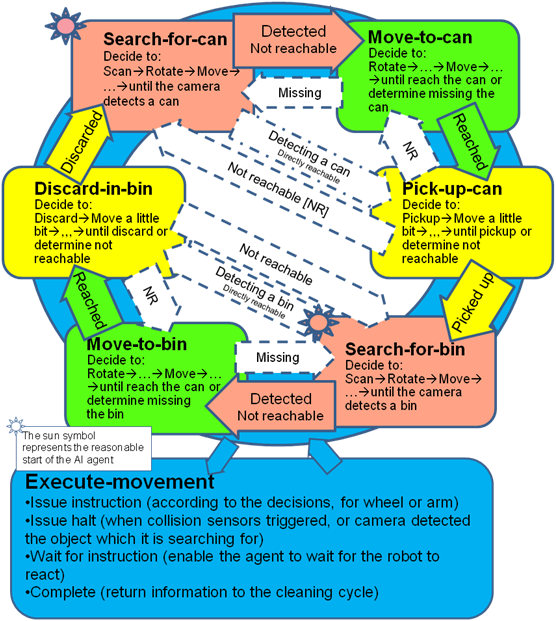
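Soar's decision cycle (input, proposal, decision, application, output) can be approximated by a toy loop: input is copied into working memory, every operator whose condition matches is proposed, one is selected, and its application updates memory before the choice is output. This is a deliberately simplified, hypothetical illustration in Java rather than a description of how Soar is implemented: a single integer `preference` stands in for Soar's richer preference semantics, and impasses (no single best operator) are not modelled.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Consumer;
import java.util.function.Predicate;

/** A toy operator: proposed when its condition matches working memory. */
record Operator(String name, int preference,
                Predicate<Map<String, Object>> proposeWhen,
                Consumer<Map<String, Object>> apply) {}

final class ToyDecisionCycle {
    /** Runs one input/proposal/decision/application/output pass and returns the selected operator. */
    static Optional<String> step(Map<String, Object> memory, Map<String, Object> input,
                                 List<Operator> operators) {
        memory.putAll(input);                                   // input: sensor data enters memory
        List<Operator> proposed = new ArrayList<>();
        for (Operator op : operators) {                         // proposal: match conditions
            if (op.proposeWhen().test(memory)) {
                proposed.add(op);
            }
        }
        Optional<Operator> chosen = proposed.stream()           // decision: pick one operator
                .max(Comparator.comparingInt(Operator::preference));
        chosen.ifPresent(op -> op.apply().accept(memory));      // application: change memory
        return chosen.map(Operator::name);                      // output: report the choice
    }
}
```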

The agent has the following goal hierarchy:

Search-for-can

- Goal Description: If the robot is not holding a can and no can is detected by the camera, the agent performs the following steps:

  - first, rotate the robot 360 degrees to scan its surroundings;

  - next, choose a random direction;

  - then, move a random distance;

  - repeat until the desired state is reached.

- Desired state: the camera sensor reports that a can is visible.

- Consequence:

  - enable move-to-can if the can is not reachable;

  - enable pick-up-can if the can is directly reachable.
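One search iteration can be sketched as a short plan of instructions. This sketch assumes that each instruction is later carried out by the Execute-movement subgoal, which halts early on a collision or when the target is seen; `MAX_LEG`, the `Instruction` record, and the use of `rotate-right` for both turns are assumptions, not values from the project. Because Search-for-bin uses the same steps, the same iteration would serve both goals.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** An instruction name from the Execute-movement goal, with its amount (hypothetical type). */
record Instruction(String name, double amount) {}

final class SearchPlanner {
    static final double MAX_LEG = 5.0;   // assumed upper bound on a random move

    /**
     * Builds one search iteration: a full 360-degree scan, a turn to a random heading,
     * then a move of random length. The caller repeats iterations until the camera reports the target.
     */
    static List<Instruction> searchIteration(Random rng) {
        List<Instruction> plan = new ArrayList<>();
        plan.add(new Instruction("rotate-right", 360.0));                      // scan
        plan.add(new Instruction("rotate-right", rng.nextDouble() * 360.0));   // random direction in [0, 360)
        plan.add(new Instruction("move-forward", rng.nextDouble() * MAX_LEG)); // random distance in [0, MAX_LEG)
        return plan;
    }
}
```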

Move-to-can

- Goal Description: If the robot is not holding a can, and a can is detected by the camera but is not reachable with the arm, the agent performs the following steps:

  - first, rotate to face the can;

  - then, move towards the can until it is reachable;

  - if the can's bearing again becomes significant, repeat these steps until the desired state is reached.

- Desired state: the camera sensor reports that the can is reachable, or the can is no longer visible.

- Consequence:

  - enable pick-up-can if the can is reachable;

  - enable search-for-can if the can is no longer visible.
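A single step of the approach behaviour might look like the following. The bearing tolerance, the arm reach, and the sign convention (a positive bearing meaning the target is to the right) are assumed values for illustration, since this page does not state them. An empty result means the target is reachable, so control passes to Pick-up-can (or to Discard-in-bin, when the same step is used for Move-to-bin).

```java
import java.util.Optional;

/** Hypothetical single step of Move-to-can / Move-to-bin. */
final class ApproachController {
    static final double BEARING_TOLERANCE_DEG = 5.0; // assumed "significant bearing" threshold
    static final double REACH = 0.5;                  // assumed arm reach, in VRDE distance units

    /** An instruction name from the Execute-movement goal, with its amount. */
    record Instruction(String name, double amount) {}

    /**
     * Next instruction for a target seen at the given range and bearing, or empty when the
     * target is already within reach. If the camera loses the target, the caller should fall
     * back to the matching search goal instead of calling this method.
     */
    static Optional<Instruction> next(double range, double bearing) {
        if (range <= REACH) {
            return Optional.empty();
        }
        if (Math.abs(bearing) > BEARING_TOLERANCE_DEG) {
            // Turn to face the target first (positive bearing = target to the right, assumed).
            return Optional.of(bearing > 0
                    ? new Instruction("rotate-right", bearing)
                    : new Instruction("rotate-left", -bearing));
        }
        // Roughly facing the target: close the remaining gap up to arm's reach.
        return Optional.of(new Instruction("move-forward", range - REACH));
    }
}
```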

Pick-up-can

- Goal Description: If the robot is not holding a can, a can is detected by the camera, and it is reachable with the arm, the agent performs the following steps:

  - first, attempt to pick up the can;

  - if unsuccessful, move forward a short distance;

  - repeat until the desired state is reached.

- Desired state: the arm status sensor reports that the robot is holding a can.

- Consequence:

  - enable move-to-can if the can is not reachable but still visible;

  - enable search-for-can if the can is not reachable and no longer visible.
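The pick-up retry loop can be sketched against a minimal, hypothetical `Robot` interface. Unlike the goal description, which repeats until the desired state is reached, this sketch adds an attempt limit (`maxAttempts`) so that it always terminates; `NUDGE` is an assumed value for the short forward move. Discard-in-bin follows the same shape, with a discard action in place of the pick-up.

```java
/** Hypothetical retry loop for Pick-up-can. */
final class RetryingArm {
    /** Minimal view of the robot assumed for this sketch. */
    interface Robot {
        void pickUp();
        void moveForward(double amount);
        boolean holdingCan();       // arm status sensor
        boolean targetReachable();  // derived from the camera's range reading
    }

    static final double NUDGE = 0.1; // assumed short forward move, in VRDE distance units

    /** Tries to pick up, nudging forward after each failure; true once the arm reports a can. */
    static boolean pickUpWithRetries(Robot robot, int maxAttempts) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            robot.pickUp();
            if (robot.holdingCan()) {
                return true;   // desired state reached
            }
            if (!robot.targetReachable()) {
                return false;  // hand back to move-to-can or search-for-can
            }
            robot.moveForward(NUDGE);
        }
        return false;          // attempt limit reached
    }
}
```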

Search-for-bin

- Goal Description: If the robot is holding a can and no bin is detected by the camera, the agent performs the following steps:

  - first, rotate the robot 360 degrees to scan its surroundings;

  - next, choose a random direction;

  - then, move a random distance;

  - repeat until the desired state is reached.

- Desired state: the camera sensor reports that a bin is visible.

- Consequence:

  - enable move-to-bin if the bin is not reachable;

  - enable discard-in-bin if the bin is directly reachable.

Move-to-bin

- Goal Description: If the robot is holding a can, and a bin is detected by the camera but is not reachable with the arm, the agent performs the following steps:

  - first, rotate to face the bin;

  - then, move towards the bin until it is reachable;

  - if the bin's bearing again becomes significant, repeat these steps until the desired state is reached.

- Desired state: the camera sensor reports that the bin is reachable, or the bin is no longer visible.

- Consequence:

  - enable discard-in-bin if the bin is reachable;

  - enable search-for-bin if the bin is no longer visible.

Discard-in-bin

- Goal Description: If the robot is holding a can, a bin is detected by the camera, and it is reachable with the arm, the agent discards the can in the bin using the following steps:

  - first, discard the can;

  - if unsuccessful, move forward a short distance;

  - repeat until the desired state is reached.

- Desired state: the arm status sensor reports that the robot is no longer holding a can.

- Consequence:

  - enable move-to-bin if the bin is not reachable but still visible;

  - enable search-for-bin if the bin is not reachable and no longer visible.
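Taken together, the six goal descriptions above partition the robot's state on three conditions: whether it is holding a can, whether the current target (a can when empty-handed, a bin when holding a can) is visible, and whether that target is reachable. The following Java function restates that mapping; in the actual agent the selection is made by Soar rules, so this is only an illustrative summary with hypothetical names.

```java
/** Hypothetical restatement of how the six top-level goals partition the robot's state. */
final class GoalSelector {
    enum Goal { SEARCH_FOR_CAN, MOVE_TO_CAN, PICK_UP_CAN, SEARCH_FOR_BIN, MOVE_TO_BIN, DISCARD_IN_BIN }

    /**
     * holdingCan: arm status sensor.
     * targetVisible: the camera reports a can (when empty-handed) or a bin (when holding a can).
     * targetReachable: that object is within reach of the arm.
     */
    static Goal select(boolean holdingCan, boolean targetVisible, boolean targetReachable) {
        if (!holdingCan) {
            if (!targetVisible) {
                return Goal.SEARCH_FOR_CAN;
            }
            return targetReachable ? Goal.PICK_UP_CAN : Goal.MOVE_TO_CAN;
        }
        if (!targetVisible) {
            return Goal.SEARCH_FOR_BIN;
        }
        return targetReachable ? Goal.DISCARD_IN_BIN : Goal.MOVE_TO_BIN;
    }
}
```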

Execute-movement

- Goal Description: Issue a move or rotate instruction to the robot and wait until the instruction completes, a collision occurs, or, when the supergoal is a search, the desired object is detected. In the latter two cases, issue a halt instruction.

- Desired state: the goal has set ^goal-status complete in its persistent structure.

- Consequence:

  - return value:

    - `<top-state> ^persistent execute-movement`

      - `^status complete`

      - `^instruction-name (move-forward|move-backward|rotate-left|rotate-right)`

      - `^amount (0...)`

      - `^collision.(front,front-right,front-left,left,right,rear,rear-right,rear-left) (True|False)`

      - `^camera.(can,bin).(range,bearing) (0...)`
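The monitoring behaviour of Execute-movement can be sketched as a loop over the sensor updates that arrive after the instruction has been issued. The `Reading` record, the `Outcome` enum, and the `halt` callback are hypothetical; in the agent the result is recorded on the ^persistent structure rather than returned from a method.

```java
import java.util.Iterator;

/** Hypothetical sketch of Execute-movement's wait-and-halt logic. */
final class ExecuteMovementSketch {
    /** The readings this sketch needs from each sensor update. */
    record Reading(boolean moving, boolean collision, boolean targetSeen) {}

    enum Outcome { COMPLETED, HALTED_ON_COLLISION, HALTED_ON_TARGET }

    /**
     * Watches sensor updates after a move or rotate instruction has been issued.
     * searching: true when the supergoal is Search-for-can or Search-for-bin.
     * halt: sends the halt instruction to the robot.
     */
    static Outcome monitor(Iterator<Reading> readings, boolean searching, Runnable halt) {
        while (readings.hasNext()) {
            Reading r = readings.next();
            if (r.collision()) {
                halt.run();
                return Outcome.HALTED_ON_COLLISION;
            }
            if (searching && r.targetSeen()) {
                halt.run();
                return Outcome.HALTED_ON_TARGET;
            }
            if (!r.moving()) {
                return Outcome.COMPLETED;   // movement status: the instruction has finished
            }
        }
        throw new IllegalStateException("sensor stream ended before the movement finished");
    }
}
```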

Outcomes

This project succeeded in its goal of creating a virtual environment in which a Soar AI agent can operate. It provides an appropriate platform for creating and exploring Soar agents for autonomous vehicles. The delivered AI agent demonstrates that the system can interact with an agent and provide it with enough information to make decisions.

Team

Students

- David Reece

- Shuangsheng Liu

Supervisors

- Dr Braden Phillips

- Dr Brian Ng