Projects:2014S1-31 Autonomous Robot Navigation using a Movable Kinect 3D sensor

Project Introduction:

The goal of this project is to extend the functionality and navigation performance of an existing robot platform by adding a movable 3D Kinect sensor. The robot will be able to navigate through the environment and perform collision avoidance whilst it searches for an object of interest.

Objectives:

--Collision avoidance

--Object recognition

--Path planning

Team Members:

Yinzia Pang

Yingzheng Wang

Supervisors:

Danny Gibbins

Braden Phillips

Overall Approach:

The approach has two parts: a hardware approach and a software approach.

Hardware Approach:

The hardware is based on the robot structure from the previous project. The existing platform consists of eight ultrasonic sensors, one Mux-shield board, one Wild-thumper board and four motors. To implement object recognition and path planning, a Kinect sensor and a servo motor are added to the existing platform.
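The ultrasonic sensors and the servo that moves the Kinect both need simple unit conversions on the controller. The sketch below is illustrative only. It assumes ultrasonic sensors that report the round-trip echo time in microseconds and a hobby servo driven by 1000 to 2000 microsecond pulses over 0 to 180 degrees; neither the sensor model nor the pulse range is given in this project description, and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: convert an ultrasonic round-trip echo time (microseconds)
// to a one-way distance (cm). Assumes the speed of sound is ~343 m/s (about 20 C),
// i.e. 0.0343 cm/us; the pulse travels out and back, so the path is halved.
double echoToDistanceCm(double echoMicros) {
    assert(echoMicros >= 0.0);
    return echoMicros * 0.0343 / 2.0;
}

// Hypothetical helper: map a servo angle (degrees) to a pulse width (microseconds).
// Assumes 1000 us at 0 degrees and 2000 us at 180 degrees; requests outside
// that range are clamped to the assumed limits.
int servoAngleToPulseMicros(double angleDeg) {
    const double clamped = std::min(180.0, std::max(0.0, angleDeg));
    return static_cast<int>(1000.0 + clamped / 180.0 * 1000.0 + 0.5);  // round to nearest us
}
```

For example, an echo of 1000 us corresponds to about 17.15 cm, and a 90 degree request maps to a 1500 us pulse under these assumptions.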

Software Approach:

The Kinect image processing code and the path planning and collision avoidance code are written in C++. The Kinect image processing program outputs not only the object information but also wide-range environment information. This output is then used by the decision-making program.

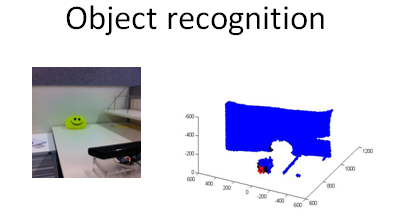
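One way a decision-making program could combine the Kinect output with the ultrasonic readings is a fixed priority order. This is a hypothetical sketch, not the project's documented design: the `KinectOutput` fields, the `decide` function, the 30 cm safety distance and the priority order (ultrasonic safety first, then the object of interest, then open space) are all assumptions.

```cpp
#include <cassert>

// Hypothetical per-frame summary produced by the Kinect image-processing program.
struct KinectOutput {
    bool objectFound = false;          // object of interest detected in this frame
    double objectBearingDeg = 0.0;     // bearing to the object (assumed: negative = left)
    double openSpaceBearingDeg = 0.0;  // bearing to the centre of the best open space
};

enum class Command { AvoidObstacle, ApproachObject, FollowOpenSpace };

// Hypothetical arbitration: an obstacle closer than the safety distance always
// wins, then the object of interest, otherwise keep exploring open space.
Command decide(const KinectOutput& kinect, double nearestUltrasonicCm,
               double safetyDistanceCm = 30.0) {
    if (nearestUltrasonicCm < safetyDistanceCm) return Command::AvoidObstacle;
    if (kinect.objectFound) return Command::ApproachObject;
    return Command::FollowOpenSpace;
}
```

Putting the ultrasonic check first keeps collision avoidance independent of the slower image-processing loop, which is one reasonable choice among several.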

Object Recognition:

Object recognition is performed using the 3D Kinect sensor. In our project, the robot can recognize a simple object of a single color. An example is shown below, in which a yellow ball is recognized.

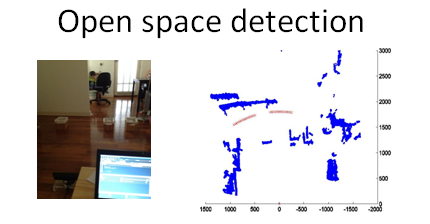
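Recognizing a single-colored object can be done with simple color thresholding followed by a centroid calculation. The sketch below assumes that technique, an RGB frame stored row-major, and hand-picked RGB thresholds for yellow; `findYellowBlob`, the thresholds and `minPixels` are hypothetical and would need tuning under real lighting.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

struct Blob {
    bool found = false;
    double cx = 0.0, cy = 0.0;  // centroid in pixel coordinates
    int pixels = 0;             // number of pixels classified as yellow
};

// Assumed thresholds for "yellow": strong red and green, weak blue.
bool isYellow(const Rgb& p) {
    return p.r > 150 && p.g > 150 && p.b < 100;
}

// Threshold every pixel, then average the coordinates of the matches.
// Fewer than minPixels matches is treated as "no object" to reject noise.
Blob findYellowBlob(const std::vector<Rgb>& image, int width, int height,
                    int minPixels = 50) {
    assert(static_cast<int>(image.size()) == width * height);
    Blob blob;
    long long sumX = 0, sumY = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (isYellow(image[y * width + x])) {
                sumX += x;
                sumY += y;
                ++blob.pixels;
            }
        }
    }
    if (blob.pixels >= minPixels) {
        blob.found = true;
        blob.cx = static_cast<double>(sumX) / blob.pixels;
        blob.cy = static_cast<double>(sumY) / blob.pixels;
    }
    return blob;
}
```

Assuming the depth image has been registered to the color image, the depth value at the centroid could then give the ball's distance from the robot.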

Path planning:

In this project, the path planning method is 'Find the open space'. An example is shown below, in which the red lines indicate the detected open spaces.

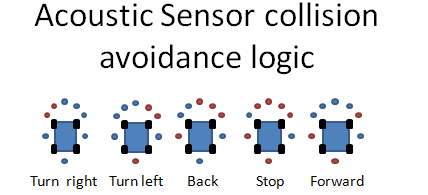
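The 'Find the open space' idea can be illustrated on a single depth profile with one value per image column (for example, the nearest depth in each column of the Kinect depth image). This is a sketch under stated assumptions: the clearance threshold, the minimum gap width, treating a zero depth (no reading) as blocked, and steering toward the centre of the widest gap are illustrative choices, and `findOpenSpaces` and `bestHeadingColumn` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct Gap { int first; int last; };  // inclusive range of open columns

// A column is "open" if its nearest obstacle is farther than clearDepthM.
// A zero depth (no reading) fails that test, so it is treated as blocked.
// Runs of open columns narrower than minWidth are discarded.
std::vector<Gap> findOpenSpaces(const std::vector<double>& columnDepthM,
                                double clearDepthM, int minWidth) {
    std::vector<Gap> gaps;
    int start = -1;  // first column of the current open run, or -1
    const int n = static_cast<int>(columnDepthM.size());
    for (int i = 0; i <= n; ++i) {  // i == n acts as a blocked sentinel
        const bool open = i < n && columnDepthM[i] > clearDepthM;
        if (open && start < 0) start = i;
        if (!open && start >= 0) {
            if (i - start >= minWidth) gaps.push_back({start, i - 1});
            start = -1;
        }
    }
    return gaps;
}

// Centre column of the widest gap, or -1 if there is no open space.
int bestHeadingColumn(const std::vector<Gap>& gaps) {
    int best = -1, bestWidth = 0;
    for (const Gap& g : gaps) {
        const int width = g.last - g.first + 1;
        if (width > bestWidth) {
            bestWidth = width;
            best = (g.first + g.last) / 2;
        }
    }
    return best;
}
```

The chosen column could then be converted to a steering bearing using the camera's horizontal field of view.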

Collision avoidance:

Collision avoidance is performed using the acoustic (ultrasonic) sensors. The avoidance logic is shown below.
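The following is a hypothetical sketch of how eight ultrasonic readings could be turned into a motion command; it is not the project's actual avoidance logic. The sensor layout (45 degrees apart, index 0 facing forward, indices increasing clockwise), the 30 cm safety distance and the tie-break toward turning right are all assumptions.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

enum class Move { Forward, TurnLeft, TurnRight, Reverse };

// Assumed layout: 0 = front, 1 = front-right, 2 = right, ..., 6 = left, 7 = front-left.
Move avoid(const std::array<double, 8>& distCm, double safeCm = 30.0) {
    // Drive forward only if the front and both front-diagonal sensors are clear.
    const double front = std::min({distCm[7], distCm[0], distCm[1]});
    if (front > safeCm) return Move::Forward;
    // Otherwise compare the clearance on each side.
    const double right = std::min(distCm[1], distCm[2]);
    const double left = std::min(distCm[7], distCm[6]);
    if (std::max(left, right) <= safeCm) return Move::Reverse;  // boxed in
    return left > right ? Move::TurnLeft : Move::TurnRight;     // ties turn right
}
```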

Testing:

A test result is shown below. It suggests that the robot is able to detect the object, find open space for navigation and avoid collisions.