Projects:2014S1-39 Tell your Robot where to go with RFID (Improving Autonomous Navigation)

Tell your Robot where to go with RFID Project

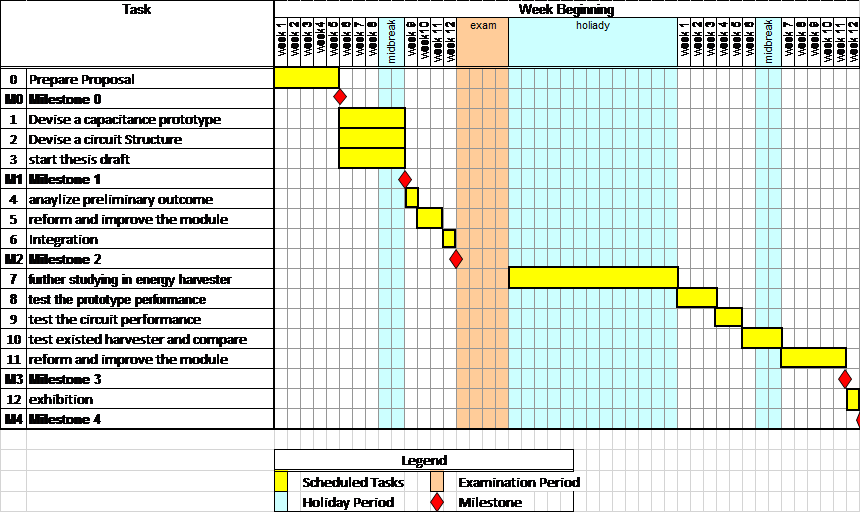

This project aims to improve the robot's simultaneous localization and mapping (SLAM) capabilities, making robot SLAM faster and more accurate.

Project introduction

The project is divided into two main parts: localization and mapping.

The localization part consists of three steps:

First, RFID position data are obtained from the RFID sensor and environment data are obtained from the laser scanner.

Second, the RFID and laser data are fused to generate an optimized position.

Third, those positions and the RFID tag IDs are used to generate a data package describing the distribution of tag positions.

For the map building part, the objectives below should be achieved in order to improve robot localization and map building:

1. Adding RFID information to improve robot localization.

2. Using RFID information (semantic information) to improve robot map building.

When the robot receives the fused data, the map is ready to be updated, but the robot does not yet know which groups of tags describe the same object. The robot therefore needs to group the tags based on the information stored in each RFID tag.

After grouping, the robot can use the tags' information to update the map. Once the map is updated, the robot can navigate according to the semantic information.
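
The grouping step could be sketched as follows. This is only a minimal illustration, assuming that each tag record carries a tag ID, a fused (x, y) position and an object label read from the tag. The `Tag` type, its field names, the bounding-box helper and the sample data are all hypothetical and are not taken from the project code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Tag:
    """Hypothetical tag record: ID, fused position, and semantic label."""
    tag_id: str
    x: float
    y: float
    label: str  # object described by the tag, e.g. "table"


def group_tags_by_object(tags):
    """Group tags that describe the same object (same semantic label)."""
    groups = defaultdict(list)
    for tag in tags:
        groups[tag.label].append(tag)
    return dict(groups)


def bounding_box(group):
    """Axis-aligned rectangle (xmin, ymin, xmax, ymax) spanned by a group."""
    xs = [t.x for t in group]
    ys = [t.y for t in group]
    return (min(xs), min(ys), max(xs), max(ys))


# Illustrative data only: four corner tags on one object, one tag on another.
tags = [
    Tag("T1", 0.0, 0.0, "table"),
    Tag("T2", 1.0, 0.0, "table"),
    Tag("T3", 1.0, 0.5, "table"),
    Tag("T4", 0.0, 0.5, "table"),
    Tag("T5", 2.0, 2.0, "shelf"),
]
groups = group_tags_by_object(tags)
table_box = bounding_box(groups["table"])
```

Grouping by a label stored on the tag keeps the map update independent of the order in which tags are detected.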

A sketch of the intended semantic map is shown below.

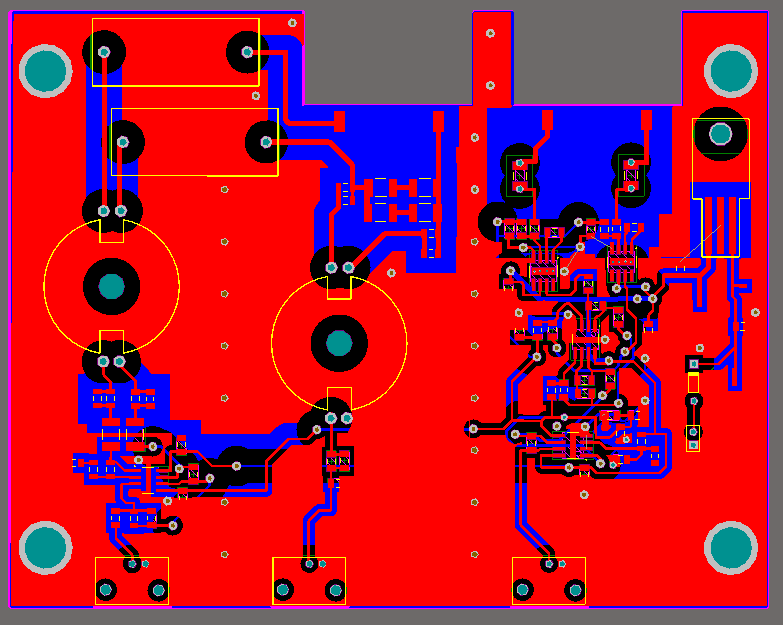

Project overview

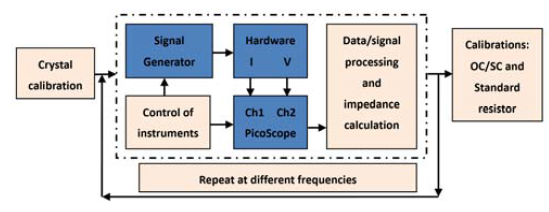

As shown below, this project is divided into two parts: the sensor module and the mapping module.

The sensor module focuses on localizing RFID tag positions, which includes RFID and laser measurement and data fusion.

The mapping module aims to build a map based on the semantic information, and consists of two parts: moving and mapping.

Xu Zhihao is in charge of the sensor module.

Qiao Zhi is responsible for the mapping module.

The project overview is shown below.

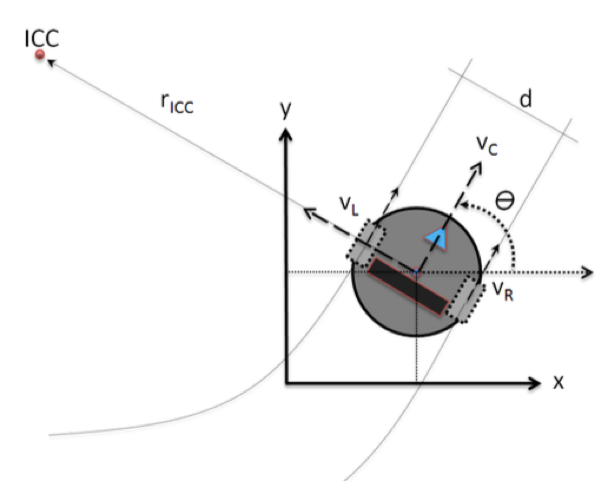

Sensor Module Algorithm

The structure of the sensor module section is as follows.

First, the RFID sensor module and the laser sensor module are discussed separately.

Then the data fusion part is introduced. Finally, the experimental results are analysed.

Before starting, the limitations of the laser are discussed.

As shown below, the laser cannot create an accurate map when there are not enough points and an object is located on the scanning path.

1. Laser

To begin with, a single cycle of a particle filter is shown below.

Step 1: Likelihood calculation

1.1 Obtain the unweighted values.

1.2 Each measurement can be seen as a particle, and each reading has one weight.

1.3 A weight calculation (weight = posterior) is needed to generate an importance weight.

1.4 The area represented by the importance weight values is used as the scan area of the next cycle.

Step 2: Likelihood optimisation

2.1 Comparing with the threshold

2.2 Re-sampling

Weight computation

Importance density function:

Practical weight calculation formula
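
The single cycle listed above (weight calculation, threshold comparison, re-sampling) could look roughly like the sketch below. It is not the project's own formula. It assumes the common bootstrap form in which each weight is multiplied by a Gaussian measurement likelihood, and it assumes that the threshold test is applied to the effective sample size. The function names, the 1-D range state and all numeric values are hypothetical.

```python
import math
import random


def gaussian_likelihood(measured, expected, sigma):
    """Unnormalised Gaussian likelihood of a range reading."""
    return math.exp(-0.5 * ((measured - expected) / sigma) ** 2)


def particle_filter_cycle(particles, weights, measurement, sigma, ess_ratio, rng):
    """One weight-update and (conditional) re-sampling cycle.

    particles: candidate values (here 1-D ranges in metres)
    weights:   current normalised weights, same length as particles
    """
    # Step 1: likelihood calculation -> importance weights
    new_w = [w * gaussian_likelihood(measurement, p, sigma)
             for p, w in zip(particles, weights)]
    total = sum(new_w)
    if total == 0.0:
        # No particle is compatible with the reading: fall back to uniform
        new_w = [1.0 / len(particles)] * len(particles)
    else:
        new_w = [w / total for w in new_w]

    # Step 2.1: compare the effective sample size with a threshold (assumed test)
    ess = 1.0 / sum(w * w for w in new_w)
    if ess < ess_ratio * len(particles):
        # Step 2.2: re-sampling in proportion to the importance weights
        particles = rng.choices(particles, weights=new_w, k=len(particles))
        new_w = [1.0 / len(particles)] * len(particles)
    return particles, new_w


rng = random.Random(0)  # fixed seed so the sketch is repeatable
particles = [rng.uniform(0.0, 5.0) for _ in range(500)]
weights = [1.0 / len(particles)] * len(particles)
particles, weights = particle_filter_cycle(particles, weights, 2.0, 0.2, 0.5, rng)
estimate = sum(p * w for p, w in zip(particles, weights))
```

After re-sampling, the surviving particles cluster around the measured range, which corresponds to narrowing the scan area for the next cycle.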

2. RFID

The RFID algorithm mainly consists of two parts: measurement and self-matching.

Measurement:

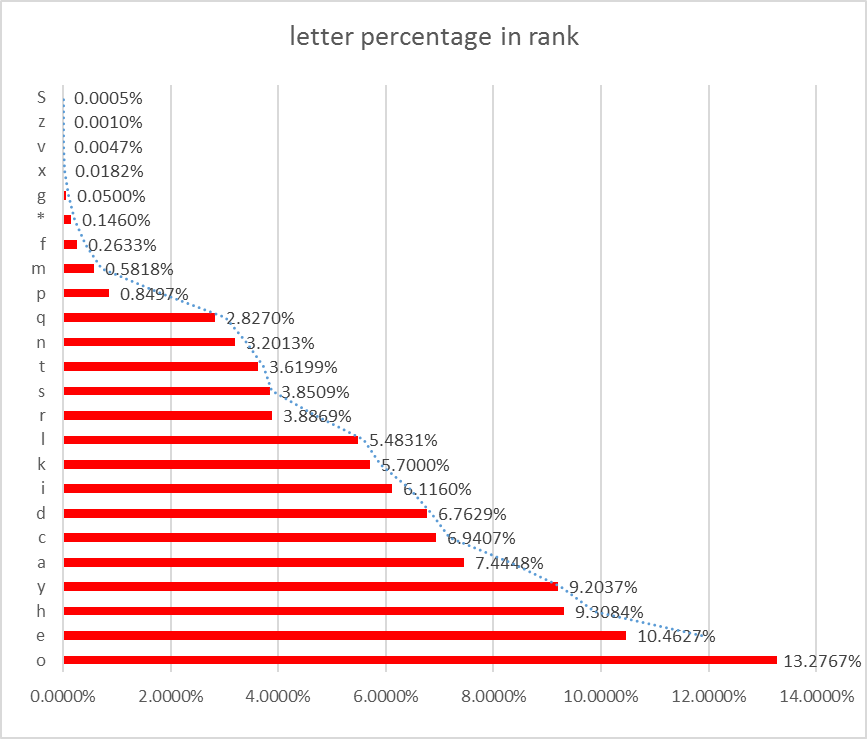

2.1. Ranking

Due to human influence, a particle filter cannot be applied directly here.

Therefore, a ranking process is needed. The RFID measurement data are ranked from high values to low values.

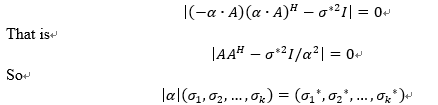
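
As a minimal sketch of the ranking step, assuming each reading is stored as a pair of heading angle and RSSI value (the pair layout, the function name and the sample values are hypothetical):

```python
def rank_rssi(readings):
    """Sort (angle_deg, rssi_dbm) readings from strongest to weakest RSSI."""
    return sorted(readings, key=lambda r: r[1], reverse=True)


# Illustrative readings only: (heading angle in degrees, RSSI in dBm)
readings = [(-30.0, -62.0), (0.0, -55.0), (30.0, -48.0), (60.0, -51.0)]
ranked = rank_rssi(readings)
best_angle, best_rssi = ranked[0]  # strongest reading comes first
```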

2.2. Log-normal shadowing model

This model relates RSSI to distance. The formula is shown below.

P = P0 + 10*N*log(d/d0) - ξ    (Eq. 2.2)

P0 and d0: reference power and reference distance.

N: path loss index (small room: 3.4).

ξ: normal random variable (caused by flat fading).
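
Eq. 2.2 can be inverted to estimate the distance from a measured value. The sketch below makes several assumptions that the text does not state: the logarithm is base 10, P and P0 are in dB and are treated as a loss that grows with distance (as the sign in Eq. 2.2 implies), and ξ is zero-mean so that it can be set to 0 for a point estimate. If the sensor reports received power that falls with distance, the sign of the distance term would need to be flipped. The function names and the reference value are hypothetical.

```python
import math


def path_loss(d, p0, d0=1.0, n=3.4, xi=0.0):
    """Eq. 2.2 as written: P = P0 + 10*N*log10(d/d0) - xi."""
    return p0 + 10.0 * n * math.log10(d / d0) - xi


def distance_from_loss(p, p0, d0=1.0, n=3.4, xi=0.0):
    """Invert Eq. 2.2 for d, with xi fixed (0 = its assumed mean)."""
    return d0 * 10.0 ** ((p - p0 + xi) / (10.0 * n))


p0 = 40.0                      # illustrative reference value at d0 = 1 m
p = path_loss(2.5, p0)         # forward model evaluated at 2.5 m
d = distance_from_loss(p, p0)  # inverse model recovers about 2.5 m
```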

The method, which consists of two steps, is briefly discussed below.

Step 1: measurement

The robot twists (Zhi Qiao, motion module) until it detects the maximum RSSI, and then turns back.

This action helps the robot obtain the initial angle θ0 between the robot and the tag.

The distance d1 can then be computed according to Eq. 2.2.

Thus, through ranking and applying trigonometric functions, the tag position X1 and Y1 can be calculated and optimised.

The optimisation method differs slightly from the particle filter, as will be discussed later.

Finally, the robot computes a prediction of the tag position likelihood (x2 and y2), that is, the probability of the tag position at the next step, along with the predicted angle θ1 through which the robot needs to turn next time.
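
The trigonometric part of this step could be sketched as below. The axis convention is an assumption, not something the text specifies: y points forward from the robot, x points to the right, and the angle is measured from the forward axis. The function names, the forward step length and the sample values are hypothetical, and the ranking-based optimisation is not included.

```python
import math


def tag_position(d, theta):
    """Tag (x, y) in the robot frame from range d and bearing theta (rad).

    Assumed convention: y points forward, x to the right,
    theta is measured from the forward axis, positive to the right.
    """
    return d * math.sin(theta), d * math.cos(theta)


def predict_after_forward_move(x, y, step):
    """Predicted tag position and bearing after driving `step` metres forward."""
    x2, y2 = x, y - step
    theta1 = math.atan2(x2, y2)  # bearing from the forward axis
    return x2, y2, theta1


# Illustrative values: tag at 2 m range, 30 degrees to the right
x1, y1 = tag_position(2.0, math.radians(30.0))
x2, y2, theta1 = predict_after_forward_move(x1, y1, 1.0)
```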

Step 2: matching

The robot moves forward by a distance of y1 (Zhi Qiao, motion module).

Then the robot twists (Zhi Qiao, motion module) by the predicted angle θ1, detects the RSSI, and turns back.

After computing the next-step tag position likelihood, a hypothesis test is held.

If the result is greater than the threshold, the map is re-sampled. Otherwise, the position X2, Y2 is published.

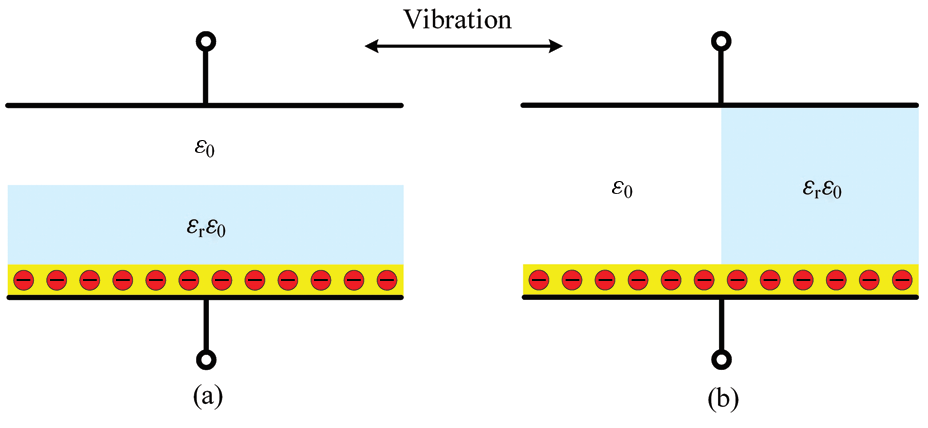

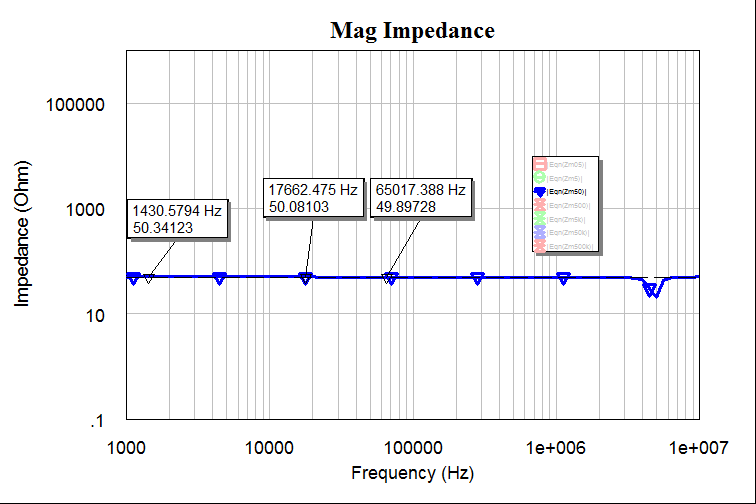

3. Data fusion

As shown above, data fusion also includes two steps, which are discussed below.

Matching data

Because the laser scanner generates a large amount of data, it is hard to tell which laser point represents the same point as the corresponding RFID reading.

Consequently, a matching process is needed.

In this project, all position data are stored in the form (X, Y).

Therefore, in the matching process, the difference between the RFID value Xrfid and the laser value Xlaser is compared with a threshold.

If the difference in X is greater than the threshold, the next laser point is checked.

If the difference in X is less than the threshold, the next step is to compare the difference in Y with the threshold.

After the data are matched, the final optimised position is computed.
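
The matching procedure described above could be sketched as follows. The text does not say what happens when the Y difference exceeds the threshold, so this sketch assumes that the next laser point is checked in that case as well. The function name, the threshold and the sample points are hypothetical.

```python
def match_laser_point(rfid_xy, laser_points, threshold):
    """Return the first laser point within `threshold` of the RFID point
    in both X and Y, or None if no point matches."""
    x_rfid, y_rfid = rfid_xy
    for x_laser, y_laser in laser_points:
        if abs(x_rfid - x_laser) >= threshold:
            continue  # X differs too much: check the next laser point
        if abs(y_rfid - y_laser) < threshold:
            return (x_laser, y_laser)
        # Y differs too much: assumed to move on to the next point too
    return None


# Illustrative data only, in metres
laser_points = [(0.10, 2.00), (1.02, 0.40), (1.03, 1.98)]
match = match_laser_point((1.00, 2.00), laser_points, threshold=0.05)
```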

Data fusing

The formula, as shown in the flow chart above, is:

x = sqrt(Xrfid^2 + Xlaser^2) / 2

y = sqrt(Yrfid^2 + Ylaser^2) / 2
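
A literal transcription of the fusing formula is sketched below. It assumes that the division by 2 is applied outside the square root, as the formula is written, since the flow chart itself cannot be checked here. The function name and the sample coordinates are hypothetical.

```python
import math


def fuse(rfid_xy, laser_xy):
    """Apply x = sqrt(Xrfid^2 + Xlaser^2) / 2, and likewise for y."""
    x = math.sqrt(rfid_xy[0] ** 2 + laser_xy[0] ** 2) / 2.0
    y = math.sqrt(rfid_xy[1] ** 2 + laser_xy[1] ** 2) / 2.0
    return x, y


# Illustrative matched pair: RFID estimate and laser estimate of one point
x, y = fuse((3.0, 6.0), (4.0, 8.0))
```

Because the inputs are squared, the result of this form is always non-negative.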

Map Building Algorithm

Results

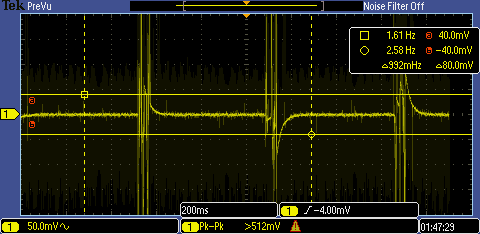

For the sensor module, the localization results are shown below.

Test environment:

Small room, path loss index: 3.4

The mapping result is shown below.

There are three rectangular objects in the environment, built using the four-tag algorithm.

The purpose of this test is to verify the logic of the algorithm and the tags' positions under different conditions (positive and negative values).

The lower-left rectangular object simulates a normal object for which the robot received accurate positions.

The two upper-right objects simulate two adjacent objects for which the corner tag positions received by the robot are not accurate.

Conclusion

In short range, the RFID error is 3 cm, the laser error is 5 cm, and the total error is at most 2.9 cm. Human influence affects the RFID sensor. The robot is able to determine which tags describe the same object in an unknown environment. The robot has already obtained a semantic database to describe the environment.

Work that needs to be done in the future:

1. Reducing human influences.

2. Cutting out the twist action.

3. Long-range RFID detection.

4. Real-time mapping.

5. Tag definition interface.

6. Linking the semantic database to the robot navigation package.

Team

Group Members

Zhihao Xu

Zhi Qiao

Supervisors

Said Al-Sarawi

Damith Ranasinghe

Brad Alexander

The aim of this project is to improve robot SLAM with RFID, laser and odometers.