Projects:2015s1-06 Performance Evaluation of KALDI Open Source Speech Recogniser


Introduction

Project Background

This honours project received project-based scholarship funding from DST. In addition to this Thesis, an evaluation report on the Kaldi toolkit, or a set of user instructions, shall be produced at the end of the project and assessed by DSTO, for future comparison with the recognition performance of other prevalent speech processing toolkits such as HTK, Sphinx and Julius.

Previous Work

This project continues the work of a summer project. The students involved in the summer project built ASR systems on their own laptops using limited sets of data, and their outcomes were delivered to us. We shall revise their work and integrate it into a Kaldi evaluation on a large volume of data.

Project Objectives

The principal goal of this research project is to evaluate how well the designated speech recogniser, Kaldi, decodes human speech. A speaker-independent system is built with the Kaldi toolkit, and the quality of the Kaldi transcription is reported as word error rate (WER%), measured against the human transcription supplied with each corpus.

A second purpose of this project is to understand the framework of Kaldi. The Thesis discusses the contribution of each main module and the interaction between them. Apart from the conventional recipes used in the evaluation phase, the Thesis invokes a DNN recipe provided by Kaldi in order to investigate the improvement offered by a DNN-based acoustic model.

Technical Background

The essential goal of speech recognition is to enable a machine to understand and transcribe human speech, ideally of any content, in any language or dialect, and uttered under any circumstances. Statistical methods, particularly probability theory, have been the main approach to speech recognition so far.

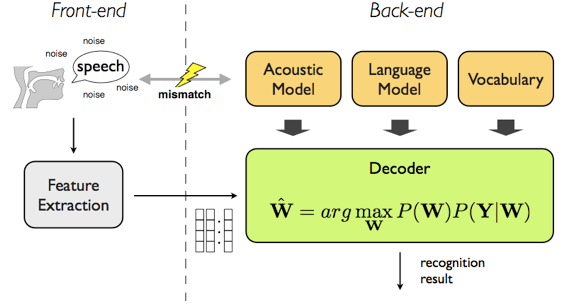

The equation included in Fig. 1 is the core of a speech recogniser and is derived from Equation 1. Let X denote the feature vectors derived from the acoustic data and W denote a string of words. The recogniser then picks the most likely string by

$$\tilde{W} = \arg\max_{W} P(W \mid X) \qquad (1)$$

where P(W|X) denotes the probability that W was uttered given that X is observed, and the argmax function returns the argument W that maximises the expression. Bayes' rule is then applied to rewrite the right-hand side of the formula,

$$P(W \mid X) = \frac{P(W)\,P(X \mid W)}{P(X)}$$

where P(W) represents the probability that the string W would be spoken, P(X) indicates the probability of observing the acoustic evidence X, and P(X|W) denotes the probability of observing the acoustic evidence X given that W is uttered.

The term P(X) can be dropped because it is a normaliser and independent of the string W [1]. The recogniser therefore determines the most likely content W̃ that matches the acoustic features X by

$$\tilde{W} = \arg\max_{W} P(W)\,P(X \mid W)$$
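
As a minimal sketch of this decision rule, the Python snippet below picks the word string that maximises log P(W) + log P(X|W). The candidate strings and their probabilities are made-up numbers for illustration only; in a real recogniser they come from the language model and the acoustic model.

```python
import math

def map_decode(candidates):
    """Return the word string W that maximises P(W) * P(X|W).

    `candidates` maps each hypothesis W to a pair (P(W), P(X|W)).
    All values used below are hypothetical.
    """
    def log_score(words):
        p_w, p_x_given_w = candidates[words]
        # Sum log probabilities instead of multiplying small numbers.
        return math.log(p_w) + math.log(p_x_given_w)
    return max(candidates, key=log_score)

# Hypothetical scores for a single utterance X.
hypotheses = {
    "recognise speech": (0.010, 0.30),
    "wreck a nice beach": (0.001, 0.40),
}
best = map_decode(hypotheses)
```

Here the second hypothesis fits the acoustics slightly better, but the language model prior makes the first one the overall winner.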

Feature Extraction

Feature extraction of speech is also referred to as speech parameterisation. Its objective is to analyse the audio signal over short intervals, within which the signal can be treated as statistically stationary, and to characterise the spectral features of the signal in preparation for speech decoding [2]. Feature extraction establishes a bridge between the audio information and the statistical models in ASR. Mel-frequency cepstral coefficients (MFCC) [3], perceptual linear prediction (PLP) [4] and linear predictive coding (LPC) [5] are the most widely used methods for extracting acoustic features.

The parameters derived from MFCC and PLP take the nature of speech into account, while LPC predicts future features from previous features. Since the human voice and the human auditory system are nonlinear in nature, LPC is not a good option for estimating acoustic features, on the evidence shown in [6]. MFCC and PLP are based on the concept of logarithmically spaced filters and hence perform better than LPC [7]. The Thesis chose MFCC because a recipe used at a later stage requires MFCC features [8].

MFCC in Kaldi

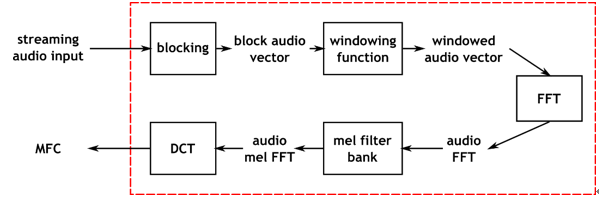

Figure 2 indicates key steps of MFCC computation in Kaldi. The steps can be succinctly described as follows [10].

- Take a 25 ms window of the signal, shifted by 10 ms each time
- For each window
  - Perform pre-emphasis and multiply by a Hamming window
  - Do a Fast Fourier Transform (FFT)
  - Compute the log energy in each mel frequency bin
  - Do a Discrete Cosine Transform (DCT) to get the cepstrum
  - Keep the first 13 coefficients from the cepstrum
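
The steps listed above can be sketched in NumPy as follows. This is a simplified illustration and not Kaldi's implementation: the number of mel bins (23), the pre-emphasis coefficient (0.97), the FFT size (512) and the mel-scale formula are assumptions chosen for the example, and the input is a synthetic tone rather than real speech.

```python
import numpy as np

def hz_to_mel(freq_hz):
    return 1127.0 * np.log(1.0 + freq_hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (np.exp(mel / 1127.0) - 1.0)

def mel_filterbank(num_bins, n_fft, sample_rate):
    """Triangular filters whose edges are equally spaced on the mel scale."""
    edges_hz = mel_to_hz(np.linspace(0.0, hz_to_mel(sample_rate / 2.0), num_bins + 2))
    fft_freqs = np.arange(n_fft // 2 + 1) * sample_rate / n_fft
    bank = np.zeros((num_bins, n_fft // 2 + 1))
    for i in range(num_bins):
        low, centre, high = edges_hz[i], edges_hz[i + 1], edges_hz[i + 2]
        rising = (fft_freqs - low) / (centre - low)
        falling = (high - fft_freqs) / (high - centre)
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def mfcc(signal, sample_rate=16000, frame_ms=25, shift_ms=10,
         num_bins=23, num_ceps=13, preemph=0.97, n_fft=512):
    signal = np.asarray(signal, dtype=float)
    frame_len = sample_rate * frame_ms // 1000   # 25 ms window
    shift = sample_rate * shift_ms // 1000       # 10 ms shift
    num_frames = 1 + (len(signal) - frame_len) // shift
    window = np.hamming(frame_len)
    bank = mel_filterbank(num_bins, n_fft, sample_rate)
    # DCT-II basis; only the first num_ceps rows are kept.
    dct = np.cos(np.pi * np.outer(np.arange(num_ceps),
                                  np.arange(num_bins) + 0.5) / num_bins)
    feats = np.empty((num_frames, num_ceps))
    for t in range(num_frames):
        frame = signal[t * shift:t * shift + frame_len]
        # Pre-emphasis, then Hamming window.
        frame = np.append(frame[0], frame[1:] - preemph * frame[:-1])
        # Power spectrum via FFT (zero-padded to n_fft points).
        power = np.abs(np.fft.rfft(frame * window, n_fft)) ** 2
        # Log energy in each mel bin, floored to avoid log(0).
        log_energy = np.log(np.maximum(bank @ power, 1e-10))
        # DCT gives the cepstrum; keep the first 13 coefficients.
        feats[t] = dct @ log_energy
    return feats

# One second of a synthetic 440 Hz tone sampled at 16 kHz.
time_axis = np.arange(16000) / 16000.0
features = mfcc(np.sin(2.0 * np.pi * 440.0 * time_axis))
```

With a 10 ms shift, each second of audio yields roughly one hundred 13-dimensional feature vectors.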

Statistical Methods: Language Model and Acoustic Model

The decoding performance of an ASR system depends largely on the language model and the acoustic model. The objective of language modelling is to estimate the probability of a word string in the current recognition task from a large volume of text. This step is critical for reducing the complexity of the word search in the decoder [11]. We first consider the N-gram model, which is the most popular language model and is built without modelling any deeper linguistic structure.
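
To make the N-gram idea concrete, here is a minimal sketch of an unsmoothed bigram model (N = 2) estimated by relative frequency. The three-sentence corpus is invented for illustration, and the `<s>` and `</s>` boundary symbols are an assumption of this example; practical toolkits such as SRILM add smoothing and back-off on top of such counts.

```python
from collections import Counter

def train_bigram(sentences):
    """Count histories and bigrams over whitespace-tokenised sentences."""
    history_counts, bigram_counts = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        history_counts.update(tokens[:-1])
        bigram_counts.update(zip(tokens[:-1], tokens[1:]))
    return history_counts, bigram_counts

def bigram_prob(history_counts, bigram_counts, history, word):
    """Maximum likelihood estimate of P(word | history), no smoothing."""
    if history_counts[history] == 0:
        return 0.0
    return bigram_counts[(history, word)] / history_counts[history]

# Invented toy corpus.
corpus = ["THE CAT SAT", "THE CAT RAN", "THE DOG SAT"]
history_counts, bigram_counts = train_bigram(corpus)
p_cat_given_the = bigram_prob(history_counts, bigram_counts, "THE", "CAT")
```

"THE" occurs three times as a history and is followed by "CAT" twice, so the estimate is 2/3.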

The objective of acoustic modelling is to describe words statistically in terms of acoustic phonemes. A prevalent method is the Gaussian Mixture Model-Hidden Markov Model (GMM-HMM). An HMM is based on a finite-state automaton whose transition probabilities between states are estimated, so that the probability of a word composed of distinct phones can be computed [12]. HMM states are typically organised into a monophone model and then a triphone model, and other methods, discussed shortly in the next section, are applied to further improve the modelling. A GMM models the output observations of the HMM states by mixtures of Gaussians. Decision tree clustering, applied when building the triphone model, lets several HMM states share the same Gaussian distribution.
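
As an illustration of how a GMM scores one feature vector for an HMM state, the sketch below evaluates the log-likelihood of a diagonal-covariance mixture. The diagonal-covariance form and all numbers are assumptions made for this example.

```python
import math

def log_gauss_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance."""
    return -0.5 * sum(math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))

def gmm_log_likelihood(x, weights, means, variances):
    """log sum_k w_k N(x; mean_k, var_k), computed stably in the log domain."""
    terms = [math.log(w) + log_gauss_diag(x, m, v)
             for w, m, v in zip(weights, means, variances)]
    top = max(terms)
    return top + math.log(sum(math.exp(t - top) for t in terms))

# Hypothetical two-component mixture over 2-dimensional features.
score = gmm_log_likelihood(
    x=[0.2, -0.1],
    weights=[0.6, 0.4],
    means=[[0.0, 0.0], [1.0, 1.0]],
    variances=[[1.0, 1.0], [0.5, 0.5]],
)
```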

An alternative way to train the LM and AM is to use neural network based models, which aim to perform estimation over large amounts of unknown inputs [13]. For the LM, [14] shows that recurrent neural network based language models (RNNLM) significantly reduce WER%. Meanwhile, acoustic models trained with the aid of deep neural networks (DNN) can outperform the conventional method, especially on large datasets and large vocabularies [13] [15].

Speaker Adaptive Training (SAT) and Linear Transform

For building a speaker-independent system, SAT is widely used to normalise the speaker contribution [16]. A maximum likelihood formulation is used to separate speaker-specific variation from phonetically relevant variation. The idea is to reduce the speaker-specific variance so that context-dependent variation is represented more accurately [16].

SAT is usually built on linear transforms. The speaker-specific characteristics are captured by the Maximum Likelihood Linear Regression (MLLR) method, which finds the linear transformation that maximises the likelihood of the adaptation data. In modern ASR systems, MLLR is routinely applied together with Linear Discriminant Analysis (LDA), which is widely used to reduce the dimension of the features.

How does DNN outperform GMM-HMM

A DNN is a feed-forward neural network that has more than one layer of hidden units between its inputs and its outputs [17]. Each hidden unit typically maps its total input from the layer below to a scalar state that it passes to the layer above [17]. A DNN is comparatively better suited to exploiting information spread over a large window of frames [17]. It has the potential to model more accurately data that lie on or near a nonlinear distribution. Researchers have achieved success using DNNs with multiple hidden layers to predict HMM states from MFCC representations of acoustic features [17].

DNNs offer several clear advantages over GMMs. The most important one is that “DNN use far more of data to constrain each parameter because the output on each training case is sensitive to a large fraction of the weights” [18]. Another is that a DNN can estimate the posterior probabilities of HMM states without detailed assumptions about the data distribution [19].

In our proposed hybrid acoustic model, a DNN is trained to output posterior probabilities over context-dependent hidden Markov model (HMM) states. From the papers reviewed [17], a learning algorithm based on the restricted Boltzmann machine (RBM) was considered for this job. Kaldi implements this algorithm as part of DNN training.
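
The way a hybrid system uses these posteriors can be sketched as follows. A softmax output layer gives P(state | x), and dividing by the state prior yields a scaled likelihood that can replace the GMM score in the HMM, as in the hybrid approach of [13]. The logits and priors below are invented, and whether the recipe used in this project applies exactly this form is an assumption of the sketch.

```python
import math

def softmax(logits):
    """Convert output-layer activations to posterior probabilities."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_log_likelihoods(logits, state_priors):
    """log P(state | x) - log P(state), proportional to log p(x | state)."""
    posteriors = softmax(logits)
    return [math.log(post) - math.log(prior)
            for post, prior in zip(posteriors, state_priors)]

# Hypothetical activations for three HMM states and their priors.
logits = [2.0, 0.5, -1.0]
priors = [0.5, 0.3, 0.2]
pseudo_loglikes = scaled_log_likelihoods(logits, priors)
```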

About Kaldi toolkit

Kaldi is an open-source toolkit developed for ASR research. It was originally derived from HTK in 2009 and is licensed under Apache 2.0, which encourages further development [20]. The Kaldi toolkit is written in C++ and is preferably compiled in a Unix-like environment; the Windows build has not been regularly tested, so some recent updates cannot be compiled correctly on Windows [21].

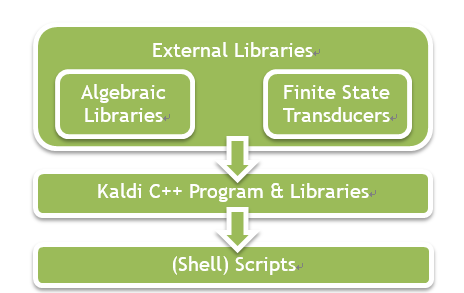

Figure 3 shows the relation between Kaldi itself and other entities. Apart from the Kaldi C++ executables, the toolkit requires support from external libraries. One provides the finite-state transducer (FST) framework, while the other provides numerical algebra support for the necessary linear algebra operations on vectors and matrices [20].

A finite-state transducer defines paths that map an input to an output. A weight, encoding probabilities in our case, can additionally be placed on each transition, which forms a weighted finite-state transducer (WFST) [22]. The weights along a path are accumulated into an overall weight for the mapping from an input sequence to an output sequence [22]. Kaldi uses a free library named OpenFST to build its FST-based framework, representing the LM, AM, lexicon and overall decoding graph as FSTs, although Kaldi does implement some new algorithms on top of the OpenFST library, e.g. converting an LM of ARPA format 6.3 into FSTs [20].
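
A toy weighted transducer in plain Python may help to picture this. It does not use the OpenFST API; the arcs, labels and weights are invented, weights are treated as costs that add up along a path, and `<eps>` marks an arc that emits no output symbol (all assumptions of the sketch).

```python
def best_transduction(arcs, start, finals, inputs):
    """Map an input sequence to its lowest-cost output sequence.

    Each arc is (source, input_label, output_label, cost, destination).
    """
    hyps = {start: (0.0, [])}  # state -> (accumulated cost, outputs so far)
    for symbol in inputs:
        new_hyps = {}
        for state, (cost, outputs) in hyps.items():
            for src, ilabel, olabel, weight, dst in arcs:
                if src == state and ilabel == symbol:
                    cand = (cost + weight, outputs + [olabel])
                    if dst not in new_hyps or cand[0] < new_hyps[dst][0]:
                        new_hyps[dst] = cand
        hyps = new_hyps
    complete = [h for state, h in hyps.items() if state in finals]
    if not complete:
        return None
    cost, outputs = min(complete, key=lambda h: h[0])
    return cost, [o for o in outputs if o != "<eps>"]

# Hypothetical lexicon-like transducer from phones to words.
arcs = [
    (0, "k", "CAT", 0.4, 1),
    (0, "k", "CUT", 1.2, 2),
    (1, "ae", "<eps>", 0.1, 3),
    (2, "ah", "<eps>", 0.1, 3),
    (3, "t", "<eps>", 0.0, 4),
]
result = best_transduction(arcs, start=0, finals={4}, inputs=["k", "ae", "t"])
```

For the phone sequence k ae t, only the path through state 1 survives, so the output is the word CAT with accumulated cost 0.5.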

For algebra support, the Thesis selected ATLAS (Automatically Tuned Linear Algebra Software), as it is the option most recommended by the Kaldi development team and can be installed as part of the essential Kaldi installation. ATLAS offers an implementation of BLAS (Basic Linear Algebra Subprograms) and a subset of LAPACK (Linear Algebra PACKage), which are two of the most prevalent linear algebra tools. In addition, Kaldi implements some algebraic routines that are beyond the scope of ATLAS [23].

Scripting languages such as Bash, Python and Perl are used to drive the Kaldi executables, and standardised scripts are offered as part of each recipe. Thus, to conduct a thorough ASR test with the Kaldi toolkit, three components must be present: the external libraries, the Kaldi toolkit itself and the command-line tools.

Comparison between Kaldi and other competitors

Three other notable open source ASR systems were mentioned in the Introduction chapter: HTK, CMU Sphinx and Julius. Kaldi inherited some functionality from HTK, such as feature generation and GMM building [20], and is faster than HTK in both training and decoding.

In terms of functionality, Kaldi clearly surpasses Julius, since Julius is only a decoder. However, Julius offers appealing speed-up techniques for large-vocabulary dictation with dictionaries of up to 60k words [24].

Sphinx has historically been released in four versions, of which the latest is Sphinx-4. Sphinx also has a derivative product, PocketSphinx, which provides a mobile solution, whereas Kaldi has no counterpart with comparable success. Because Kaldi is written in C++ rather than Java as Sphinx is, it has a much longer compile time and much larger binaries. What makes Kaldi stand out is its capability for DNN-based training, while Sphinx does not have this approach and has no plan to implement it in upcoming releases [25].

Generally speaking, its implementation of various state-of-the-art AM training algorithms and its DNN support are arguably the two most competitive advantages of Kaldi. A flexible FST framework is another bonus. In terms of decoding capability, Kaldi significantly outperformed the other three toolkits in the comparative analysis of [26]. In addition, the Kaldi development team used to host an online forum on SourceForge and has now moved to Google Groups, consistently maintaining a thriving community and solid support for ASR researchers. Also, 35 Kaldi projects currently share their recipes and outcomes under the Kaldi root repository on GitHub, providing abundant examples of system building and scripting.

System Overview

Experiment

Data Preparation

Corpora

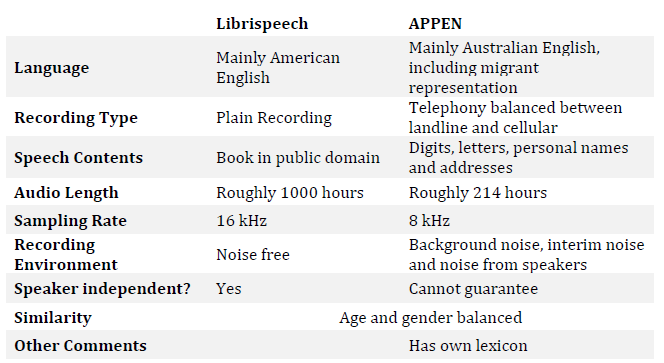

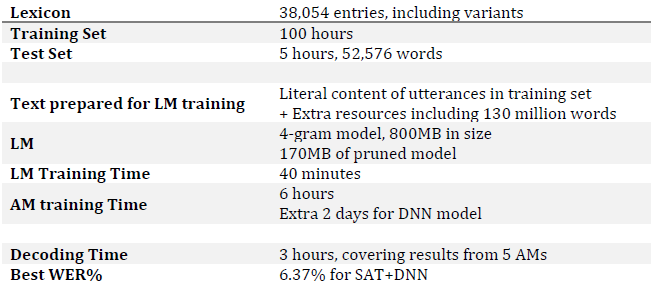

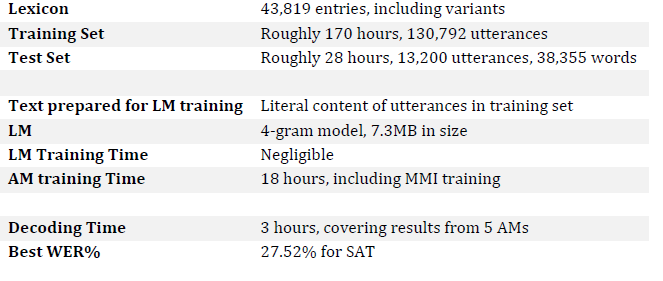

The evaluation phase of the Thesis employed two distinct linguistic corpora: Librispeech and APPEN. Librispeech is an open source corpus of book readings recorded in quiet conditions, while the alternative corpus, APPEN, contains short telephone recordings made in a SALA environment. The table below compares the two corpora in terms of acoustic features and recording information.

The 1000-hour collection of book readings in Librispeech is sourced from Project Gutenberg and is split into a training set and a test set with no speaker overlap between the two. The Librispeech corpus was established and is maintained by the Kaldi community, so its files are produced and organised in a way that suits the arguments of Kaldi functions.

In this Thesis, APPEN in fact refers to two products from the APPEN catalogue. APPEN is a company specialising in speech and search technology services, which produces and maintains extensive linguistic databases covering various languages and dialects. The corpus delivered to us consists of two products identified as AUS_ASR001 and AUS_ASR002. Unfortunately, speaker independence between the two sets cannot be guaranteed because speaker information is lacking. However, an APPEN employee explained by email that any overlap, if it exists, would not be significant. The Thesis therefore still considers APPEN a suitable database for a speaker-independent test.

Separate Training Set and Test Set

The Thesis targets Kaldi's decoding capability as a speaker-independent system rather than a speaker-dependent one. The two kinds of system can be distinguished by their names. A speaker-independent system contains no acoustic features from the speakers whose speech will be passed into the ASR system and transcribed, whereas a speaker-dependent system decodes utterances spoken by the same people who trained the acoustic model. A speaker-dependent system is tailored for personal use, although it responds accurately only to the speaker who trained it. By comparison, building a speaker-independent ASR system consumes more computational power and training time, but speaker-independent solutions are now more widely adopted in commercial products such as server-based ASR.

In order to conduct a speaker-independent test, the recordings were split into a training set and a test set, with no speaker contributing to both. For Librispeech this was straightforward thanks to the clear organisation of the audio files. Three groups of data are dedicated to training, train-clean-100, train-clean-360 and train-other-500, where the integer indicates the approximate duration of the audio in hours; another two datasets hold the test data, test-clean and test-other, each of which lasts about 5 hours.

As the APPEN corpora have no inherent separation, scripts were written to separate the training set from the test set. It was not necessary to create two directories holding the respective datasets. The alternative approach used in the Thesis was to finalise the two datasets while processing the utterance information, which is discussed in the next section.
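
A speaker-disjoint split of this kind can be sketched as below. The scripts actually written for APPEN are not reproduced here, so this is only an illustration: it assumes that an utterance-to-speaker mapping is available, and the identifiers, the test fraction and the random seed are all hypothetical.

```python
import random

def split_by_speaker(utt2spk, test_fraction=0.1, seed=0):
    """Split utterances so that no speaker appears in both sets."""
    speakers = sorted(set(utt2spk.values()))
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(speakers)
    num_test = max(1, int(len(speakers) * test_fraction))
    test_speakers = set(speakers[:num_test])
    train = {u: s for u, s in utt2spk.items() if s not in test_speakers}
    test = {u: s for u, s in utt2spk.items() if s in test_speakers}
    return train, test

# Hypothetical utterance and speaker identifiers.
utt2spk = {
    "spk1-utt1": "spk1", "spk1-utt2": "spk1",
    "spk2-utt1": "spk2", "spk3-utt1": "spk3", "spk4-utt1": "spk4",
}
train_set, test_set = split_by_speaker(utt2spk, test_fraction=0.25)
```

Splitting at the speaker level, rather than at the utterance level, is what keeps the test speaker-independent.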

Prepare utterance information

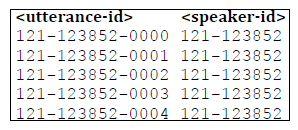

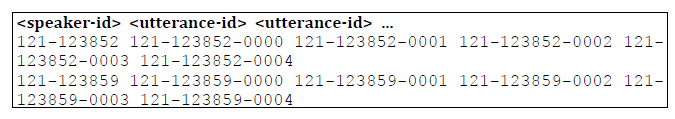

Apart from the linguistic corpus, users are expected to provide three text files that cover the key information about the utterances. These three files are named utt2spk, wav.scp and text. utt2spk maintains a list of correspondences between utterances and speakers. Take one file prepared for the Librispeech test as an example.

The twin document spk2utt can then be produced with the aid of the Kaldi script utils/utt2spk_to_spk2utt.pl.
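
The conversion itself is simple enough to sketch in Python. This mirrors the idea of the Perl script rather than reproducing it, and it assumes that each utt2spk line holds an utterance ID followed by a speaker ID; the IDs below are hypothetical.

```python
from collections import defaultdict

def utt2spk_to_spk2utt(lines):
    """Turn 'utterance speaker' lines into 'speaker utt1 utt2 ...' lines."""
    spk2utt = defaultdict(list)
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        utterance, speaker = line.split()
        spk2utt[speaker].append(utterance)
    return [f"{speaker} {' '.join(utts)}"
            for speaker, utts in sorted(spk2utt.items())]

# Hypothetical utt2spk content.
utt2spk_lines = [
    "spk1-utt1 spk1",
    "spk1-utt2 spk1",
    "spk2-utt1 spk2",
]
spk2utt_lines = utt2spk_to_spk2utt(utt2spk_lines)
```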

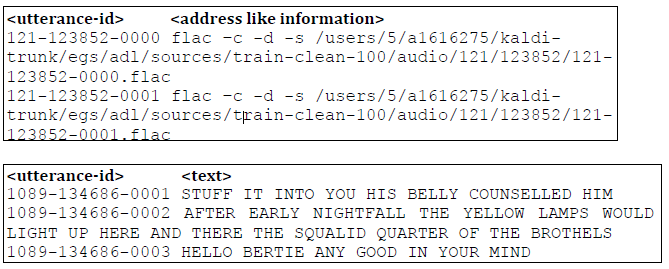

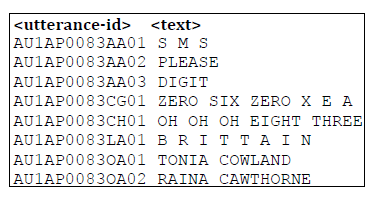

wav.scp specifies the local path of each utterance, or sometimes a command that reads in the utterance, as in the example below. text lists the transcript of each utterance.

Another text example, from APPEN.

Both the training dataset and the test dataset should provide all four files as input arguments to later stages. Thus, for APPEN, the scripts written for file preparation effectively separated the training set from the test set.

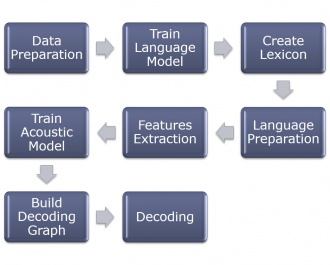

Create Lexicon

Since APPEN offers its own lexicon, the Thesis only paid attention to the lexicon component of Librispeech. CMUdict was selected as the base phonetic dictionary. CMUdict is an open-source pronunciation dictionary created and maintained by Carnegie Mellon University.

Scripts were written to find the corresponding pronunciation in the dictionary for each word that was uttered. After the first pass, roughly eight hundred out-of-vocabulary (OOV) words were filtered out. The online LOGIOS tool, also designed by CMU, was then employed to generate phonetic pronunciations for those OOV words. The Thesis chose LOGIOS for the sake of consistency between this tool and CMUdict.
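
The first pass described above amounts to a dictionary lookup. The following sketch assumes a CMUdict-style text format (a word followed by its phones on each line); the two dictionary entries and the transcript are only illustrative, and the project's own scripts are not reproduced here.

```python
def load_lexicon(lines):
    """Parse 'WORD PH1 PH2 ...' lines into word -> list of pronunciations."""
    lexicon = {}
    for line in lines:
        parts = line.split()
        if len(parts) >= 2:
            lexicon.setdefault(parts[0], []).append(parts[1:])
    return lexicon

def find_oov(words, lexicon):
    """Return the sorted set of words that have no pronunciation."""
    return sorted(set(words) - set(lexicon))

# Illustrative dictionary entries and transcript.
dictionary_lines = ["HELLO HH AH0 L OW1", "WORLD W ER1 L D"]
lexicon = load_lexicon(dictionary_lines)
oov_words = find_oov("HELLO KALDI WORLD".split(), lexicon)
```

The resulting OOV list is what would then be passed to a grapheme-to-phoneme tool.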

Language Model Training

Prepare Tokens

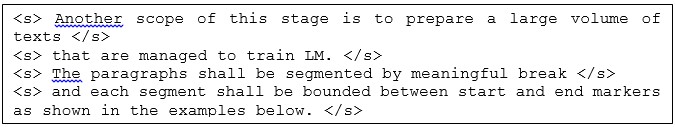

Because the words within an APPEN text are only weakly related, APPEN did not benefit from the language model, and the Thesis did not need to find extra resources to expand it. Instead, only the text in the training dataset was used as the source for training the language model. For the Librispeech test, in addition to the text in the training set, a large pool of plain text was prepared for the LM. The plain text covered 14,500 public domain books from Project Gutenberg with roughly 130 million words, rearranged by the Kaldi community. Before feeding the text into the LM toolkit, it was necessary to ensure that the content was represented only in capital letters, as required by SRILM. In order to make good use of the text, scripts were written to segment paragraphs at meaningful breaks, and each segment was bounded by a start marker and an end marker, as shown in the examples below.
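
A minimal sketch of this kind of text preparation is given below. It assumes that a "meaningful break" is sentence-final punctuation and that the markers are `<s>` and `</s>`; both are assumptions of this example, since the project's own scripts and marker choice are not reproduced here.

```python
import re

def prepare_lm_text(paragraph):
    """Split a paragraph into upper-case segments wrapped in boundary markers."""
    sentences = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    lines = []
    for sentence in sentences:
        # Keep only letters and apostrophes, in capital letters.
        words = re.findall(r"[A-Z']+", sentence.upper())
        if words:
            lines.append("<s> " + " ".join(words) + " </s>")
    return lines

lm_lines = prepare_lm_text("It was late. Nobody came!")
```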

Scripting and Arguments

As the scripts above show, only one argument was needed: the pre-processed text ready for LM training. The first function called by the command was the main program that builds the N-gram LM, with N set to 4. However, in the case of Librispeech, the argument lm.txt was 800 MB in size, which was large enough to exhaust the RAM in later stages. Appendix B attaches a screenshot of the operating system status when the RAM was almost fully occupied during the decoding phase. This was caused by the large LM and put the ASR run at high risk of forced termination. The Thesis therefore called another function to prune the large LM to a smaller size.

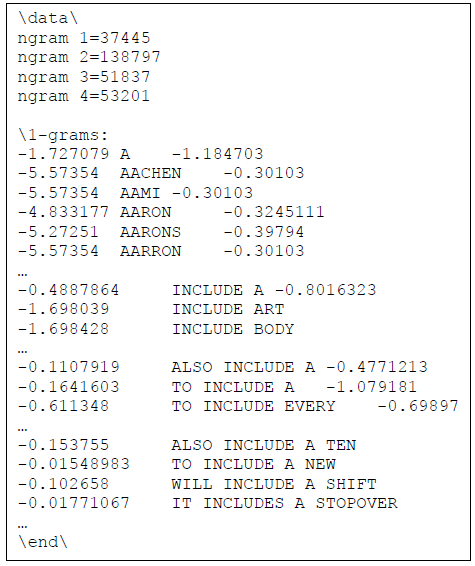

LM Format

Take the LM of the APPEN test as an example. The file attached below shows the ARPA format of the language model that SRILM produces. The file starts with the number of stored entries for each N-gram order. It then lists every entry, with its probability in log base 10 placed in front of the words. For an entry that is a prefix of higher order N-grams, a back-off weight, also in log base 10, is placed after the words [28].
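
How the stored probabilities and back-off weights are combined can be shown with a small bigram example. The table values below are hypothetical log10 numbers, not taken from the APPEN model.

```python
def bigram_log10_prob(unigrams, bigrams, history, word):
    """Look up log10 P(word | history) with back-off to the unigram.

    unigrams: word -> (log10 probability, log10 back-off weight)
    bigrams:  (history, word) -> log10 probability
    """
    if (history, word) in bigrams:
        return bigrams[(history, word)]
    log_prob, _ = unigrams[word]
    # An entry without a stored back-off weight uses log10(1) = 0.
    _, backoff = unigrams.get(history, (0.0, 0.0))
    return backoff + log_prob

# Hypothetical entries in the style of an ARPA file.
unigrams = {"A": (-1.0, -0.5), "B": (-2.0, 0.0)}
bigrams = {("A", "A"): -0.3}
seen = bigram_log10_prob(unigrams, bigrams, "A", "A")
backed_off = bigram_log10_prob(unigrams, bigrams, "A", "B")
```

The bigram "A B" is not stored, so its score is the back-off weight of "A" plus the unigram log probability of "B".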

Acoustic Model Training

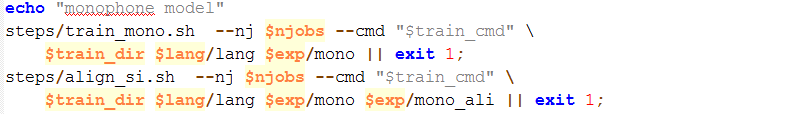

Scripting and Arguments

Take the monophone model as an example. The script pasted above is the top-level script for AM training. Kaldi takes two arguments into the first pass of acoustic modelling: the utterance-related files stored in $train_dir, and the FST framework and phone topology in $lang/lang. After each pass of AM training, alignment is required to map the transition probabilities in the AM to the acoustic features. The transition-ids are saved in $exp/mono_ali/ali.*gz.

Train Acoustic Model

Kaldi training uses the Viterbi beam search algorithm to obtain the HMM state sequence for AM training. Beam search is a heuristic algorithm that identifies and follows the most promising paths through the most likely states in the search tree [29]. Its goal is to predict the complete solution from partial solutions, retaining only a limited number of the best states at each level of the tree; this limit is defined as the beam width [29]. It is therefore necessary to trim the audio files into short segments and to maintain a reasonably large beam width, so that less information is pruned by the beam search and a more accurate acoustic model is trained. The beam width parameter shall be determined by trial and error, starting with a reasonable value such as the Kaldi default of 20 and increasing it if necessary. The training pipelines for the two corpora differed and are discussed in detail as follows.
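
The effect of the beam can be illustrated with a toy Viterbi search over a two-state HMM. This is a simplified sketch, not Kaldi's decoder: it assumes a beam defined as a log-score margin below the best active state, and all probabilities are invented.

```python
import math

def viterbi_beam(obs_loglikes, log_trans, log_init, beam):
    """Best state path under beam pruning.

    obs_loglikes[t][s]: log p(x_t | s); log_trans[i][j]: log P(j | i).
    States scoring more than `beam` below the frame's best are dropped.
    """
    num_states = len(log_init)
    active = {s: (log_init[s] + obs_loglikes[0][s], [s])
              for s in range(num_states) if log_init[s] > -math.inf}
    for frame in obs_loglikes[1:]:
        best = max(score for score, _ in active.values())
        active = {s: h for s, h in active.items() if h[0] >= best - beam}
        expanded = {}
        for prev, (score, path) in active.items():
            for s in range(num_states):
                if log_trans[prev][s] == -math.inf:
                    continue
                cand = score + log_trans[prev][s] + frame[s]
                if s not in expanded or cand > expanded[s][0]:
                    expanded[s] = (cand, path + [s])
        active = expanded
    return max(active.values(), key=lambda h: h[0])

# Invented left-to-right HMM with two states and three frames.
NEG_INF = -math.inf
log_init = [0.0, NEG_INF]
log_trans = [[math.log(0.5), math.log(0.5)], [NEG_INF, 0.0]]
obs = [[math.log(0.9), math.log(0.1)],
       [math.log(0.8), math.log(0.2)],
       [math.log(0.1), math.log(0.9)]]
wide_score, wide_path = viterbi_beam(obs, log_trans, log_init, beam=10.0)
narrow_score, narrow_path = viterbi_beam(obs, log_trans, log_init, beam=1.0)
```

In this example the narrow beam prunes one state at the last frame without changing the result; with a beam that is too small, the correct path itself can be pruned, which is why the width is tuned by trial and error.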

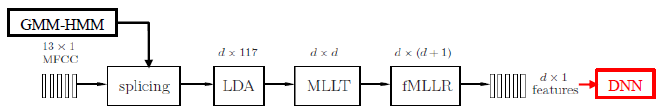

Librispeech

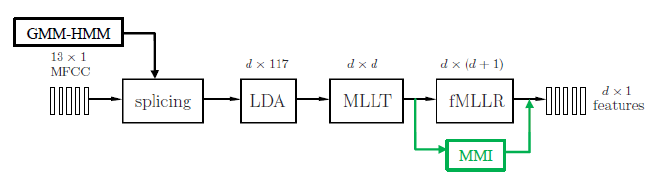

Figure 5 illustrates the pipeline, which includes the conventional models for AM training. The dimension d was set to 40 in the conventional model. After the monophone pass and the triphone pass, a GMM-HMM based AM was available for further enhancement. The baseline contained several stages of transforms. The first step was splicing: the 13-dimensional front-end features were spliced across ±4 frames to produce 117-dimensional vectors. LDA was then used to reduce the dimension to d. The HMM states aligned in the triphone model were used for the LDA estimation [8]. MLLT was then applied as a feature transform that further de-correlates the features so that they are modelled more accurately by Gaussians [8]. The last step was to perform SAT, which normalises speaker variation by means of feature-space Maximum Likelihood Linear Regression (fMLLR) [8].

In addition, a hybrid DNN-HMM recogniser was built to extend the baseline solution. The DNN model presented in the Thesis was set up on top of the SAT model, which means that its inputs were the standardised 40-dimensional adapted features generated by the baseline. The DNN then outputs log posteriors corresponding to the HMM states of the triphone model [8]. Even with a basic experimental set-up, it took two days to train the DNN model and obtain the transcription result.
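
The splicing step can be sketched in NumPy as follows: each frame is concatenated with its four left and four right neighbours, so 13 dimensions become 9 × 13 = 117. Repeating the first and last frames at the utterance edges is an assumption of this sketch, and the input features are synthetic.

```python
import numpy as np

def splice_frames(feats, context=4):
    """Concatenate each frame with `context` neighbours on either side."""
    num_frames = feats.shape[0]
    # Pad by repeating the edge frames so every frame has full context.
    padded = np.concatenate([
        np.repeat(feats[:1], context, axis=0),
        feats,
        np.repeat(feats[-1:], context, axis=0),
    ])
    return np.hstack([padded[i:i + num_frames]
                      for i in range(2 * context + 1)])

# Synthetic 13-dimensional features for 10 frames.
features = np.arange(10 * 13, dtype=float).reshape(10, 13)
spliced = splice_frames(features)
```

The spliced 117-dimensional vectors are what LDA would then project down to d = 40.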

APPEN

The APPEN test followed the same baseline as the Librispeech experiment. What distinguished the two tests was the add-on method. The APPEN test introduced discriminative training, comprising the original form of Maximum Mutual Information (MMI) and its modified form, boosted MMI (bMMI), developed by the Kaldi principal developer Daniel Povey [30]. Discriminative training improves the AM by creating an objective function from a set of observations and maximising the likelihood of the observations given the transcription [31]. The MMI objective function applies the same theory. bMMI boosts the probabilities of the utterances carrying more errors so that correct hypotheses can be isolated [30]. The Thesis chose MMI as an alternative method because the WER of transcriptions decoded by AMs trained with MMI was significantly improved in other Kaldi projects. In particular, in one project dealing with telephony, WER% was reduced by roughly 30% relative to the LDA+MLLT system, i.e. from about 15% to about 10% [32]. It was therefore decided to try this algorithm in the APPEN test.

Figure 6 AM training pipeline for APPEN [8]

Decoding

Build Decoding Graph

A similar FST framework, which plays the role of the Kaldi grammar, was used in both the training phase and the test phase. Appendix C illustrates how Kaldi produces a transcription from acoustic features according to its grammar. Generally there are four layers in the decoding graph, and the graph is constructed as the composition of four components, HCLG = H ◦ C ◦ L ◦ G [33].

- H – mapping from HMM transitions to context-dependent labels

- C – mapping from context-dependent labels to phones

- L – mapping from phones to words, i.e. lexicon

- G – Language model, i.e. both inputs and outputs are words

Decoding and Lattice Rescoring

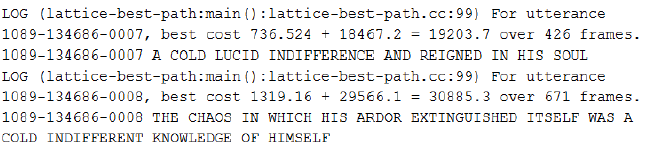

Since the language model was pruned because of limited RAM, we had to find a way of making up for the loss in modelling accuracy caused by the pruning. Kaldi provides a method called lattice rescoring for this purpose. A lattice is defined as a representation of alternative transcriptions of an utterance, essentially a statistical representation with timing information [34]. Figure 7 shows a file containing the decoding result and lattices.

Figure 7 Transcription and Lattices


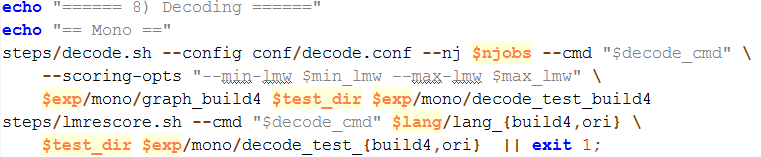

The scripts pasted below show a decoding pattern that comprises the actual decoding and the rescoring. Consider the command line that calls the Kaldi decoder. Two arguments are required: the decoding graph for the monophone model, $exp/mono/graph_build4, and the utterance-related files of the test dataset, $test_dir. The range of language model weights considered by the decoder is set by the argument --scoring-opts, usually from 9 to 20. Run-time parallelisation is defined in the command file $decode_cmd, and the number of parallel jobs was set to the number of CPU cores, njobs=$(nproc). Decoding results, including the transcription and the WER% scoring, are saved under the directory $exp/mono/decode_test_build4.

LM rescoring takes both the original LM and the pruned LM as arguments. During the decoding phase, only the pruned LM score is included in the final score used to pick the most likely sequence for each test token. Rescoring combines the AM scores with the original LM scores to derive a new score, possibly resulting in a different transcription [34].
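
The idea can be illustrated on a list of competing hypotheses. Kaldi rescoring operates on lattices rather than on such a list, so this is a deliberate simplification; the hypotheses, log scores and LM weight below are all invented.

```python
def pick_best(hypotheses, lm_weight, lm_key):
    """Return the words with the highest AM score + weighted LM score."""
    best = max(hypotheses, key=lambda h: h["am"] + lm_weight * h[lm_key])
    return best["words"]

# Invented log scores: "pruned_lm" is used in the first pass,
# "full_lm" replaces it during rescoring.
hypotheses = [
    {"words": "A B", "am": -100.0, "pruned_lm": -10.0, "full_lm": -14.0},
    {"words": "A C", "am": -101.0, "pruned_lm": -10.05, "full_lm": -9.0},
]
first_pass = pick_best(hypotheses, lm_weight=10.0, lm_key="pruned_lm")
rescored = pick_best(hypotheses, lm_weight=10.0, lm_key="full_lm")
```

The acoustic scores are unchanged; only the LM contribution is swapped, which is enough here to change the winning transcription.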

Result

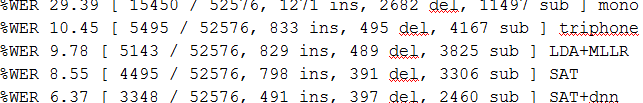

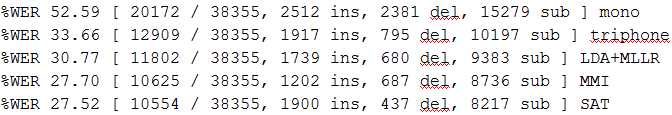

Figure 8 and Figure 9 assess the decoding performance in terms of WER%. Each error falls into one of three categories: insertion error (ins), deletion error (del) or substitution error (sub).
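
For reference, WER% and the three error counts can be computed with a word-level edit distance, as in the sketch below. The sentences are invented, and the tie-breaking between equally cheap alignments is an implementation choice of this sketch that may differ from Kaldi's scoring tools.

```python
def wer_counts(reference, hypothesis):
    """Word error rate with insertion, deletion and substitution counts."""
    ref, hyp = reference.split(), hypothesis.split()
    # cost[i][j] = (errors, ins, del, sub) aligning ref[:i] with hyp[:j]
    cost = [[None] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    cost[0][0] = (0, 0, 0, 0)
    for i in range(1, len(ref) + 1):
        cost[i][0] = (i, 0, i, 0)      # delete every reference word
    for j in range(1, len(hyp) + 1):
        cost[0][j] = (j, j, 0, 0)      # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            e, n_ins, n_del, n_sub = cost[i - 1][j - 1]
            if ref[i - 1] == hyp[j - 1]:
                diagonal = (e, n_ins, n_del, n_sub)
            else:
                diagonal = (e + 1, n_ins, n_del, n_sub + 1)
            e, n_ins, n_del, n_sub = cost[i][j - 1]
            insertion = (e + 1, n_ins + 1, n_del, n_sub)
            e, n_ins, n_del, n_sub = cost[i - 1][j]
            deletion = (e + 1, n_ins, n_del + 1, n_sub)
            cost[i][j] = min(diagonal, insertion, deletion)
    errors, n_ins, n_del, n_sub = cost[len(ref)][len(hyp)]
    return {"wer": 100.0 * errors / len(ref),
            "ins": n_ins, "del": n_del, "sub": n_sub}

result = wer_counts("THE CAT SAT ON THE MAT", "THE CAT SIT ON MAT")
```

WER% is the total number of errors divided by the number of reference words, so it can exceed 100% when the hypothesis contains many insertions.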

Compared with the result published by the Librispeech team [35], the Thesis reports a lower %WER when decoding with the SAT AM and the DNN+SAT AM. The slight variation is likely due to the larger amount of training data used during the first three passes (mono, triphone and LDA+MLLT), whereas the Librispeech team used only a tenth of the 100-hour data to train the initial GMM-HMM and aligned more data at each subsequent stage, up to the full set when training the SAT model. Another reason for the difference could be the different values generated by random functions in the system code. Thus, the Thesis does not regard the variation in %WER as an improvement. The main reason for employing the Librispeech corpus as an experiment database was to reproduce the published result so as to familiarise ourselves with ASR techniques and the Kaldi architecture. We have accomplished this task as proposed.

As for the APPEN test, since the utterances have neither strong linguistic connections nor phonetically rich sentences, APPEN hardly benefited from the language model and the %WER was expected to be higher than the Librispeech result. MMI performed well but still could not compete with the SAT model.

To sum up, Table 2 and Table 3 provide details of both tests, followed by a comparison between the original text and the transcription from the Kaldi toolkit.

Conclusion

The project conducted research on automatic speech recognition (ASR), building its own research system with the Kaldi open source speech recognition toolkit. By configuring and using the Kaldi toolkit, we prepared our own lexicon components and trained our own language models and acoustic models so as to build a complete ASR system, and then evaluated the performance of Kaldi as a speaker-independent speech recogniser. Kaldi performs well on clean recordings, but decoding noisy audio files remains challenging.

Team

Supervisors

- Dr Said Al-Sarawi

- Dr Ahmad Hashemi-Sakhtsari (Defence Science and Technology Organisation)

- A/Prof Mark McDonnell (University of South Australia)

Students

- Wei Gao

- Xujie Gao

Resources

Hardware Environment

One dual-processor HP Z820 Workstation was provided by the sponsor, configured with 24 processing cores, 64 GiB plus 60 GiB of RAM and a 200 GiB HD. This fast machine enables us to run Kaldi executables in several parallel pipelines so as to reduce the total running time.

Software Environment

A Linux distribution, Kubuntu 14.04, has been installed on the dedicated computer mentioned above.

Before configuring Kaldi on the local machine, it is necessary to ensure that at least the Linux packages listed below are installed, as they might not be included among the default packages of a Linux installation.

git

gcc

wget

gawk

automake

libtool

autoconf

patch

awk

grep

bzip2

gzip

zlib1g-dev

libiconv

Install Kaldi

The latest version of the Kaldi toolkit can be downloaded from its GitHub repository. The installation instructions are saved in the text file INSTALL in the Kaldi root directory. In order to run the Kaldi scripts properly, the default shell of the local Linux system should be linked to the Bash shell. It is recommended to run sudo ln -s -f bash /bin/sh [27] prior to the Kaldi installation. Also, CPU throttling shall be disabled for the ATLAS installation.

References

[1] F. Jelinek, “A Mathematical Formulation,” in Statistical Methods for Speech Recognition, MIT Press, 1997, pp. 4-5.

[2] L. R. Rabiner and B. H. Juang, “Speech Recognition: Statistical Methods,” 2006. [Online]. Available: http://courses.cs.tamu.edu/rgutier/cpsc689_s07/rabinerJuang2006statisticalASRoverview.pdf. [Accessed 11 April 2015].

[3] S. Davis and P. Mermelstein, “Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences,” IEEE Transactions on Acoustics, Speech and Signal Processing, vol. 28, no. 4, pp. 357-366, 1980.

[4] H. Hermansky, “Perceptual linear predictive (PLP) analysis of speech,” The Journal of the Acoustical Society of America, vol. 87, 1990.

[5] F. Itakura, “Minimum prediction residual applied to speech recognition,” IEEE Trans, Acoustics, Speech, Signal Processing, vol. 23, no. 1, pp. 67-72, 1975.

[6] C. Lévy, G. Linarès and N. Pascal, “Comparison of several acoustic modeling techniques and decoding algorithms for embedded speech recognition systems,” in Workshop of DSP on Mobile and Vehicular Systems, Nagoya, 2003.

[7] N. Dave, “Feature Extraction Methods LPC, PLP and MFCC in Speech Recognition,” INTERNATIONAL JOURNAL FOR ADVANCE RESEARCH IN ENGINEERING AND TECHNOLOGY, vol. 1, no. 6, 2013.

[8] P. S. Rath, D. Povey and K. Veselý, “Improved feature processing for Deep Neural Networks,” in Proceedings of Interspeech, Lyon, 2013.

[9] J. Crittenden and P. Evans, “Speaker Recognition,” 2008. [Online]. Available: https://courses.cit.cornell.edu/ece576/FinalProjects/f2008/pae26_jsc59/pae26_jsc59/. [Accessed 20 October 2015].

[10] “Feature Extraction,” Kaldi, [Online]. Available: http://kaldi.sourceforge.net/feat.html. [Accessed 20 October 2015].

[11] A. G. Adami, “Automatic Speech Recognition: From the Beginning to the Portuguese Language,” in International Conference on Computational Processing of the Portuguese Language 2010, Porto Alegre, Brazil, 2010.

[12] S. Young, “HMMs and related speech recognition technologies,” in Springer Handbook of Speech Processing, Springer Verlag, 2008, p. 539–557.

[13] H. Bourlard and N. Morgan, Connectionist Speech Recognition: A Hybrid Approach, Norwell, MA, USA,: Kluwer Academic Publishers, 1993.

[14] T. Mikolov, M. Karafiat, L. Burget, et. al, “Recurrent neural network based language model,” in Interspeech 2010, 2010.

[15] G. Hinton, L. Deng, D. Yu, et al., “Deep Neural Networks for Acoustic Modeling: The Shared Views of Four Research Groups,” IEEE Signal Processing Magazine, pp. 82-97, 2012.

[16] P. Woodland, “Speaker Adaptation for Continuous Density HMMs: A Review,” in ISCA Tutorial and Research Workshop (ITRW), Sophia Antipolis, 2001.

[17] G. Hinton, L. Deng, D. Yu, G. E. Dahl, et al., “Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups,” IEEE Signal Processing Magazine, vol. 29, no. 6, pp. 82-97, 2012.

[18] A. Mohamed, G. E. Dahl and G. Hinton, “Acoustic Modeling Using Deep Belief Networks,” IEEE Transactions on Audio, Speech, and Language Processing, vol. 20, no. 1, pp. 14-22, 2011.

[19] Y. Xiao, Y. Si, J. Xu, J. Pan and Y. Yan, “Speeding up deep neural network based speech recognition,” Journal of Software, vol. 9, no. 10, pp. 2706-2711, 2014.

[20] D. Povey, A. Ghoshal, G. Boulianne, et. al, “The Kaldi speech recognition toolkit,” in IEEE 2011 Workshop on ASRU, Hawaii, 2011.

[21] “The build process (how Kaldi is compiled),” [Online]. Available: http://kaldi.sourceforge.net/build_setup.html. [Accessed 27 May 2015].

[22] M. Mohri, F. Pereira and M. Riley, “Weighted finite-state transducers in speech recognition,” Computer Speech and Language, vol. 16, pp. 69-88, 2002.

[23] Kaldi, “External matrix libraries,” [Online]. Available: http://kaldi.sourceforge.net/matrixwrap.html. [Accessed 18 October 2015].

[24] “Open-Source Large Vocabulary CSR Engine Julius,” Julius, [Online]. Available: http://julius.osdn.jp/en_index.php. [Accessed 20 October 2015].

[25] “Upcoming CMU Sphinx Software Releases,” CMU Sphinx, 23 February 2015. [Online]. Available: http://cmusphinx.sourceforge.net/wiki/releaseschedule. [Accessed 20 October 2015].

[26] C. Gaida, P. Lange, R. Petrick, P. Proba, A. Malatawy and D. Suendermann-Oeft, “Comparing Open-Source Speech Recognition Toolkits,” in Organisation of Alberta Students in Speech, Alberta, 2014.

[27] “check_dependencis.sh,” [Online]. Available: https://svn.code.sf.net/p/kaldi/code/trunk/tools/extras/check_dependencis.sh. [Accessed 29 May 2015].

[28] “ngram-format,” SRI, [Online]. Available: http://www.speech.sri.com/projects/srilm/manpages/ngram-format.5.html. [Accessed 20 October 2015].

[29] S. Abdou and M. S. Scordilis, “Beam search pruning in speech recognition using a posterior probability-based confidence measure,” Speech Communication, vol. 42, pp. 409-428, 2004.

[30] D. Povey, D. Kanevsky, B. Kingsbury, B. Ramabhadran, G. Saon and K. Visweswariah, “BOOSTED MMI FOR MODEL AND FEATURE-SPACE DISCRIMINATIVE TRAINING,” in Acoustics, Speech and Signal Processing, 2008. ICASSP 2008. IEEE International Conference, Las Vegas, 2008.

[31] K. Vertanen, “An Overview of Discriminative Training for Speech Recognition,” University of Cambridge, Cambridge, 2004.

[32] O. Plátek, “kaldi/egs/vystadial_en/s5/RESULTS,” 1 April 2014. [Online]. Available: https://github.com/kaldi-asr/kaldi/blob/master/egs/vystadial_en/s5/RESULTS. [Accessed 20 October 2015].

[33] “Decoding graph construction in Kaldi,” Kaldi, [Online]. Available: http://kaldi.sourceforge.net/graph.html. [Accessed 20 October 2015].

[34] D. Povey, M. Hannemann, G. Boulianne, L. Burget and A. Ghoshal, “GENERATING EXACT LATTICES IN THE WFST FRAMEWORK,” in Acoustics, Speech and Signal Processing (ICASSP), 2012 IEEE International Conference, Kyoto, 2012.

[35] V. Panayotov, G. Chen, D. Povey and S. Khudanpur, “LIBRISPEECH: AN ASR CORPUS BASED ON PUBLIC DOMAIN AUDIO BOOKS,” in ICASSP 2015, Brisbane, Australia, 2015.

[36] “Yi Wang’s Tech Notes,” [Online]. Available: https://cxwangyi.wordpress.com/2010/07/28/backoff-in-n-gram-language-models/. [Accessed 13 October 2015].