Projects:2015s1-42 Rule-based AI Agent Development: Tic Tac Toe

Introduction

This project aims to use Street Language to develop complex artificial intelligence agents, which connect to a robotic arm to play Tic-Tac-Toe with a human player. Possible future implementation of this agent in hardware would provide a means of testing the effectiveness of the Street Engine.

This project has developed two working agents for Tic Tac Toe, developed using Street Language. The development of these agents has been effective in testing and proving the capabilities of Street Language. An interface has also been created to link the agents to an environment.

Background

Within the University of Adelaide, a research group has been exploring the application of artificial intelligence agents on Field-Programmable Gate Arrays. The group has modified concepts from the Soar Cognitive Architecture created by Prof. John Laird at the University of Michigan in order to develop a parallel processing language called Street Language. Street Language is designed to be implemented in hardware to create a Street Engine: a standalone machine capable of implementing artificial intelligence based on pattern-matching rules.

Meanwhile, in 2014, final year project students at the university developed software to control a robotic arm, as well as an algorithmic program which enabled it to play Tic-Tac-Toe[1]. This robotic arm has since been upgraded to perform visual recognition of the Tic-Tac-Toe board.

This project involves the use of Street Language to define an artificial intelligence agent in production rules which will play Tic-Tac-Toe in the real world through the use of the robotic arm. The resulting product will be a standalone Tic-Tac-Toe machine. After this has been completed, a new agent will be created to play a more complicated game. Knowledge gained from the development of the TTT agent will be employed to create the more complex agent.

Motivation

A new type of computer called the Street Engine is currently being researched and developed within the university. The desired outcome is for Street Engines to achieve advanced cognitive computation in real-time, with greater power efficiency compared to conventional computers[2]. Our project ties in very closely with their research, as it aims to create a Street Agent which can be used to demonstrate the capabilities and performance of a Street Engine, once the technology becomes available. This project also serves as a learning experience in techniques which can be used to effectively develop a Street Agent.

It is expected that Street Engines will prove to be more power-efficient than conventional computers, as the highly parallel architecture allows for the same amount of work to be done per second, but at a lower clock rate[2]. This would allow for a lower supply voltage, which would result in significant savings in power. The demonstration of this would be notable as currently the performance growth of conventional machines is limited by the increasingly infeasible method of scaling down CMOS circuits to reduce the supply voltage[3]. This means that increases in clock speed are slowing down significantly, as it results in a higher power dissipation[3].

Project Details

Objectives

- Write, in Street Language, a rule-based AI agent to play Tic-Tac-Toe

- The Tic-Tac-Toe (TTT) agent shall be defined in production rules for the Street Engine and its behaviour shall be simulated and verified.

- Create an interface between the Street Engine and the robotic arm.

- Previous work done on the robotic arm includes the establishment of a protocol for communication between the robotic arm and an agent. The TTT agent is required to communicate with the robotic arm using this protocol, which is defined in S. AL-Rashid’s “Robotic Arm for Trash Collecting Robot” final year report. The interface created will convert the outputs of the agent into a list of commands in this format, and the outputs of the robotic arm into the appropriate Street formatted input.

- Create standalone TTT machine.

- The Street Agent shall be loaded onto a standalone mini-computer board, such as the Raspberry Pi, where it will run on the Java Street Simulator. This will be interfaced with the robotic arm to create a fully functional Tic-Tac-Toe-playing AI machine which simulates the behaviour of a Street Engine.

- Develop and implement a Street Agent which plays a more complicated game.

- The new agent will possibly be designed to play Connect Four or a game of similar complexity. The game which is ultimately chosen will depend heavily on the knowledge gained throughout the development of the TTT agent.

Development Tools

JavaStreet Simulator

A Street Language simulator and debugger has been developed by the research group within the university. This simulator can be used to test and demonstrate the Street Language. Numerous case study agents have been previously developed which run on the simulator and are able to demonstrate/test the function of Street Language[2].

Tic-Tac-Toe Environment

The research group also developed a Tic-Tac-Toe environment which allows a user to play the game against an agent running on the JavaStreet simulator, through a graphical interface. The environment sends outputs to the agent when the state of the game changes and waits for the agent to respond appropriately, according to a predefined list of inputs and outputs.

AI Agents

Two Tic-Tac-Toe agents are being developed concurrently for this project, each employing a different learning method. One agent uses episodic memory to recall its previous moves and their success, and the other agent constructs a state tree of possible choices, using minimax theory to select its move.

Episodic Memory Agent

From the cognitive approach to intelligent behaviour research, researchers believe that intelligent creatures make actions based on the knowledge they have. The knowledge is built up through experience and stored in chemical elements in the nerve cells. Each individual experience may be linked with an associated time and outcome after execution; this is called episodic memory. Using honey bee foraging as an example: the honey bee is able to memorize the approximate path of its previous foraging activity, and after the honey bee has gathered nectar and returned to its hive, the foraging trip will be recorded along with the direction travelled and quantity of the nectar collected. Before this bee commences on a new nectar-gathering journey, it will compare its past experiences and choose to embark in a direction similar to that which which has previously resulted in a plentiful harvest.

With the inspiration of the explanation of intelligent behaviour, the project team chose to develop an agent which behaves in the same manner, recording its past experiences and making new decisions by evaluating the success of these previous experiences. Specifically, this agent is capable of making game moves based on previous records. The functionality of this agent is divided into two major components - one which records all the moves of the previous games as experience, and another to select moves with winning results or without losing records. For the first function, the agent keeps the records of each game it went through in its memory. The game records are stored in the form of elements, which have the reference game number and move number for the tracing and sorting actions. After the movements have been recorded, the other function - which makes decisions based on experience - is called. This function allows the agent to compare the current situation with the records in memory. When a match for this situation is found, the agent is able to make a better move selection, which avoids choosing moves with previous records of losing the game. If a previous move from this situation led to victory, the agent will select this move again. With these two functions working together, the agent is able to increase its accumulated experience and thus improve its effectiveness at playing the game. In other words, the agent is able to learn by playing the game.

Currently, most of the functions designed for the agent have been completed and the agent is able to operate using the JavaStreet Simulator and Tic-Tac-Toe Environment. The agent is initialised to have some elements which store the current state of the game. It interacts with the simulator and environment in real-time. While the environment provides updates on the current state of the game board, the agent will update its internal state elements and record the moves with the game number, move number and nought or cross. It is necessary to point out that these element are stored in the agent itself and each element is stored with a reference number, which is constructed using the game number and move number. A counter will be incremented each time a new element is stored. When the environment provides the outcome of the game, the agent will also record the game number. When the environment asks the agent to make a move, the agent will compare the current state of the game with its memory elements and search for a match. If there is no match, the agent will randomly make a move selection. If there is a match, the agent will use the recorded results to determine its move as discussed above.

Minimax Agent

The agent is used to determine a move in the game of Tic Tac Toe. The agent builds a state tree and computes a value through a position evaluation calculation for each node.

Game State

A game state refers to the placement of nought and cross marks on the 3x3 grid that represents the Tic Tac Toe game board.

Game/State Tree

The state tree is a representation of the possible states of the game. It is a graph data structure which is well suited to being represented in Street’s working memory.

The agent creates a game tree when it is its turn to determine which move to make. The initial/root node of the tree is the current state of the game. This node then branches into numerous other nodes. The state tree depth is limited in the agent.

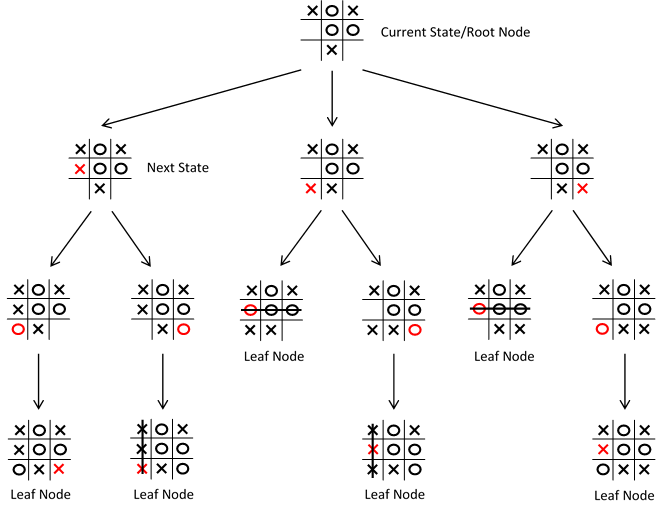

An example of a state tree can be seen below.

- Figure 1 - State Tree

Heuristics

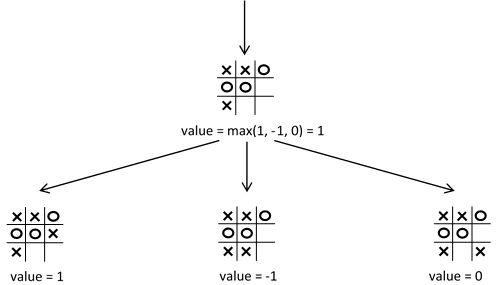

To determine which move to make the agent calculates heuristic values for each state in the game tree. The state with the best value can then be used to determine the move that should be made.

Calculation of the heuristic value for root nodes involves determining the number of rows for which it is possible for each player to possibly win. The initial value is zero, for each row in which it is possible for the agent to still win, the value is incremented. For each row is possible for the opposing player to win the value is decremented.

Other nodes are then assigned a value based on the values of the branching nodes. An example is shown below.

- Figure 2 - Heuristics Example

Environment Interaction

The agent receives input representing any changes to the game state. Using this input it can update its own representation of the current state in its memory. The agent begins the process to decide a move only when it receives an input indicating that it is its move.

When the agent receives input indicating a change to the environment, it outputs an acknowledgment indicating it has received the change. During the agents turn, when it has decided upon a move, it outputs its decision by indicating which square of the Tic Tac Toe state it would like to play.

Interface

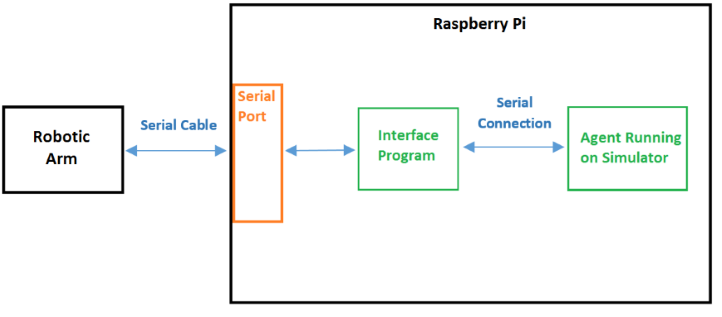

For the agent to control the robotic arm to play the game, an interface must be developed. This interface will include the functionality of the Tic-Tac-Toe Environment as well as converting data between Street format and the format required for the robotic arm. A serial connection will be used for the transfer of data between the interface and the robotic arm, as well as between the interface and the agent running on the simulator. All interactions with the robotic arm are through the interface, so the agents only know how to play Tic-Tac-Toe and are unaware of the robotic arm's existence. Figure 3 shows a high-level view of how the various elements of this project interact.

- Figure 3 - High-level Interactions

Technical Background

Soar

Street Language was developed from concepts and ideas from a language called Soar. Soar is a language that is used to create artificial intelligence agents, with a focus on general intelligent agents. A general intelligent agent doesn't solve a single problem using a set method. Instead it can pursue numerous tasks through acquiring experience to build memory across numerous environments[4]. Soar is a cognitive architecture, which refers to a focus on the structure of the human mind. Soar therefore attempts to develop intelligent agents through the high-level modelling of human cognition[4]. This involves using concepts such as memory, reinforcement learning, etc.

Street

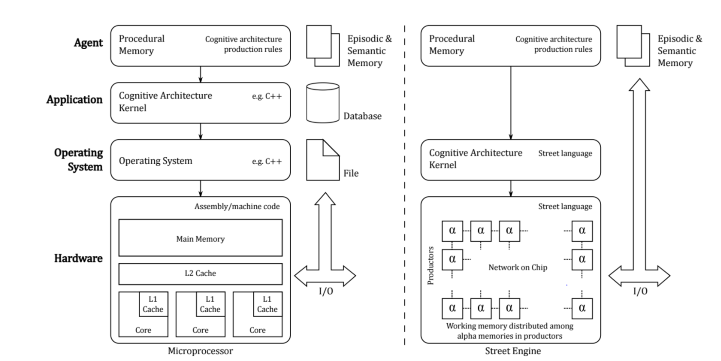

Street consists of the Street Engine and the Street Language. The Street Language is a parallel processing, rule-based language[2]. Street is a highly parallel processing architecture which differs from conventional computer architecture which uses sequential execution paths and centralised memory. The purpose behind Street is to provide a computing architecture that can realise intelligent agents, processed in real time and with improved power efficiency compared to current architectures[2].

- Figure 4 - Hardware/software stack on a conventional computer and a Street Engine[2].

Figure 4 compares a conventional computer architecture with the Street Engine. One main difference highlighted by the above figure is how the Street agent is implemented using Street Language directly onto the Street Engine (hardware), compared to the conventional computing architecture which uses an application and operating system between itself and the hardware. Another difference is the use of alpha memory in the Street Engine. Memory is distributed throughout alpha memories in productors on the Street Engine. Rearrangements of the distribution of memory through the alpha memories can reduce latency and power consumption.

Street Language

Street Language uses two main components, Working Memory and Production Rules. Working Memory consists of a set of elements (known as Working Memory Elements, WMEs). Each of these elements is made of three components – an object, an attribute and a value. Production Rules consist of a set of condition and a set of actions. When the set of conditions are satisfied by the current state of the working memory, the set of actions will be triggered. The set of actions change the state of the working memory by adding or deleting elements from the working memory[5].

Robotic Arm

The software for a robotic arm was developed by a group of honours students in 2014 as part of their honours project. This project involved developing two main components, a motion module and a communication module[1]. The motion module calculates the necessary actions of the numerous actuators to move the arm. The communication module is provides the interface between the arm and an agent.

The Team

Supervisors

- A. Prof. Michael Liebelt

- Dr. Braden Phillips

Students

- Daniel Valana

- Jared Bouchier

- Xin Yuan

References

[1] S. AL-Rashid, “Robotic Arm for Trash Collecting Robot”, unpublished, Final Year Report, Oct. 24, 2014.

[2] J. Frost et al., “A New Computer for Cognitive Computing”, unpublished.

[3] S. H. Fuller and L. I. Millett, “Computing Performance: Game Over or Next Level?”, IEEE Computer Society, 2011. [Online], Available: https://www.inf.pucrs.br/~moraes/prototip/artigos/ComputingPerformanceGameOverorNextLevel.pdf

[4] R. M. Yound and R. L. Lewis, “The Soar Cognitive Architecture and Human Working Memory”, New York: Cambridge University Press, 1997. [Online], Available: http://www0.cs.ucl.ac.uk/staff/R.Young/publications/99.Young-Lewis.pdf

[5] B. Phillips and J. Frost, “A Brief Description of Street Language”, unpublished, Mar. 17, 2015.

[6] Y. Zhong, "A Cognitive Approach to Artificial Intelligence Research," Cognitive Informatics, 2006. ICCI 2006. 5th IEEE International Conference on , vol.1, no., pp.90,100, 17-19 July 2006.

[7] Y. Zhong, "Mechanism of intelligence formation and unified theory of AI," Natural Language Processing and Knowledge Engineering, 2005. IEEE NLP-KE '05. Proceedings of 2005 IEEE International Conference on, vol., no., pp.540, 542, 30 Oct.-1 Nov. 2005.

[8] J. E. Laird, “The Soar Cognitive Architecture”, The MIT Press, 2012.