Projects:2018s1-100 Automated Person Identification with Multiple Sensors

Project Team

Students

Maxwell Standen

Archana Vadakattu

Michael Vincent

Supervisors

Dr Brian Ng

Dr David Booth (DST Group)

Sau-Yee Yiu (DST Group)

Abstract

This project seeks to develop the capability to identify individuals based on their whole body shape and 3D facial characteristics. This will involve:

- 1. Determining the most suitable sensor type(s) for the task.

- 2. Data collection, using the most appropriate sensor(s) selected in (1).

- 3. Data alignment, and extraction of 3D face and body shape features.

- 4. The development of a recognition and fusion capability using the extracted 3D face and body shape features.

- 5. Performance assessment.

The project will involve elements of literature survey (both sensor hardware and algorithmic techniques), software development (ideally in Matlab) and performance assessment, possibly by means of a small trial. This project is sponsored by DST Group.

Introduction

Automated person identification is a vital capability for modern security systems. Typical security environments involve uncooperative subjects, so robust methods are required. The current challenge is to identify uncooperative or semi-uncooperative subjects, such as those who don't interact with the identification system directly. Soft biometrics are identifying characteristics that can be gathered without the cooperation of a subject, such as the way a person walks, the lengths of their limbs or the shape of their face. Hard biometrics, by contrast, require a cooperative subject and involve data such as fingerprints or DNA.

The overall project will develop the capability to identify individuals based on their whole body shape and 3D facial characteristics. Our aims are:

- Create an automated person identification system using 3D data

- Use realistic data that is extendable to real-world applications

- Fuse different methods to improve reliability of identification

The main objective is to implement a system that can recognise a person using soft biometric data, given image sequences captured using a Microsoft Kinect, which includes both depth and RGB cameras. From this objective, the project is divided into three main stages: investigation of preprocessing methods for the Kinect data, implementing face and body recognition techniques and performing fusion of techniques to improve robustness of the system.

Background

This project is part of a larger collaboration between the University of Adelaide and DST Group on biometric data fusion.

Microsoft Kinect Sensor

One of the sensors is a Microsoft Kinect, which is used to acquire depth videos from a frontal perspective. The Kinect sensor is a motion sensing device produced by Microsoft Corporation in 2010. It was developed as an input device for the Xbox 360 gaming console and as such, has capabilities such as face and body detection and tracking [1]. The device also utilises cooperative person recognition for its gaming applications (e.g. user authentication and game controls).

A Kinect device features a depth sensor and RGB camera which it uses to recognise the position in space of different body parts for up to 6 people in a single frame. The depth sensor works using a CMOS camera to capture an image of an IR pattern [2]. The depth at each pixel in the image is calculated by the image processor in the Kinect device using the relative positions of dots in the pattern. The individual video frames captured by the Kinect can be viewed as either a "depth image" or as a point cloud, which shows the Kinect depth data after conversion to real world (XYZ) coordinates.
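The conversion from a depth image to a point cloud can be sketched with a standard pinhole camera model. This is a minimal illustration, not necessarily the conversion the Kinect software performs; the function name and the intrinsic parameters `fx`, `fy`, `cx` and `cy` (focal lengths and principal point, in pixels) are assumptions introduced here.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in metres) to (X, Y, Z) points.

    Assumes a pinhole camera with hypothetical intrinsics fx, fy, cx, cy.
    Pixels with a depth of zero or less are treated as missing readings.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index
            if z <= 0:
                continue                   # skip pixels with no depth reading
            x = (u - cx) * z / fx          # horizontal offset in metres
            y = (v - cy) * z / fy          # vertical offset in metres
            points.append((x, y, z))
    return points
```

For a real sensor the intrinsics would come from calibration; the values used to try this out are purely illustrative.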

The Kinect captures depth videos at 30 frames per second in a 512 by 424 resolution, and operates in a range of 0.5 to 8 metres. It processes the depth data to compute a number of joint positions, specified as the distances from the camera in Cartesian coordinates. 20 joints were computed originally, but this was increased to 25 in the second version of the hardware. This set of joints is shown in the figure. Skeletal tracking operates effectively in the range of 0.8 to 4 metres.

Supervised Learning

Recognition is performed using a machine learning pipeline which involves:

- Acquiring data from a subject

- Feature extraction to find identifying characteristics

- Classification to identify the subject

Fusion

In non-cooperative environments such as surveillance, collecting high-quality biometrics can be difficult due to factors such as poor lighting and long range from the sensor. Low-quality biometrics degrade the performance of systems that rely on only a single trait of the person. Fusion is a technique that merges the results of two or more independent classifiers to obtain a stronger classifier. In this system the classifiers are the facial and body recognition algorithms, each of which maps a person’s traits to a single label.

Methodology

- 1. Acquire data

- 2. Preprocess data to improve quality

- 3. Extract features from preprocessed data

- 4. Use extracted features to create a classifier

Data Acquisition

Data is acquired using the Microsoft Kinect. RGB, depth and skeletal data are captured.

Preprocessing

Preprocessing is used to enhance the quality of the captured data and to facilitate more accurate extraction of the features present in the data. Depth videos are segmented into sequences of frames by extracting gait cycles, which allows for feature averaging. This provides frame sequences which are suitable for extracting the features used in the project. Faces are also cropped for use in facial recognition algorithms.

Facial Recognition

Facial features are extracted from the depth data.

Local Feature Extraction

Local feature extraction analyses local patterns and textures to determine the similarity between images. Instead of taking a global approach, this method extracts textures and patterns from a subject’s face by examining each pixel's local neighbourhood. This technique is very resilient to changes in rotation and resolution.

Local Binary Patterns (LBP) is a local feature extraction technique that is used to describe textures. A single local binary pattern encodes how the pixels surrounding a central pixel compare with that pixel. The local binary pattern is calculated for all pixels in a depth image and these are collected into a histogram in which each bin corresponds to one possible pattern value. This histogram is then normalised and the resulting distribution of patterns is used as a descriptor of the image.
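A minimal sketch of the basic LBP descriptor follows. It assumes a 3×3 neighbourhood, a "greater than or equal to the centre" comparison and an arbitrary clockwise bit ordering; the project may have used a different variant, and the function names are illustrative only.

```python
# Offsets of the 8 neighbours, clockwise from top-left (ordering is an assumption).
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img, r, c):
    """Return the 8-bit LBP code of pixel (r, c) in a 2D list of values."""
    centre = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOURS):
        if img[r + dr][c + dc] >= centre:   # neighbour at least as large as the centre
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """Normalised 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

Border pixels are skipped here because they lack a full neighbourhood; padding the image is a common alternative.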

Histogram of Oriented Gradients (HOG) is a facial analysis technique that uses the distribution of gradients in segments of a subject's face to create a descriptor of that subject. HOG is performed by determining the gradient at each of the pixels. Each gradient in the segment is collected into a bin in the histogram descriptor of that segment. The angle of the gradient determines which bin the magnitude of the pixel's gradient is added to. Once all gradients are collected, the histogram is normalised to give the distribution of gradients across the segment. Grouping the gradients into discrete bins adds some rotational robustness.
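The histogram for a single segment can be sketched as below. The choices of central-difference gradients, nine unsigned orientation bins over 0 to 180 degrees, and normalisation to unit sum are assumptions made for illustration; full HOG implementations usually add vote interpolation and block normalisation.

```python
import math

def hog_cell_histogram(cell, n_bins=9):
    """Orientation histogram of one segment (2D list), weighted by gradient magnitude."""
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    for r in range(1, len(cell) - 1):
        for c in range(1, len(cell[0]) - 1):
            gx = cell[r][c + 1] - cell[r][c - 1]     # horizontal central difference
            gy = cell[r + 1][c] - cell[r - 1][c]     # vertical central difference
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0   # unsigned orientation
            # The angle selects the bin; the magnitude is the vote added to it.
            hist[int(angle // bin_width) % n_bins] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist
```

Because every angle within a 20-degree bin casts its vote in the same place, small rotations of the face leave the histogram largely unchanged.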

Local Phase Quantisation (LPQ) is a texture analysis technique that uses Fourier coefficients to create a blur-invariant descriptor of a subject's face. To create the LPQ descriptor, a two-dimensional Fast Fourier Transform (FFT) is applied to a segment of the face. The real and imaginary values of 4 points of the FFT are assembled into a histogram to create the LPQ descriptor. These 4 points are at the coordinates (0,0), (0,a), (a,0) and (a,a), where a is a sufficiently small value\cite{lpqbasic} and (0,0) is the centre of the segment.

Eigenfaces

Eigenfaces is a facial recognition method based on Principal Component Analysis (PCA). It captures the variation in a collection of face images and uses this information to encode subjects’ faces for comparison-based identification.

Broadly speaking, PCA is used to reduce the dimensionality of data. In a training set of face images, each image is an N×N array of intensity values, or equivalently a vector of dimension N². Examples of values include RGB values for 2D imagery or depth values for 3D. PCA is first applied to the training set by computing its covariance matrix. The eigenvectors of this matrix describe the axes along which the data varies most, and the eigenvalues give the amount of variance along each axis. In principle, any face can be approximated by a linear combination of the eigenvectors. The number of eigenvectors can be reduced by keeping only the smallest set, those with the largest eigenvalues, that captures most of the variance in the training images. To compare a new image with the training set, the new image must first be resized and projected into the face space using the previously calculated eigenvectors. Two faces can then be compared by calculating the distance between their projected vectors; the training face at the smallest distance is selected as the most similar, and its identification label is given to the new image.
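The steps above can be written compactly as follows. The notation is introduced here for illustration and is not taken from the project: M training images x_i, mean face μ, covariance C, the K retained eigenvectors u_k, and projected weights w. The Euclidean norm in the last line is an assumption, since the distance measure is not specified.

```latex
\begin{aligned}
\mu &= \frac{1}{M}\sum_{i=1}^{M} x_i, \qquad x_i \in \mathbb{R}^{N^2} \\
C &= \frac{1}{M}\sum_{i=1}^{M} (x_i - \mu)(x_i - \mu)^{\top} \\
C\,u_k &= \lambda_k u_k, \qquad \lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_K \\
w &= U_K^{\top}(x - \mu), \qquad U_K = [\,u_1 \; \cdots \; u_K\,] \\
\hat{\imath} &= \arg\min_{i} \lVert w - w_i \rVert_2, \qquad w_i = U_K^{\top}(x_i - \mu)
\end{aligned}
```

The new image x receives the label of training image \hat{\imath}, the one whose projection lies closest in the reduced space.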

Body Recognition

Body features are extracted from the skeletal data that is captured by the Kinect. They can be categorised into two types: anthropometrics and gait analysis. These two methods are combined at the feature level in order to produce a single body classifier.

Anthropometrics

Anthropometrics is the analysis of body measurements and is used to identify subjects. This is done by calculating body part lengths from the positions of skeletal joints relative to each other. Static features such as height, arm length, leg length and shoulder width are calculated using joints extracted from the Kinect. Anthropometric features are averaged over all of the frames in each gait cycle to provide a single, more accurate result. This also facilitates feature-level fusion with the features extracted using gait analysis.
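As an illustration, a body segment length can be computed as the Euclidean distance between two joints and then averaged over the frames of a gait cycle. The data layout (one dictionary of joint name to (x, y, z) position per frame), the joint names and the function names are assumptions made for this sketch.

```python
import math

def segment_length(frame, joint_a, joint_b):
    """Euclidean distance in metres between two joints in one frame."""
    return math.dist(frame[joint_a], frame[joint_b])

def mean_segment_length(frames, joint_a, joint_b):
    """Average a segment length over all frames of one gait cycle."""
    lengths = [segment_length(frame, joint_a, joint_b) for frame in frames]
    return sum(lengths) / len(lengths)

# Hypothetical two-frame gait cycle with illustrative joint positions.
cycle = [
    {"ShoulderLeft": (0.0, 0.0, 2.0), "ShoulderRight": (0.40, 0.0, 2.0)},
    {"ShoulderLeft": (0.0, 0.0, 2.0), "ShoulderRight": (0.42, 0.0, 2.0)},
]
shoulder_width = mean_segment_length(cycle, "ShoulderLeft", "ShoulderRight")
```

Averaging reduces the effect of frame-to-frame jitter in the tracked joint positions.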

Gait Analysis

Gait analysis involves extracting features related to the movement of individuals. Skeletal joints are tracked as a person walks and, unlike the static anthropometric features, the resulting features depend on the subject’s motion. They include stride length, speed, degree of arm swing, elevation angles of the arms and legs, and average angles of the elbows and knees. These tend to be quite individualistic and so are useful for classification.
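One of these features, the average angle at a joint such as the knee, can be sketched as follows. The per-frame dictionary layout and the joint names passed in are assumptions for illustration; the angle is the one formed at the middle joint by its two neighbouring joints.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b formed by the 3D points a, b and c."""
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)
    cos_theta = max(-1.0, min(1.0, dot / norm))   # clamp against rounding error
    return math.degrees(math.acos(cos_theta))

def mean_joint_angle(frames, joint_a, joint_b, joint_c):
    """Average the angle at joint_b over all frames of one gait cycle."""
    angles = [joint_angle(f[joint_a], f[joint_b], f[joint_c]) for f in frames]
    return sum(angles) / len(angles)
```

For a knee, the three joints would be the hip, knee and ankle on the same side; a fully extended leg gives an angle close to 180 degrees.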

Fusion

The fusion stage aims to combine the face and body recognition results in a structured manner to improve the robustness of the person identification system. It merges the outputs of the face and body classifiers into a unified person identity classifier that arrives at a final decision.

Score-level fusion was used to combine the outputs of the Eigenfaces, the Local Feature Extraction and body classifiers. In particular the score normalisation method known as "min-max normalisation" was used in the developed system.
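A minimal sketch of score-level fusion with min-max normalisation is given below. Each classifier's scores are rescaled to the range 0 to 1 before being combined. The combination rule (an equally weighted sum), the convention that a higher score means a better match, and the function names are assumptions; the combination rule actually used in the project is not stated here.

```python
def min_max_normalise(scores):
    """Rescale a list of scores to [0, 1] using its own minimum and maximum."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]     # all scores equal: no information
    return [(s - lo) / (hi - lo) for s in scores]

def fuse_scores(score_lists, weights=None):
    """Weighted sum of min-max normalised scores, one list per classifier.

    Every list must score the same candidates in the same order.
    """
    normalised = [min_max_normalise(scores) for scores in score_lists]
    if weights is None:
        weights = [1.0 / len(normalised)] * len(normalised)   # equal weights
    n_candidates = len(normalised[0])
    return [
        sum(w * scores[i] for w, scores in zip(weights, normalised))
        for i in range(n_candidates)
    ]

# Hypothetical scores for three enrolled subjects from two classifiers.
face_scores = [10.0, 20.0, 30.0]
body_scores = [0.2, 0.9, 0.1]
fused = fuse_scores([face_scores, body_scores])
best_match = max(range(len(fused)), key=fused.__getitem__)
```

Normalising first matters because the classifiers produce scores on different scales; without it, the classifier with the largest raw values would dominate the sum.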

Results

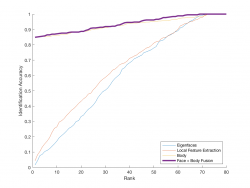

The results show that the best non-fusion method was gait analysis, which performed better than anthropometrics, Eigenfaces and the local feature extraction methods. Body features consistently performed better than methods which used facial data, and local feature extraction performed better than Eigenfaces. Despite the large differences in identification accuracy between the methods, fusion of face and body data improved the overall performance of the system. Fusion did not always help, however: fusing the local features generally performed worse than HOG alone.

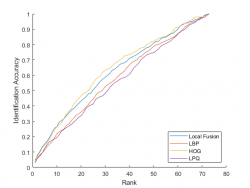

Local Features

The initial (low-rank) performance of all of the local feature methods is very low, which indicates that local features are poor exact identifiers. None of the methods reaches perfect accuracy until a very high rank is considered, so local features cannot be relied on to narrow down a subject base accurately. HOG performs best of the local feature extraction techniques. Local fusion does not outperform HOG, so fusion decreased performance here and using HOG by itself would have been the better method. LBP performed better than LPQ, the worst-performing algorithm, but worse than local fusion and HOG.

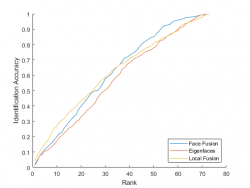

Face Fusion

Local fusion has a higher initial accuracy than Eigenfaces and face fusion. Face fusion does exceed the performance of local fusion at higher ranks, and its accuracy increases faster than that of either Eigenfaces or local fusion. Eigenfaces consistently performs worse than local fusion. However, an investigation found evidence that the identification accuracy of Eigenfaces can be improved by additional face data preprocessing stages such as pose correction and face cropping.

Body Features

As shown in the graph, body features are effective for the given dataset, achieving a rank 1 identification accuracy of 84.88%. This increases linearly to 100% as the rank is increased to 73, indicating that the classifier is "all or nothing" in terms of its prediction. This means that it would not be very useful for narrowing down a list of potential subjects for manual classification.
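The rank-based accuracy used throughout these results can be sketched as follows: a probe counts as correctly identified at rank k if the true subject is among the k highest-scoring candidates. The data layout (one dictionary of subject label to score per probe, higher meaning more similar) and the function name are assumptions for illustration.

```python
def rank_k_accuracy(score_rows, true_labels, k):
    """Fraction of probes whose true label is within the top k ranked candidates."""
    hits = 0
    for scores, truth in zip(score_rows, true_labels):
        ranked = sorted(scores, key=scores.get, reverse=True)   # best match first
        if truth in ranked[:k]:
            hits += 1
    return hits / len(true_labels)

# Hypothetical scores for two probes against three enrolled subjects.
rows = [
    {"A": 0.9, "B": 0.1, "C": 0.0},   # true subject A, ranked first
    {"A": 0.5, "B": 0.4, "C": 0.1},   # true subject B, ranked second
]
truth = ["A", "B"]
rank1 = rank_k_accuracy(rows, truth, 1)
rank2 = rank_k_accuracy(rows, truth, 2)
```

Plotting this value against k gives the kind of accuracy-versus-rank curve discussed above.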

Conclusion

The aims of the project were achieved, as shown by the following key findings:

- 3D data can be used to create an automated person identification system

- Realistic data can be used to accurately identify people

- Fusion of different methods improves performance

References

- [1] J. Han, L. Shao, D. Xu, J. Shotton, “Enhanced computer vision with Microsoft Kinect sensor: A review,” IEEE Transactions on Cybernetics, vol. 43, no. 5, pp. 1318-1334, Oct. 2013

- [2] M.R. Andersen, T. Jensen, P. Lisouski, A.K. Mortensen, T. Gregersen, P. Ahrendt, “Kinect depth sensor evaluation for computer vision applications,” Electrical and Computer Engineering Technical Report ECE-TR-6, 2012