Projects:2016s1-102 Classifying Internet Applications and Detecting Malicious Traffic from Network Communications

Revision as of 20:10, 26 October 2016

Project Team

Karl Hornlund

Jason Trann

Supervisors

Assoc Prof Cheng Chew Lim

Dr Hong Gunn Chew

Dr Adriel Cheng (DSTG)

Introduction

The project aims to use machine learning to predict the application class of computer network traffic. In particular, we will explore the usefulness of graph-based techniques for extracting additional features and providing a simplified model for classification, and evaluate the classification performance with respect to identifying malicious network traffic.

Objectives

- Implement a supervised machine learning system which utilises NetFlow data and spatial traffic statistics to classify network traffic, as described by Jin et al. [12] [18] [19].

- Achieve an appropriate level of accuracy when benchmarked against previous years’ iterations of the project and verify the results of Jin et al. [18].

- Evaluate the effectiveness of using spatial traffic statistics, in particular with respect to identifying malicious traffic.

- Explore improvements and extensions to the current method prescribed by Jin et al. [12] [18] [19].

Stage 1: Bootstrap

The bootstrap stage begins by constructing the edge-level features to be used as inputs to the supervised machine learning system. Edge-level features are built from the flow-level features of the NetFlow data as part of building the Traffic Activity Graph (TAG).

1. Constructing the Traffic Activity Graph

The TAG is constructed as follows:

(1) Map each unique host in the network to a node in the TAG.

(2) For each flow in the network, create a directed edge between the respective nodes in the TAG, corresponding to the source and destination hosts of the flow. Assign the label of that flow as an edge attribute; do the same for duration, packets, and bytes.

(3) Calculate two additional edge attributes:

mean packet size = bytes/packets

mean packet rate = packets/duration

(4) For each set of edges joining the same pair of nodes u and v (in either direction), perform the following simplification:

Let e_1, e_2, …, e_x be the x edges between u and v.

Create a new undirected edge e_(x+1), and assign it the following attributes:

label(u,v) ≔ the most common application class label among e_1, e_2, …, e_x.

min duration ≔ minimum duration among e_1, e_2, …, e_x.

min packet size ≔ minimum mean packet size among e_1, e_2, …, e_x.

min packet rate ≔ minimum mean packet rate among e_1, e_2, …, e_x.

max duration ≔ maximum duration among e_1, e_2, …, e_x.

max packet size ≔ maximum mean packet size among e_1, e_2, …, e_x.

max packet rate ≔ maximum mean packet rate among e_1, e_2, …, e_x.

bytes_uv ≔ sum of bytes flowing from u to v.

bytes_vu ≔ sum of bytes flowing from v to u.

symmetry ≔ min(bytes_uv / bytes_vu, bytes_vu / bytes_uv)

Remove edges e_1, e_2, …, e_x from the TAG.

(5) Remove any self-loops (edges that leave from and enter the same node) from the TAG.
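The five construction steps can be sketched in Python as below. This is a minimal illustration, not the project's implementation (which was written in R). It assumes each flow is a dict with hypothetical keys `src`, `dst`, `label`, `duration`, `packets` and `bytes`, that every flow has `packets > 0` and `duration > 0`, and that one-way traffic (zero bytes in one direction) gets a symmetry of 0. Ties in the majority label are broken by first occurrence, which the steps above leave unspecified. Self-loops are skipped up front, which gives the same result as removing them at the end.

```python
from collections import Counter, defaultdict


def build_tag(flows):
    """Build the simplified, undirected TAG edges from a list of flow dicts.

    Assumes (hypothetically) that each flow has the keys 'src', 'dst',
    'label', 'duration', 'packets' and 'bytes', with packets > 0 and
    duration > 0. Returns {(u, v): attributes} with u < v.
    """
    # Steps (1)-(2): hosts become nodes; group flows by unordered host pair.
    groups = defaultdict(list)
    for flow in flows:
        if flow["src"] == flow["dst"]:
            continue  # Step (5): a self-loop would be removed anyway
        u, v = sorted((flow["src"], flow["dst"]))
        groups[(u, v)].append(flow)

    edges = {}
    for (u, v), group in groups.items():
        # Step (3): derived per-flow attributes.
        sizes = [f["bytes"] / f["packets"] for f in group]  # mean packet size
        rates = [f["packets"] / f["duration"] for f in group]  # mean packet rate
        durations = [f["duration"] for f in group]

        # Step (4): directional byte totals and symmetry.
        bytes_uv = sum(f["bytes"] for f in group if f["src"] == u)
        bytes_vu = sum(f["bytes"] for f in group if f["src"] == v)
        if bytes_uv > 0 and bytes_vu > 0:
            symmetry = min(bytes_uv / bytes_vu, bytes_vu / bytes_uv)
        else:
            symmetry = 0.0  # assumption: one-way traffic is fully asymmetric

        edges[(u, v)] = {
            # Most common label; ties go to the label seen first.
            "label": Counter(f["label"] for f in group).most_common(1)[0][0],
            "min_duration": min(durations),
            "max_duration": max(durations),
            "min_packet_size": min(sizes),
            "max_packet_size": max(sizes),
            "min_packet_rate": min(rates),
            "max_packet_rate": max(rates),
            "symmetry": symmetry,
        }
    return edges
```

For example, two flows from a to b and one flow from b to a collapse into a single edge ("a", "b"), whose symmetry compares the total bytes sent in each direction.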

The following edge-level features are used for bootstrap classification:

- Min Packet Size

- Max Packet Size

- Min Duration

- Max Duration

- Min Packet Rate

- Max Packet Rate

- Symmetry

2. Optimal Bootstrap Feature Selection

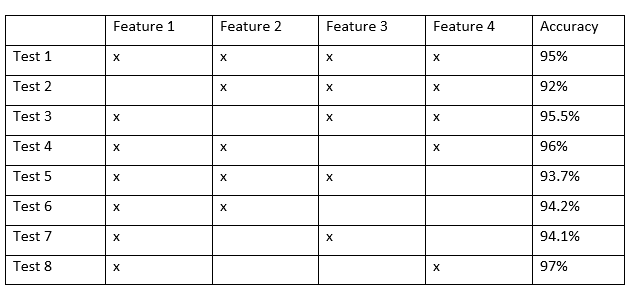

To find the optimal subset of the edge-level features above for the bootstrap classifier, a brute-force (trial-and-error) method was used, as outlined below:

1. Use all x available features in the ML algorithm and record the bootstrap results.

2. Test and record all combinations of x-1 features.

3. Evaluate the bootstrap results from step 2 and identify which feature's removal causes the largest decrease in accuracy.

4. Repeat step 2 with combinations of x-2 features, retaining the features that contribute most to the final bootstrap accuracy and removing the features that contribute little.

5. Keep reducing the number of features in this way until the accuracy no longer improves.
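The elimination procedure above can be sketched as a greedy backward search. The `evaluate` callable is a hypothetical stand-in for training the bootstrap classifier on a feature subset and returning its accuracy. In this sketch, dropping the feature whose removal costs the least accuracy is the same as retaining the features whose removal costs the most (steps 3 and 4), and the search stops as soon as no single removal strictly improves accuracy (step 5).

```python
def backward_eliminate(features, evaluate):
    """Greedy backward feature elimination.

    features: iterable of feature names.
    evaluate: hypothetical callable mapping a frozenset of features to an
              accuracy score (e.g. bootstrap accuracy of a trained model).
    Returns (selected_features, best_score).
    """
    current = frozenset(features)
    best_score = evaluate(current)  # step 1: all x features
    while len(current) > 1:
        # Step 2: score every combination with exactly one feature removed.
        candidates = [(evaluate(current - {f}), f) for f in sorted(current)]
        # Steps 3-4: keep the subset that loses the least (or gains the most).
        score, dropped = max(candidates, key=lambda c: c[0])
        # Step 5: stop when a further reduction no longer improves accuracy.
        if score <= best_score:
            break
        current, best_score = current - {dropped}, score
    return current, best_score
```

Each round costs one evaluation per remaining feature, so the whole search needs at most x(x+1)/2 model fits, far fewer than the 2^x subsets an exhaustive search would test.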

The resulting optimal set of edge features is:

- Min Packet Size

- Max Packet Size

- Min Packet Rate

- Max Packet Rate

- Symmetry

In the example above, tests 2-5 show that removing Feature 1 or Feature 4 significantly reduces bootstrap accuracy, so keeping these features is essential for high accuracy. Tests 6-8 therefore retain Feature 1 (whose removal caused the largest decrease in accuracy) and compare different two-feature combinations; the results confirm that Features 1 and 4 are the most important features for achieving the highest bootstrap accuracy.

3. Tree Based Classifier

The project uses a Random Forest classifier in R so that results can be directly compared with those from the 2014 project.

There are two main parameters to optimise the Random Forest algorithm:

ntree ≔ Number of decision trees to grow

nodesize ≔ Minimum size of the terminal nodes (larger values grow smaller, shallower trees)

The optimal nodesize was found by an iterative brute-force method.

Assume N features are used.

Try nodesize = √N.

Then try nodesize = 2k·√N and nodesize = √N/(2k), for k = 1, 2, 3, …

The value which led to the highest classification accuracy in the tests was chosen as the optimal parameter value.
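A sketch of this nodesize search follows. Since the text leaves the range of k open and nodesize must be a whole number of samples, the cap `k_max = 3` and the rounding to the nearest integer (with a floor of 1) are assumptions. `accuracy_for` is a hypothetical callable that trains the forest with a given nodesize and returns its classification accuracy.

```python
import math


def nodesize_candidates(n_features, k_max=3):
    """Candidate nodesize values: sqrt(N), 2k*sqrt(N) and sqrt(N)/(2k)."""
    base = math.sqrt(n_features)
    values = [base]
    for k in range(1, k_max + 1):  # k = 1, 2, ..., k_max (assumed cap)
        values.append(2 * k * base)
        values.append(base / (2 * k))
    # nodesize counts samples, so round to integers and keep values >= 1.
    return sorted({max(1, round(v)) for v in values})


def pick_nodesize(n_features, accuracy_for, k_max=3):
    """Return the candidate with the highest accuracy (first one on ties)."""
    return max(nodesize_candidates(n_features, k_max), key=accuracy_for)
```

For example, with N = 16 features the candidates are 1, 2, 4, 8, 16 and 24.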

To settle on a value for ntree, an iterative process was again used: starting from an arbitrarily small value, the out-of-bag (OOB) error rate was observed as ntree increased, and ntree was chosen at the point where the OOB error rate plateaus. (The OOB error rate is the prediction error measured on the training samples that each tree did not see during training, its out-of-bag samples, which gives an internal estimate of the classifier's error without a separate test set.)
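One way to make "the point where the OOB error plateaus" concrete is sketched below. The window length and tolerance are assumptions rather than values from the project. The input is the OOB error as a function of the number of trees; in R's randomForest package, a fitted classification forest stores this curve in the "OOB" column of its `err.rate` component.

```python
def plateau_ntree(oob_errors, tol=0.001, window=5):
    """Smallest ntree after which the OOB error stays within `tol`.

    oob_errors[i] is the OOB error rate of a forest with i + 1 trees.
    The plateau is declared at the first ntree whose error and the next
    `window` errors all lie within a band of width `tol` (assumed rule).
    """
    for i in range(len(oob_errors) - window):
        ahead = oob_errors[i : i + window + 1]
        if max(ahead) - min(ahead) <= tol:
            return i + 1
    return len(oob_errors)  # no plateau found: use the largest ntree tried
```

A wider window or tighter tolerance makes the rule more conservative, trading extra trees (and training time) for more confidence that the error has stopped falling.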

The parameter values to be used for the bootstrap classifier are as follows:

ntree = 20

nodesize = 10

Stage 2: Calibration

The second stage of the system uses a graph-based approach to extract spatial traffic statistics from the initial application label predictions. These spatial traffic statistics are then used as features in a second round of ML classification, effectively calibrating the initial classification.

1. Creating Vertex Histograms

In this step, spatial traffic statistics are extracted and stored as a histogram attribute on each node.

Assume N application classes. Number each class 1 to N.

Let deg_k(v) be the degree of a node v only counting edges with application class k, k ∈ [1,N].

Let deg(v) be the total degree of a node v.

Let $\hat{\deg}_k(v)$ be the normalised degree, $\hat{\deg}_k(v) = \deg_k(v) / \deg(v)$.

For each node v in the TAG:

Create a histogram h with N elements, such that h[k] = $\hat{\deg}_k(v)$

Assign this histogram as a node attribute to v
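The histogram construction can be sketched as follows, assuming the TAG edges are given as hypothetical (u, v, k) tuples where k is the application class predicted for that edge by the bootstrap classifier. Python lists are 0-indexed, so class k is stored at index k - 1. Every node that appears in an edge has deg(v) ≥ 1, so the division is safe.

```python
from collections import defaultdict


def vertex_histograms(edges, n_classes):
    """Map each node to its normalised per-class degree histogram.

    edges: iterable of (u, v, k), with predicted class k in 1..n_classes.
    Returns {node: [deg_1(v)/deg(v), ..., deg_N(v)/deg(v)]}.
    """
    counts = defaultdict(lambda: [0] * n_classes)  # deg_k(v) per node
    for u, v, k in edges:
        # An undirected edge adds to the degree of both endpoints.
        counts[u][k - 1] += 1
        counts[v][k - 1] += 1

    histograms = {}
    for node, per_class in counts.items():
        total = sum(per_class)  # deg(v), the total degree
        histograms[node] = [c / total for c in per_class]
    return histograms
```

For example, with N = 2, a node with one edge labelled class 1 and two edges labelled class 2 gets the histogram [1/3, 2/3].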

2. Creating Spatial Traffic Statistics Features

The spatial traffic statistics to be used as features in the calibration stage are extracted from the vertex histograms as follows:

Assume N application classes.

For each edge e = (u,v) in the TAG:

Let h_u be the histogram of vertex u, and h_v the histogram of vertex v.

Assign edge attributes x_1, x_2, …, x_N, x_deg, y_1, y_2, …, y_N, y_deg to e such that:

x_k = h_u[k], y_k = h_v[k], k ∈ [1,N]

x_deg = deg(u), y_deg = deg(v)

For N application classes, this will result in 2*(N+1) features to be used for calibration classification.
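Assembling the calibration features from the histograms might look like the sketch below. It assumes the histograms and total degrees have already been computed (as hypothetical dicts keyed by node) and that each undirected edge is stored as an ordered pair (u, v), since that order decides which endpoint supplies the x features and which supplies the y features.

```python
def spatial_features(edges, histograms, degree):
    """Build one calibration feature row per edge.

    edges: iterable of (u, v) node pairs.
    histograms: {node: [h[1], ..., h[N]]} normalised degree histograms.
    degree: {node: deg(node)} total degrees.
    Each row is [x_1..x_N, x_deg, y_1..y_N, y_deg], i.e. 2 * (N + 1) values.
    """
    rows = []
    for u, v in edges:
        x = list(histograms[u]) + [degree[u]]  # x_k = h_u[k], x_deg = deg(u)
        y = list(histograms[v]) + [degree[v]]  # y_k = h_v[k], y_deg = deg(v)
        rows.append(x + y)
    return rows
```

Each row can then be paired with the edge's label to train the calibration classifier.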