Difference between revisions of "Projects:2017s1-111 OTHR Alternative Computing Architecture"

(Added Images for Equations) |

(Added CPU Experiments) |

||

| Line 2: | Line 2: | ||

== Project Team == | == Project Team == | ||

Daniel Lawson | Daniel Lawson | ||

| − | |||

== Supervisors == | == Supervisors == | ||

| Line 41: | Line 40: | ||

[[File:FFT Algorithm.JPG]] | [[File:FFT Algorithm.JPG]] | ||

| + | |||

| + | == CPU Experimentation == | ||

| + | '''Devices Available''': Intel i5-3570k (Ivy Bridge) and Intel Xeon E5-2698 | ||

| + | '''Tools Used''': Compiler directives in the form of OpenACC through the PGI Compiler and OpenMP through the Intel Compiler. | ||

| + | |||

| + | <br> | ||

| + | '''Experiment 1: Choice of Compiler with No Directives used'''<br> | ||

| + | [[File:Compilers.JPG|200px|thumb|left|Effect of Compiler on Run Time]] | ||

| + | A number of compilers were chosen and compared using the single-core implementation of the reference algorithm. The following was found:<br> | ||

| + | 1. With no optimisation the best performer was the PGI compiler while the worst was Intel. | ||

| + | 2. As optimisation levels increased, this difference shrank until the run times were nearly identical. | ||

| + | |||

| + | <br> | ||

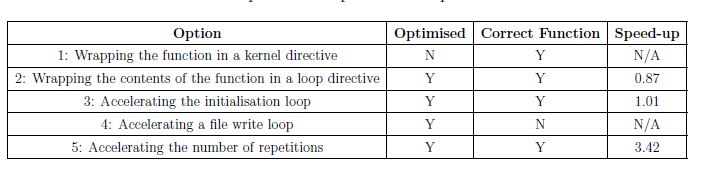

| + | '''Experiment 2: Effect of Compiler Directives'''<br> | ||

| + | Using OpenACC compiler directives through the PGI compiler, a number of options for where to accelerate the code were tested, with differing results, as shown in the table below: | ||

| + | [[File:CompilerSummary.JPG]] | ||

| + | |||

| + | Based on these results the following observations were made: | ||

| + | 1. Race conditions were discovered when trying to parallelise arrays over multiple simulations or at the output text file. | ||

| + | 2. Adding directives to small loops can decrease overall performance due to overhead in setting up memory. | ||

| + | 3. Large code blocks correctly parallelised can be sped up by a large amount. | ||

| + | |||

| + | <br> | ||

| + | '''Experiment 3: Effect of the number of Cores'''<br> | ||

| + | The final experiment evaluated the effect of the number of cores. The compiler can be set to target any number of cores on the system, with different latency results. As the number of cores was increased up to 4, the total latency decreased. Beyond this point, however, the increased overhead started to dominate the program and performance decreased. | ||

| + | [[File:Cores vs Runtime.png|200px|thumb|right|Effect of Number of Cores on Runtime]] | ||

| + | |||

| + | == GPU Experimentation == | ||

| + | == FPGA Experimentation == | ||

| + | == ASIC Experimentation == | ||

| + | |||

| + | == Comparison of Final Architectures == | ||

| + | |||

| + | == General Case Mapping Insights == | ||

| + | |||

| + | == Lessons Learnt == | ||

| + | |||

| + | == Future Work == | ||

Revision as of 15:00, 29 October 2017


Project Team

Daniel Lawson

Supervisors

Dr Braden Phillips

Mr Shane Breandler BAE Systems (External)

Abstract

The aim of this project was to investigate the four computer architectures most commonly used for signal processing and radar to determine which would be most suitable for use in the JORN Phase 6 upgrade using auto code generation tools.

The architectures chosen were CPU, GPU, FPGA and ASIC, each compared across a range of metrics including run time, utilisation, power, thermal and cost, based on previous work in computer architecture comparisons. Each system was explored for feasibility through experimentation on a wave propagation algorithm to evaluate device parameters and the measurement tools. Feasible architectures were then compared against each other using a second algorithm representative of the expected workload. A GPU-based system produced the best results, owing to its high performance on large data sets and strong developer support through tools and testing.

As the project involved mapping a single algorithm to a number of different architectures, insight into the general process of high-level synthesis was also gained. It was found that even architectures designed for maximum portability required some manual rewriting of code to take full advantage of parallelism while still producing correct output.

Introduction

As part of the latest (Phase 6) upgrade to the Jindalee Operational Radar Network (JORN) project, BAE Systems Australia expects that the demand for radar simulation algorithms will increase over the network's lifetime. In anticipation of this increased demand, this project investigates the feasibility of a range of different architectures to identify the most suitable architecture for radar simulation. The methods and results from this project will provide a basis for strategic decisions on the JORN 6 upgrade, as well as a potential design guide for future algorithms.

Project Constraints and Assumptions

It was noted that JORN already possesses a large pre-existing code base designed for a CPU architecture. To reduce the engineering cost of mapping the entire code base to a new architecture, automatic code generation tools were used. A consequence of this constraint is that the results may change if optimal implementations for each architecture were designed manually.

Methodology

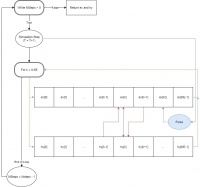

1) An initial reference algorithm was mapped to each of the four architectures to show that it was possible to generate an implementation on that system with the tools available.

2) The system was then explored, and the effect of different device parameters was tested experimentally to determine optimal settings.

3) If the architecture was deemed feasible for use with JORN, the second algorithm, which represents the expected system workload, was mapped and data recorded for latency, utilisation, power, thermal and cost.

4) The results for each architecture were then compared against one another and plots produced.

From these results and the observations made during implementation, the architecture most suitable for JORN was determined.

Reference Algorithm: Wave Simulation

The initial reference algorithm simulates a finite array for a specified number of time steps, implementing the wave equations for a Gaussian pulse source. It was chosen for three reasons: it is simple, with only a few operations applied to a large data set; the array size and the number of simulation steps can both be changed; and the application is somewhat related to radar.

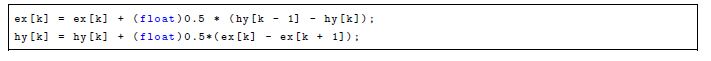
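As a rough illustration of this kind of algorithm, the sketch below steps a one-dimensional field forward in time with a Gaussian pulse injected at one cell. It assumes a scalar wave equation, a leapfrog (central difference) update and fixed zero-valued ends; the project's actual equations are those in the figure and may differ. The function name `simulate_wave` and all of its parameters are hypothetical.

```python
import math


def simulate_wave(n_cells=200, n_steps=300, courant=0.5,
                  source_cell=100, pulse_centre=30.0, pulse_width=10.0):
    """Leapfrog update of a 1D scalar wave field with fixed (zero) ends.

    Assumed model, not taken from the project: u_tt = c^2 u_xx with a
    Gaussian-in-time source added at a single cell.
    """
    c2 = courant * courant        # (c*dt/dx)^2; keep courant <= 1 for stability
    prev = [0.0] * n_cells        # field at time step t-1
    curr = [0.0] * n_cells        # field at time step t
    for step in range(n_steps):
        nxt = [0.0] * n_cells     # the two end cells are left at zero
        for i in range(1, n_cells - 1):
            laplacian = curr[i - 1] - 2.0 * curr[i] + curr[i + 1]
            nxt[i] = 2.0 * curr[i] - prev[i] + c2 * laplacian
        # Inject the Gaussian pulse at the source cell.
        nxt[source_cell] += math.exp(-((step - pulse_centre) / pulse_width) ** 2)
        prev, curr = curr, nxt
    return curr
```

The inner loop over `i` performs only a handful of arithmetic operations per cell and each cell of `nxt` depends only on the previous two time steps, which is why a loop of this shape is a natural candidate for parallelisation, whereas the outer loop over time steps must run in order.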

Comparison Algorithm: Cooley-Tukey FFT

It was known that the JORN system uses a polyphase channeliser to down-convert the received signal, filter out noise and extract the relevant data from each channel in one system block. As the full channeliser could not be implemented within the time available, a portion of it, the FFT block, was used instead. The Cooley-Tukey algorithm is a well-known standard that computes an N-point complex discrete Fourier transform in O(N log N) time, rather than the O(N^2) required by direct evaluation of the transform. Both a recursive implementation based on divide and conquer and an in-place bit-shifting implementation were generated, with the recursive version preferred for its intuitiveness.
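The recursive divide-and-conquer form can be sketched as follows. This is a minimal radix-2 decimation-in-time version that assumes the input length is a power of two; it is not the project's generated code, and the names `fft_recursive` and `dft_direct` are chosen here for illustration. The direct O(N^2) transform is included only as a reference to check against.

```python
import cmath


def fft_recursive(x):
    """Radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return list(x)
    even = fft_recursive(x[0::2])   # transform of even-indexed samples
    odd = fft_recursive(x[1::2])    # transform of odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def dft_direct(x):
    """Direct O(N^2) evaluation of the DFT, used as a reference."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]
```

Each level of recursion does O(N) work combining the two half-size transforms, and there are log2(N) levels, which is where the O(N log N) cost comes from.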

CPU Experimentation

Devices Available: Intel i5-3570k (Ivy Bridge) and Intel Xeon E5-2698

Tools Used: Compiler directives in the form of OpenACC through the PGI Compiler and OpenMP through the Intel Compiler.

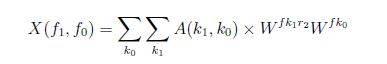

Experiment 1: Choice of Compiler with No Directives used

A number of compilers were chosen and compared using the single-core implementation of the reference algorithm. The following was found:

1. With no optimisation the best performer was the PGI compiler while the worst was Intel.

2. As optimisation levels increased, this difference shrank until the run times were nearly identical.

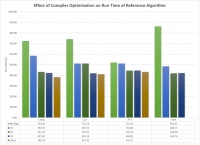

Experiment 2: Effect of Compiler Directives

Using OpenACC compiler directives through the PGI compiler, a number of options for where to accelerate the code were tested, with differing results, as shown in the table below:

Based on these results the following observations were made:

1. Race conditions were discovered when trying to parallelise arrays over multiple simulations or at the output text file.

2. Adding directives to small loops can decrease overall performance due to the overhead of setting up memory.

3. Large code blocks that are correctly parallelised can be sped up by a large amount.
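The first observation can be illustrated with a general pattern for avoiding such races: give each parallel task its own private array and leave output formatting to a single thread afterwards. The sketch below uses Python threads purely as a stand-in for the directive-based code, which is not shown in this report; `run_simulation` and `run_all` are hypothetical names and the simulation body is a placeholder.

```python
from concurrent.futures import ThreadPoolExecutor


def run_simulation(sim_id, n_cells=8):
    """Hypothetical stand-in for one simulation; it owns its own array."""
    field = [0.0] * n_cells
    for i in range(n_cells):
        field[i] = float(sim_id * n_cells + i)   # placeholder computation
    return field


def run_all(n_sims, workers=4):
    # Each task builds a private array, so no two workers ever write to
    # the same memory location.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(run_simulation, range(n_sims)))
    # Output lines are produced by one thread, in simulation order,
    # rather than by the workers competing for the same file.
    lines = [" ".join(f"{v:.1f}" for v in field) for field in results]
    return results, lines
```

In directive-based code the same idea is usually expressed by marking per-iteration arrays as private and keeping file output outside the parallel region.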

Experiment 3: Effect of the number of Cores

The final experiment evaluated the effect of the number of cores. The compiler can be set to target any number of cores on the system, with different latency results. As the number of cores was increased up to 4, the total latency decreased. Beyond this point, however, the increased overhead started to dominate the program and performance decreased.
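One simple way to see why run time can fall and then rise again is a toy cost model in which the parallel work is divided across cores while a coordination overhead grows with the core count. The sketch below is illustrative only: the linear overhead term is an assumption, and the default values are hypothetical numbers chosen so that the minimum falls at 4 cores, not measurements from this project.

```python
def modelled_runtime(cores, serial=2.0, parallel=16.0, overhead_per_core=1.0):
    """Toy model: serial part + divided parallel part + per-core overhead.

    All default values are hypothetical and chosen for illustration.
    """
    return serial + parallel / cores + overhead_per_core * cores


def best_core_count(max_cores, **kwargs):
    """Core count in 1..max_cores with the lowest modelled run time."""
    return min(range(1, max_cores + 1),
               key=lambda p: modelled_runtime(p, **kwargs))
```

With these defaults the modelled run time is 19.0 on 1 core, 12.0 on 2, 10.0 on 4 and 12.0 again on 8, so `best_core_count(16)` returns 4. Under this model the optimum sits near the square root of the parallel work divided by the per-core overhead, so a larger problem would be expected to benefit from more cores.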