Projects:2017s1-103 Improving Usability and User Interaction with KALDI Open-Source Speech Recogniser

Summary: KALDI speech recognition software is designed for use by researchers and, as a result, is not user friendly. An earlier project evaluating the performance of KALDI demonstrated, through a proof-of-concept, that KALDI can be operated via a graphical user interface. This project builds upon the proof-of-concept with a focus on usability, enabling non-technical users to use KALDI to decode speech from both pre-recorded audio and live audio from a recording device, using models of their choosing.

Project Team

Students

- George Mao (usability design, implementation and evaluation)

- Vinil Chukkapally (live decoding investigation and integration)

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Project Context

This project follows from a 2015 project which examined the performance of the KALDI speech recognition toolkit, with the support of DST Group.

What is KALDI?

KALDI is a free and open-source software toolkit for automatic speech recognition. It is designed for speech recognition researchers [1], and so requires speech recognition knowledge and familiarity with scripting to operate. As such, it is difficult for those without such knowledge or familiarity to use.

Previous Work

While the 2015 project did produce a user interface, it was primarily a proof-of-concept. It was not created with usability in mind and so is not particularly user friendly. The "on-line" (live) decoding function of that interface is also limited: it runs for a fixed duration of approximately 60 seconds and gives no choice of models.

Aim of the Project

The aim of this project is to design and develop a graphical user interface (GUI) that gives users access to the functionality of KALDI without requiring knowledge of scripting in a language like Bash, or detailed knowledge of the internal algorithms of KALDI.

Furthermore, attempts will be made to transcribe live speech continuously, with this functionality operable from the interface.

Background

Acoustic and Language Models

Speech can be viewed as language that is "coded" into sounds, and thus the transcription of speech can be considered as "decoding" the audio. In speech recognition, this "code" is characterised by acoustic and language models. Acoustic models describe the words or sounds of a language by their acoustic features (properties). Language models define the structure of a language, using statistics on the probability that a sequence of words forms a valid sentence. By using appropriate acoustic and language models, a speech recogniser can, in theory, decode any combination of language and speaker.
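The roles of the two models can be made concrete with the standard statistical formulation of speech recognition. The symbols are introduced here for illustration and do not come from the project: $O$ is the sequence of acoustic observations extracted from the audio and $W$ is a candidate word sequence.

$$
\hat{W} = \arg\max_{W} P(W \mid O) = \arg\max_{W} P(O \mid W)\, P(W)
$$

Here $P(O \mid W)$ is scored by the acoustic model and $P(W)$ by the language model, so "decoding" amounts to searching for the word sequence that best balances the two. The second equality follows from Bayes' rule, since $P(O)$ does not depend on $W$.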

Usability

Usability is commonly described as how easy a system is to use by its target demographic in its intended operating environment [3]. In general, usability engineering models describe measures of usability in terms of [3][4][5]:

- Learnability (ease of learning).

- Efficiency of use after learning.

- Ability for infrequent use without needing to relearn.

- The frequency and severity of user errors.

- Subjective user satisfaction.

It then follows that a system with high usability is one that is easy to learn, efficient to use once learned, intuitive and subjectively satisfying to the user, and that prevents user errors.

Principles for developing usable systems are well-established, and the project design is informed by the following usability principles [6]:

- The interface should give the user visual feedback on the system state in reasonable time.

- The interface should provide a parallel between the system and the real world by presenting information in a natural and logical order.

- Language within the interface should be consistent, and platform conventions followed.

- The interface should minimise the cognitive burden on the user by encouraging recognition rather than recollection.

- The interface should not present irrelevant information.

- The interface should assist the user in error recognition and recovery by using familiar and constructive language, and by indicating problems precisely.

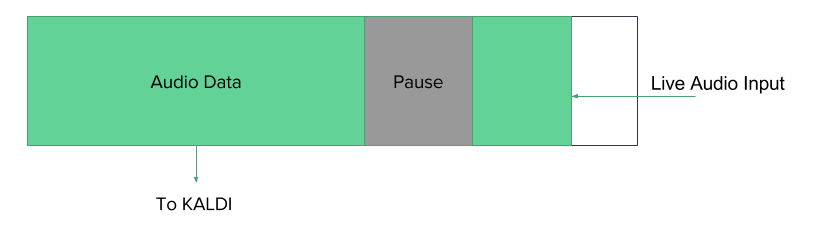

Live Decoding

The approach towards live decoding is to use an audio input buffer to capture incoming data from the active audio recording device. Voice activity detection is used to check for periods of silence, and a sufficiently long silence is treated as the end of a sentence. Audio is then sent to KALDI for decoding one sentence at a time.
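The segmentation step can be illustrated with a minimal sketch. It assumes a simple energy threshold as the voice activity detector, which is a stand-in: the source does not say which detection method the project used. The function names, threshold and silence length are likewise illustrative assumptions.

```python
from typing import Iterable, Iterator, List

Frame = List[float]  # one short block of audio samples in the range [-1.0, 1.0]


def frame_energy(frame: Frame) -> float:
    """Mean squared amplitude of a frame (0.0 for an empty frame)."""
    return sum(s * s for s in frame) / len(frame) if frame else 0.0


def split_sentences(frames: Iterable[Frame],
                    energy_threshold: float = 1e-3,
                    min_silence_frames: int = 3) -> Iterator[List[Frame]]:
    """Group frames into sentences, ending one after a long enough silence.

    Assumption: a frame counts as speech when its energy reaches the threshold.
    """
    buffer: List[Frame] = []
    silent_run = 0
    heard_speech = False
    for frame in frames:
        if frame_energy(frame) >= energy_threshold:
            heard_speech = True
            silent_run = 0
            buffer.append(frame)
        elif heard_speech:
            # Short pauses stay inside the sentence; long ones end it.
            silent_run += 1
            buffer.append(frame)
            if silent_run >= min_silence_frames:
                yield buffer[:len(buffer) - silent_run]  # drop trailing silence
                buffer, silent_run, heard_speech = [], 0, False
        # Silence before any speech is discarded.
    if heard_speech:
        yield buffer[:len(buffer) - silent_run]


loud, quiet = [0.5] * 4, [0.0] * 4
stream = [quiet, loud, loud, quiet, loud, quiet, quiet, quiet, loud, quiet]
for sentence in split_sentences(stream):
    print(len(sentence), "frames would be sent for decoding")
```

Each yielded group of frames corresponds to one per-sentence hand-off to the recogniser. A real system would read frames from the recording device rather than from a list.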

System Approach

The approach used towards the development of the user interface is to have the interface act as a configuration "front-end" for the user. The interface constructs an appropriate command to execute KALDI scripts based on user input.

This approach offers the advantage of cleanly separated layers of abstraction: the interface is concerned with the interface-scripting level, and the live decoding efforts with the script-KALDI level. It also allows operational functionality to be changed independently of the interface and reduces the learning effort required for design and implementation. Furthermore, because the interface operates KALDI in the same way a technical user would, there is a stronger parallel between the system and the use case.
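As a rough illustration of the front-end approach, the sketch below turns user selections into a command and hands it to a script. The script name `decode_files.sh`, the option names and the function names are hypothetical; the source does not describe the project's actual scripts or their arguments.

```python
import shlex
import subprocess
from pathlib import PurePosixPath
from typing import List


def build_decode_command(kaldi_dir: str, audio_dir: str,
                         acoustic_model: str, language_model: str,
                         script: str = "decode_files.sh") -> List[str]:
    """Translate interface selections into an argument list.

    The script and option names are placeholders, not real KALDI options.
    """
    script_path = PurePosixPath(kaldi_dir) / script
    return [
        "bash", str(script_path),
        "--audio-dir", audio_dir,
        "--acoustic-model", acoustic_model,
        "--language-model", language_model,
    ]


def run_decode(command: List[str]) -> subprocess.CompletedProcess:
    """Run the command and capture its output, e.g. for a console view."""
    return subprocess.run(command, capture_output=True, text=True, check=False)


command = build_decode_command("/opt/kaldi", "/data/audio",
                               "/models/am", "/models/lm")
print(shlex.join(command))  # the line a technical user would type by hand
```

Building the command as a list rather than a single string avoids quoting problems when directory names contain spaces. It also keeps the interface layer unaware of what the script does internally, which matches the separation described above.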

Usability Tests

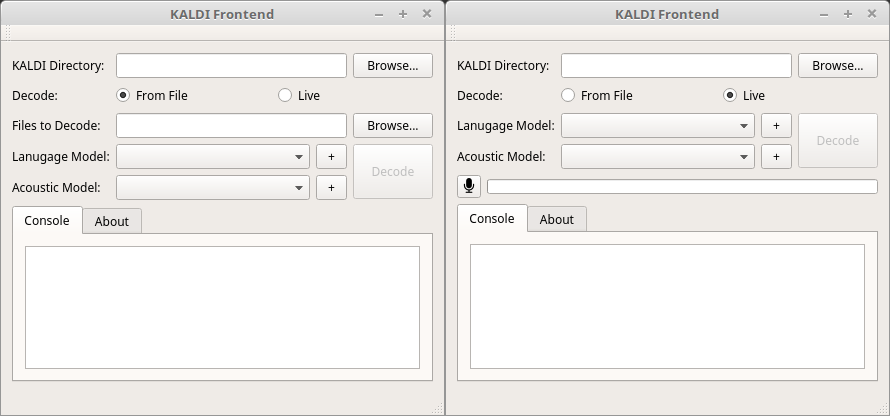

State of the Interface

The interface during usability testing appeared as shown below. It was capable of decoding audio files from a directory containing pre-recorded audio. Live decoding functionality had not been implemented in software at that stage, and the visible elements for it were placeholders.

Methodology

Usability of the interface was evaluated through the use of feature inspection, cognitive walkthrough and heuristic analysis.

- Feature inspection is performed by listing the sequence of features of a system used to perform typical tasks. Long, cumbersome or unnatural steps are checked for. [7]

- In a cognitive walkthrough, the problem solving process of a user is simulated and checked to see if it can be assumed to lead to the next correct action at each step. [2][7]

- Heuristic analysis involves evaluating the usability of a system using a set of guidelines. [2][7][8]

Feature Inspection: Features

The features used to accomplish typical tasks were identified as:

- Line edit elements paired with browse buttons to add KALDI and decoding directories

- Radio buttons to select decoding method

- Combo boxes paired with buttons to add language/acoustic models

- The decoding button and microphone on/off controls

Cognitive Walkthrough: Simulated Use Case

The cognitive walkthrough was performed as a run-through of a typical use case with the client. The steps presented during the walkthrough, as a simulation of the use case for decoding from a directory, were:

- The user specifies a directory for the KALDI installation by using the browse function.

- The user selects a decoding method (decoding from files).

- The user switches between decoding methods.

- The user adds a language model using the browse function.

- The user selects an acoustic model that has been added previously.

- The user engages the decoding process by pressing the decode button.

- The user edits the transcript presented by the interface after decoding has completed.

Heuristic Analysis: Guidelines

The guidelines used for heuristic analysis were defined based on the outlined usability principles:

- Provide relevant visual feedback, where possible.

- Interface controls should respond to user interaction in an expected manner.

- The user should be aware of the actions of the system.

- Present information to the user where applicable to enable learning of interface usage.

- The functionality of interface elements should be unambiguous.

- The user should be prevented from performing actions that are not relevant to the system.

Summary of Results

The results of usability testing are summarised in the table below, which lists each identified usability issue, the method that identified it, a description of the problem and the expected behaviour. The identified usability issues are not considered severe, and during the cognitive walkthrough the interface was received positively by the client, indicating a reasonably high degree of usability. Each expected behaviour was implemented in the final interface, in at least one of the suggested forms.

| Issue | Testing Method | Problem Description | Expected Behaviour |
|---|---|---|---|
| Repetitive usage in common interface elements | Feature Inspection | Items added using browse buttons are not retained when the interface is closed, requiring the user to add them to the interface manually each time. This makes usage cumbersome for the user, especially when certain values are not expected to change between sessions. | The interface should retain certain values (KALDI directory, LM/AM) so that the user does not have to repeat the process every time the interface is started. |
| Potential confusion when changing decoding methods | Cognitive Walkthrough | The interface hides irrelevant elements when the user changes between decoding methods. Due to the arrangement of visual elements, common elements visibly move in the hiding process, possibly misleading the user to believe that they have also been hidden. | Dynamic interface elements should be moved, such that there is less visible change when the user changes the decoding method. |
| Potential ambiguity in microphone mute/unmute | Cognitive Walkthrough | The button for the microphone mute/unmute function changes icons to indicate the current status. These icons are black-and-white, which may make it difficult for the user to discern the state of the microphone. | Use colour indication for the icons (e.g. green for unmuted, red for muted), or use an interface element that is less ambiguous. |
| Lack of signifiers for adding language and acoustic models | Cognitive Walkthrough, Heuristic Analysis | The buttons used to add language and acoustic models to the interface do not give a strong enough indication of their purpose. | Add tooltips to elements of the interface, so that the interface provides more information for the user. |
| Lack of visibility for user edits in the transcript | Cognitive Walkthrough | When editing the transcript, user input is inserted in the same format as the produced text. The user may find it difficult to see parts that were edited. | Make user-edited text a different colour, such as green, to increase the visibility of edits. |
| Lack of access to decoded files | Cognitive Walkthrough | The user may want to play back audio files that have been transcribed to verify the accuracy of the transcription and make corrections. There is no method within the interface to enable convenient access to the files. | Make file names in the transcript clickable links, such that they open the chosen file from within the interface, or provide an interface element to open the directory of files so that the user may locate and play back the file in the native file browser. |
| Visual feedback when decoding from file depends on the user manually switching tabs | Heuristic Analysis | When the user initiates decoding from file, script output is sent to the console tab. The interface does not change tabs, so the user may not be able to see the response. | The interface should switch to the console tab when decoding is initiated, so that the user is able to see the output of the decoding script. Once decoding completes, the interface should switch back to the transcript. |
| Interface options can be changed while decoding is in progress | Heuristic Analysis | While the software is decoding, the user is able to interact with interface options, such as decoding mode and decoding directory. This may cause confusion during use, as interface elements change based on decoding type. | The user should not be able to modify the interface options when decoding is in progress. Visual options should be kept consistent with the current decoding job. |

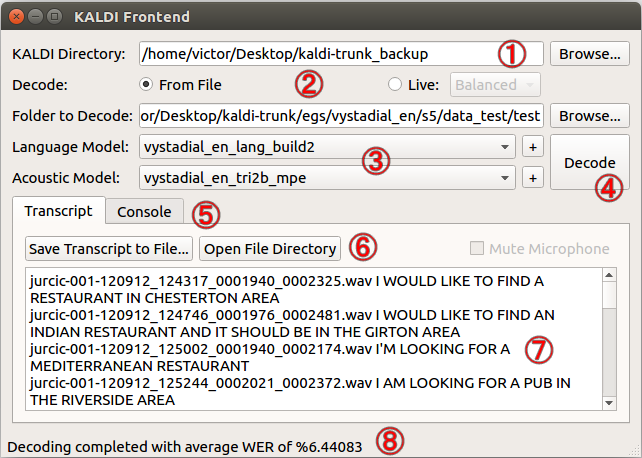

Project Result

The project produced a GUI based on the 2015 work, but with usability as a major design consideration. The interface is capable of decoding both pre-recorded audio and live audio from an input device. The user is able to select their choice of language and acoustic models for both decoding methods, and live decoding runs indefinitely until the user manually stops the decoding process.

The GUI features:

1. Logical order of operations

   - The interface presents its options such that in its intended use case, the user starts with the controls at the top of the interface and goes downwards.

   - Once an option is selected, there is minimal need to use controls presented above.

   - This provides a natural workflow to the user by presenting a parallel between the interface and the real world.

2. Visual indication of option availability

   - The interface visibly disables or hides options that are unavailable for the user, preventing confusion.

   - In the case shown, the option to select a live decoding profile is disabled when the software is set to decode from files.

   - When decoding live, the user is able to choose a trade-off between decoding speed and accuracy, using pre-defined decoding profiles (fast/balanced/accurate).

3. Truncation of model names

   - Language and acoustic model directories are automatically shortened to the name of their folders when added to the interface.

   - This minimises the cognitive load on the user by presenting only the information useful for identifying the models.

   - It also allows the user to use model names of their choosing by naming the model directories.

4. Error prevention when engaging decoding

   - The user is prevented from starting the decoding process until all necessary information is supplied to the interface (disabled button is not shown).

   - The interface also performs a simple check to validate the information, reducing the possibility for user errors resulting from invalid information supplied to the software.

5. Access to console

   - The interface provides access to a console tab, which contains outputs from the executed scripts that would normally appear when run in the UNIX terminal.

   - This provides optional information for advanced users as well as debugging information, which can assist in the resolution of errors.

6. Option to save results

   - A method of saving transcription results is presented from within the interface, reducing user effort and memory load.

7. Editable transcript

   - Transcribed results are presented from within the interface to the user once decoding is completed.

   - The results are editable by the user, and edits are reflected in the saved transcript when (6) is used.

   - This reduces the effort required from the user in correcting transcription results.

8. Status bar

   - The current state of the interface is reported to the user by messages shown in the status bar.

   - This informs the user of the actions of the interface by providing visual feedback.
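Two of the behaviours in the list above, shortening model directories to their folder names and checking inputs before decoding can start, can be sketched as follows. This is an illustration only: the field names, the function names and the rule that every field must be an existing directory are assumptions, since the source does not describe the actual check.

```python
import os
from pathlib import PurePosixPath
from typing import Callable, Dict, List

# Hypothetical names for the inputs needed to decode from files.
REQUIRED_FIELDS = ("kaldi_dir", "audio_dir", "acoustic_model", "language_model")


def display_name(model_dir: str) -> str:
    """Shorten a model directory to its folder name for display."""
    return PurePosixPath(model_dir).name


def missing_or_invalid(settings: Dict[str, str],
                       is_dir: Callable[[str], bool] = os.path.isdir) -> List[str]:
    """Return the fields that are empty or do not point at a directory."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = settings.get(field, "").strip()
        if not value or not is_dir(value):
            problems.append(field)
    return problems


settings = {
    "kaldi_dir": "/opt/kaldi",
    "audio_dir": "",
    "acoustic_model": "/home/user/models/english_am/",
    "language_model": "/home/user/models/english_lm/",
}
print(display_name(settings["acoustic_model"]))
# The decode button would stay disabled while this list is non-empty.
print(missing_or_invalid(settings, is_dir=lambda path: True))
```

Passing the directory check in as a parameter keeps the example runnable without a KALDI installation. An interface would use the default `os.path.isdir` and could also report the returned field names in its status bar.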

Conclusions

The interface produced by the project builds upon its predecessor with usability as a major design focus. The results of usability testing indicate that, provided the user has properly prepared and trained models, the interface has a reasonably high degree of usability for non-technical users. The tests were small in scale, however, so more extensive usability testing may identify further usability issues.

Live decoding was modified to enable an advanced user to supply custom parameters, increasing flexibility in usage and enabling control over the trade-off between decoding speed and accuracy. This is presented to non-technical users in the form of pre-defined decoding profiles.

The project primarily focused on improving the usability of the decoding process for non-technical users. Further efforts could be made to enable non-technical users to create, prepare and train their own language and acoustic models. When paired with the software produced by this project, this could enable a user to use KALDI to a greater depth, expanding its accessibility. Furthermore, some usability and live decoding features were not fully explored due to constraints in the project. These features could be improved with additional effort.

References

[1] Kaldi, "About the Kaldi project." [Online]. Available: http://kaldi-asr.org/doc/about.html [Accessed: 13 March 2017]

[2] L. R. Rabiner, B. H. Juang, B. Keith, "Speech recognition: Statistical methods," Encyclopedia of Language & Linguistics, Elsevier, pp. 1-18, 2006.

[3] A. Holzinger, "Usability engineering methods for software developers," in Communications of the ACM 48, no. 1 (2005): pp. 71-74.

[4] J. Nielsen, "The usability engineering life cycle," IEEE Computer 25(3), pp. 12-22, 1992.

[5] J. Nielsen, "Iterative user-interface design," IEEE Computer 26(11), pp. 32-41, 1993.

[6] J. Nielsen, "10 usability heuristics for user interface design," Nielsen Norman Group 1, no. 1 (1995).

[7] J. Nielsen, "Usability inspection methods," in Conference companion on Human factors in computing systems: pp. 413-414. ACM, 1994.

[8] J. Nielsen, "Finding usability problems through heuristic evaluation," in Proceedings of the SIGCHI conference on Human factors in computing systems: pp. 373-380. ACM, 1992.