Projects:2017s1-103 Improving Usability and User Interaction with KALDI Open-Source Speech Recogniser


Project Team

Students

- George Mao (usability design, implementation and evaluation)

- Vinil Chukkapally (live decoding investigation and integration)

Supervisors

- Dr. Said Al-Sarawi

- Dr. Ahmad Hashemi-Sakhtsari (DST Group)

Project Context

This project follows on from a 2015 project which examined the performance of the KALDI speech recognition toolkit, with the support of DST Group.

What is KALDI?

KALDI is a free and open-source software toolkit for automatic speech recognition. It is designed for speech recognition researchers [1], so operating it requires knowledge of speech recognition and familiarity with scripting. As a result, it is difficult to use for anyone without that background.

Previous Work

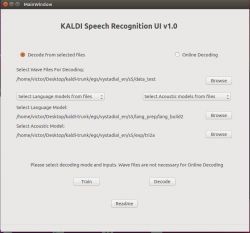

Although the 2015 project produced a user interface, it was primarily a proof of concept: it was not designed with usability in mind and is not particularly user-friendly. Its "on-line" (live) decoding function is also limited, running for a fixed duration of approximately 60 seconds and offering no choice of models.

Aim of the Project

The aim of this project is to design and develop a graphical user interface (GUI) that enables users to access KALDI's functionality without knowing a scripting language such as Bash and without detailed knowledge of KALDI's internal algorithms.

The project will also attempt to transcribe live speech continuously, with this functionality controlled from the interface.

Background

Acoustic and Language Models

Spoken language can be thought of as being "encoded" into sound, so transcribing speech can be considered "decoding" the audio. In speech recognition, this "code" is characterised by acoustic and language models. Acoustic models describe the words or sounds of a language in terms of their acoustic features (properties). Language models describe the structure of a language, giving the probability that a sequence of words forms a valid sentence. In principle, given appropriate acoustic and language models, a speech recogniser can decode speech for any combination of language and speaker.
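The interplay between the two models can be illustrated with the standard statistical formulation of decoding: choose the word sequence W that maximises log P(O|W) + log P(W), where the acoustic model supplies P(O|W) for the observed audio O and the language model supplies P(W). The Python sketch below is a toy under stated assumptions and not how KALDI is implemented (KALDI searches a far larger space using weighted finite-state transducers): the acoustic log-likelihoods are assumed to be already computed for a few hypothetical candidate transcriptions, the language model is a simple add-one-smoothed bigram model, and the names `train_bigram_lm`, `decode` and `lm_weight` are illustrative only.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Train a toy bigram language model with add-one (Laplace) smoothing.

    Returns a function mapping a sentence (space-separated words) to log P(W).
    """
    histories = Counter()  # how often each token appears as the first word of a bigram
    bigrams = Counter()    # how often each (previous, current) pair occurs
    vocab = set()
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(words)
        histories.update(words[:-1])
        bigrams.update(zip(words[:-1], words[1:]))
    vocab_size = len(vocab)

    def log_prob(sentence):
        words = ["<s>"] + sentence.split() + ["</s>"]
        return sum(
            math.log((bigrams[(prev, cur)] + 1) / (histories[prev] + vocab_size))
            for prev, cur in zip(words[:-1], words[1:])
        )

    return log_prob


def decode(acoustic_scores, lm_log_prob, lm_weight=1.0):
    """Return the candidate maximising log P(O|W) + lm_weight * log P(W).

    acoustic_scores maps each candidate transcription W to its (assumed,
    precomputed) acoustic log-likelihood log P(O|W).
    """
    return max(
        acoustic_scores,
        key=lambda w: acoustic_scores[w] + lm_weight * lm_log_prob(w),
    )


if __name__ == "__main__":
    lm = train_bigram_lm(["recognise speech", "recognise speech today"])
    # Hypothetical acoustic scores: the nonsense candidate fits the audio slightly better.
    candidates = {"recognise speech": -10.0, "wreck a nice beach": -9.5}
    print(decode(candidates, lm))                 # recognise speech
    print(decode(candidates, lm, lm_weight=0.0))  # wreck a nice beach
```

With the language model switched off (`lm_weight=0.0`), the acoustically better but meaningless candidate wins; the language model's preference for likely word sequences is what tips the balance back.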

Usability

Usability is commonly described as how easily a system can be used by its target users in its intended operating environment [3]. In general, usability engineering models measure usability in terms of [3][4][5]:

- Learnability (ease of learning)

- Efficiency of use after learning

- Ease of infrequent use without needing to relearn the system

- The frequency and severity of user errors

- Subjective user satisfaction

It follows that a highly usable system is easy to learn, efficient to use once learned, easy to return to after a period of non-use, resistant to user error, and subjectively satisfying to the user.
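Several of these measures can be quantified from usability test sessions. As a minimal sketch, assuming each attempt at a test task is logged with whether it was completed, how long it took and how many errors the participant made (a hypothetical record format, not the project's actual test protocol), the Python code below summarises efficiency and error frequency per task. Ease of learning and of infrequent use would need repeated sessions over time, and subjective satisfaction is normally gathered by questionnaire, so neither is covered here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at one test task (hypothetical record format)."""
    participant: str
    task: str
    completed: bool
    seconds: float
    errors: int


def summarise(attempts):
    """Per task: completion rate, mean time of completed attempts, mean error count."""
    summary = {}
    for task in sorted({a.task for a in attempts}):
        rows = [a for a in attempts if a.task == task]
        completed_times = [a.seconds for a in rows if a.completed]
        summary[task] = {
            "completion_rate": len(completed_times) / len(rows),
            # Efficiency: only successful attempts are timed; None if nobody finished.
            "mean_time_s": mean(completed_times) if completed_times else None,
            # Error frequency: errors per attempt, whether or not it succeeded.
            "mean_errors": mean(a.errors for a in rows),
        }
    return summary
```

Timing only completed attempts keeps abandoned tasks from distorting the efficiency figure, while the completion rate still records them.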

Principles for developing usable systems are well established, and the project design is informed by the following usability principles [6]:

- The interface should give the user visual feedback on the system state within a reasonable time

- The interface should match the real world by presenting information in a natural and logical order

- Language within the interface should be consistent, and platform conventions should be followed

- The interface should minimise the cognitive burden on the user by favouring recognition over recall

- The interface should not present irrelevant information

- The interface should assist the user in error recognition and recovery by using familiar and constructive language, and by indicating problems precisely

Live Decoding

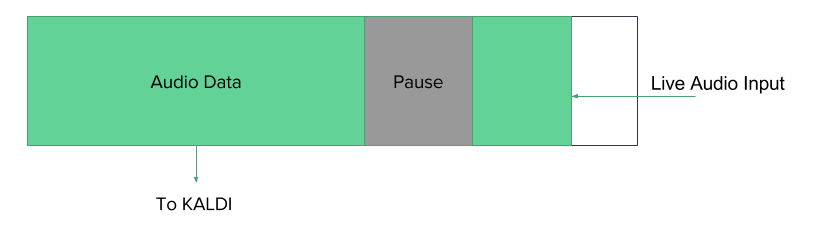

The approach to live decoding is to capture incoming data from the active audio recording device in an audio input buffer. Voice activity detection (VAD) is used to detect periods of silence, and a sufficiently long silence is treated as the end of a sentence. The audio is then sent to KALDI one sentence at a time for decoding.
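The segmentation step can be sketched with a simple energy-based voice activity detector: a frame counts as speech when its root-mean-square (RMS) amplitude exceeds a threshold, and once speech has started, a long enough run of silent frames ends the sentence. The Python sketch below assumes the audio buffer delivers fixed-size frames of floating-point samples in [-1, 1]; the threshold and the silence length (25 frames, which would be 250 ms with 10 ms frames) are illustrative values that would need tuning, and the function names are hypothetical. Practical VAD usually relies on more robust features than raw energy.

```python
import math


def frame_rms(frame):
    """Root-mean-square amplitude of one frame of samples (floats in [-1, 1])."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def segment_sentences(frames, energy_threshold=0.02, min_silence_frames=25):
    """Group a stream of frames into sentences separated by long silences.

    A frame is treated as speech if its RMS exceeds energy_threshold.
    Once speech has started, min_silence_frames consecutive silent frames
    end the sentence. Yields each sentence as a list of frames with the
    trailing silence trimmed; leading silence is never collected.
    """
    sentence, silent_run = [], 0
    for frame in frames:
        if frame_rms(frame) > energy_threshold:
            sentence.append(frame)
            silent_run = 0
        elif sentence:
            # Keep short pauses inside the sentence, but count them.
            sentence.append(frame)
            silent_run += 1
            if silent_run >= min_silence_frames:
                yield sentence[:-silent_run]
                sentence, silent_run = [], 0
    if sentence:
        # Flush whatever speech remains when the stream ends.
        yield sentence[:len(sentence) - silent_run]
```

Because `segment_sentences` is a generator, it can consume frames from a live stream as they arrive; each yielded sentence could then be written to an audio file and passed to KALDI for decoding.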

System Approach

The user interface is developed as a configuration "front-end" for the user: based on the user's input, it constructs the appropriate command to execute KALDI scripts.

This approach cleanly separates the layers of abstraction: the interface work is concerned with the interface-to-script level, and the live decoding work with the script-to-KALDI level. It also allows operational functionality to be changed independently of the interface, and reduces the learning effort required for design and implementation. Furthermore, because the interface drives KALDI in the same way a technical user would, the system closely parallels the real use case.
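The front-end idea can be sketched in Python: the interface turns the user's selections into an argument list and runs the script as a subprocess, just as a technical user would type it in a terminal. The sketch assumes a standard KALDI recipe directory in which `steps/decode.sh` is invoked as `steps/decode.sh [options] <graph-dir> <data-dir> <decode-dir>`, with the `--nj` option setting the number of parallel jobs; the helper names and example paths are hypothetical, and a real interface would expose further options.

```python
import shlex
import subprocess


def build_decode_command(graph_dir, data_dir, decode_dir, num_jobs=1):
    """Translate GUI selections into an argument list for a KALDI decoding script.

    Assumes steps/decode.sh takes [options] <graph-dir> <data-dir> <decode-dir>.
    """
    if num_jobs < 1:
        raise ValueError("The number of parallel jobs must be at least 1.")
    return ["steps/decode.sh", "--nj", str(num_jobs), graph_dir, data_dir, decode_dir]


def describe_command(command):
    """Shell-quoted form of the command, for display to technical users."""
    return shlex.join(command)


def run_decode(command, recipe_dir):
    """Run the command from the recipe directory, capturing output for the GUI to show."""
    return subprocess.run(command, cwd=recipe_dir, capture_output=True, text=True)
```

Passing an argument list rather than a single shell string avoids quoting problems when user-chosen paths contain spaces, and `describe_command` lets the interface display the equivalent command for users who want to reproduce a run by hand.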

Usability Tests

References

[1] Kaldi, "About the Kaldi project." [Online]. Available: http://kaldi-asr.org/doc/about.html [Accessed: 13 March 2017]

[2] L. R. Rabiner and B. H. Juang, "Speech recognition: Statistical methods," in K. Brown (Ed.), Encyclopedia of Language & Linguistics, 2nd ed. Elsevier, 2006, pp. 1-18.

[3] A. Holzinger, "Usability engineering methods for software developers," Communications of the ACM, vol. 48, no. 1, pp. 71-74, 2005.

[4] J. Nielsen, "The usability engineering life cycle," IEEE Computer, vol. 25, no. 3, pp. 12-22, 1992.

[5] J. Nielsen, "Iterative user-interface design," IEEE Computer, vol. 26, no. 11, pp. 32-41, 1993.

[6] J. Nielsen, "10 usability heuristics for user interface design," Nielsen Norman Group, 1995.